Bradley Qu

CS 194-26: Project 6-1

FEATURE MATCHING for AUTOSTITCHING

Introduction

This project stitches multiple consecutive images into a panorama with perspective projection, with some basic linear blending included. This time we use RANSAC to compute the homography from candidate matching points.

The Photos

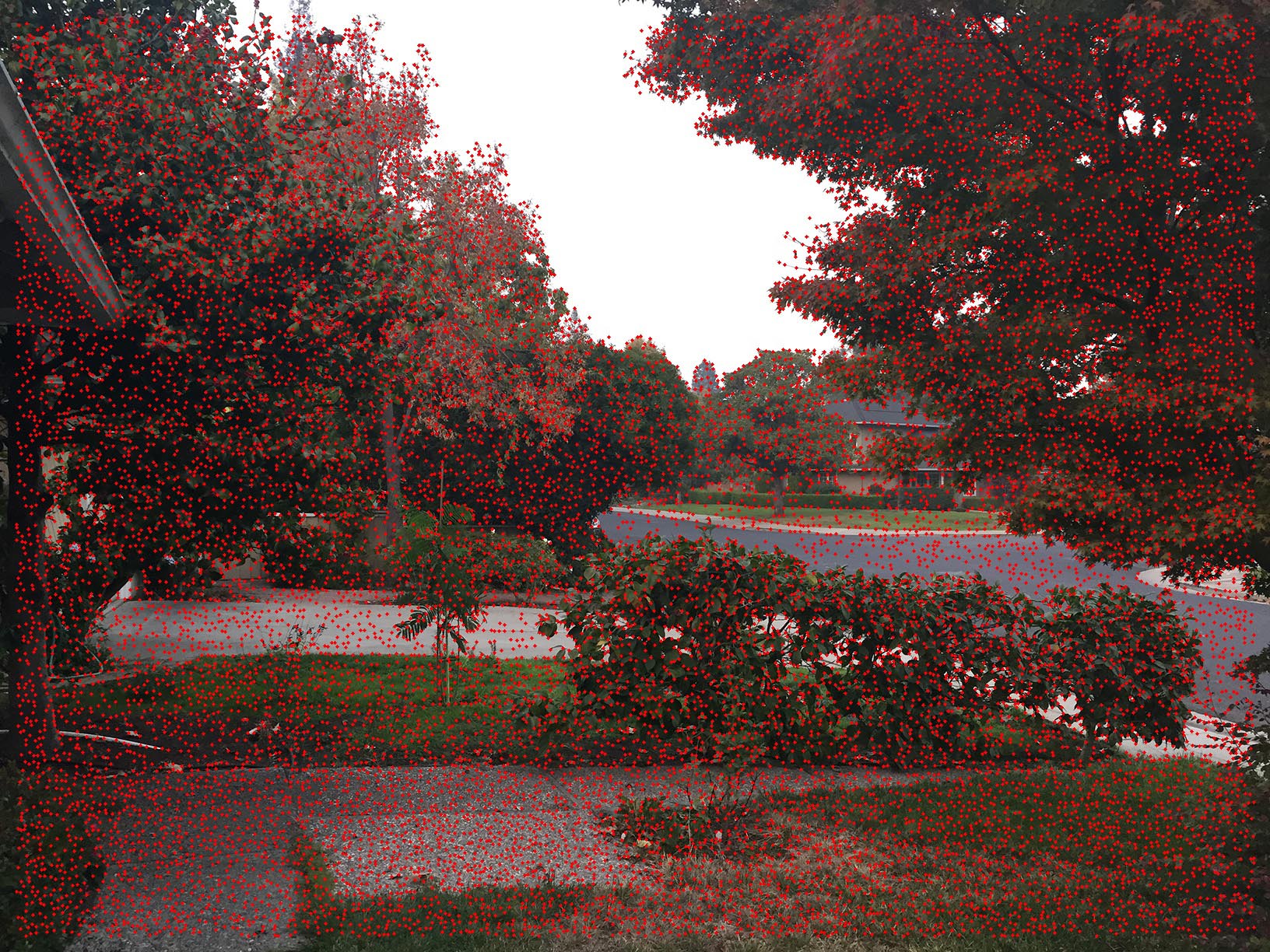

I took three sets of photos to attempt stitching. The first is a generic mid-to-long range scene of a Japanese maple on a lawn.

This set is a basic test of the stitching script. Highly intricate features allow easy feature selection. Long-distance subjects also mean less error from camera motion.

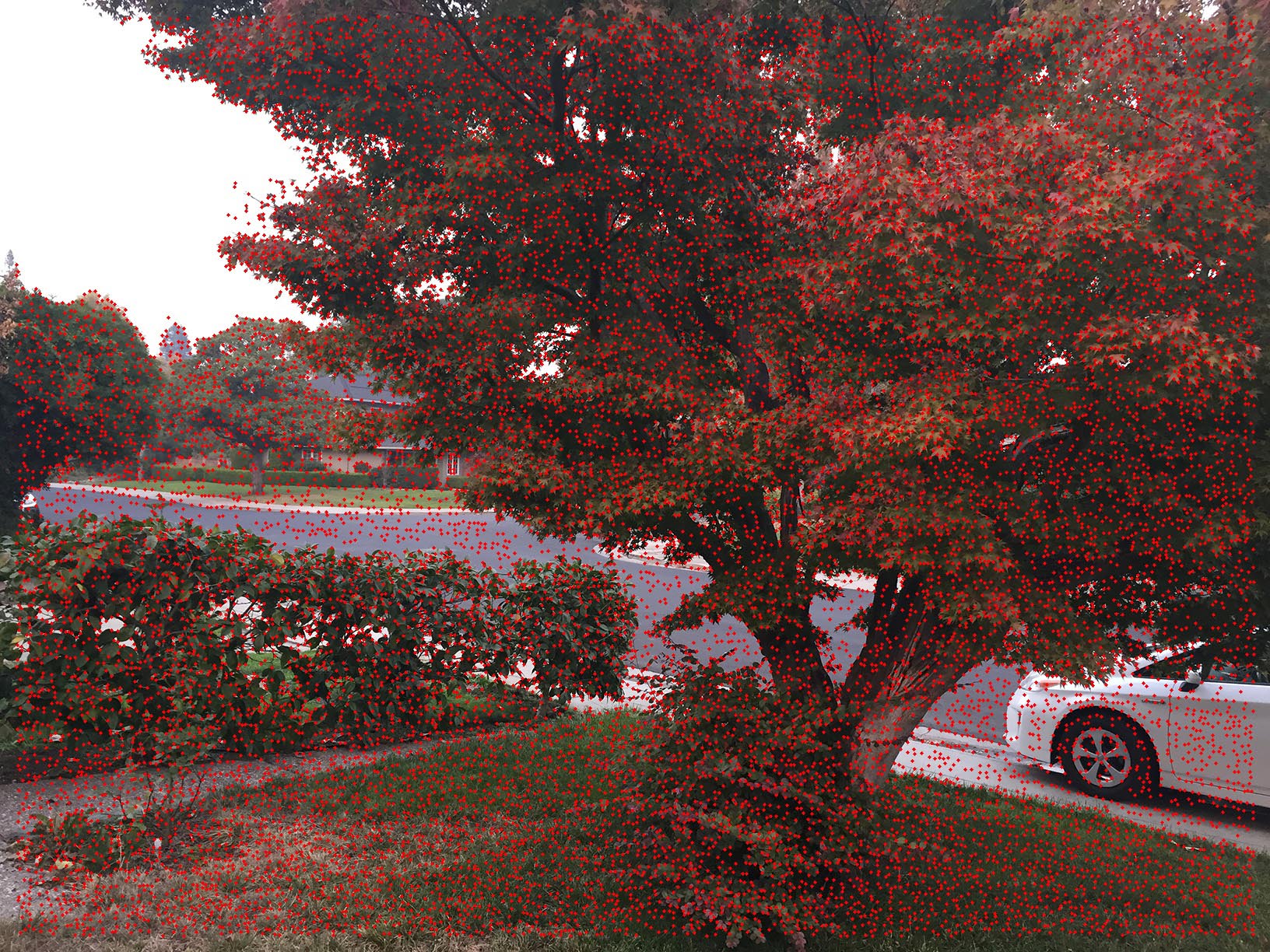

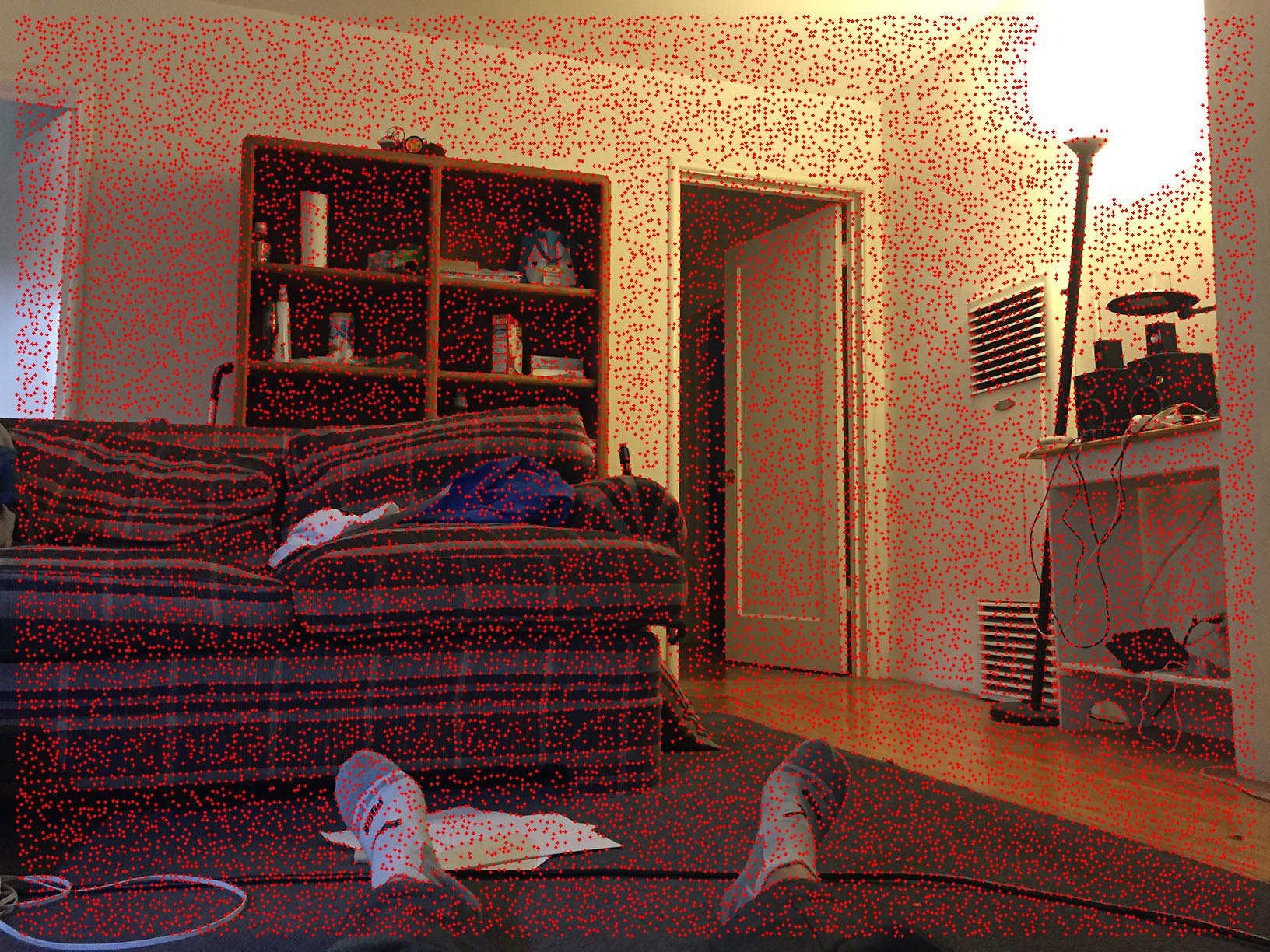

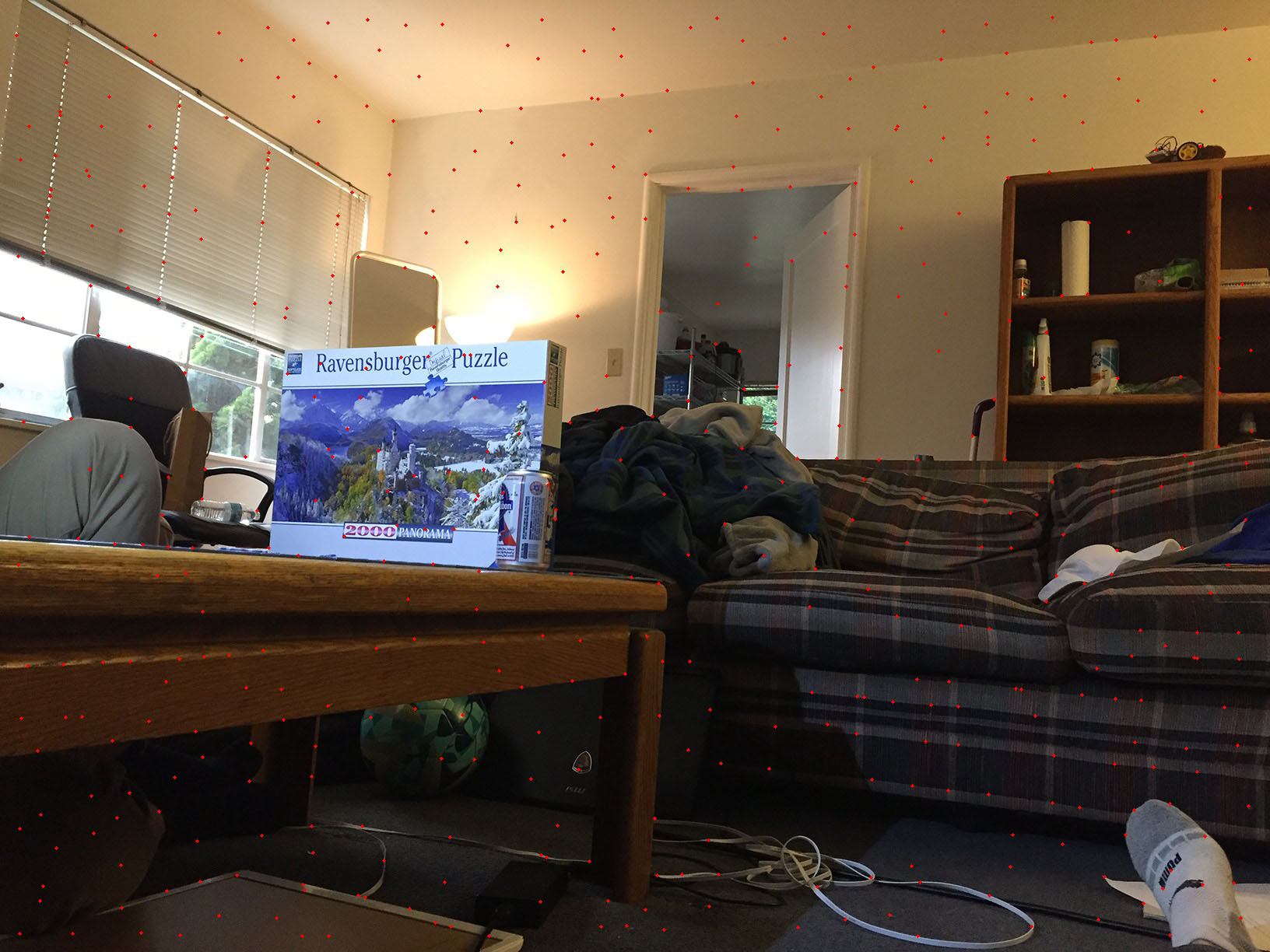

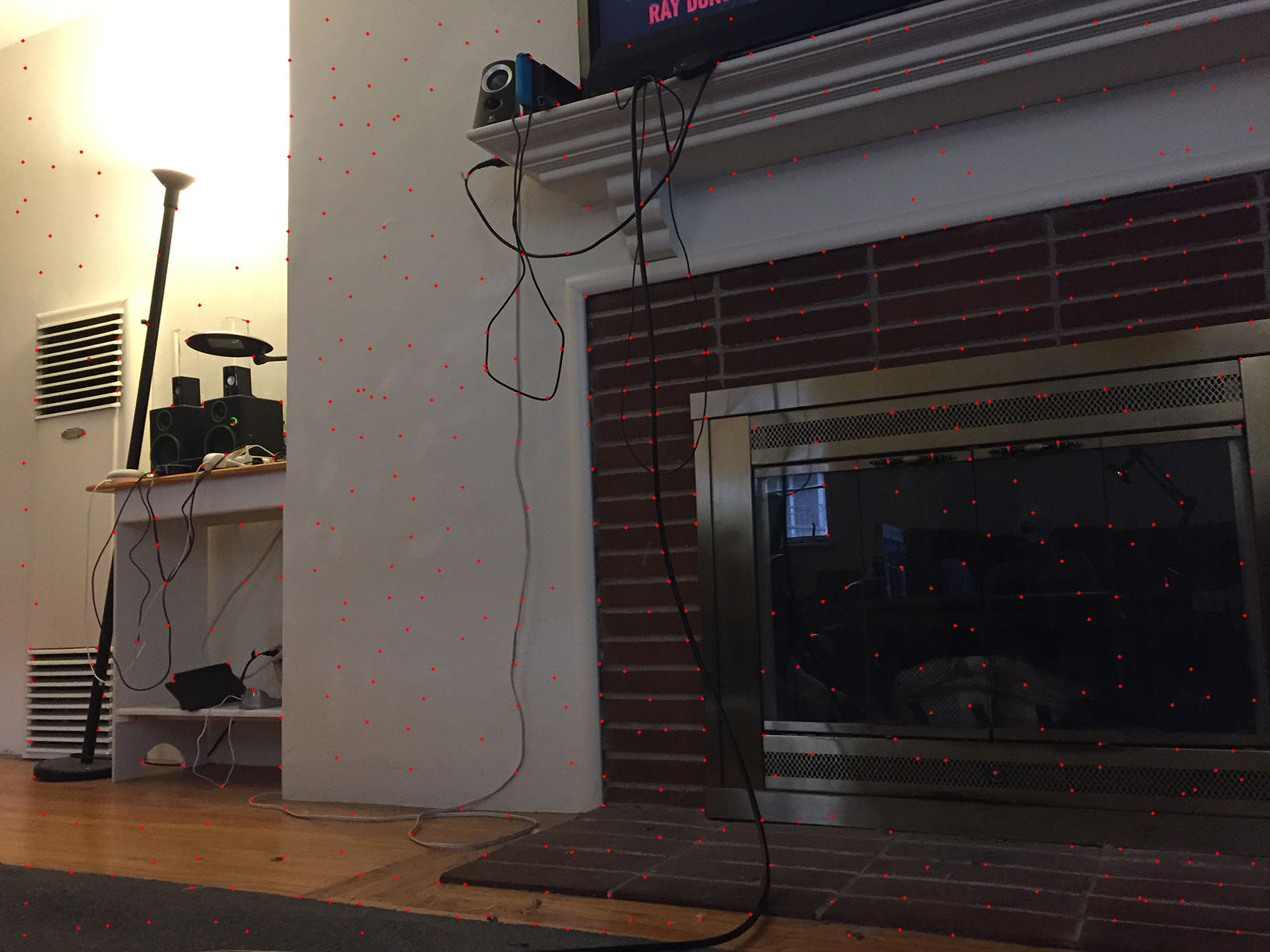

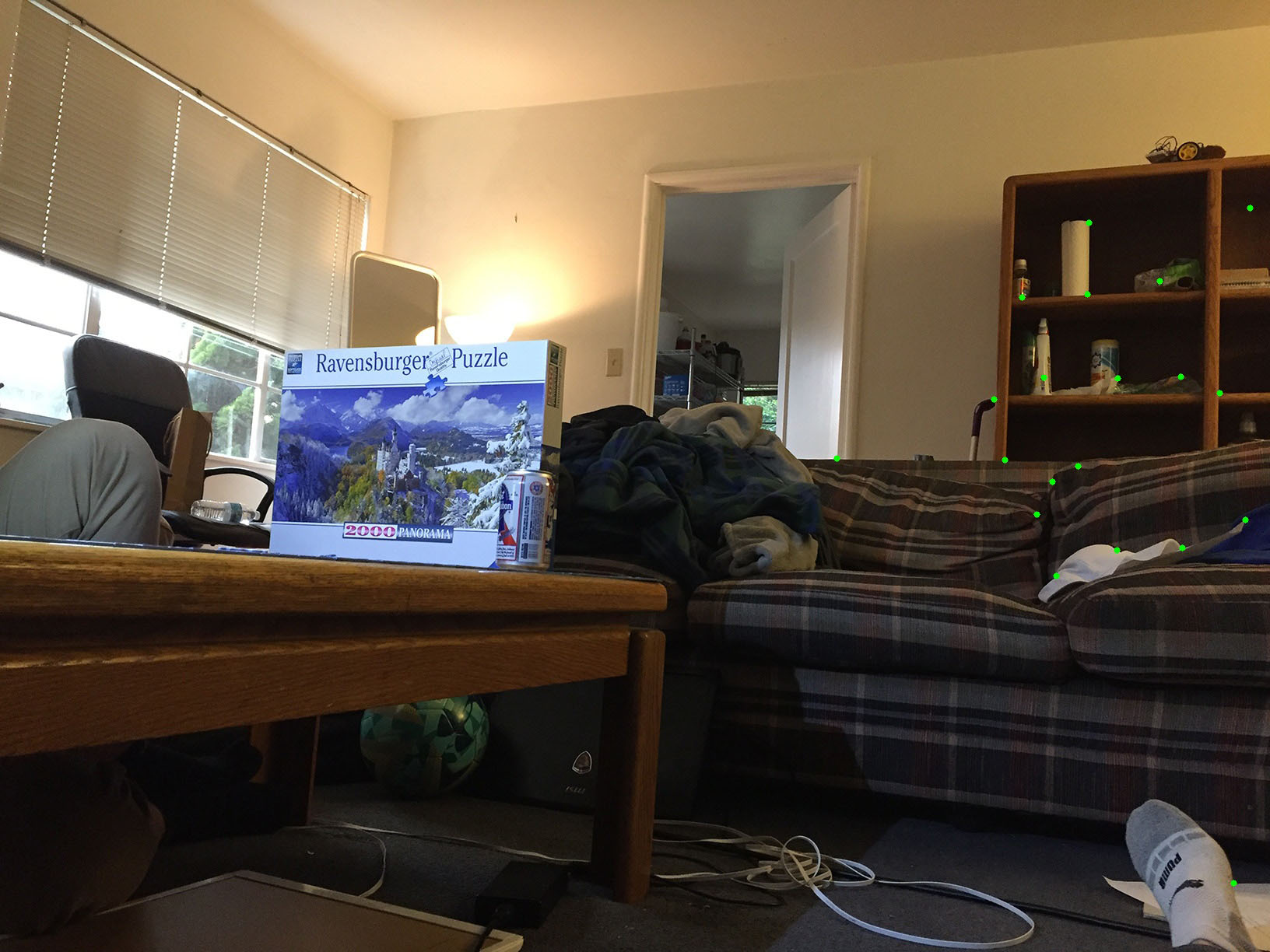

The next set is a short range set from inside my apartment.

The short range means that camera and control point error will play a larger role. This will test the ability of the feature matching to select corresponding points in a space with higher error. It will also test the ability of the feature matching to be saturation and brightness independent, as each image has a different exposure.

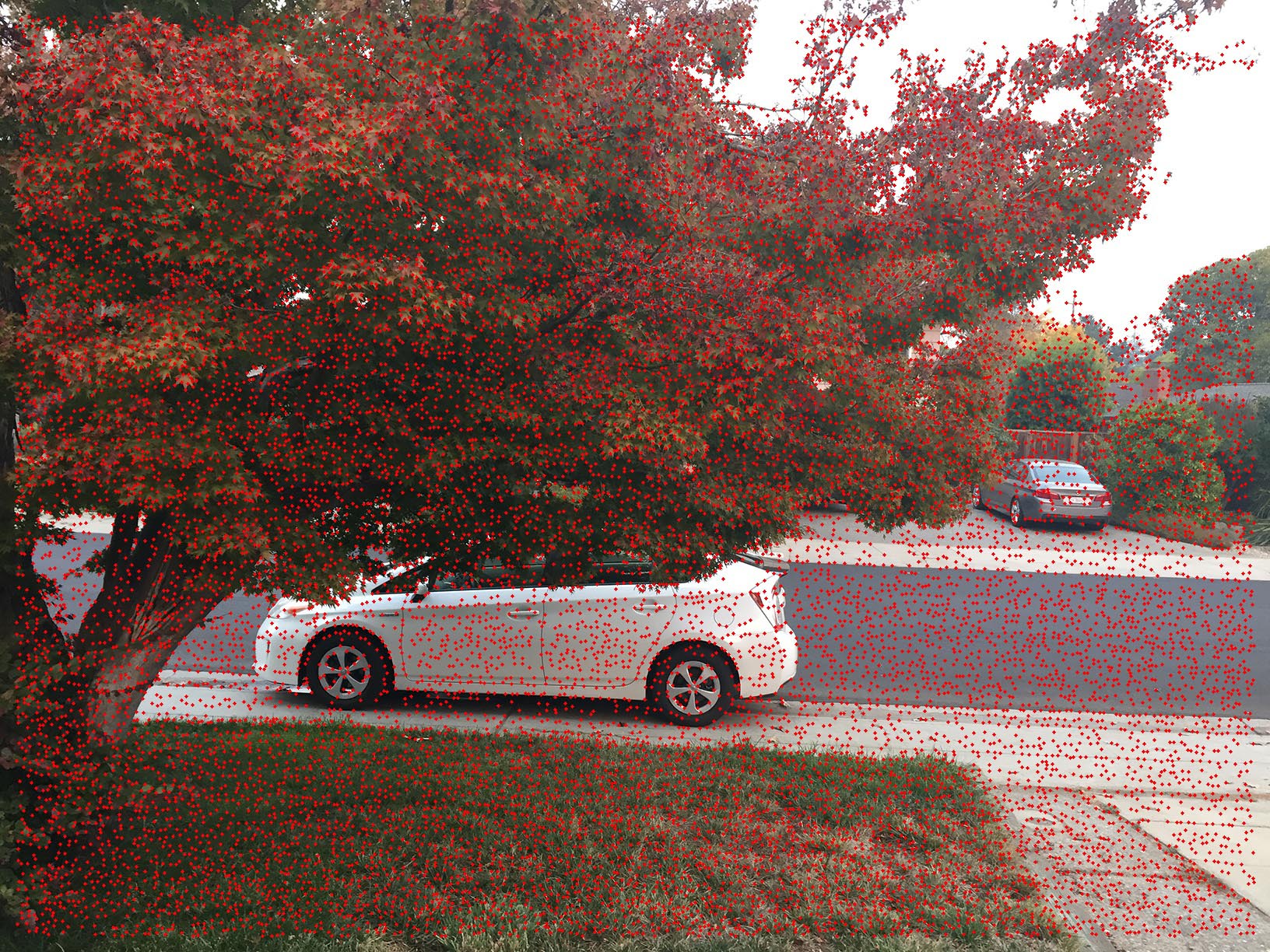

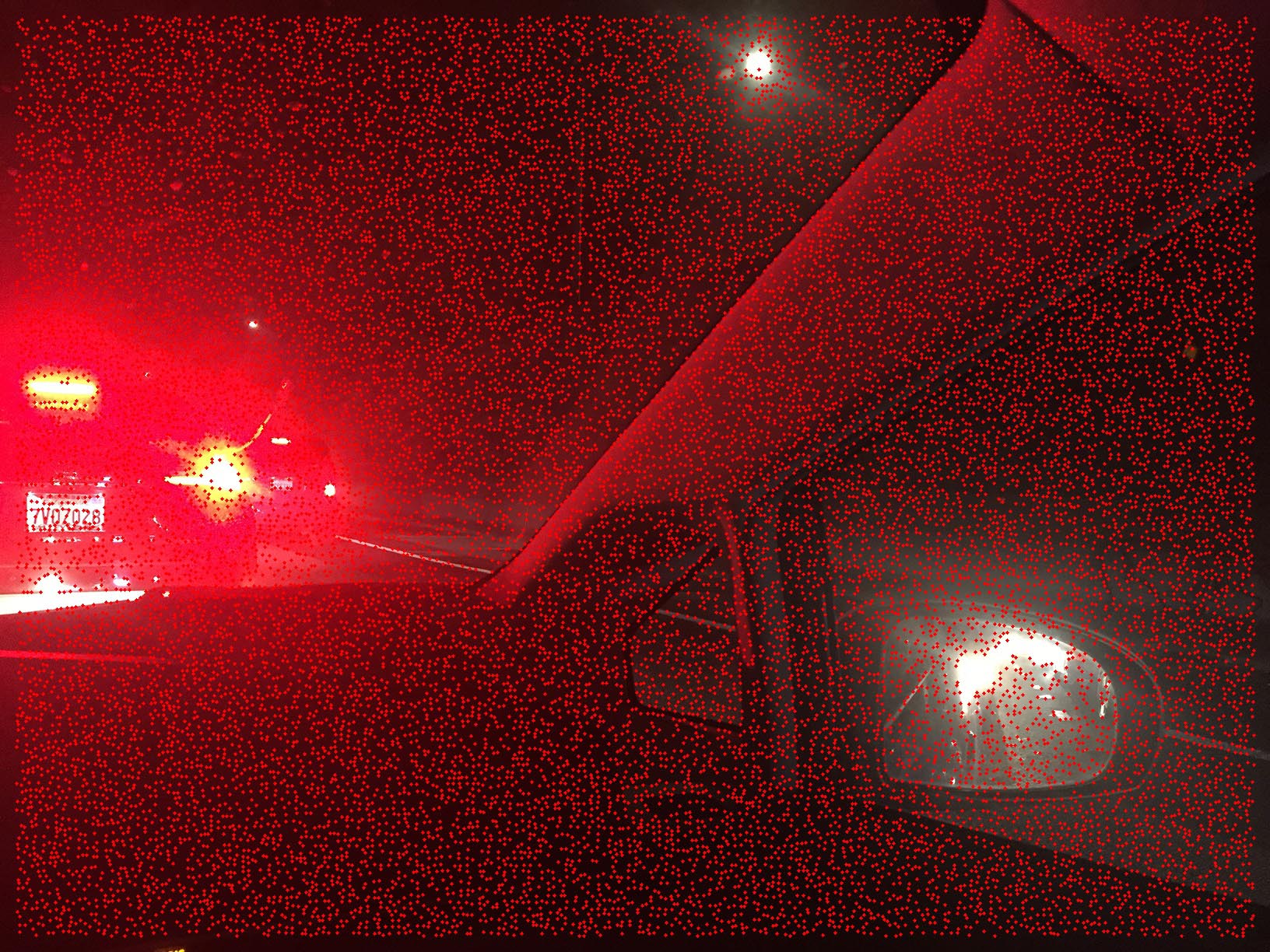

Night-time traffic is the worst traffic. This set is a mix of very short range and mid range.

The camera error is big in this one. Let's see what the results are like when the camera is noticeably not in the same place for each image. It is also a difficult test set for automatic feature matching, as most of the features available are lens flares that look similar to each other.

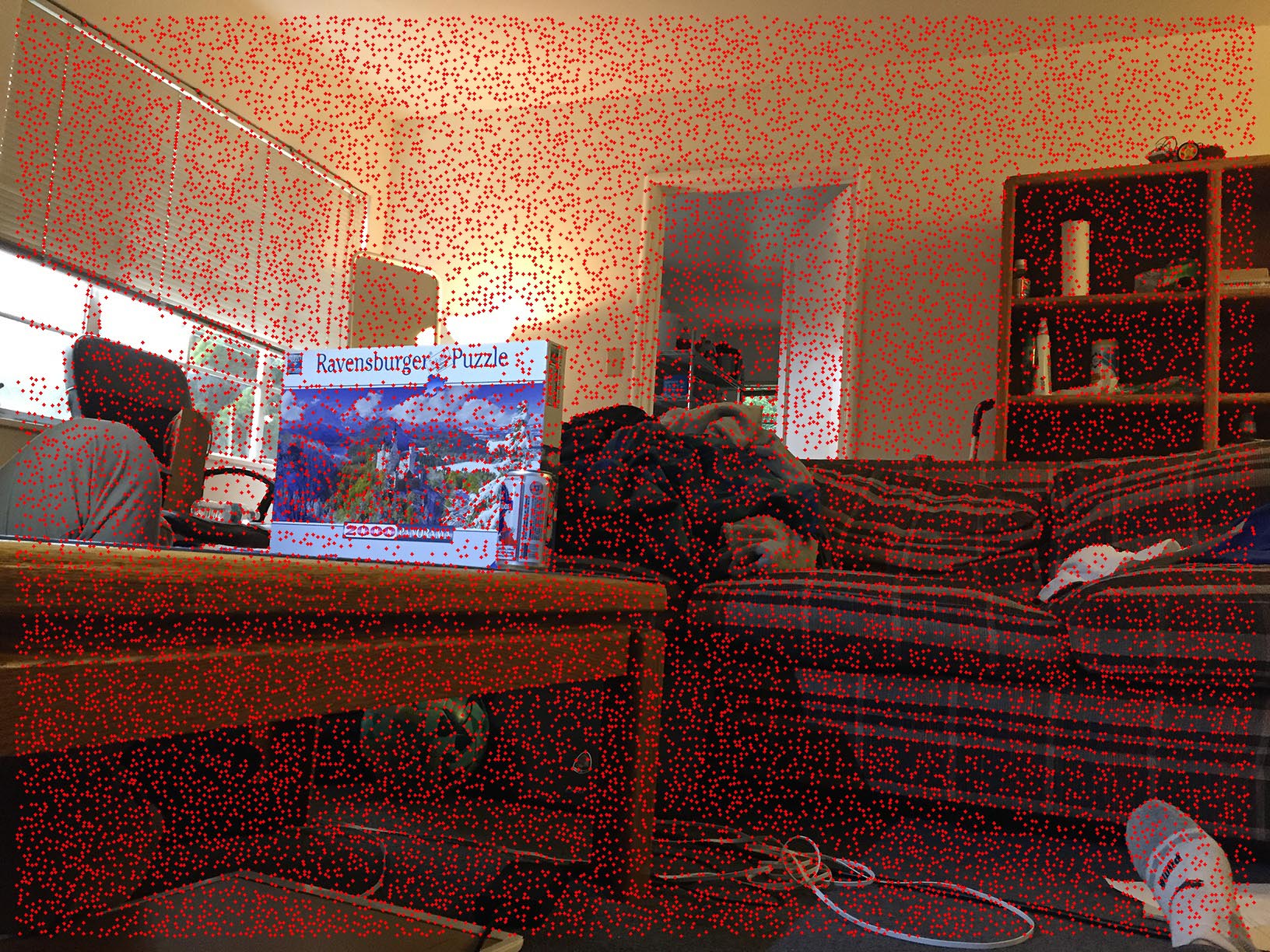

Detecting Corner Features

First I must find all corner features. This can be done through the supplied library. The results of just applying the function provided are shown below.

Next, we need to conduct Adaptive Non-Maximal Suppression (ANMS). At first this seems complex, but the process is relatively simple. First, I modified harris.py to sort the returned Harris points by magnitude (of best fit). Using this list, I can iterate in decreasing order, computing the maximum radius of each point (its distance to the nearest stronger point) by checking only points of higher magnitude. Once the max radius is determined for every point, re-sort the list by radius in decreasing order. Take the first n elements as your ANMS points.
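
The ANMS steps described above can be sketched roughly as follows. This is a minimal illustration, not the actual modified harris.py: the function name `anms` and its arguments are hypothetical, it assumes NumPy, and it treats points with equal strength as "stronger" if they come earlier in the sorted list.

```python
import numpy as np

def anms(coords, strengths, n_points):
    """Adaptive non-maximal suppression (illustrative sketch).

    coords: (N, 2) array of corner positions.
    strengths: (N,) array of corner strengths (e.g. Harris response).
    Returns the indices of the n_points corners with the largest radius.
    """
    coords = np.asarray(coords, dtype=float)
    strengths = np.asarray(strengths, dtype=float)
    order = np.argsort(-strengths, kind="stable")  # strongest first
    # The strongest point has no stronger neighbour, so its radius stays infinite.
    radii = np.full(len(order), np.inf)
    for rank in range(1, len(order)):
        i = order[rank]
        stronger = coords[order[:rank]]  # only points earlier in the sorted list
        radii[i] = np.min(np.linalg.norm(stronger - coords[i], axis=1))
    # Re-sort by radius, largest first, and keep the first n_points.
    return np.argsort(-radii, kind="stable")[:n_points]
```

The loop is quadratic in the number of corners, which is usually acceptable for a few thousand Harris points. The effect is that the selected points are spread across the image instead of clustering around the strongest corners.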

Feature Matching

Now we match features. This is done by calculating the feature vector of each point. My implementation uses 8x8 features downsampled from 32x32 windows, with 3 color channels, for a total feature vector length of 192 (8 × 8 × 3). Matches are then filtered with Lowe's ratio threshold. Here are the results.
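
As a rough sketch of what this step can look like, the code below builds a 192-element descriptor and applies a Lowe-style ratio test. It is an illustration under assumptions, not the code used for these results: the names `describe` and `match_features` are hypothetical, the 32x32 window is assumed to be averaged in 4x4 blocks down to 8x8, the bias/gain normalization and the 0.6 ratio are assumed values, and every point is assumed to lie at least 16 pixels from the image border.

```python
import numpy as np

def describe(image, row, col, window=32, size=8):
    """Return a normalized size*size*channels descriptor around (row, col)."""
    half = window // 2
    patch = image[row - half:row + half, col - half:col + half].astype(float)
    step = window // size
    # Average each step x step block: (32, 32, C) -> (8, 8, C).
    small = patch.reshape(size, step, size, step, -1).mean(axis=(1, 3))
    vec = small.ravel()
    # Bias/gain normalization; the small constant only avoids division by zero.
    return (vec - vec.mean()) / (vec.std() + 1e-8)

def match_features(desc_a, desc_b, ratio=0.6):
    """Keep (i, j) when the best SSD is below ratio times the second-best SSD."""
    desc_a = np.asarray(desc_a, dtype=float)
    desc_b = np.asarray(desc_b, dtype=float)
    # Pairwise squared distances, shape (len(desc_a), len(desc_b)).
    ssd = ((desc_a[:, None, :] - desc_b[None, :, :]) ** 2).sum(axis=2)
    matches = []
    for i, row in enumerate(ssd):
        best, second = np.argsort(row)[:2]  # needs at least two candidates
        if row[best] < ratio * row[second]:
            matches.append((i, int(best)))
    return matches
```

The ratio test rejects a feature whose best and second-best candidates are almost equally good, which is the situation with repeated structures such as similar-looking lens flares.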

RANSAC Final Results
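
The RANSAC step itself is not spelled out in this writeup, so here is a minimal sketch of one common way to do it, assuming NumPy and (N, 2) arrays of matched points. The names `fit_homography`, `project`, and `ransac_homography`, along with the iteration count and the 2-pixel tolerance, are illustrative assumptions rather than the settings used for these results.

```python
import numpy as np

def fit_homography(src, dst):
    """Least-squares homography (DLT) mapping src -> dst, both (N, 2), N >= 4."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    return vt[-1].reshape(3, 3)  # smallest singular vector; scale is arbitrary

def project(h, pts):
    """Apply homography h to (N, 2) points."""
    pts_h = np.hstack([pts, np.ones((len(pts), 1))]) @ h.T
    return pts_h[:, :2] / pts_h[:, 2:3]

def ransac_homography(src, dst, n_iters=1000, tol=2.0, seed=0):
    """Return (homography, inlier mask) from noisy candidate matches."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(n_iters):
        # Fit an exact homography to four random matches.
        sample = rng.choice(len(src), size=4, replace=False)
        h = fit_homography(src[sample], dst[sample])
        with np.errstate(divide="ignore", invalid="ignore"):
            err = np.linalg.norm(project(h, src) - dst, axis=1)
        inliers = err < tol  # NaN errors compare as False
        if inliers.sum() > best.sum():
            best = inliers
    # Refit on the largest consensus set; only meaningful with >= 4 inliers.
    return fit_homography(src[best], dst[best]), best
```

Because each trial needs four matches, an image pair with fewer than four good correspondences cannot produce a usable homography no matter how many iterations are run.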

The traffic set is understandably difficult for automatic feature detection. There are many overexposed areas and much of the image is blurry. Consequently, it was hard for the feature detection algorithm to find enough corresponding points to stitch the images. Images 1 and 2 actually had quite a few correspondences (look hard at the cars), but images 2 and 3 had only one, far short of the four a homography needs. I tried tuning the parameters to get more, but it just wouldn't work without introducing significant noise into the matches. This set also brought out the problem with normalizing features: amplification of noise. I ended up fixing it with a ridge parameter in the denominator of the error ratios. This set really brings to light the shortcomings of small feature vectors and the robustness of human perception.

Extras!

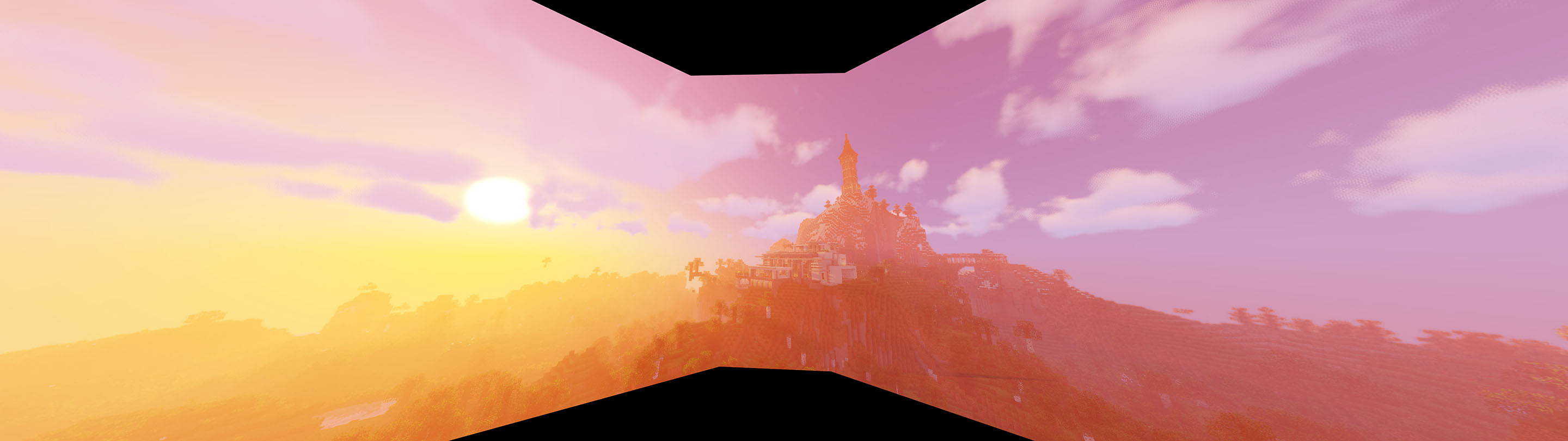

To make up for the missing panorama for "Traffic," I tried making some panoramas in games I play. Here are the results.
