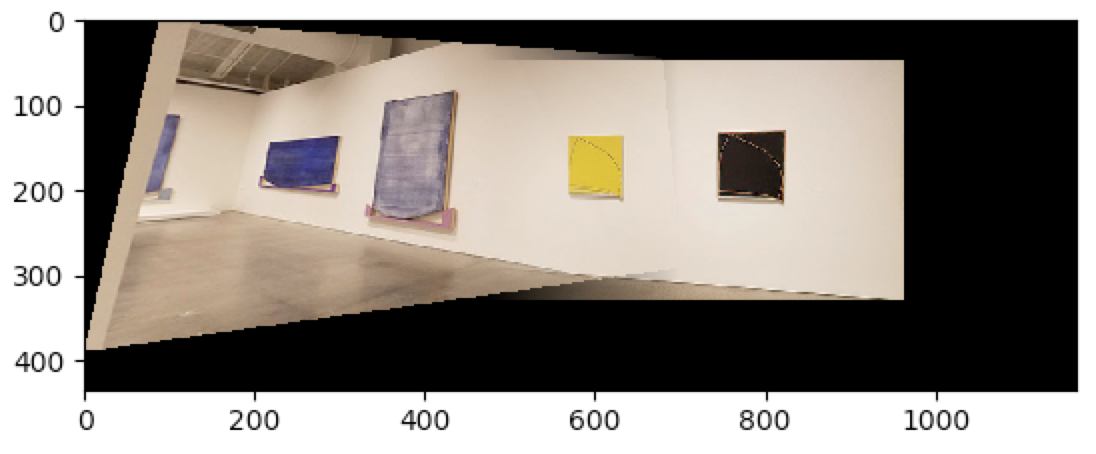

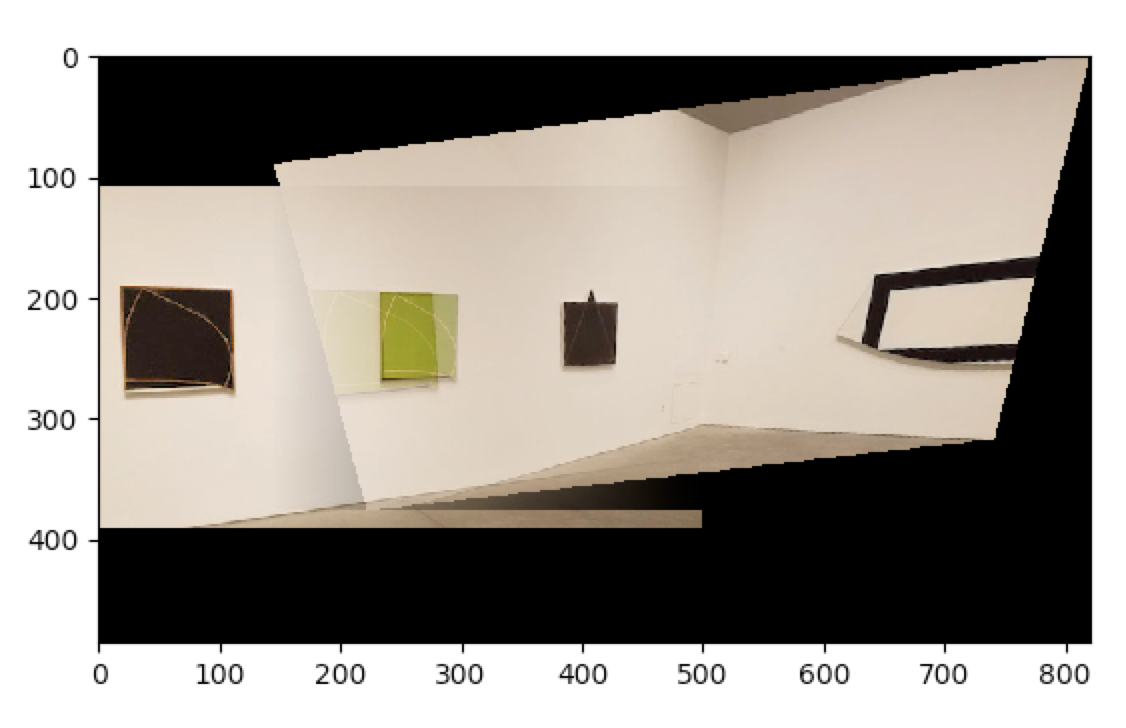

CS194-26-agg

This project was a general level-up from all the projects we've done so far. We're warping images and stitching them together to construct a panorama!

This time, we're using a corner detection algorithm to automate the point selection and the warping process. We first used Harris detection to identify corners, pared our points down further with ANMS, extracted feature descriptors around the remaining points following MOPS, matched those features between images, and finally used RANSAC to keep only the consistent correspondences. There were some issues along the way with the quality of my photos, which I would want to look into further if I didn't have a deadline to follow.

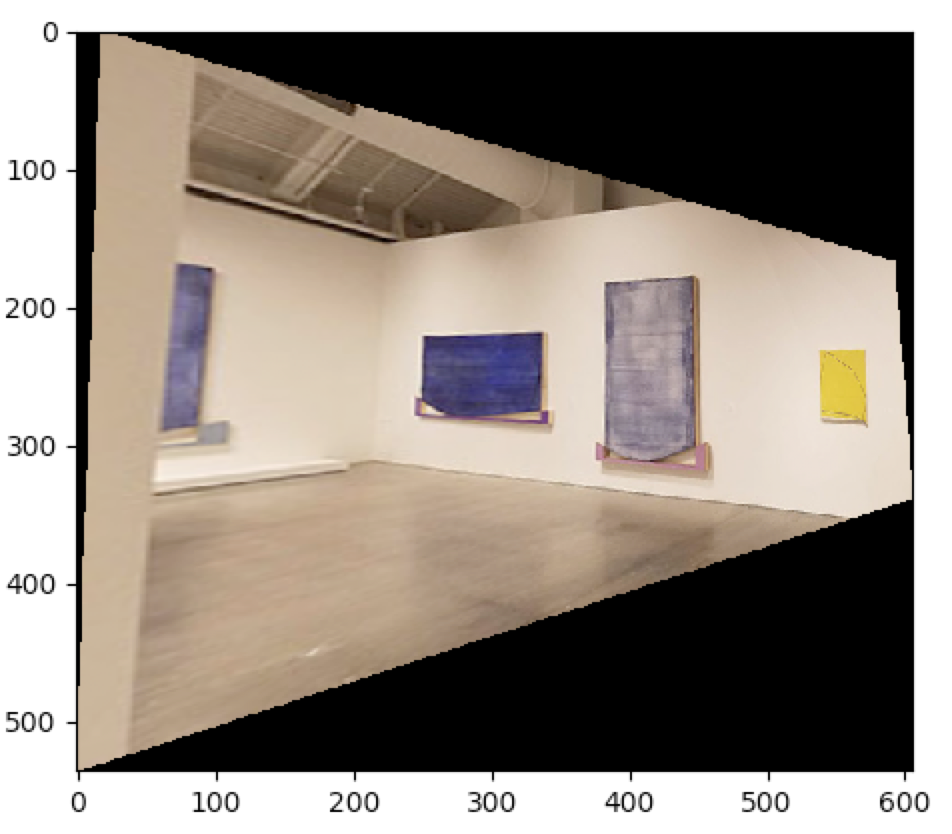

We need to pick good photos for our corner detection to work right. To achieve this, I went to BAMPFA downtown and the ASUC to take pictures. There were plenty of squares in that area, which made good references for rectifying images.

A homography matrix is a 3 by 3 matrix that describes a projective transformation between two views of a plane. With this, we are able to reshape and warp images with 8 degrees of freedom. However, we need a minimum of 4 corresponding points per pair of images, since each correspondence gives us two equations.

To compute this, we build a large matrix with n rows and 9 columns, n being twice the total number of corresponding points, since each correspondence contributes two rows. We then append a row of 8 zeros followed by a single 1. We solve for the 9 homography values so that multiplying them by this matrix gives a vector of n zeros followed by a single 1. The appended row ensures that the ninth value of the homography will always be 1, which rules out the trivial solution where every value is zero.
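The setup above can be sketched in NumPy as follows. This is a minimal sketch rather than my exact code: the function name `compute_homography`, the `(x, y)` point order, and the use of `np.linalg.lstsq` to solve the system are assumptions made for illustration.

```python
import numpy as np

def compute_homography(src, dst):
    """Estimate a 3x3 H with dst ~ H @ src from n >= 4 point pairs given as (x, y)."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence contributes two rows of the system.
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y, -u])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y, -v])
    # Extra row of 8 zeros and a single 1: pins the ninth entry to 1.
    rows.append([0, 0, 0, 0, 0, 0, 0, 0, 1])
    A = np.array(rows)
    b = np.zeros(len(rows))
    b[-1] = 1.0  # right-hand side: all zeros except the final 1
    # Least squares (an assumption here) handles more than 4 correspondences.
    h, *_ = np.linalg.lstsq(A, b, rcond=None)
    return h.reshape(3, 3)
```

With exactly 4 correspondences the system has 9 equations and 9 unknowns, so the solution is exact. With more points, least squares spreads the error across all of them and the ninth entry is only approximately 1.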

With this, we can warp our images to rectify them. I used the exit sign and a photo from BAMPFA, and straightened each one into a square. This was done by using the homography to compute the coordinates of our new image, and then, for every point in the new image, interpolating its value from the corresponding coordinates in our older image.
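Here is a rough sketch of that inverse warp, assuming a homography `H` that maps source `(x, y)` coordinates to output coordinates. The name `warp_image` is hypothetical, and nearest-neighbor sampling stands in for the interpolation step to keep the example short.

```python
import numpy as np

def warp_image(img, H):
    """Inverse-warp img by H; returns the warped image and its (x, y) offset."""
    h, w = img.shape[:2]
    # Forward-map the four corners to find the bounding box of the output.
    corners = np.array([[0, 0, 1], [w - 1, 0, 1],
                        [w - 1, h - 1, 1], [0, h - 1, 1]], dtype=float).T
    mapped = H @ corners
    mapped = mapped[:2] / mapped[2]
    x_min, y_min = np.floor(mapped.min(axis=1)).astype(int)
    x_max, y_max = np.ceil(mapped.max(axis=1)).astype(int)
    # Every pixel of the output, as homogeneous (x, y, 1) columns.
    xs, ys = np.meshgrid(np.arange(x_min, x_max + 1), np.arange(y_min, y_max + 1))
    out_pts = np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)])
    # Map each output pixel back into the source image.
    src = np.linalg.inv(H) @ out_pts
    sx = np.rint(src[0] / src[2]).astype(int)  # nearest neighbor (assumption)
    sy = np.rint(src[1] / src[2]).astype(int)
    valid = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    out = np.zeros(xs.shape + img.shape[2:], dtype=img.dtype)
    flat = out.reshape((-1,) + img.shape[2:])  # view into out
    flat[valid] = img[sy[valid], sx[valid]]
    return out, (int(x_min), int(y_min))
```

Looping over output pixels and pulling values from the source avoids the holes you would get from pushing source pixels forward. The returned offset records where the warped image sits in the output coordinate frame, which is needed later when placing images in a panorama.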

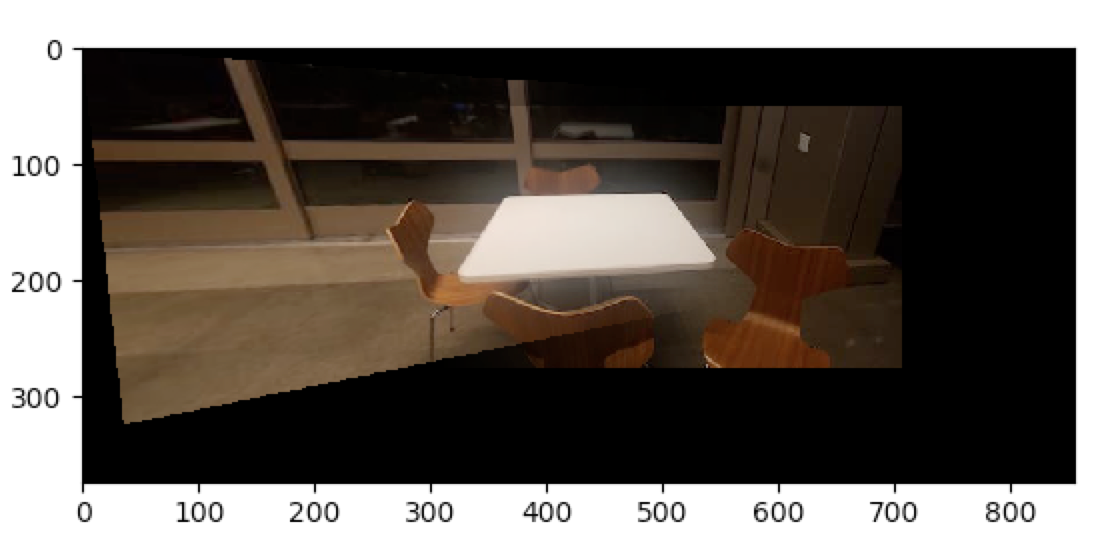

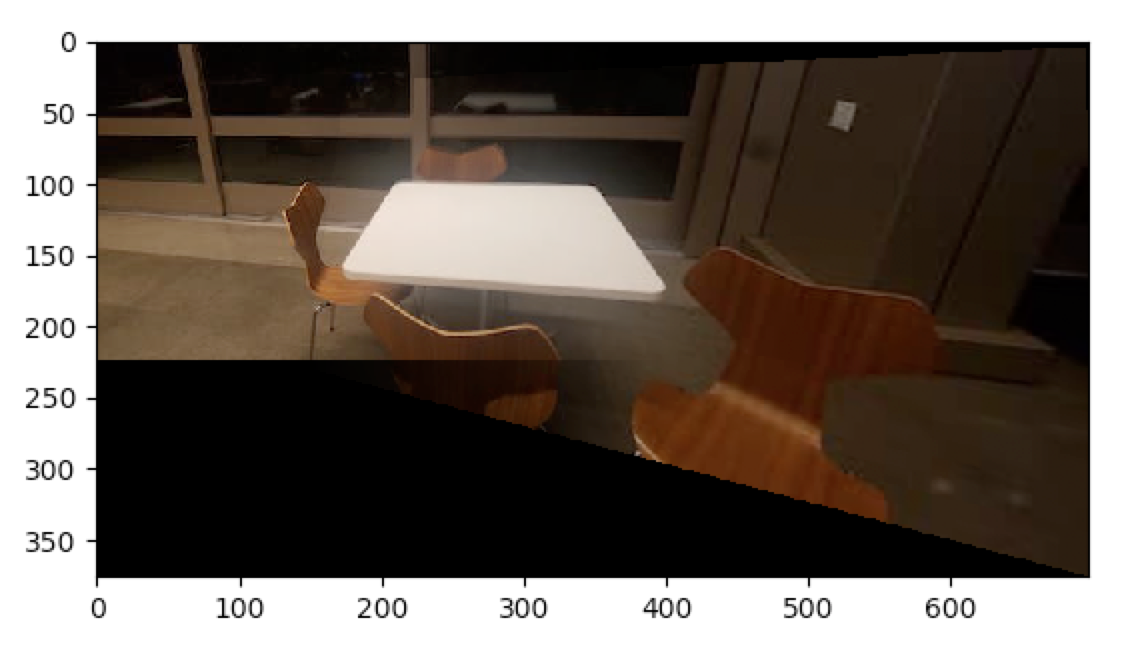

This is where I started running into problems. First off, I started my blending thinking that I was always going to blend from left to right. After all, my image warps took in a left value and a right value. For panoramas of two images, the difference was hardly apparent. But throw another image in, and lo and behold, I was truly done for. The images would go from bad to worse, and I'd end up with a trapezoidal image rather than the aesthetic insta images I was going for.

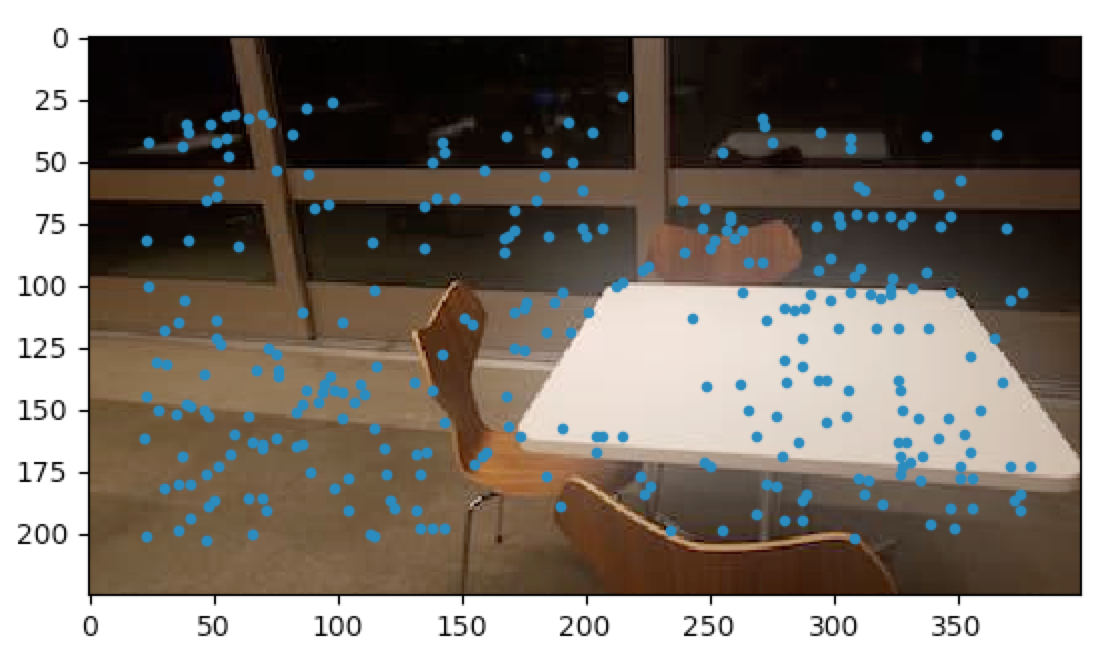

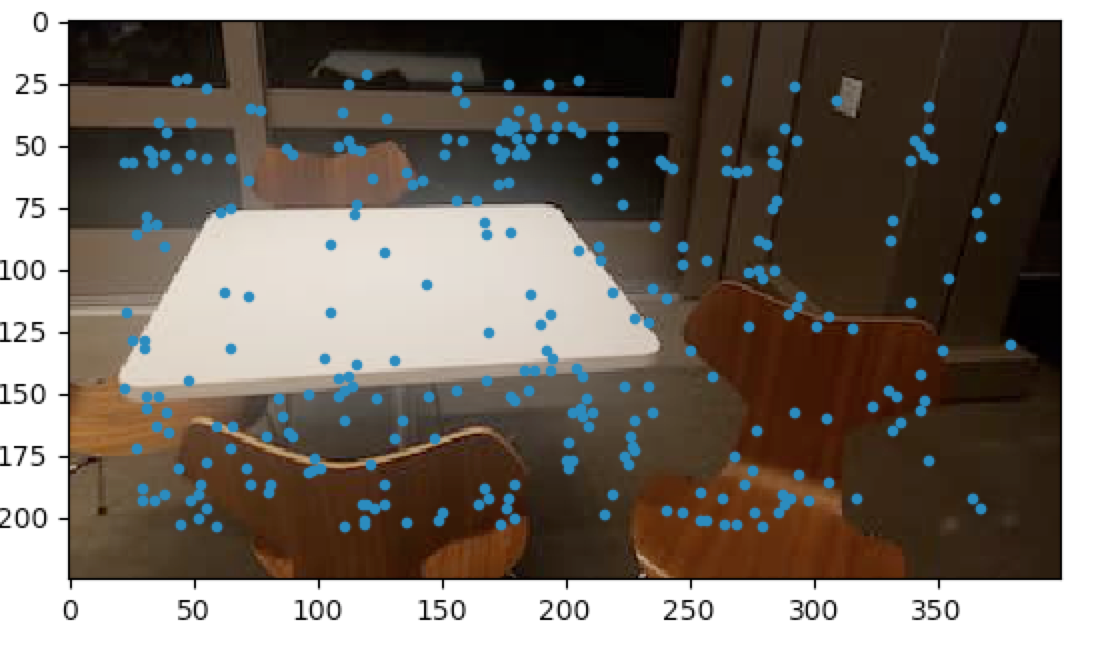

For the sake of runtime, I kept my images to no more than 500 pixels in any direction. This caused some issues that I'll get further into, but for now, I needed to detect points.

In this part, we were graciously provided with starter code that allowed us to pick corners with Harris Interest Point Detection. For this part, I detected over 1000 points for each image.

We then had to pare the number of points down in such a way that we kept local maxima spread across the image. This way, we wouldn't end up with a cluster of strong points in one region when we sort our corners. This algorithm (ANMS) involves comparing every corner with every other corner and recording, for each one, the minimum distance to a corner that is sufficiently stronger. We sort the points by that distance, largest first, and return however many points we need in our result. Below is an example of this.
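A compact sketch of that suppression step, assuming corner coordinates and their Harris strengths are already available as arrays. The function name `anms`, the `n_keep` default, and the robustness factor `c_robust = 0.9` are assumptions for illustration, not values taken from my code.

```python
import numpy as np

def anms(coords, strengths, n_keep=500, c_robust=0.9):
    """Return indices of the n_keep corners with the largest suppression radius."""
    coords = np.asarray(coords, dtype=float)
    strengths = np.asarray(strengths, dtype=float)
    # Squared distance between every pair of corners.
    diff = coords[:, None, :] - coords[None, :, :]
    dist2 = (diff ** 2).sum(axis=2)
    # stronger[i, j] is True when corner j is sufficiently stronger than corner i.
    stronger = strengths[:, None] < c_robust * strengths[None, :]
    # Ignore corners that are not sufficiently stronger.
    dist2 = np.where(stronger, dist2, np.inf)
    # Suppression radius: distance to the nearest sufficiently stronger corner.
    radii = dist2.min(axis=1)
    # Largest radius first; the strongest corner has an infinite radius.
    order = np.argsort(-radii)
    return order[:n_keep]
```

A weak corner far away from everything else survives, while a fairly strong corner sitting right next to a stronger one gets dropped. That is what spreads the points out.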

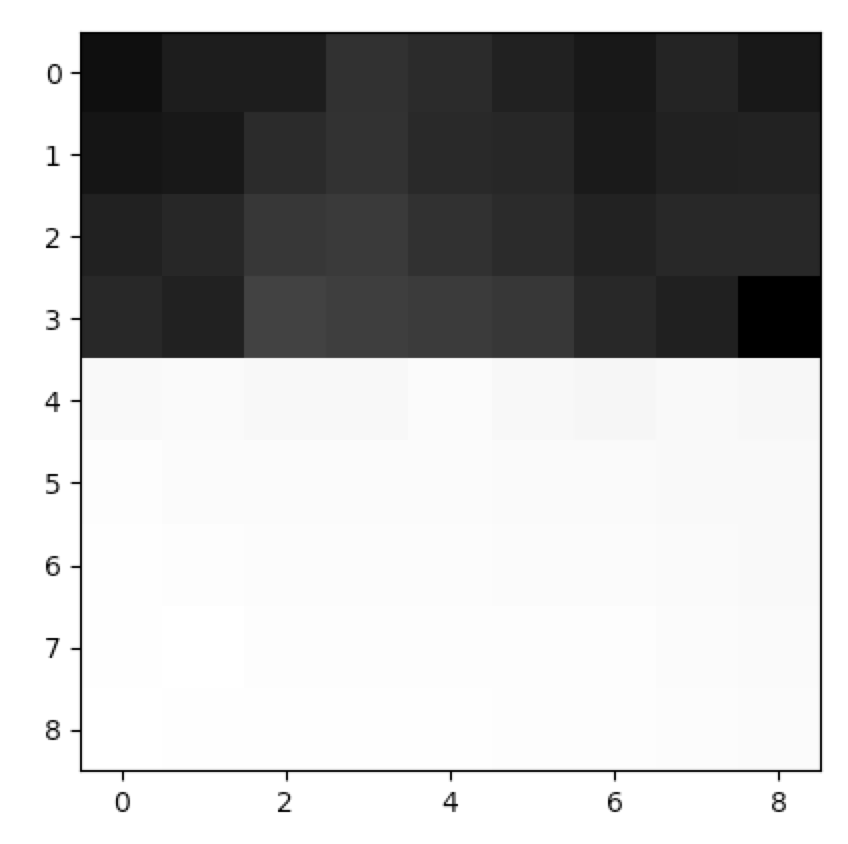

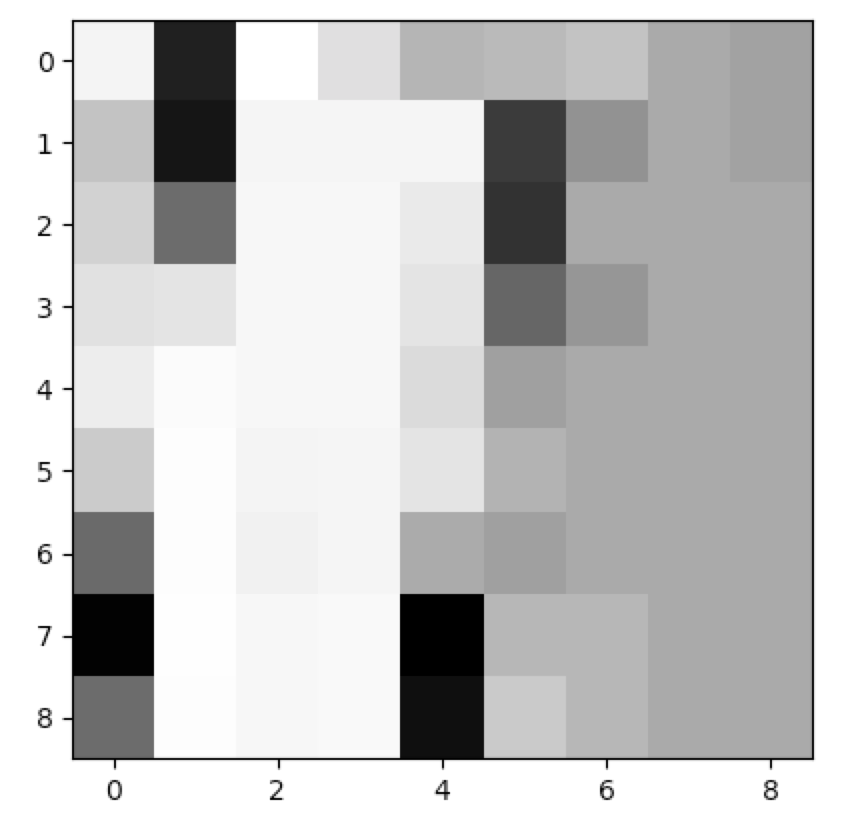

In this part, we had to extract a feature from each of the ANMS-selected corners. For each of our corners, we extracted a 41 by 41 patch of the surrounding image. Then, for robustness, I resized each patch to be 8 by 8, and returned a list of such features. Below are two features that I extracted, before and after I resized them.
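The patch extraction could look roughly like this. It is a sketch under several assumptions: the image is grayscale, corners are `(row, col)` integer pairs, corners too close to the border are skipped, and the resize is approximated by dropping the last row and column of the patch and averaging 5 by 5 blocks, which is not necessarily the resize I used. The name `extract_features` is hypothetical.

```python
import numpy as np

def extract_features(gray, corners, patch=41, out=8):
    """Return flattened out-by-out descriptors and the indices of the corners kept."""
    half = patch // 2            # 20 pixels on each side of the corner
    block = (patch - 1) // out   # 5x5 blocks over the central 40x40 area
    feats, kept = [], []
    for i, (r, c) in enumerate(corners):
        # Skip corners whose patch would fall outside the image.
        if (r - half < 0 or c - half < 0 or
                r + half >= gray.shape[0] or c + half >= gray.shape[1]):
            continue
        window = gray[r - half:r + half + 1, c - half:c + half + 1]
        # Stand-in for the resize: trim to 40x40, then average each 5x5 block.
        window = window[:out * block, :out * block]
        small = window.reshape(out, block, out, block).mean(axis=(1, 3))
        feats.append(small.ravel())
        kept.append(i)
    return np.array(feats), kept
```

Averaging over blocks acts as a blur before downsampling, so each of the 64 values summarizes a small neighborhood instead of a single pixel.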

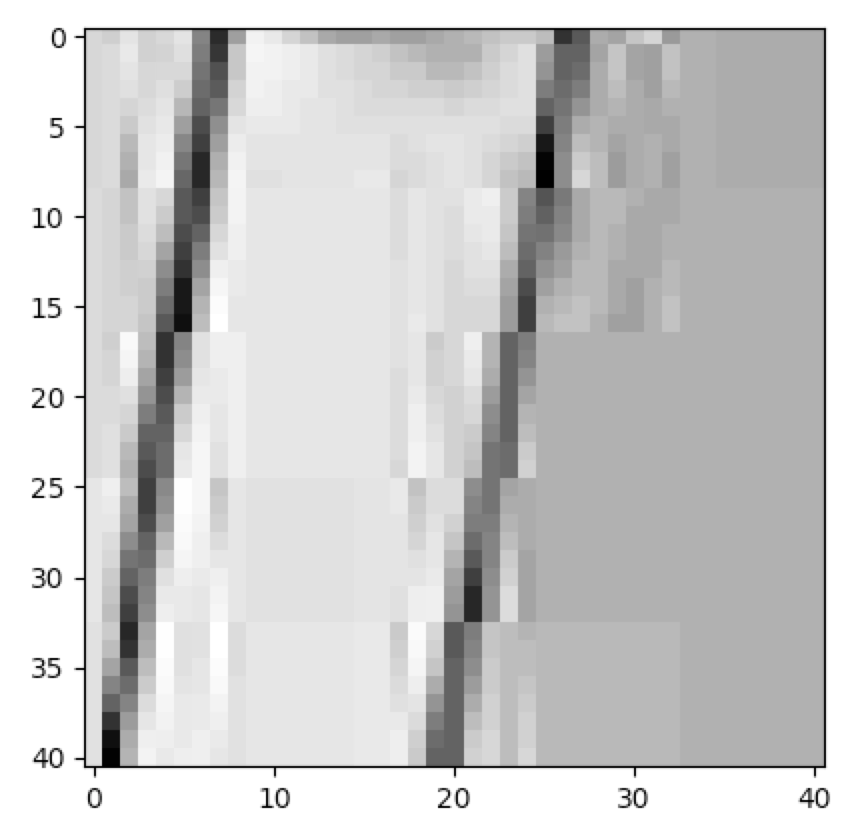

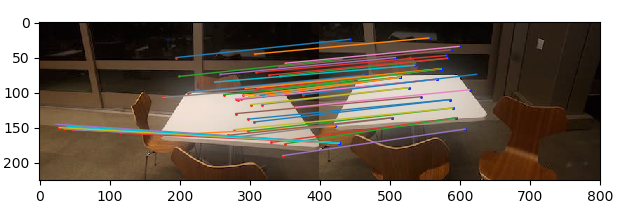

Here we match the features by comparing each feature's nearest neighbor against its second nearest neighbor. It was here that I realized that my image resolution was too low for my feature size to be this big. This meant that I had a lot of features bleeding into one another, which resulted in a lot of points being matched to one another.
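One common way to do this kind of matching is a ratio test between the nearest and second nearest neighbor, sketched below. The name `match_features` and the threshold `ratio = 0.6` (applied to squared distances) are assumptions for illustration, and the sketch expects at least two features in the second image.

```python
import numpy as np

def match_features(feats_a, feats_b, ratio=0.6):
    """Return (index_in_a, index_in_b) pairs that pass the nearest-neighbor ratio test."""
    feats_a = np.asarray(feats_a, dtype=float)
    feats_b = np.asarray(feats_b, dtype=float)
    # Squared distance from every feature in a to every feature in b.
    d = ((feats_a[:, None, :] - feats_b[None, :, :]) ** 2).sum(axis=2)
    order = np.argsort(d, axis=1)
    rows = np.arange(len(feats_a))
    best, second = order[:, 0], order[:, 1]
    # Keep a match only if the best candidate clearly beats the runner-up.
    keep = d[rows, best] < ratio * d[rows, second]
    return [(int(i), int(best[i])) for i in np.flatnonzero(keep)]
```

When patches overlap heavily, as they did at my resolution, the best and second best distances end up close together, so a test like this either rejects the match or lets ambiguous ones through depending on the threshold.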

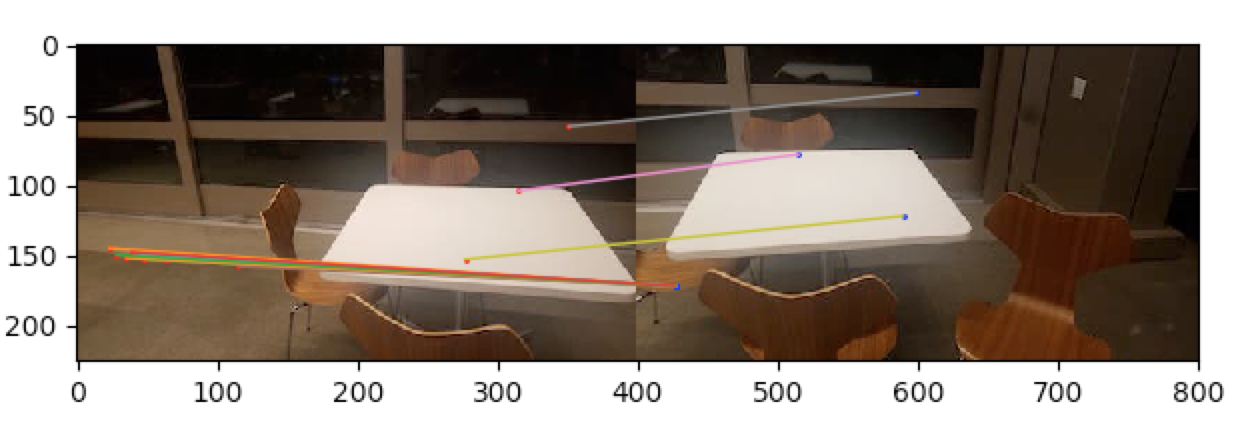

Having a low resolution meant that a lot of the points in our images came together at a single point. My RANSAC implementation picked the homography matrix with the largest number of points landing where their matches said they should, but without enough correspondences, I ended up with many points mapping to a single point. This was possible with my homography, but not what I intended.
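For reference, a basic RANSAC loop for homographies can be sketched like this. It is not my exact implementation: the helper names, the 4-point samples, the iteration count, the 2 pixel tolerance, and the fixed random seed are all assumptions for illustration.

```python
import numpy as np

def fit_homography(src, dst):
    """Least-squares homography with the ninth entry pinned to 1."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y, -u])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y, -v])
    rows.append([0, 0, 0, 0, 0, 0, 0, 0, 1])
    b = np.zeros(len(rows))
    b[-1] = 1.0
    h, *_ = np.linalg.lstsq(np.array(rows, dtype=float), b, rcond=None)
    return h.reshape(3, 3)

def project(H, pts):
    """Apply H to an (n, 2) array of (x, y) points."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

def ransac_homography(src, dst, n_iters=1000, tol=2.0, seed=0):
    """Return the homography refit on the largest inlier set, plus the inlier mask."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(n_iters):
        # Fit an exact homography to 4 randomly chosen correspondences.
        sample = rng.choice(len(src), size=4, replace=False)
        H = fit_homography(src[sample], dst[sample])
        # Count how many correspondences land within tol pixels of their match.
        with np.errstate(divide="ignore", invalid="ignore"):
            err = np.linalg.norm(project(H, src) - dst, axis=1)
        inliers = err < tol
        if inliers.sum() > best.sum():
            best = inliers
    if best.sum() < 4:
        raise ValueError("not enough inliers to fit a homography")
    # Refit on every inlier of the best model.
    return fit_homography(src[best], dst[best]), best
```

A loop like this only counts inliers, so it has no way of noticing when many source points collapse onto the same target point. Checking that the inliers are spread out, or rejecting degenerate samples, would be one way to guard against that.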
