Panoramas

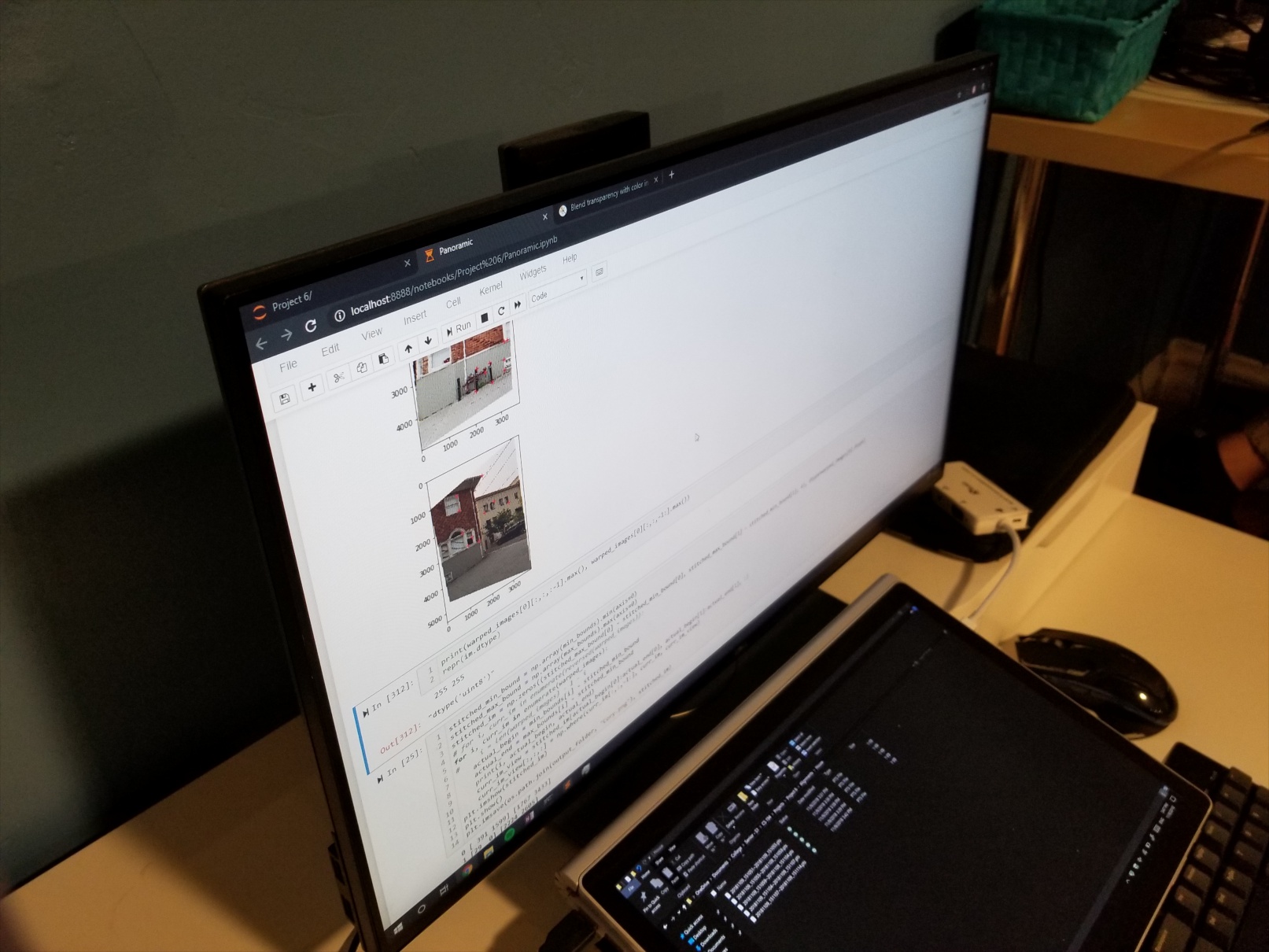

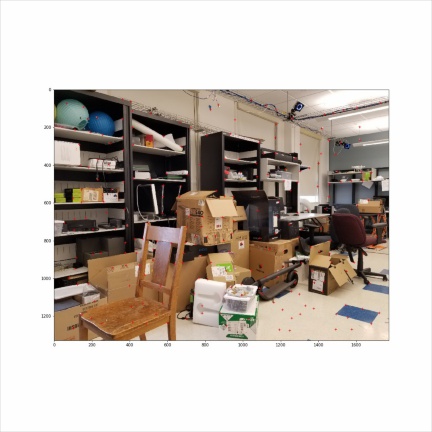

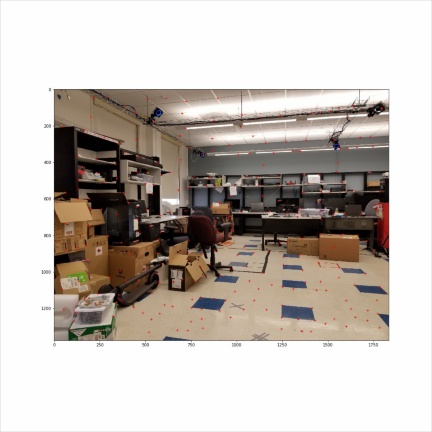

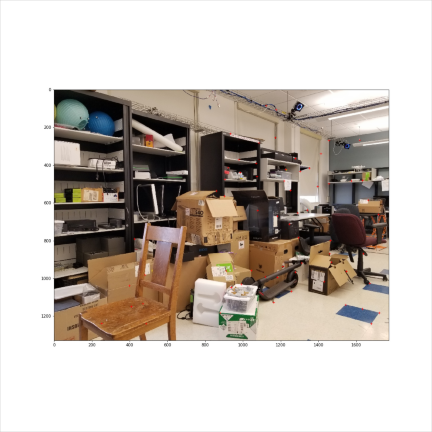

These are the original images, all taken from the same position while rotating the camera between shots.

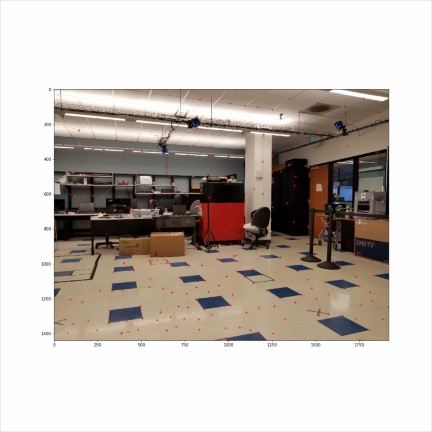

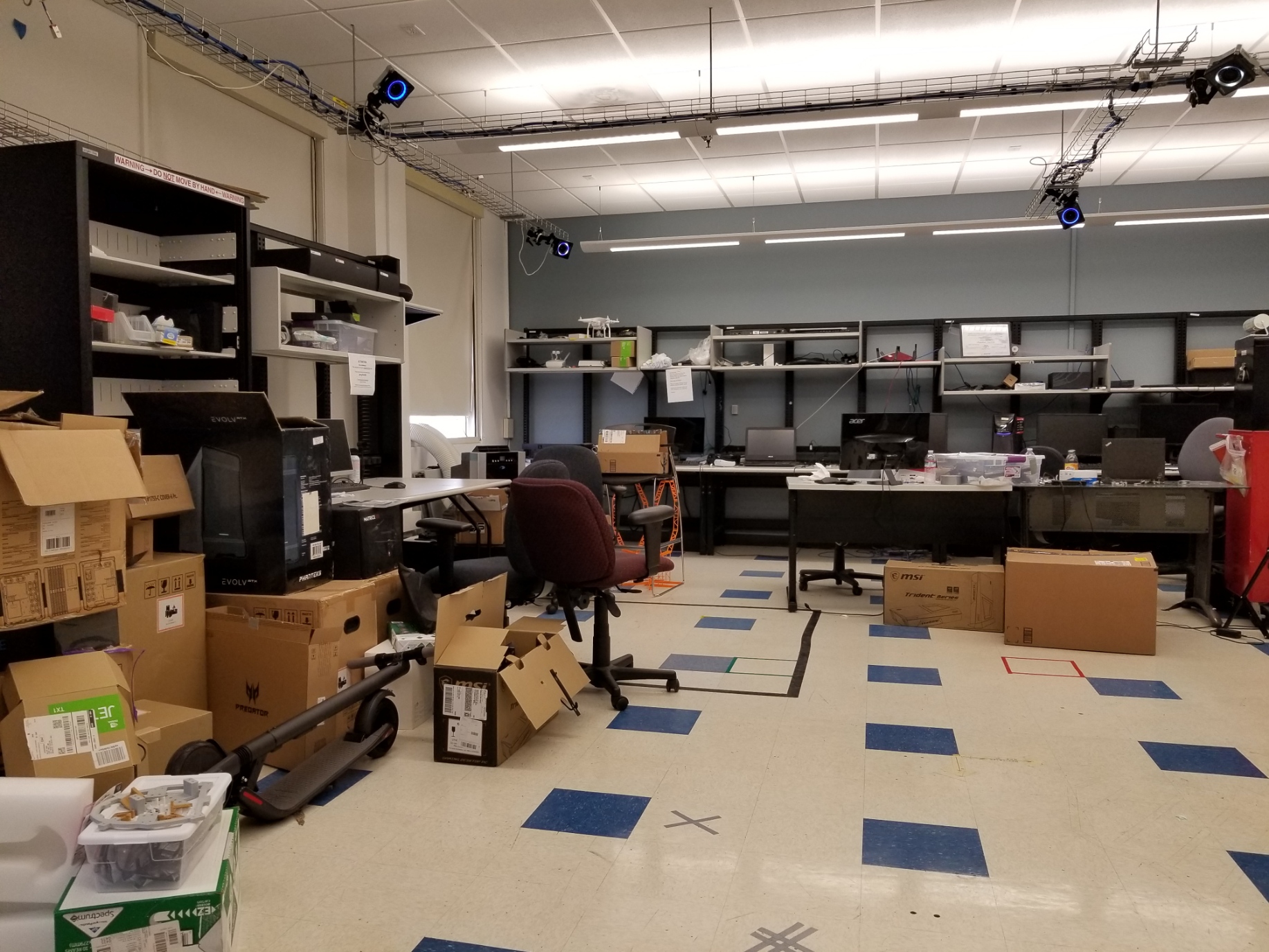

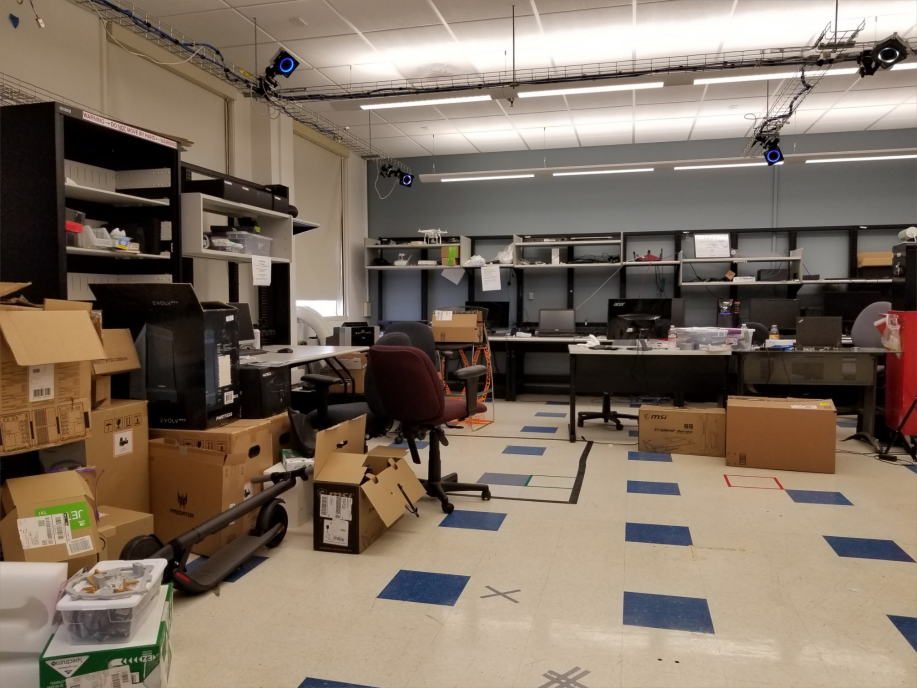

Next, the side images are warped to match the perspective of the middle image.
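As a rough illustration of what this warp does geometrically, here is a minimal sketch. It assumes the side camera differs from the middle one only by a rotation about the vertical axis, and that the focal length in pixels is known. The function name `warp_point` and all of its parameters are hypothetical and chosen for illustration; this is not necessarily how the warp for the images above was computed.

```python
import math

def warp_point(x, y, yaw_deg, focal_px, cx, cy):
    """Map pixel (x, y) of a side image onto the middle image's plane.

    Assumption: the side camera is the middle camera rotated by yaw_deg
    about the vertical axis, with the same focal length (in pixels) and
    the same principal point (cx, cy).
    """
    t = math.radians(yaw_deg)
    # Ray through the pixel, in the side camera's coordinates.
    X, Y, Z = (x - cx) / focal_px, (y - cy) / focal_px, 1.0
    # Rotate the ray into the middle camera's coordinates.
    xr = math.cos(t) * X + math.sin(t) * Z
    zr = -math.sin(t) * X + math.cos(t) * Z
    if zr <= 0:
        raise ValueError("point falls behind the middle image plane")
    # Project the rotated ray back onto the middle camera's flat image plane.
    return focal_px * xr / zr + cx, focal_px * Y / zr + cy
```

Under these assumptions, the centre of a side image lands `focal_px * tan(yaw)` pixels to the side of the middle image's centre. Pixels further from the middle image are stretched more, which gives warped side images their trapezoid-like shape.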

Finally, the images are blended into one image.
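One simple way to blend overlapping images is linear feathering, where the weight shifts gradually from one image to the other across the overlap. The sketch below shows the idea on a single row of pixel intensities. It is an assumption for illustration, not necessarily the blending method used for the panorama above, and `feather_blend` is a hypothetical helper name.

```python
def feather_blend(left, right, overlap):
    """Join two rows of pixel intensities with a linear cross-fade.

    Assumption: the last `overlap` samples of `left` and the first
    `overlap` samples of `right` show the same part of the scene.
    """
    if not 0 < overlap <= min(len(left), len(right)):
        raise ValueError("overlap must be between 1 and the shorter row length")
    out = list(left[:-overlap])  # part covered only by the left image
    start = len(left) - overlap
    for i in range(overlap):
        w = (i + 1) / (overlap + 1)  # weight of the right image, rising across the overlap
        out.append((1 - w) * left[start + i] + w * right[i])
    out.extend(right[overlap:])  # part covered only by the right image
    return out
```

For example, `feather_blend([10, 10, 10, 10], [20, 20, 20, 20], 2)` produces a row of six values that ramps from 10 to 20 across the two overlapping samples instead of jumping at a hard seam.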

One drawback of the flat projection used here is that the output image grows rapidly as the field of view approaches 180 degrees, because the perspective transformation stretches the side images more and more. This makes taking more than 3 photos with a normal wide-angle lens less useful.
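To get a feel for how fast the image grows, note that a flat (rectilinear) projection with focal length f needs a width of 2·f·tan(FOV/2) to cover a given horizontal field of view. The short sketch below evaluates that formula; the function name `flat_width` and the default focal length of 1 are illustrative choices.

```python
import math

def flat_width(fov_deg, focal=1.0):
    """Width of a flat projection covering fov_deg, in units of the focal length."""
    if not 0 < fov_deg < 180:
        raise ValueError("a flat projection only covers fields of view below 180 degrees")
    return 2 * focal * math.tan(math.radians(fov_deg) / 2)

for fov in (90, 120, 150, 170):
    print(fov, round(flat_width(fov), 2))
```

Going from 90 to 170 degrees makes the image more than ten times wider, and the width grows without bound as the field of view approaches 180 degrees.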

One interesting thing I tried was taking vertical photos, so that the camera's wide field of view expands the vertical view while the panorama stitching still maintains a wide horizontal field of view.

Note how close to the house this picture was taken. Without panorama functionality, a normal phone camera would not be able to capture this much of the house in one shot from the sidewalk beside it. Even wide-angle lenses will warp straight lines, a distortion that is not noticeable in this stitch.