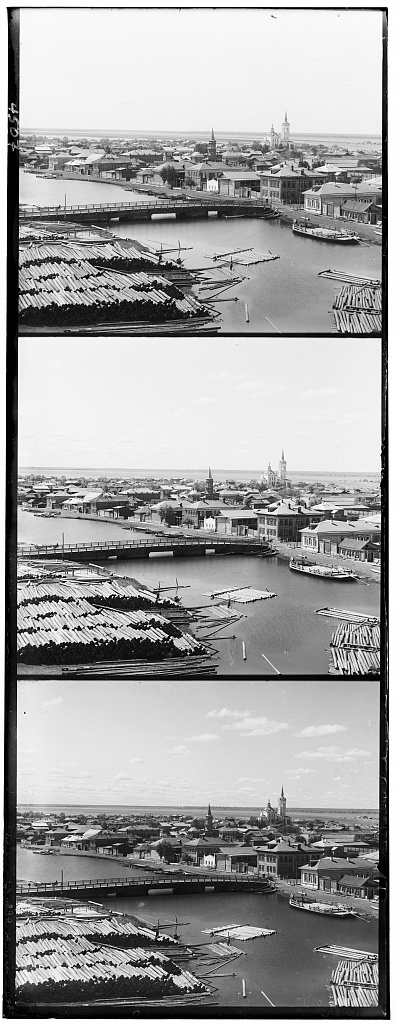

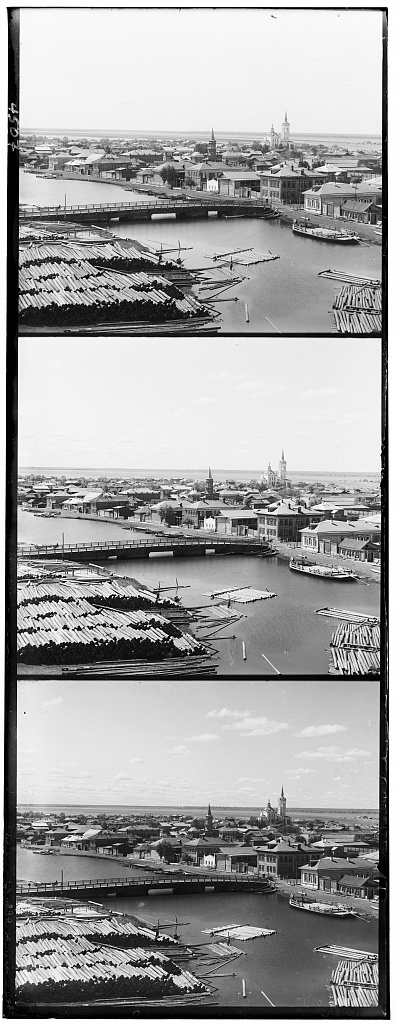

This project is based on images from the Prokudin-Gorskii image collection, a set of images of Russia where each scene was photographed in black-and-white three times using different color filters (R, G, B). I reconstructed the original colored scenes by aligning and stacking the black-and-white images into their respective color channels.

First, each combined image is split into three equal parts along its height, one per color channel. Then the red and green channel images are independently aligned onto the blue channel image. My original implementation of alignment searched a [-15, 15] displacement range in the x and y directions and chose the displacement that optimized an alignment metric between the channels.
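A minimal NumPy sketch of the split and the exhaustive search, under some assumptions: the function names are illustrative, the plate is a 2D grayscale array, and displacement is done with a circular shift (`np.roll`). Which third corresponds to which color depends on the plate layout, so the sketch just returns the parts top to bottom.

```python
import numpy as np

def split_plate(plate):
    """Split a stacked grayscale plate of shape (H, W) into three equal-height parts."""
    h = plate.shape[0] // 3  # any leftover rows at the bottom are dropped
    return plate[:h], plate[h:2 * h], plate[2 * h:3 * h]

def align_exhaustive(channel, reference, score, radius=15):
    """Return the (dy, dx) within [-radius, radius] that maximizes score(shifted, reference)."""
    best_score, best_shift = -np.inf, (0, 0)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Assumption: circular shift, the simplest way to displace a channel.
            shifted = np.roll(channel, (dy, dx), axis=(0, 1))
            s = score(shifted, reference)
            if s > best_score:
                best_score, best_shift = s, (dy, dx)
    return best_shift
```

For a metric that should be minimized, such as SSD, its negative can be passed as `score`.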

The first alignment metric I used was the Sum of Squared Differences. The formula looks like $$SSD = \sum_{i, j} (u_{i, j} - v_{i, j})^2$$ where the sum takes place across the elements of the image matrices \(u\) and \(v\). Minimizing this function is the same as minimizing the Euclidean distance between the image vectors.
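As a small sketch, the SSD formula above maps directly onto NumPy (the function name `ssd` is illustrative):

```python
import numpy as np

def ssd(u, v):
    """Sum of squared differences between two equally shaped images; lower is better."""
    diff = np.asarray(u, dtype=np.float64) - np.asarray(v, dtype=np.float64)
    return float(np.sum(diff ** 2))
```

Since the SSD is the squared Euclidean distance, `ssd(u, v)` agrees with `np.linalg.norm(u - v) ** 2` up to floating-point error.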

However, this method struggles with differences in brightness across different color channels. To fix this, I used the Normalized Cross-Correlation: $$NCC = \left \langle \frac{u}{||u||}, \frac{v}{||v||} \right \rangle$$ where \(u, v\) are image vectors. The normalization helps with brightness differences, and I maximize the correlation across displacements. This improved the results for many images, but some (in particular the Emir) still had poor results.
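A minimal sketch of the NCC formula above, assuming the images are flattened into vectors and that an all-zero image should simply score 0 (the function name and the zero guard are my additions for illustration):

```python
import numpy as np

def ncc(u, v):
    """Normalized cross-correlation: inner product of the unit-normalized image vectors."""
    u = np.asarray(u, dtype=np.float64).ravel()
    v = np.asarray(v, dtype=np.float64).ravel()
    denom = np.linalg.norm(u) * np.linalg.norm(v)
    if denom == 0:
        return 0.0  # assumption: treat an all-zero image as uncorrelated
    return float(np.dot(u, v) / denom)
```

Scaling one channel by a positive constant leaves the score unchanged, which is why the metric is less sensitive to overall brightness differences than SSD.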

Bells and Whistles: To further improve the metric, I made a couple of small adjustments to NCC: I first centered the image vectors about \(\vec{0}\). Then, when taking the inner product, I took the absolute values of the element-wise products before summing them together. I refer to this modified metric as CNCC below. Intuitively, it gives a high score when a weak area in one color channel lines up with a strong area in another, as well as for weak-with-weak and strong-with-strong matches; before this modification, only strong-with-strong areas had high correlation. This improved the output for the Emir, whose outfit shows up strongly in the blue and green color channels but weakly in the red. Below, we can see that the red channel is not well-aligned with the other two when using unmodified NCC.
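A rough sketch of this modified metric, assuming "centering" means subtracting each image's mean and that the normalization is applied after centering (both are my reading of the description, and the function name is illustrative):

```python
import numpy as np

def cncc(u, v):
    """Centered NCC variant that sums absolute element-wise products."""
    u = np.asarray(u, dtype=np.float64).ravel()
    v = np.asarray(v, dtype=np.float64).ravel()
    u = u - u.mean()  # assumption: center by subtracting the mean
    v = v - v.mean()
    denom = np.linalg.norm(u) * np.linalg.norm(v)
    if denom == 0:
        return 0.0  # assumption: a constant image carries no alignment signal
    # Absolute values make strong-with-weak overlaps count as much as strong-with-strong.
    return float(np.sum(np.abs(u * v)) / denom)
```

With this definition, an image and its brightness-inverted copy score as highly as an image compared with itself, which matches the intuition about the Emir's outfit.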

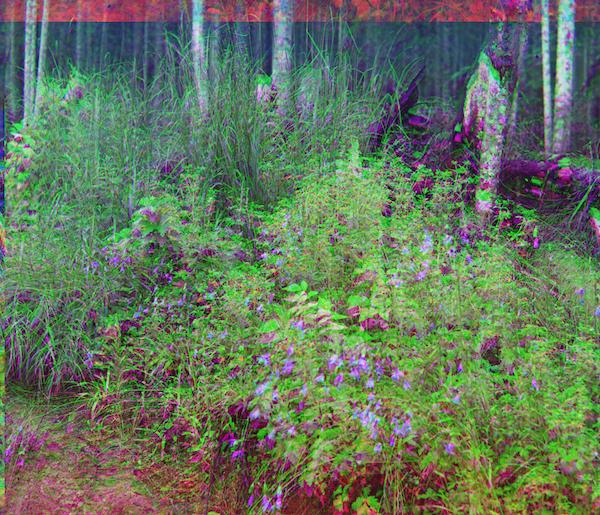

However, this metric was not always better than basic NCC. One of my extra test images was a bush; CNCC did not produce a good result on it, likely because the strongly green leaves and the weakly green shadows matched up roughly equally well with anything. To solve this, I first tried changing the pre-summation element-wise absolute product from CNCC to the following: $$\begin{cases} x \times y & \text{if \(x \times y \geq 0\)} \\ -c(x \times y) & \text{else} \end{cases}$$ where \(c \in (0, 1)\), in order to give a weaker weighting to the \(\text{strong} \times \text{weak}\) case compared to \(\text{strong} \times \text{strong}\) or \(\text{weak} \times \text{weak}\).
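The piecewise weighting can be sketched as follows, again assuming mean-centering and post-centering normalization; the name `weighted_cncc` and the default `c=0.5` are placeholders, not values from my experiments:

```python
import numpy as np

def weighted_cncc(u, v, c=0.5):
    """CNCC variant where opposite-sign products are down-weighted by c in (0, 1)."""
    u = np.asarray(u, dtype=np.float64).ravel()
    v = np.asarray(v, dtype=np.float64).ravel()
    u = u - u.mean()  # assumption: center by subtracting the mean
    v = v - v.mean()
    denom = np.linalg.norm(u) * np.linalg.norm(v)
    if denom == 0:
        return 0.0
    p = u * v
    # Same-sign products are kept as-is; opposite-sign products contribute c * |p|.
    weighted = np.where(p >= 0, p, -c * p)
    return float(weighted.sum() / denom)
```

Setting `c` close to 1 recovers the absolute-value behavior of CNCC, while `c` close to 0 ignores strong-with-weak overlaps almost entirely.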

I was not able to find a suitable \(c\)-value; instead, I took a weighted minimum between CNCC and NCC as follows: $$\min(1.4 \times CNCC, NCC)$$ The 1.4 was obtained experimentally. I called this metric MOE for mixture of experts, and it gives pretty good results for all images I tested on. In some cases, displacements seem a couple pixels worse than with NCC, but there are no particularly terrible results like the NCC Emir or CNCC bush.
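A minimal sketch of the MOE combination, with helper definitions included so the block stands alone. The helpers assume mean-centering for CNCC and a score of 0 for degenerate (zero-norm) inputs; the names and the `weight` parameter are illustrative.

```python
import numpy as np

def _normalized_sum(u, v, center, absolute):
    """Shared helper: optional centering, optional absolute products, then normalize."""
    u = np.asarray(u, dtype=np.float64).ravel()
    v = np.asarray(v, dtype=np.float64).ravel()
    if center:
        u, v = u - u.mean(), v - v.mean()
    denom = np.linalg.norm(u) * np.linalg.norm(v)
    if denom == 0:
        return 0.0
    p = u * v
    return float((np.abs(p) if absolute else p).sum() / denom)

def ncc(u, v):
    return _normalized_sum(u, v, center=False, absolute=False)

def cncc(u, v):
    return _normalized_sum(u, v, center=True, absolute=True)

def moe(u, v, weight=1.4):
    """Weighted minimum of the two metrics: min(weight * CNCC, NCC)."""
    return min(weight * cncc(u, v), ncc(u, v))
```

Taking the minimum means a displacement only scores well if both metrics agree that it is reasonable, which is one way to read why neither failure case dominates.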

At the same time as I iterated on alignment, I also tried a few methods for displacement.

To displace the red and green channel matrices, I originally considered a circular shift, where elements that leave one side of the matrix wrap around to the other side; e.g. if we displace \([1, 2, 3, 4]\) to the right by 1, the result is \([4, 1, 2, 3]\). However, it doesn't really make sense to consider how well the wrapped parts of the displaced image match with the original. Instead, I performed a non-circular shift and padded the new empty space with 0's. While this worked for some images, it isn't necessarily more appropriate than circular shifting; for example, with NCC, it may lower the correlation too much for any offset image. A similar issue arises from padding with 1's (pixel values lie in [0, 1]): the reward for matching with an offset area is often too high.

When I used 0 to pad, I got good results for some images and bad results for others; when I used 1 to pad, some of the previously good images became bad, and some of the bad ones became good. Compared to circular shifting, which had consistently near-good results, the good results were better but the bad results were worse. Then I tried 0.5 padding to balance between the two, and it worked pretty well, giving good results for all of the images.
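The non-circular shift with a constant fill can be sketched like this, assuming a 2D float image; the function name and the out-of-range guard are illustrative additions:

```python
import numpy as np

def shift_padded(img, dy, dx, fill=0.5):
    """Shift a 2D image by (dy, dx) without wrapping; vacated pixels are set to fill."""
    h, w = img.shape
    out = np.full((h, w), fill, dtype=np.float64)
    if abs(dy) >= h or abs(dx) >= w:
        return out  # shifted entirely out of frame
    src_y, dst_y = slice(max(0, -dy), h - max(0, dy)), slice(max(0, dy), h - max(0, -dy))
    src_x, dst_x = slice(max(0, -dx), w - max(0, dx)), slice(max(0, dx), w - max(0, -dx))
    out[dst_y, dst_x] = img[src_y, src_x]
    return out
```

Using the example from above, shifting `[[1, 2, 3, 4]]` right by 1 with `fill=0.5` gives `[[0.5, 1, 2, 3]]`, whereas `np.roll` would give `[[4, 1, 2, 3]]`.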

In addition, before doing any other processing of the 3 color channel images, I removed 1/15 of the pixels around the edge of each image, in order to remove the borders, which interfere with alignment metrics.
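A small sketch of the border crop, assuming that "1/15" means trimming 1/15 of the height from the top and bottom and 1/15 of the width from the left and right (the function name and the `denom` parameter are illustrative):

```python
import numpy as np

def crop_border(img, denom=15):
    """Trim 1/denom of the height and width from each side of the image."""
    h, w = img.shape[:2]
    dy, dx = h // denom, w // denom  # integer margins, rounded down
    return img[dy:h - dy, dx:w - dx]
```

Applying the same crop to all three channels keeps their shapes identical, which the alignment metrics rely on.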

After this cropping, there was not much difference between padding and wrapping for displacement shifting: wrapping the borders (largely black and white) probably hurt the earlier results, whereas wrapping the cropped images brings in comparatively tamer pixel values, perhaps near 0.5 on average. That being said, some images still came out better with padding.

The [-15, 15] pixel displacement range is not enough for the higher resolution .tif images, but calculating all the correlations for even this small displacement range is already very slow on such large images. To solve this, I implemented an image pyramid: the algorithm optimizes the metric on a [-1, 1] displacement range for a scaled-down version of the image (starting from roughly 16x16). This displacement is taken into account, and the algorithm then optimizes on [-1, 1] with a 2x larger image (32x32). This continues until we reach the original image. Because each level doubles the resolution, a [-1, 1] search around the previous level's optimal displacement (scaled up by 2) is enough to refine it. The maximum total displacement reachable this way is about 1/8 of the image size in each direction (twice the size of one pixel at the coarsest level).
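A simplified sketch of the coarse-to-fine search, under several assumptions: downscaling is done by averaging 2x2 blocks, displacement uses a circular shift (`np.roll`), and plain NCC is the default score. All function names and parameters are illustrative.

```python
import numpy as np

def downscale2(img):
    """Halve the resolution by averaging 2x2 blocks (an odd last row/column is trimmed)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    return img[:h, :w].reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def ncc(u, v):
    u, v = u.ravel(), v.ravel()
    denom = np.linalg.norm(u) * np.linalg.norm(v)
    return float(np.dot(u, v) / denom) if denom else 0.0

def align_pyramid(channel, reference, score=ncc, min_size=16):
    """Return the (dy, dx) that aligns channel onto reference, refined level by level."""
    if min(channel.shape) <= min_size:
        dy, dx = 0, 0  # coarsest level: start from no displacement
    else:
        cy, cx = align_pyramid(downscale2(channel), downscale2(reference), score, min_size)
        dy, dx = 2 * cy, 2 * cx  # one coarse pixel equals two pixels at this level
    best_score, best_shift = -np.inf, (dy, dx)
    for ddy in (-1, 0, 1):
        for ddx in (-1, 0, 1):
            cand = (dy + ddy, dx + ddx)
            s = score(np.roll(channel, cand, axis=(0, 1)), reference)
            if s > best_score:
                best_score, best_shift = s, cand
    return best_shift
```

Each level evaluates only 9 candidate displacements, so the total cost is dominated by the 9 evaluations at full resolution instead of the 961 needed for a [-15, 15] exhaustive search.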

The worst result was probably on three_generations. The man on the left is not perfectly aligned. However, since the fence on the right looks pretty well-aligned, I think this may be due to some movement of the man or his clothes between captures. This is not something that could be solved with linear displacement, and may require some other transformations.

[Figure: side-by-side results, NCC metric vs. improved MOE metric]