Images of the Russian Empire: Colorizing the Prokudin-Gorskii photo collection


1 Overview

In this project, we are given digitized Prokudin-Gorskii glass plate images, each containing three grayscale exposures, one per color channel (red, green, and blue). The goal is to convert them into color images with as few visual artifacts as possible.

2 Approaches

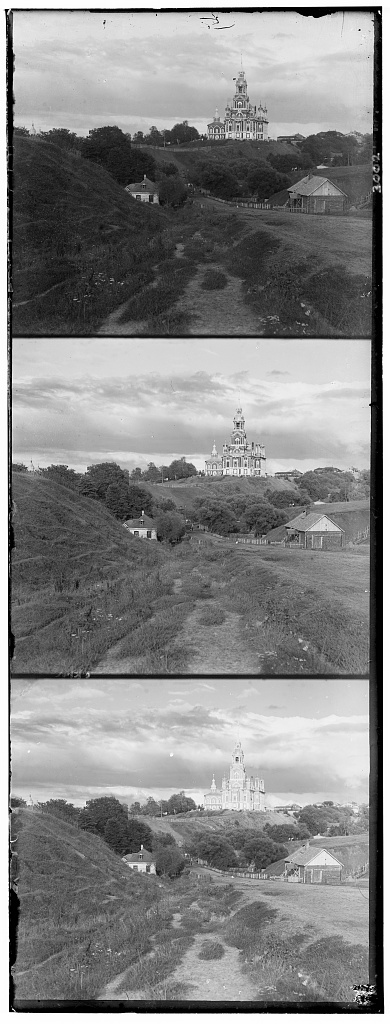

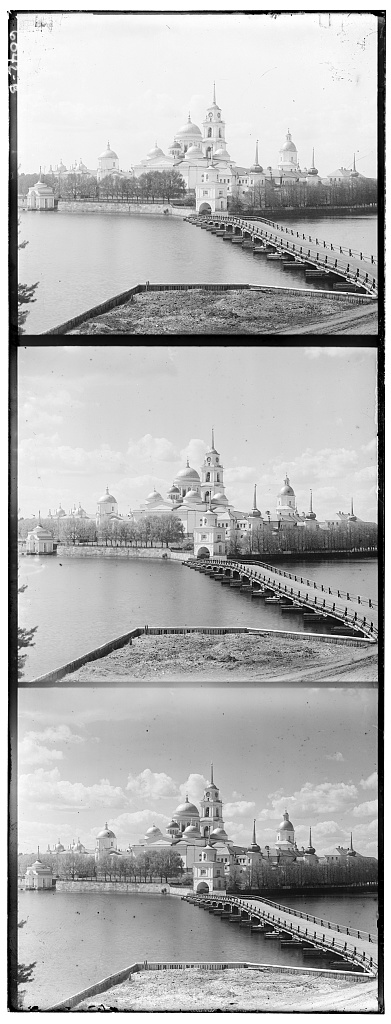

We need to extract the three color channel images, place them on top of each other, and align them so that they form a single color image. Some examples of the Prokudin-Gorskii glass plate images are shown above.
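As a minimal sketch of the extraction step, assume the plate is loaded as a single 2-D NumPy array with the three exposures stacked vertically in blue, green, red order from top to bottom (the writeup does not state the layout, so treat this as an assumption). Splitting the plate and stacking the channels could then look like this. The function names `split_plate` and `stack_rgb` are hypothetical.

```python
import numpy as np


def split_plate(plate):
    """Split a digitized glass plate into three equal-height channel images.

    Assumes (hypothetically) that the exposures are stacked vertically in
    B, G, R order from top to bottom; leftover rows at the bottom are dropped.
    """
    height = plate.shape[0] // 3
    b = plate[:height]
    g = plate[height:2 * height]
    r = plate[2 * height:3 * height]
    return b, g, r


def stack_rgb(r, g, b):
    """Place the (already aligned) channels on top of each other as H x W x 3."""
    return np.dstack([r, g, b])


if __name__ == "__main__":
    plate = np.arange(30, dtype=float).reshape(10, 3)  # toy 10 x 3 "plate"
    b, g, r = split_plate(plate)
    print(stack_rgb(r, g, b).shape)  # (3, 3, 3)
```

Stacking without alignment is exactly what produces the color fringing that the rest of the project removes.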

We need a way to compute the optimal displacement for each channel. We do this in two ways: a naive method (exhaustive search) and an optimized method (the image pyramid), which is needed for large images.

3 Naive Methods

One way to align the channels is to exhaustively search over a window of possible displacements (say [-15, 15] pixels), score each one using an image matching metric, and take the displacement with the best score. I used two metrics to score how well the images match: Sum of Squared Differences (SSD) and Normalized Cross Correlation (NCC). My implementation aligns the Green channel to the Blue channel and applies the best displacement to the Green channel; it then aligns the Red channel to the shifted Green channel and applies that displacement. The Blue channel is left untouched.
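A minimal NumPy sketch of the exhaustive search and the channel ordering described above, scored here with SSD. The names `align_exhaustive` and `align_channels` are hypothetical, and shifting with `np.roll` (which wraps pixels around the image edges) is a simplifying assumption rather than the author's code.

```python
import numpy as np


def ssd(a, b):
    """Sum of squared differences; lower means a better match."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.sum((a - b) ** 2))


def align_exhaustive(moving, reference, window=15):
    """Return the (dy, dx) shift in [-window, window]^2 that minimizes SSD."""
    best_score, best_shift = np.inf, (0, 0)
    for dy in range(-window, window + 1):
        for dx in range(-window, window + 1):
            # np.roll wraps pixels around the edges (simplifying assumption).
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))
            score = ssd(shifted, reference)
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift


def align_channels(r, g, b, window=15):
    """Align G to B, then R to the shifted G; B is left untouched."""
    g_shift = align_exhaustive(g, b, window)
    g_aligned = np.roll(g, g_shift, axis=(0, 1))
    r_shift = align_exhaustive(r, g_aligned, window)
    r_aligned = np.roll(r, r_shift, axis=(0, 1))
    return r_aligned, g_aligned, b
```

The search evaluates (2 * window + 1)^2 candidate shifts per channel, which is cheap for the small .jpg plates but grows quickly with the window size.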

3.1 Sum of Squared Differences

The SSD score is sum(sum((image1-image2).^2)), where the sum is taken over all pixel values; a lower score means a better match.
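For reference, the same score in standard notation, with a tiny worked example on two hypothetical 2×2 images:

$$
\mathrm{SSD}(I_1, I_2) = \sum_{x}\sum_{y}\bigl(I_1(x,y) - I_2(x,y)\bigr)^2
$$

$$
I_1 = \begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix},\quad
I_2 = \begin{pmatrix} 1 & 1 \\ 5 & 4 \end{pmatrix}
\;\Rightarrow\;
\mathrm{SSD} = 0^2 + 1^2 + (-2)^2 + 0^2 = 5
$$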

3.2 Normalized Cross Correlation

Normalized Cross Correlation (NCC) computes the dot product between two normalized vectors: image1/||image1|| and image2/||image2||; a higher score means a better match. The results for this method were much clearer than the SSD results, even though it took slightly more time.
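A minimal NumPy version of the NCC score as defined above (the name `ncc` is hypothetical). Each image is flattened into a vector and scaled to unit length, so the score reaches 1 when one image is a positive multiple of the other.

```python
import numpy as np


def ncc(image1, image2):
    """Dot product of the two images after scaling each to unit length."""
    a = np.asarray(image1, dtype=float).ravel()
    b = np.asarray(image2, dtype=float).ravel()
    return float(np.dot(a / np.linalg.norm(a), b / np.linalg.norm(b)))
```

To use it in the exhaustive search, keep the shift with the highest score instead of the lowest. A common variant subtracts each image's mean before normalizing (zero-normalized cross correlation), which additionally makes the score insensitive to a constant brightness offset.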

Applying NCC to the smaller images (.jpg) gives the following displacements:

cathedral - R: (2, 10); G: (2, 4); B: (0, 0)

monastery - R: (2, 4); G: (2, -2); B: (0, 0)

tobolsk - R: (2, 6); G: (2, 2); B: (0, 0)

4 Image Pyramid Method

Exhaustive search becomes prohibitively expensive when the pixel displacement is large (which is the case for the high-resolution glass plate scans). To align the bigger images, we use an image pyramid: the image is repeatedly downscaled by a factor of 2, and the best displacement is first found on the smallest version. The processing is done sequentially, starting from the coarsest scale (smallest image) and going down the pyramid to the full-resolution image, updating the estimate at each level. Since NCC gave the best results on the small images, we use it instead of SSD.
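A compact sketch of the coarse-to-fine search, assuming 2×2 block averaging for downscaling, a ±15-pixel exhaustive search at the coarsest level, and a ±2-pixel refinement at every finer level. These parameter values and all function names are illustrative assumptions, not the author's settings; shifting uses `np.roll` for simplicity.

```python
import numpy as np


def ncc(a, b):
    """Dot product of the two images after scaling each to unit length."""
    a = np.asarray(a, dtype=float).ravel()
    b = np.asarray(b, dtype=float).ravel()
    return float(np.dot(a / np.linalg.norm(a), b / np.linalg.norm(b)))


def search(moving, reference, center, radius):
    """Exhaustively try shifts within `radius` of `center`; return the best by NCC."""
    cy, cx = center
    best_score, best = -np.inf, center
    for dy in range(cy - radius, cy + radius + 1):
        for dx in range(cx - radius, cx + radius + 1):
            score = ncc(np.roll(moving, (dy, dx), axis=(0, 1)), reference)
            if score > best_score:
                best_score, best = score, (dy, dx)
    return best


def downscale2(img):
    """Halve the resolution by averaging 2x2 blocks (an odd last row/column is dropped)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    return img[:h, :w].reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


def align_pyramid(moving, reference, min_size=64, coarse_radius=15, refine_radius=2):
    """Coarse-to-fine alignment: solve at the smallest scale, then refine upward."""
    if min(moving.shape) <= min_size:
        # Coarsest level: plain exhaustive search around zero displacement.
        return search(moving, reference, (0, 0), coarse_radius)
    # Estimate on half-resolution copies, then double it for this level.
    dy, dx = align_pyramid(downscale2(moving), downscale2(reference),
                           min_size, coarse_radius, refine_radius)
    # Refine the doubled estimate with a small local search.
    return search(moving, reference, (2 * dy, 2 * dx), refine_radius)
```

Because each finer level only refines the doubled estimate from the level below, the work per level stays small, while a ±15-pixel search at the coarsest level can still recover displacements of roughly 15 · 2^k pixels at full resolution after k halvings.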

We generate the following results using the provided large images:


icon - R: (20, 92); G: (16, 44); B: (0, 0)

emir - R: (16, 112); G: (8, 14); B: (0, 0)

melons - R: (4, 180); G: (4, 84); B: (0, 0)

harvesters - R: (-8, 184); G: (-4, 120); B: (0, 0)

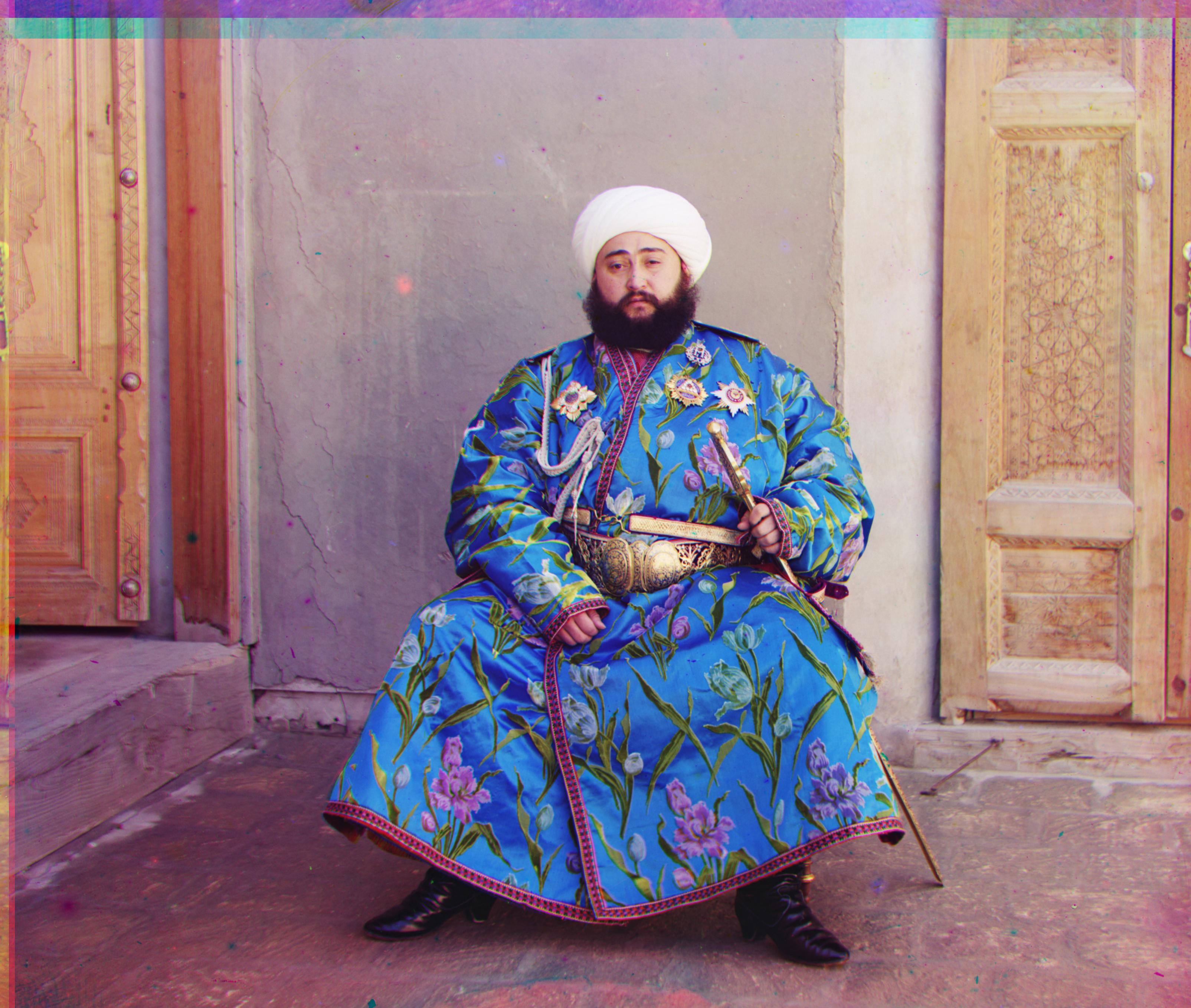

We can see that neither the harvesters image nor the emir image aligns well. Looking at the grayscale channel images, we see that the emir's channels do not have the same brightness values (they record different color channels, so the same region can be bright in one and dark in another). Moreover, the harvesters probably moved during the exposures, which blurs the image and makes the channels differ from one another. Thus, we need a different way to evaluate the alignment.

5 Bells & Whistles

Auto Cropping

I implemented auto-cropping, which identifies the black borders and cuts them off. This makes the images easier to align, since the borders no longer affect the matching score.

I applied a Sobel filter to the image and looked for bright lines in the filter response that mark a border.

To do this, I required the border to take up at most 10% of the image along each axis, and I set a threshold above which the sum along a row or column is considered a border.
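A rough NumPy sketch of this idea for a single 2-D channel. The Sobel filter is written out with array slicing to avoid extra dependencies, and the threshold rule (a row or column counts as a border edge when its summed edge response exceeds half of the strongest row or column) is a hypothetical choice; only the 10% search limit comes from the description above. All function names are illustrative.

```python
import numpy as np


def sobel_magnitude(img):
    """Sobel gradient magnitude computed with NumPy slicing (edge-padded)."""
    p = np.pad(np.asarray(img, dtype=float), 1, mode="edge")
    # Horizontal gradient: right column minus left column, weighted 1-2-1.
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2])
    # Vertical gradient: bottom row minus top row, weighted 1-2-1.
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:])
    return np.hypot(gx, gy)


def find_crop(img, max_frac=0.10, thresh_frac=0.5):
    """Return (top, bottom, left, right) crop bounds for a 2-D image."""
    edges = sobel_magnitude(img)
    h, w = edges.shape
    row_sums, col_sums = edges.sum(axis=1), edges.sum(axis=0)
    # Borders are only searched for within the outer 10% of each axis.
    mh, mw = max(1, int(h * max_frac)), max(1, int(w * max_frac))

    def border_width(sums, limit):
        # Innermost strong row/column among the first `limit` entries (hypothetical rule).
        cutoff = thresh_frac * sums.max()
        strong = np.nonzero(sums[:limit] > cutoff)[0]
        return int(strong.max()) + 1 if strong.size else 0

    top = border_width(row_sums, mh)
    bottom = h - border_width(row_sums[::-1], mh)
    left = border_width(col_sums, mw)
    right = w - border_width(col_sums[::-1], mw)
    return top, bottom, left, right


def autocrop(img, **kwargs):
    top, bottom, left, right = find_crop(img, **kwargs)
    return img[top:bottom, left:right]
```

For a color image, one could compute the bounds on each channel (or on their mean) and apply the same crop to all three channels.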

Auto Contrast

In my implementation, I find a threshold value that separates the modes of the intensity histogram well, then rescale the intensities using that value. Specifically, I use Yen's method to get the upper threshold value; all pixels with an intensity higher than this value are assumed to be foreground.
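A NumPy sketch of Yen-based auto-contrast. `yen_threshold` re-implements Yen's criterion in the form used by scikit-image's `skimage.filters.threshold_yen`, and the rescaling rule (map the range from the minimum intensity up to the threshold onto [0, 1] and saturate everything above it) is an assumption about how "rescale using that value" could be done. Both function names are hypothetical.

```python
import numpy as np


def yen_threshold(img, nbins=256):
    """Yen's threshold: the cut that maximizes the entropic correlation of the two classes."""
    values = np.asarray(img, dtype=float).ravel()
    if values.min() == values.max():
        return float(values.min())  # flat image: nothing to separate
    hist, edges = np.histogram(values, bins=nbins)  # range defaults to (min, max)
    centers = (edges[:-1] + edges[1:]) / 2
    pmf = hist / hist.sum()
    p1 = np.cumsum(pmf)                      # probability mass below each cut
    p1_sq = np.cumsum(pmf ** 2)
    p2_sq = np.cumsum(pmf[::-1] ** 2)[::-1]  # tail sums of the squared pmf
    # The first and last bins are non-empty, which keeps the log argument positive.
    crit = np.log((p1[:-1] * (1.0 - p1[:-1])) ** 2 / (p1_sq[:-1] * p2_sq[1:]))
    return float(centers[np.argmax(crit)])


def auto_contrast(img):
    """Map [min, Yen threshold] to [0, 1]; brighter (foreground) pixels saturate at 1."""
    img = np.asarray(img, dtype=float)
    lo, hi = img.min(), yen_threshold(img)
    if hi <= lo:
        return img.copy()  # nothing to stretch
    return np.clip((img - lo) / (hi - lo), 0.0, 1.0)
```

With scikit-image installed, `skimage.filters.threshold_yen` combined with `skimage.exposure.rescale_intensity(img, in_range=(img.min(), t), out_range=(0, 1))` should give a very similar result.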

6 Results

Comparing all the results, the recursive pyramid method combined with auto-cropping and auto-contrast produces the best-looking images without losing much information. The same holds for the small images.

These are the commands used to generate the final outputs:

    python3 main.py ./lady.tif ./lady_final.jpg --autocrop --autocontrast
    python3 main.py ./onion_church.tif ./onion_final.jpg --autocrop --autocontrast
    python3 main.py ./icon.tif ./icon_final.jpg --autocrop --autocontrast
    python3 main.py ./harvesters.tif ./harvest_final.jpg --autocrop --autocontrast
    python3 main.py ./emir.tif ./emir_final.jpg --autocrop --autocontrast
    python3 main.py ./cathedral.jpg ./cathedral_final.jpg --autocrop --autocontrast
    python3 main.py ./monastery.jpg ./monastery_final.jpg --autocrop --autocontrast
    python3 main.py ./tobolsk.jpg ./tobolsk_final.jpg --autocrop --autocontrast
    python3 main.py ./melons.tif ./melons_final.jpg --autocrop --autocontrast

Here are my final outputs:


icon - R: (20, 88); G: (16, 40); B: (0, 0)

lady - R: (12, 108); G: (8, 48); B: (0, 0)

melons - R: (16, 180); G: (12, 84); B: (0, 0)

harvesters - R: (12, 124); G: (16, 60); B: (0, 0)

emir - R: (40, 104); G: (28, 48); B: (0, 0)

onion_church - R: (36, 108); G: (24, 52); B: (0, 0)

self-portrait - R: (36, 172); G: (28, 76); B: (0, 0)

train - R: (32, 88); G: (4, 44); B: (0, 0)

three generations - R: (8, 112); G: (12, 52); B: (0, 0)

workshop - R: (12, 104); G: (0, 52); B: (0, 0)

I also applied this method to some other images from the Prokudin-Gorskii collection.

R: (-4, 152); G: (0, 40); B: (0, 0)

R: (8, 108); G: (4, 24); B: (0, 0)