Project 1 - Colorizing the Prokudin-Gorskii photo collection

Anderson Lam (3033765427)

Overview

For this project, we look at the Prokudin-Gorskii photo collection and attempt to colorize its photos.

We are able to do this because each scene was photographed three times, once each through a red, a green, and a blue filter.

The basic idea is to split each image into its three color channels, then align the channels so that the colors come out cleanly when they are stacked.
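A minimal sketch of the splitting step. It assumes the scan is a single grayscale array with the blue, green, and red exposures stacked top to bottom in equal thirds, which is how the digitized plates for this kind of project are usually laid out. The function name `split_channels` is hypothetical.

```python
import numpy as np

def split_channels(plate):
    """Split a vertically stacked plate into (b, g, r) channels.

    Assumes blue is on top, then green, then red, each one third of the
    height; leftover rows from the integer division are dropped.
    """
    height = plate.shape[0] // 3
    b = plate[:height]
    g = plate[height:2 * height]
    r = plate[2 * height:3 * height]
    return b, g, r

# Tiny synthetic plate: 6 rows, so each channel gets 2 rows.
plate = np.arange(24, dtype=float).reshape(6, 4)
b, g, r = split_channels(plate)
print(b.shape, g.shape, r.shape)  # (2, 4) (2, 4) (2, 4)
```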

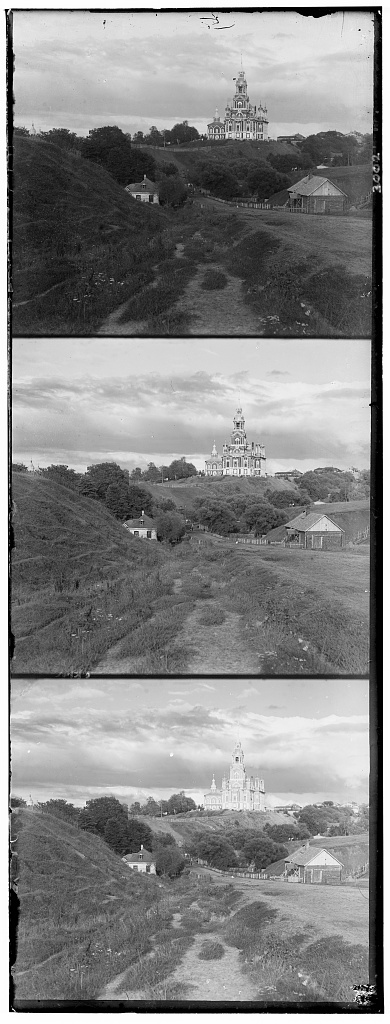

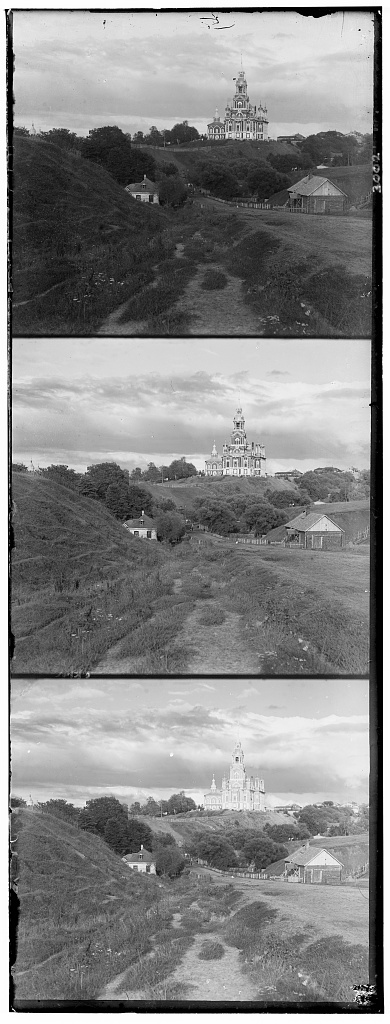

Here is a sample of an image that hasn't been stacked yet:

Cropping

According to the "Final Advice" page for this project, "The borders of the images will probably hurt your results, try computing your metric on the internal pixels only."

After testing with and without cropping, I found that cropping drastically improves the results, since the image borders carry little information that helps align the images. I decided to crop all the images by 10% of their width and height before running my align functions.
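A sketch of the cropping step. It assumes "10%" means removing 10% of the height and width from each side; if the intent was 10% in total, pass `frac=0.05` instead. The name `crop_border` is hypothetical.

```python
import numpy as np

def crop_border(channel, frac=0.10):
    """Drop `frac` of the height and width from every side of a 2-D channel."""
    h, w = channel.shape
    dy, dx = int(h * frac), int(w * frac)
    return channel[dy:h - dy, dx:w - dx]

channel = np.zeros((100, 50))
print(crop_border(channel).shape)  # (80, 40)
```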

Aligning

In class, we were given two suggested metrics, SSD (Sum of Squared Differences) and NCC (Normalized Cross-Correlation), for generating a score that indicates how well aligned two images are. We compared these scores while iterating through a window of [-15, 15] pixel displacements, circularly shifting one image relative to the other.

We started with the idea of aligning the red image to blue, then the green image to blue, and then stacking them together. However, I found it worked better to align the green image to blue first, then align the red image to that aligned green image. In other words, I did: ag = align(g, b), ar = align(r, ag), then np.dstack([ar, ag, b]).
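The ordering described above can be sketched as follows. This is an illustration, not the submitted code: `align` here is an exhaustive search that circularly shifts the moving channel with `np.roll` over the [-15, 15] window and keeps the shift with the lowest SSD. For brevity it scores the full arrays; in practice you would score the cropped interior.

```python
import numpy as np

def ssd(a, b):
    """Sum of squared differences; lower means better aligned."""
    return np.sum((a - b) ** 2)

def align(moving, reference, window=15):
    """Try every circular shift in [-window, window] on both axes.

    Returns the shifted `moving` channel and the best (dy, dx) displacement.
    """
    best_score, best_shift = np.inf, (0, 0)
    for dy in range(-window, window + 1):
        for dx in range(-window, window + 1):
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))
            score = ssd(shifted, reference)
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return np.roll(moving, best_shift, axis=(0, 1)), best_shift

# Synthetic check: green and red are known circular shifts of blue.
rng = np.random.default_rng(0)
b = rng.random((64, 64))
g = np.roll(b, (-3, 2), axis=(0, 1))
r = np.roll(b, (5, -4), axis=(0, 1))

ag, g_shift = align(g, b)    # green -> blue
ar, r_shift = align(r, ag)   # red -> aligned green
color = np.dstack([ar, ag, b])
print(g_shift, r_shift, color.shape)  # (3, -2) (-5, 4) (64, 64, 3)
```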

SSD

I started with SSD, which is the sum of (image1 - image2)^2 over all pixels. Searching over the [-15, 15] pixel range, this method aligned the images really well, so I continued to use it in my pyramid function.
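Written out, the SSD search scores each candidate displacement in the window and keeps the smallest. In this notation (mine, not from the original), I is the channel being shifted, J is the reference channel, and shifted indices wrap around, matching the circular shifting described above.

```latex
\mathrm{SSD}(d_x, d_y) = \sum_{x,y} \bigl( I(x + d_x,\, y + d_y) - J(x, y) \bigr)^2,
\qquad
(d_x^{*}, d_y^{*}) = \operatorname*{arg\,min}_{d_x,\, d_y \in [-15,\, 15]} \mathrm{SSD}(d_x, d_y)
```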

NCC

I also tried NCC, which is the dot product between the two images after each is flattened into a vector and normalized. My implementation of NCC didn't work as well as my implementation of SSD, so I stopped using it, since SSD already aligned the images well.
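For comparison, a sketch of NCC as described: flatten both images, normalize them to unit length, and take the dot product. It also subtracts each image's mean first, a common variant that is an assumption on my part. Higher is better, so an NCC-based search would maximize the score rather than minimize it. With mean subtraction, NCC ignores a uniform brightness scale or offset between channels, which plain SSD does not.

```python
import numpy as np

def ncc(a, b):
    """Normalized cross-correlation of two equally sized images.

    Each image is flattened, mean-centered, and scaled to unit length;
    the result is their dot product, in [-1, 1] (higher = better match).
    """
    a = a.ravel() - a.mean()
    b = b.ravel() - b.mean()
    return np.dot(a / np.linalg.norm(a), b / np.linalg.norm(b))

rng = np.random.default_rng(1)
img = rng.random((32, 32))
print(ncc(img, 2 * img + 3))               # brightness change: score stays ~1
print(ncc(img, np.roll(img, 5, axis=0)))   # misaligned: score near 0
```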

Pyramid

We implement a pyramid algorithm because it is a much faster search procedure for extremely large photos, such as the .tif files.

Without it, the plain SSD or NCC search would take hours to run on a large .tif file.

For my implementation, I used SSD as the base case (when the image dimensions are small); otherwise, I rescaled the photo and ran the pyramid function recursively. In addition, I ran the SSD function on a smaller window of pixels (than [-15, 15]) based on the number of levels the recursive function had taken (2^level). This is needed to correct for any inaccuracies introduced by rescaling.

I found this approach on the class Piazza thread.
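A minimal sketch of a coarse-to-fine pyramid search. It differs in detail from the description above and is labeled as assumptions: each level downsamples by 2 with 2x2 block averaging (instead of a library rescale), the base case runs the full [-15, 15] SSD search once the image is at most `min_size` pixels on a side, and each finer level only refines the doubled coarse estimate within ±2 pixels. The names `pyramid_align`, `search`, `downsample`, and `min_size` are hypothetical.

```python
import numpy as np

def ssd(a, b):
    """Sum of squared differences; lower means better aligned."""
    return np.sum((a - b) ** 2)

def search(moving, reference, center, radius):
    """Best circular (dy, dx) shift within `radius` of `center`, by lowest SSD."""
    best_score, best_shift = np.inf, center
    for dy in range(center[0] - radius, center[0] + radius + 1):
        for dx in range(center[1] - radius, center[1] + radius + 1):
            score = ssd(np.roll(moving, (dy, dx), axis=(0, 1)), reference)
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift

def downsample(img):
    """Halve each dimension by averaging 2x2 blocks (an odd last row/col is dropped)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    img = img[:h, :w]
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def pyramid_align(moving, reference, min_size=64):
    """Return the (dy, dx) circular shift that aligns `moving` to `reference`."""
    if max(moving.shape) <= min_size:
        # Base case: the image is small, so an exhaustive [-15, 15] search is cheap.
        return search(moving, reference, (0, 0), 15)
    coarse = pyramid_align(downsample(moving), downsample(reference), min_size)
    # One pixel at the coarser level is two pixels here: double the estimate,
    # then refine it in a small window to absorb rounding from the rescale.
    guess = (2 * coarse[0], 2 * coarse[1])
    return search(moving, reference, guess, 2)

rng = np.random.default_rng(2)
ref = rng.random((256, 256))
mov = np.roll(ref, (-37, 22), axis=(0, 1))
print(pyramid_align(mov, ref))  # (37, -22)
```

The refinement window stays small at every level, so the total work is dominated by the single exhaustive search at the coarsest level, even though the recovered shift (37 rows here) is well outside the base [-15, 15] window.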

Results + Displacements

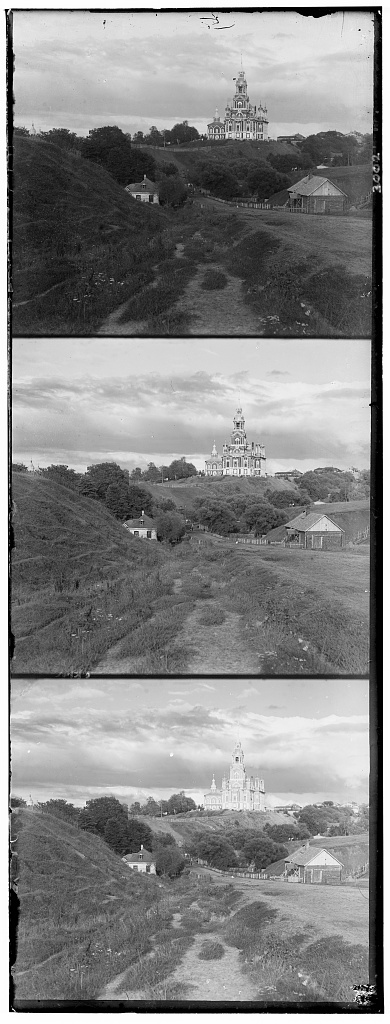

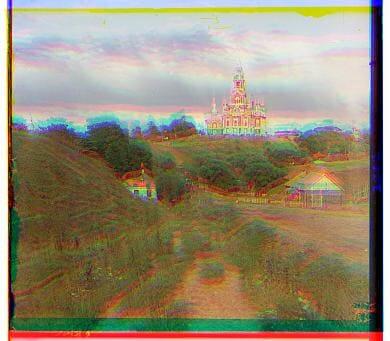

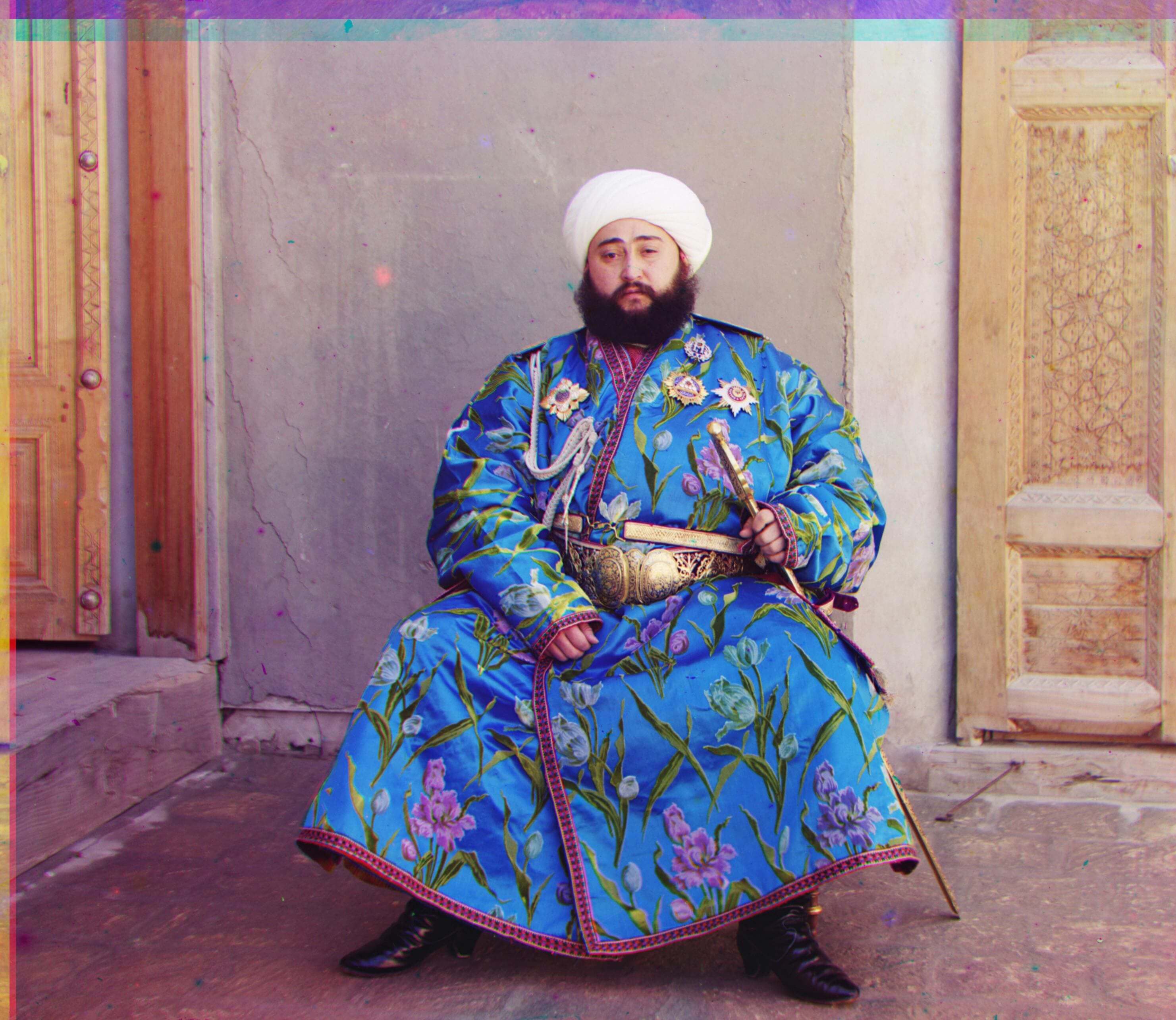

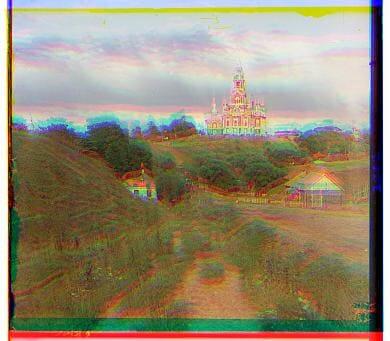

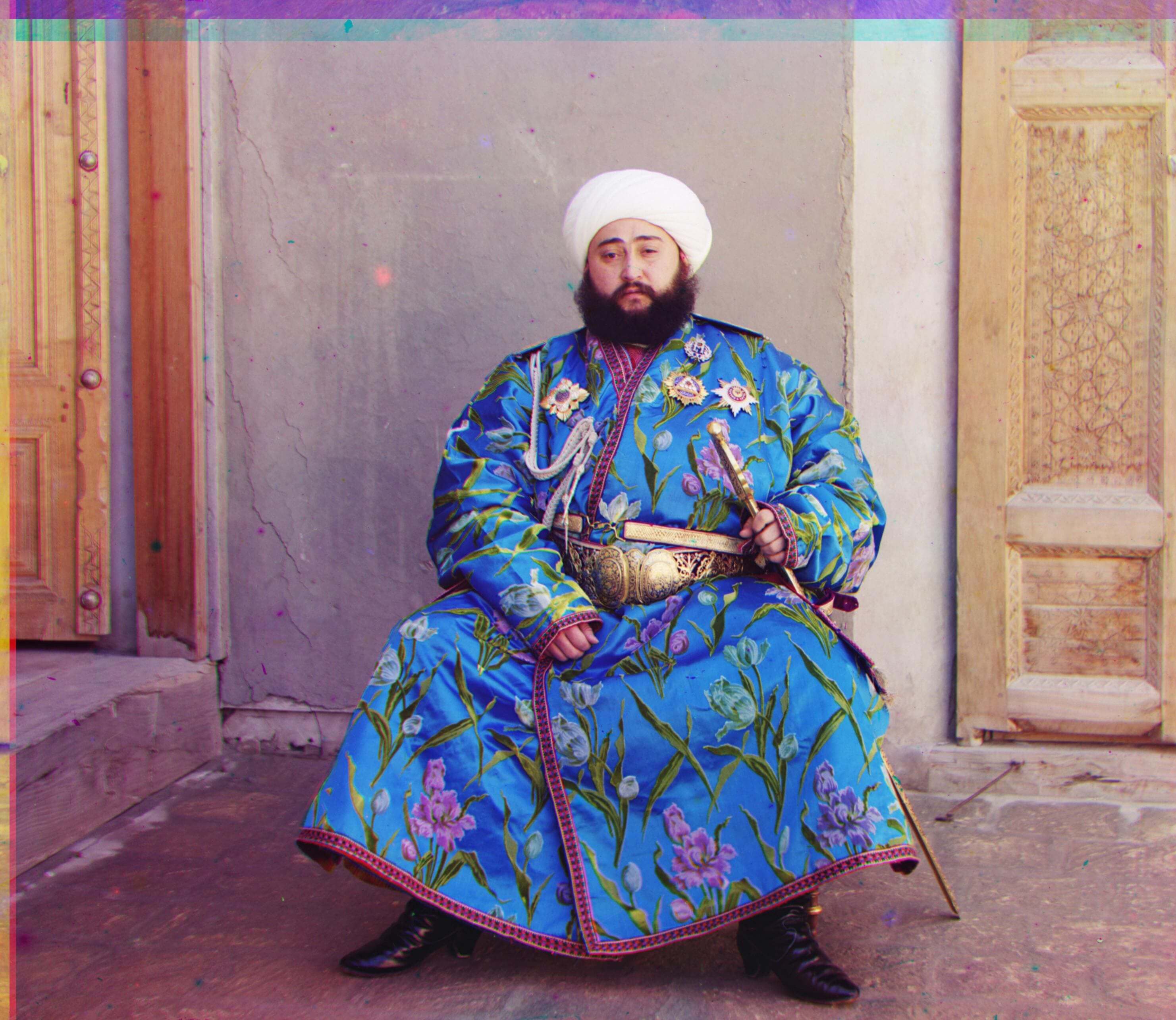

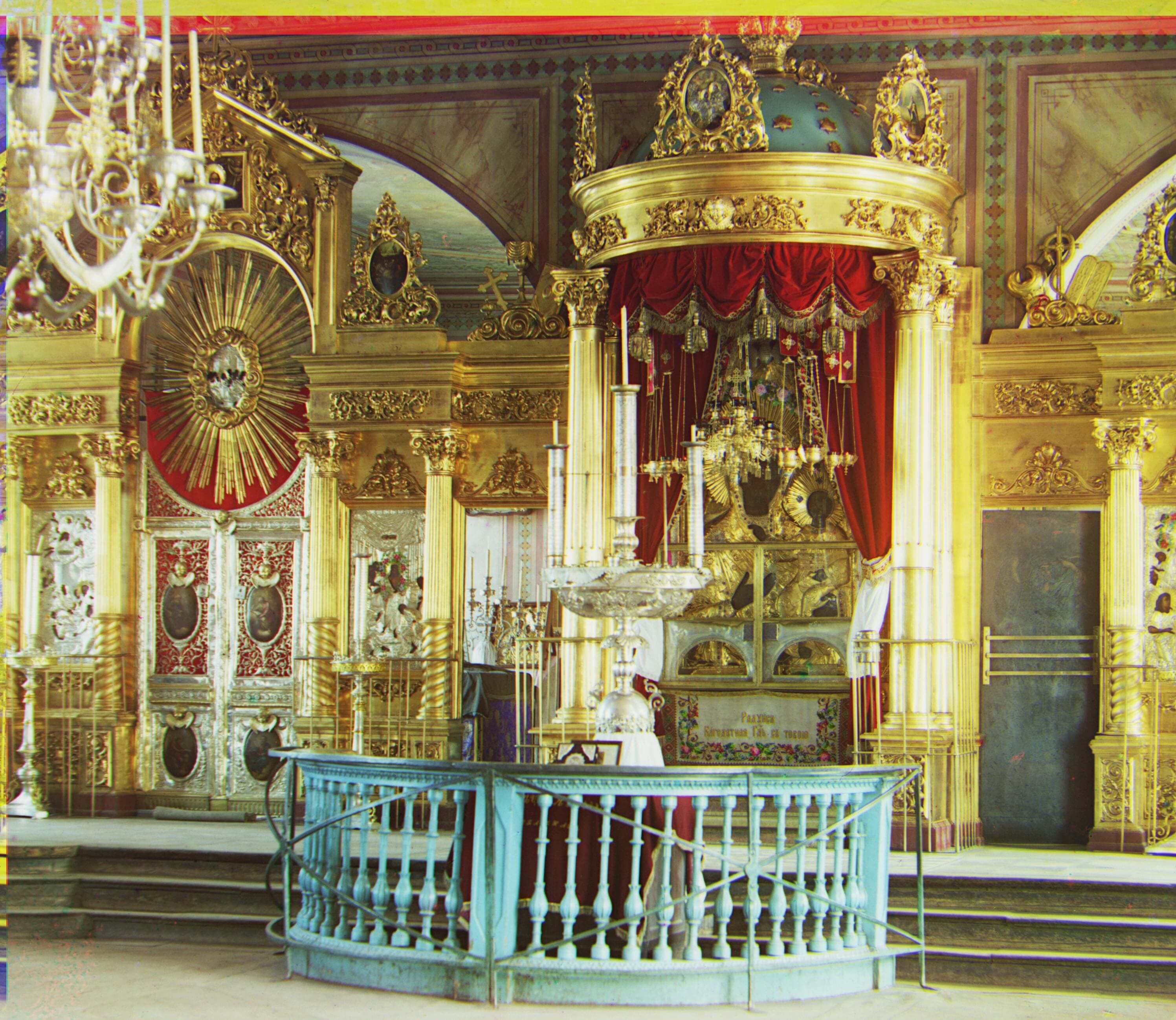

The following pictures show the images before and after cropping and aligning, along with the displacement values. I was able to align my images well, although some images have a little misalignment in some channels, possibly due to the subject moving, differences in lighting, or simply because the colors were extremely similar.

1. castle.tif

Green to Blue: [34, 2], Red to GreenBlue: [96, 4]

2. cathedral.jpg

Green to Blue: [5, 2], Red to GreenBlue: [12, 3]

3. emir.tif

Green to Blue: [48, 24], Red to GreenBlue: [104, 40]

4. harvesters.tif

Green to Blue: [60, 16], Red to GreenBlue: [124, 12]

5. icon.tif

Green to Blue: [40, 16], Red to GreenBlue: [88, 20]

6. lady.tif

Green to Blue: [50, 8], Red to GreenBlue: [112, 12]

7. melons.tif

Green to Blue: [80, 8], Red to GreenBlue: [176, 12]

8. monastery.tif

Green to Blue: [-3, 2], Red to GreenBlue: [3, 3]

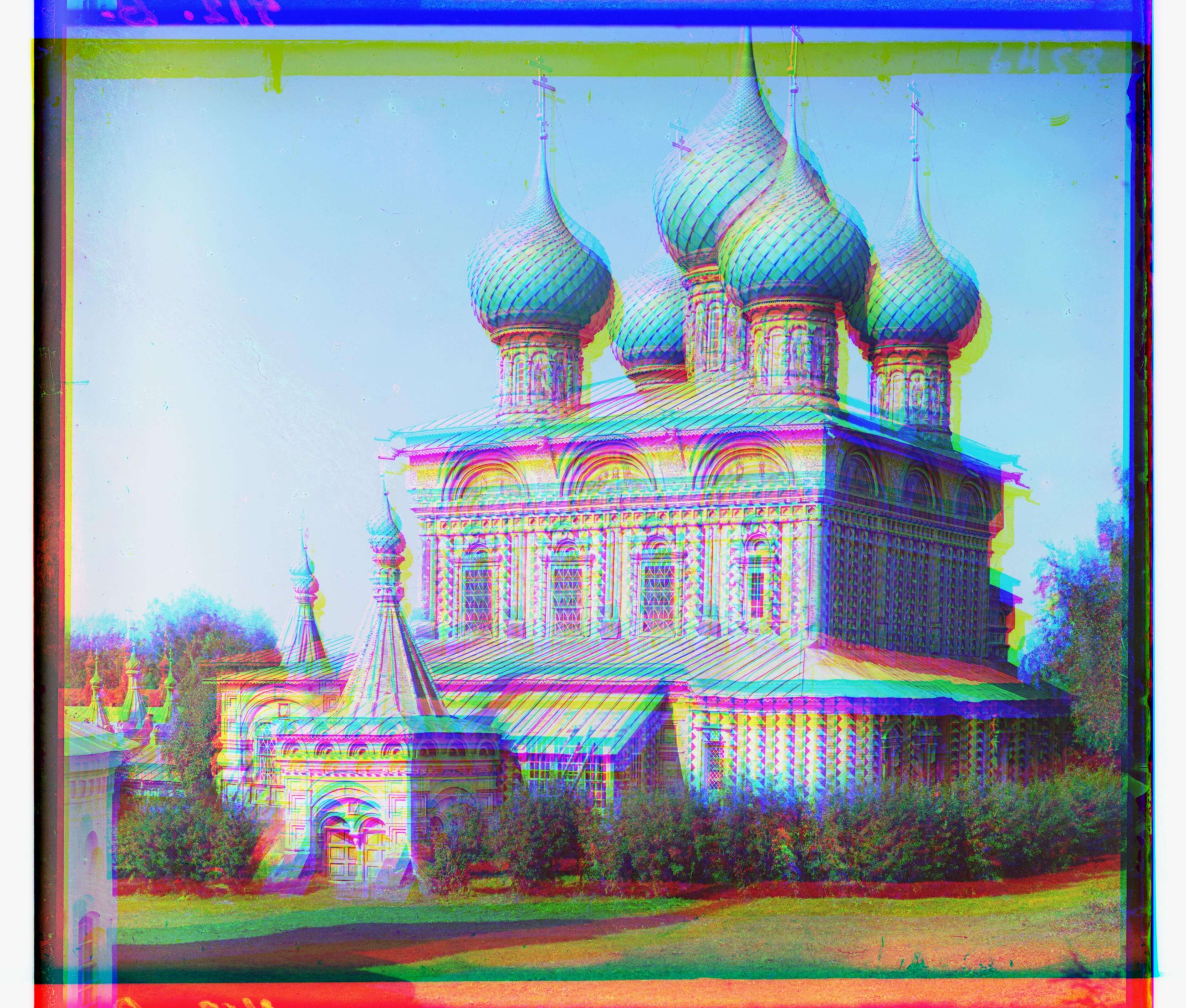

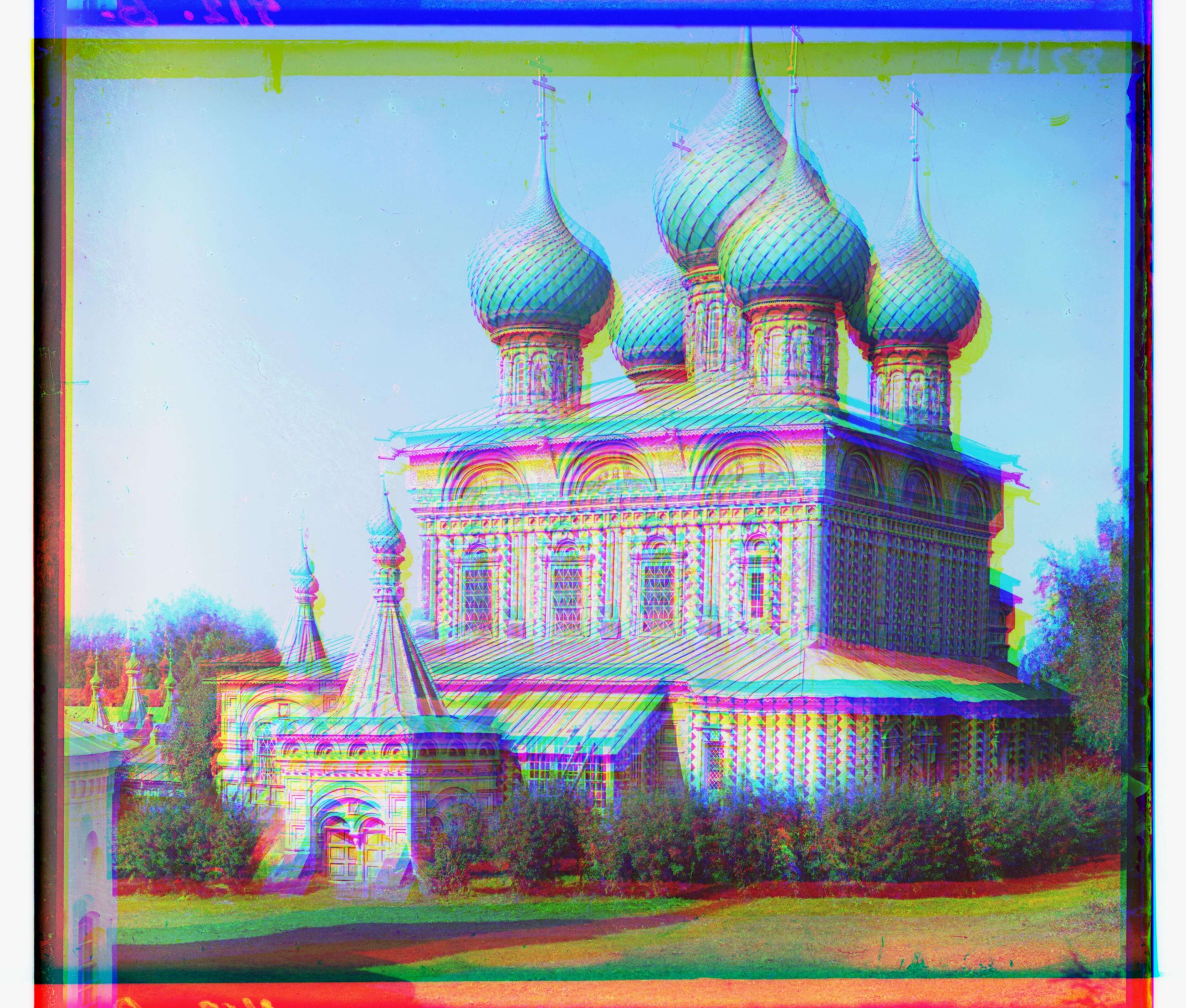

9. onion_church.tif

Green to Blue: [52, 26], Red to GreenBlue: [108, 36]

10. self_portrait.tif

Green to Blue: [80, 28], Red to GreenBlue: [176, 36]

11. three_generations.tif

Green to Blue: [54, 14], Red to GreenBlue: [112, 12]

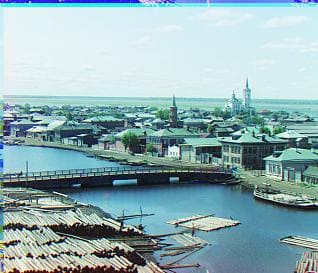

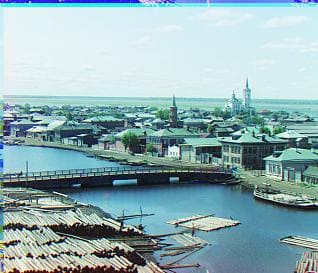

12. tobolsk.tif

Green to Blue: [3, 3], Red to GreenBlue: [7, 4]

13. train.tif

Green to Blue: [42, 4], Red to GreenBlue: [84, 32]

14. workshop.tif

Green to Blue: [52, 0], Red to GreenBlue: [104, -12]

My own images

15. capri.tif

Green to Blue: [40, -12], Red to GreenBlue: [96, -12]

16. church.tif

Green to Blue: [20, 24], Red to GreenBlue: [32, 30]

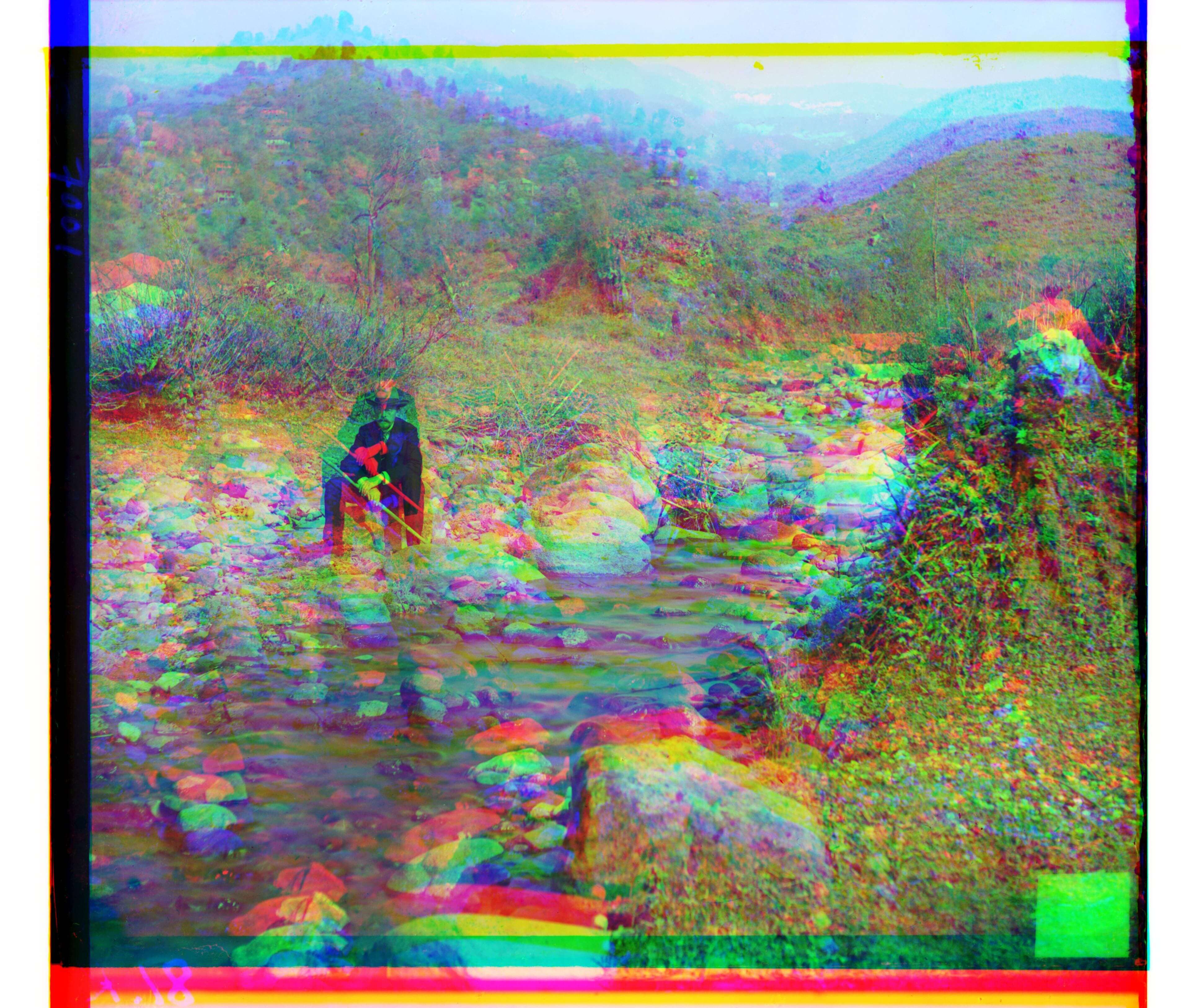

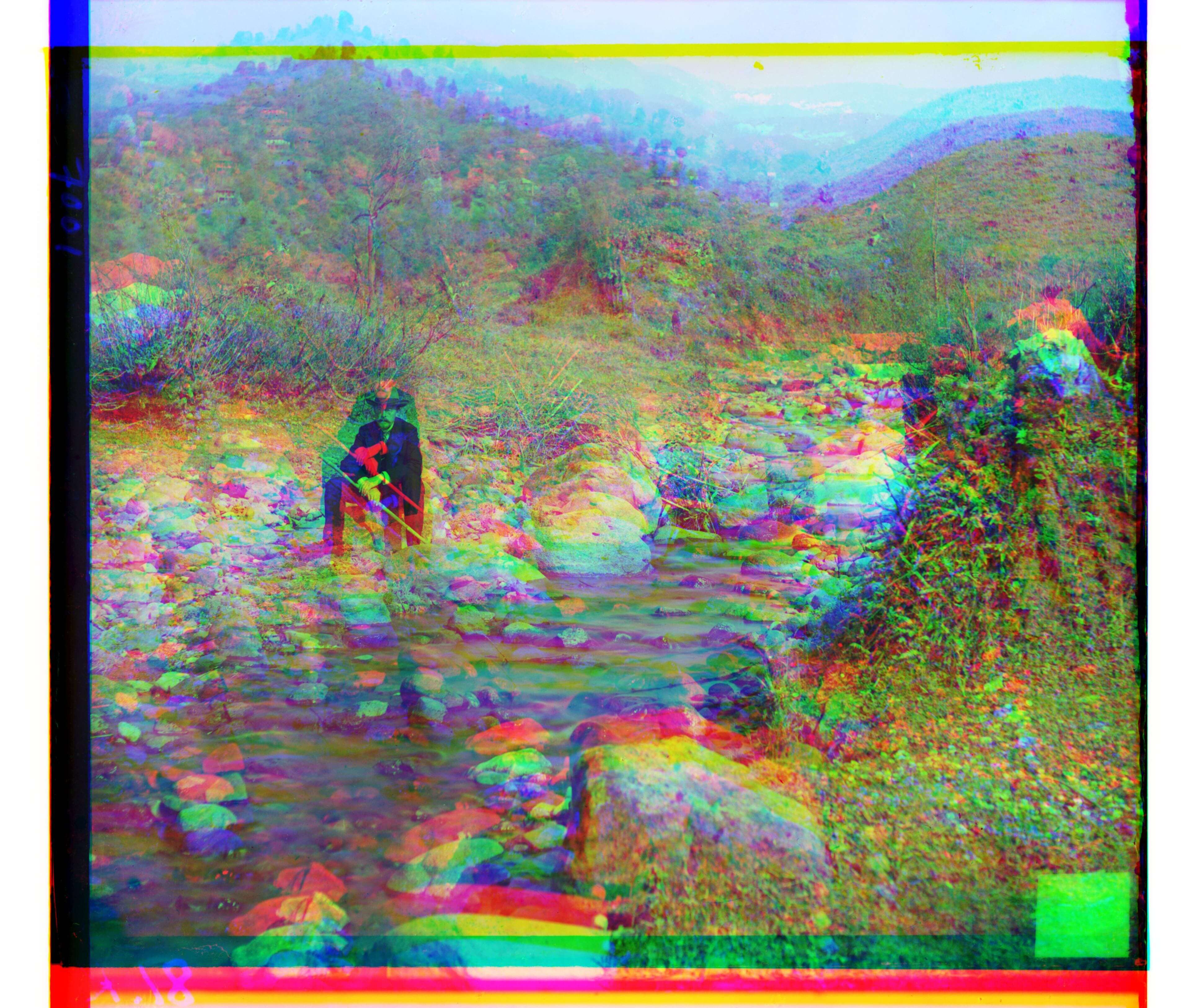

17. river.tif

Green to Blue: [17, 3], Red to GreenBlue: [140, 20]

18. tracks.tif

Green to Blue: [50, 4], Red to GreenBlue: [108, 0]

Pain Points

I didn't realize how much cropping can affect the end result, but in hindsight it makes a lot of sense. I had trouble with a couple of images, like melons and self_portrait, even after cropping, but I fixed the issues by considering a larger range of pixel displacements. My runtime isn't too long (at most a minute per photo), but it could be shortened.

Aside from that, I didn't have much trouble with emir, even though the channels of emir's photo have different brightness values.

Bells and Whistles

For this section, I aim to implement auto cropping and to improve white balance and sharpness (if possible). I also hope to improve alignment by exploring metrics or functions other than SSD, and by performing edge detection.

For edge detection, I used the Canny edge detector from skimage (skimage.feature.canny) and applied it to the images. I experimented with the sigma value (currently 1). There does not seem to be a significant difference between aligning on the Canny edges and my normal displacement search. Some results and their displacement values are below.

emir

Green to Blue: [50, 24], Red to GreenBlue: [108, 40]

three generations

Green to Blue: [56, 12], Red to GreenBlue: [116, 8]

lady

Green to Blue: [56, 10], Red to GreenBlue: [120, 12]

melons

Green to Blue: [80, 8], Red to GreenBlue: [176, 12]
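A sketch of aligning on edge maps instead of raw intensities. `skimage.feature.canny(image, sigma=...)` returns a boolean edge map; the sketch converts it to float and runs the same circular-shift SSD search on the edges. The helper names `edge_map` and `align_on_edges` are hypothetical. The gradient-magnitude fallback is my own addition, only so the sketch runs without scikit-image installed, and it is not a Canny detector.

```python
import numpy as np

try:
    from skimage.feature import canny
except ImportError:
    # Fallback so the sketch still runs: thresholded gradient magnitude, NOT Canny.
    def canny(image, sigma=1.0):
        gy, gx = np.gradient(image.astype(float))
        mag = np.hypot(gx, gy)
        return mag > mag.mean() + mag.std()

def edge_map(channel, sigma=1.0):
    """Boolean edges converted to float so SSD can compare them."""
    return canny(channel, sigma=sigma).astype(float)

def align_on_edges(moving, reference, window=15, sigma=1.0):
    """Find the circular (dy, dx) shift whose edge maps match best by SSD."""
    em, er = edge_map(moving, sigma), edge_map(reference, sigma)
    best_score, best_shift = np.inf, (0, 0)
    for dy in range(-window, window + 1):
        for dx in range(-window, window + 1):
            score = np.sum((np.roll(em, (dy, dx), axis=(0, 1)) - er) ** 2)
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift

# A bright square, and a copy shifted by (4, -3).
img = np.zeros((64, 64))
img[20:40, 20:40] = 1.0
mov = np.roll(img, (4, -3), axis=(0, 1))
print(align_on_edges(mov, img))
```

Edges are computed once per channel and the edge map is shifted, rather than re-running the detector on every shifted image, which keeps the search cost the same as plain SSD.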

In addition, I tried auto cropping and color balance correction using some algorithms written by others that I found online; however, they didn't work well for my use case.

The code I found for those is included in my submission.
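As one simple illustration of color balancing (a standard technique, not one of the online algorithms used in the submission), the gray-world assumption scales each channel so that its mean matches the mean of the whole image. The function name `gray_world` is hypothetical, and the sketch assumes a float RGB image in [0, 1].

```python
import numpy as np

def gray_world(img):
    """Gray-world white balance for an H x W x 3 float image in [0, 1].

    Assumes the scene averages to gray, so each channel is scaled to have
    the same mean as the image overall; results are clipped back to [0, 1].
    """
    channel_means = img.reshape(-1, 3).mean(axis=0)
    gains = channel_means.mean() / channel_means
    return np.clip(img * gains, 0.0, 1.0)

# A flat image with a reddish cast becomes neutral gray.
tinted = np.ones((4, 4, 3)) * np.array([0.6, 0.4, 0.2])
balanced = gray_world(tinted)
print(balanced[0, 0])
```

Gray-world is cheap but can over-correct scenes dominated by one color (for example, a mostly green landscape).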