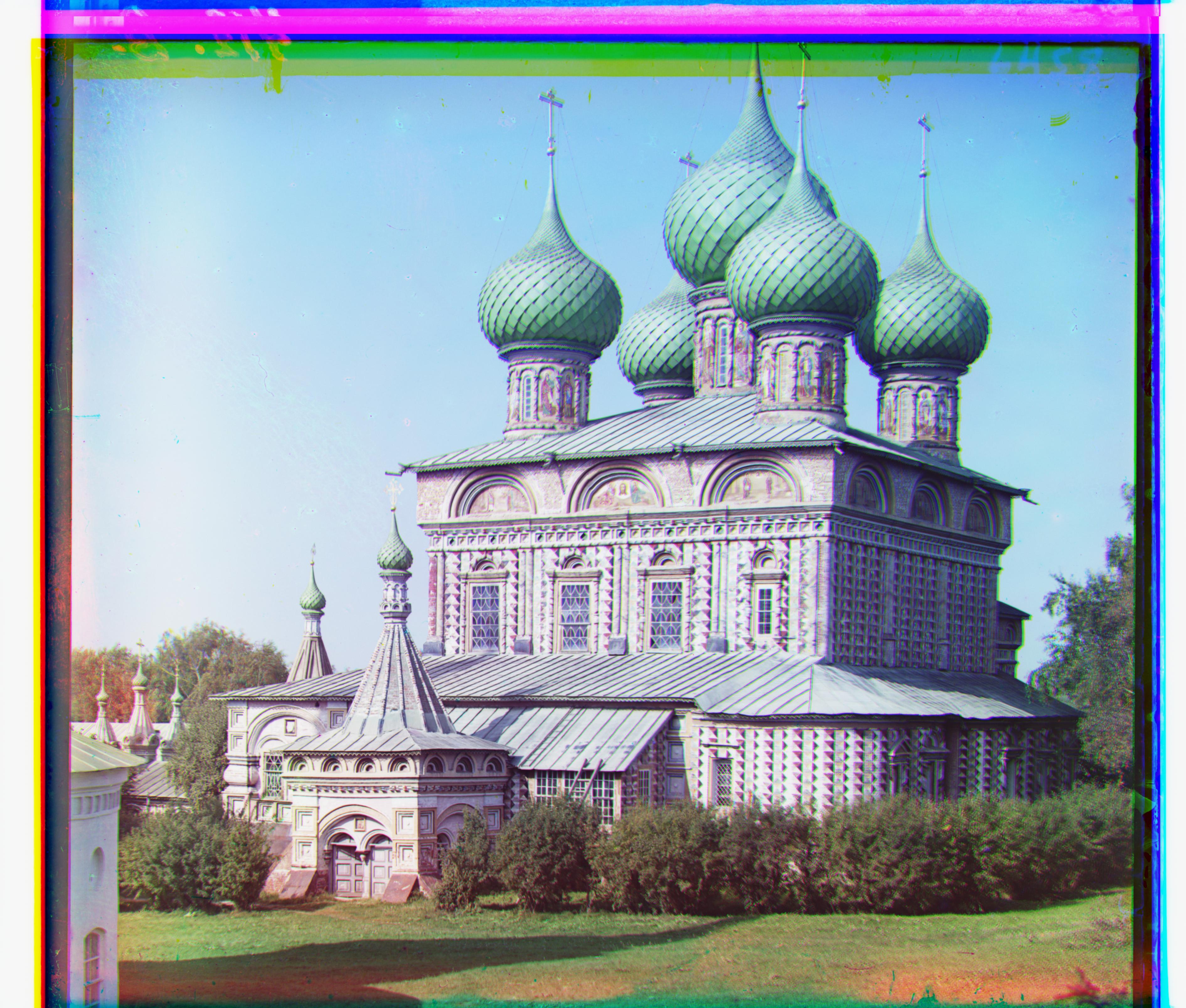

Green Channel Displacement: (3, 36)

Red Channel Displacement: (5, 97)

This project generates color images from the digitized Prokudin-Gorskii collection. For each image, I get the R, G, and B channels by splitting the image evenly into 3 parts. Then, I align the R and G channels to the B channel. In my implementation, for small images, this is done by naively iterating through potential vertical and horizontal displacements and finding the combination that best aligns the channels. I bound the displacements to be in the range [-15, 15) (pixels), so this approach evaluates 900 possible alignments, and the alignment with the highest normalized cross-correlation is returned.

Since larger images may require much larger displacements to be properly aligned, this approach becomes too slow for the larger images in the collection (>10m total pixels). Instead, I search for the proper alignment using an image pyramid. Specifically, I recursively find the best displacement for a scaled-down copy of the channels, then find the best displacement for the full-scaled image by searching within a range of the estimate from the recursive call. I described the scale factor using a parameter alpha, and at each step, I search for a better displacement within +/- 1/alpha pixels from the previous estimate. Initially, I had alpha set to 0.1, but this resulted in a very shallow pyramid (depth=2) that took a long time to evaluate at each step, so instead I opted for a deeper pyramid with an alpha of 0.5, which was considerably faster.

The resulting images were often very blurry and not very well aligned. I ended up fixing this by modifying the normalized cross-correlation metric I used to evaluate displacements. In particular, before computing the normalized cross-correlation, I cropped out the outer 15 pixels from each edge of both channels, so that only the inner pixels of the image contributed to alignment. The original images often had black borders around the edges, which I believe was messing with the alignment of the actual subjects of the images, so this simple workaround produced much better results.

|

Green Channel Displacement: (3, 36) Red Channel Displacement: (5, 97) |

|

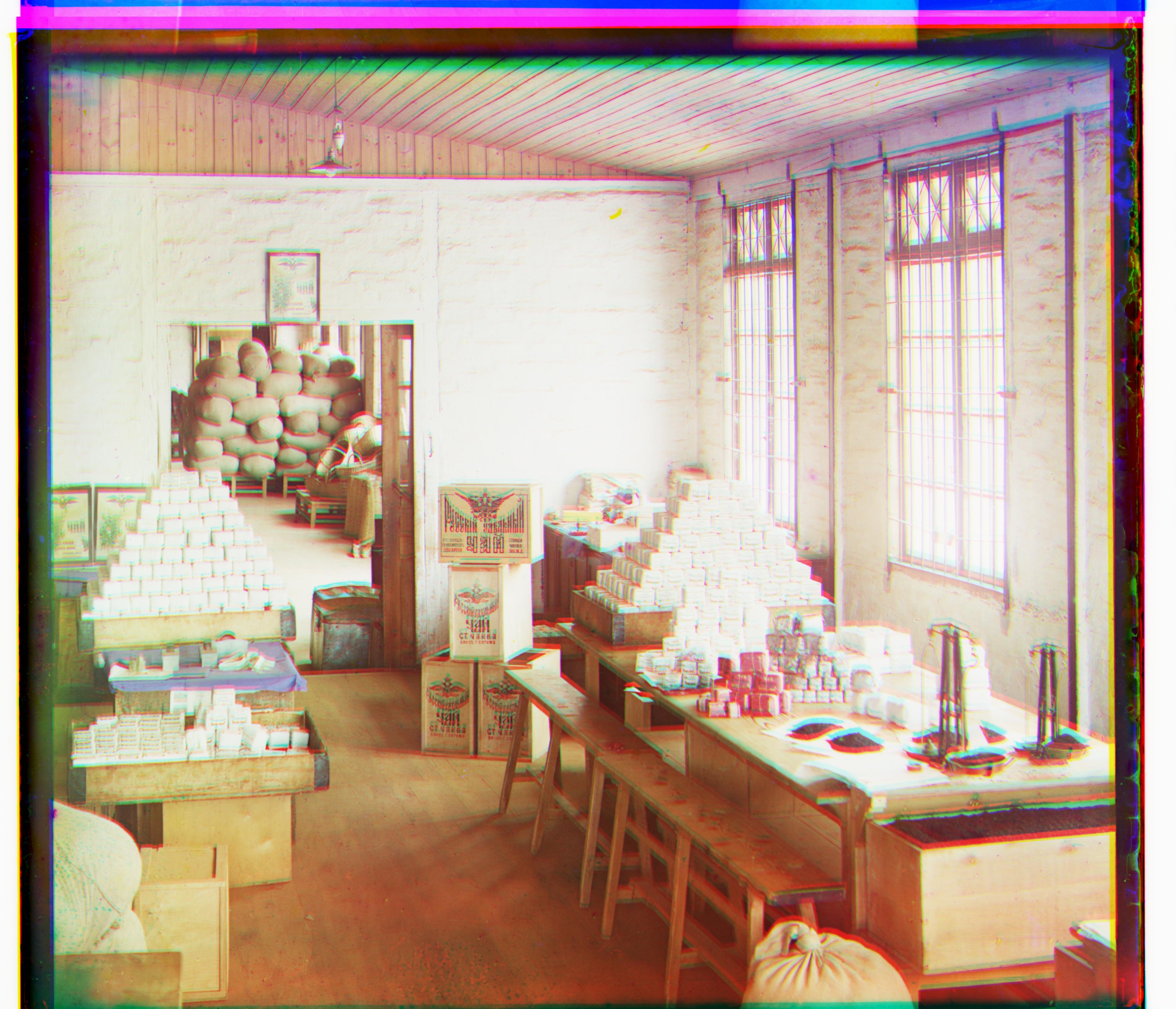

Green Channel Displacement: (2, 5) Red Channel Displacement: (3, 12) |

|

Green Channel Displacement: (14, 42) Red Channel Displacement: (34, 106) |

|

Green Channel Displacement: (10, 59) Red Channel Displacement: (7, 120) |

|

|

Green Channel Displacement: (16, 41) Red Channel Displacement: (22, 90) |

|

Green Channel Displacement: (-6, 57) Red Channel Displacement: (-14, 101) This is the blurriest of my results. It looks like the red channel is not properly aligned. I believe more agressive cropping could have alleviated the issue, but my approach always crops by a constant number of pixels from each edge, and 15 seemed to work well for the other images in the collection. |

|

Green Channel Displacement: (4, 83) Red Channel Displacement: (7, 173) |

|

Green Channel Displacement: (2, -3) Red Channel Displacement: (2, 3) |

|

Green Channel Displacement: (22, 52) Red Channel Displacement: (35, 108) |

|

Green Channel Displacement: (10, 74) Red Channel Displacement: (18, 166) |

|

Green Channel Displacement: (5, 52) Red Channel Displacement: (7, 110) |

|

Green Channel Displacement: (2, 3) Red Channel Displacement: (3, 6) |

|

Green Channel Displacement: (-2, 41) Red Channel Displacement: (10, 91) |

|

Green Channel Displacement: (-5, 53) Red Channel Displacement: (-15, 90) |

Below I also have listed a few other examples from running the algorithm on some other images from the collection.

|

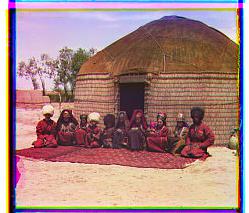

Green Channel Displacement: (-1, 2) Red Channel Displacement: (-1, 5) |

|

Green Channel Displacement: (2, 4) Red Channel Displacement: (3, 8) |

|

Green Channel Displacement: (-3, 2) Red Channel Displacement: (-5, 7) |