Images of the Russian Empire: Colorizing the Prokudin-Gorskii Photo Collection

Arvind Sridhar, CS194-26 Project 1

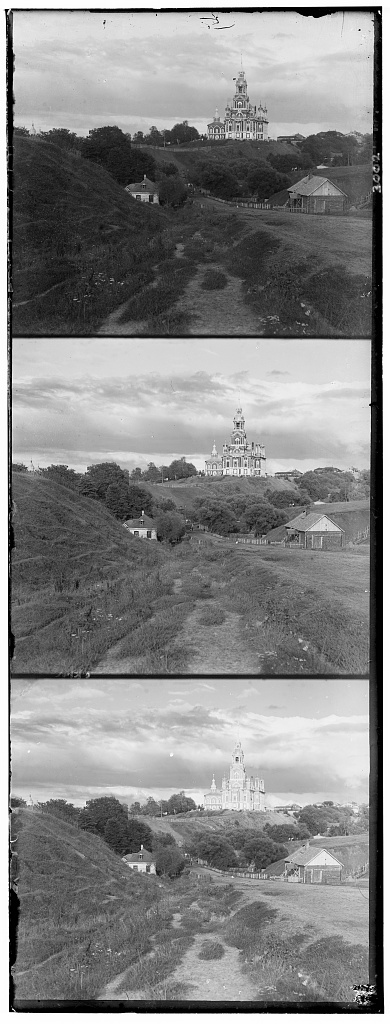

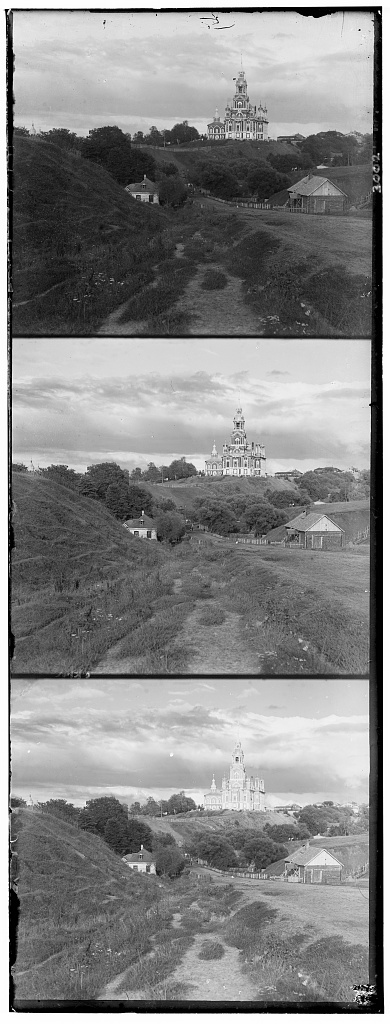

The Russian chemist Sergey Prokudin-Gorskii pioneered color photography in the early 1900s. With permission from the Tsar of Russia, he photographed scenes across the empire, capturing three separate exposures of each scene on glass plates through blue, green, and red filters respectively (as above). Although he never fully realized his vision of combining these exposures into full-color images, his rich collection has allowed later researchers to overlay the channels and reproduce the color images Prokudin-Gorskii set out to capture. This project develops an algorithm that systematically and computationally aligns and overlays the blue, green, and red channel images from the Prokudin-Gorskii collection to produce high-quality color images.

The simplest way to align the channels of an image is to compare their pixel values directly. Because the three channels are slightly misaligned relative to one another, each channel must be shifted into place before the channels are overlaid. To do this, I first searched a window of displacements of up to 16 pixels in each direction (x and y) for each of the green and red channels, using an exhaustive search over 32 × 32 = 1,024 candidate displacements. To score each candidate, I used the negative normalized cross-correlation (NCC) between the displaced green/red channel and the blue channel, which serves as the fixed reference, and chose the displacement that minimized this score (equivalently, maximized the NCC) for each of green and red. Once I had determined the optimal displacements, I overlaid the shifted channels to produce the color image. This worked well for the smaller JPG images in the dataset.
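A minimal sketch of the exhaustive search, assuming NumPy, channels stored as 2-D float arrays of equal shape, and `np.roll` for shifting (which wraps pixels around the edges, one source of the border noise that the cropping described later addresses). The zero-mean form of NCC and the helper names `neg_ncc`, `align_exhaustive`, and `composite` are illustrative assumptions, not the author's code.

```python
import numpy as np


def neg_ncc(a: np.ndarray, b: np.ndarray) -> float:
    """Negative zero-mean normalized cross-correlation; lower means more similar."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    if denom == 0:  # a constant image has no defined correlation
        return 0.0
    return -float(np.sum(a * b) / denom)


def align_exhaustive(channel: np.ndarray, reference: np.ndarray, radius: int = 16) -> tuple:
    """Try every (dy, dx) with dy, dx in range(-radius, radius), i.e. 32 x 32 = 1,024
    candidates for radius 16, and return the shift that best matches the reference."""
    best_score, best_shift = np.inf, (0, 0)
    for dy in range(-radius, radius):
        for dx in range(-radius, radius):
            shifted = np.roll(channel, (dy, dx), axis=(0, 1))
            score = neg_ncc(shifted, reference)
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift


def composite(b, g, r, g_shift, r_shift):
    """Shift green and red onto blue and stack them into an H x W x 3 RGB image."""
    g_aligned = np.roll(g, g_shift, axis=(0, 1))
    r_aligned = np.roll(r, r_shift, axis=(0, 1))
    return np.dstack([r_aligned, g_aligned, b])


# Usage: simulate a misaligned channel and recover the shift that undoes it.
rng = np.random.default_rng(0)
blue = rng.random((64, 64))
green = np.roll(blue, (-5, 3), axis=(0, 1))
print(align_exhaustive(green, blue))  # (5, -3)
```

The returned shift is the one to apply to the green or red channel with `np.roll` so that it lines up with blue; `composite` then performs the overlay.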

For the larger TIF images, however, this approach quickly became impractical: the images are large enough that an exhaustive search is slow, and a displacement window of [-16, 16] is too small to contain the correct displacement. I therefore implemented a pyramid alignment algorithm that finds the optimal displacement on progressively finer downscaled versions of each image. I used 6 pyramid levels, starting with the images scaled down by 1/(2^6), then 1/(2^5), and so on down to 1/(2^0) = 1 (full resolution). At each level, I ran the simple alignment search within a window of ±2 pixels around the displacement found at the previous, coarser level (scaled up by 2, since each level has twice the resolution of the one before). Because the resolution doubles at every level, a fixed ±2 window per level is enough to home in on the optimal displacement, so the number of candidate displacements evaluated grows as O(log n) in the size n of the search space rather than O(n) for an exhaustive search. In short, rather than testing every possible displacement on the full image, the search recursively narrows in on the region that matters. This let me find optimal displacements over a wide search range (upwards of ±128 pixels in each of x and y) in about 64 seconds per large TIF image.
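A minimal sketch of this coarse-to-fine search, assuming NumPy arrays, `np.roll` for shifting, and a zero-mean NCC score. Downsampling here uses simple 2^k × 2^k block averaging, which is an assumption (the write-up does not say how images were rescaled; a library resizer such as `skimage.transform.rescale` would also work), and the coarsest level is assumed to search ±2 pixels around zero. The helpers `downsample`, `search_window`, and `align_pyramid` are hypothetical names.

```python
import numpy as np


def neg_ncc(a: np.ndarray, b: np.ndarray) -> float:
    """Negative zero-mean normalized cross-correlation; lower means more similar."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return 0.0 if denom == 0 else -float(np.sum(a * b) / denom)


def downsample(img: np.ndarray, factor: int) -> np.ndarray:
    """Shrink by an integer factor by averaging factor x factor blocks."""
    h = img.shape[0] // factor * factor
    w = img.shape[1] // factor * factor
    blocks = img[:h, :w].reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))


def search_window(channel, reference, center, radius):
    """Exhaustively test shifts within +/- radius of center; return the best (dy, dx)."""
    cy, cx = center
    best_score, best_shift = np.inf, center
    for dy in range(cy - radius, cy + radius + 1):
        for dx in range(cx - radius, cx + radius + 1):
            score = neg_ncc(np.roll(channel, (dy, dx), axis=(0, 1)), reference)
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift


def align_pyramid(channel, reference, levels=6, radius=2):
    """Search at 1/2**levels scale first, then refine level by level to full resolution."""
    shift = (0, 0)
    for level in range(levels, -1, -1):
        factor = 2 ** level
        small_c = downsample(channel, factor)
        small_r = downsample(reference, factor)
        # A displacement of d pixels at the coarser level is about 2d pixels at this one.
        center = (2 * shift[0], 2 * shift[1])
        shift = search_window(small_c, small_r, center, radius)
    return shift
```

With the default settings each level evaluates (2·2 + 1)² = 25 candidates, so the seven levels (6 down to 0) cost 175 NCC evaluations in total, and only the last of them runs on the full-resolution image.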

I used image cropping to improve the alignment. Because shifting a channel during the displacement search introduces noise at the image borders, I cropped away the borders and evaluated the NCC only on the interior pixels of the displaced green/red channel and the blue channel. I applied the same crop to both channels so that they are compared over exactly the same region, which makes the score more accurate. For simple alignment, I cropped 64 pixels from each border (top, bottom, left, and right). For pyramid alignment, I cropped 256 pixels from each border of the full-resolution image and scaled the crop with the pyramid level, from 4 pixels at level 6 up to 256 pixels at level 0.
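The crop schedule can be sketched as follows, assuming the full-resolution margin is divided by each level's downscale factor 2^level with integer division (an assumption consistent with the 4-to-256-pixel range described). `crop_border`, `margin_for_level`, and `cropped_neg_ncc` are hypothetical helpers; the same crop is applied to both images before scoring.

```python
import numpy as np


def crop_border(img: np.ndarray, margin: int) -> np.ndarray:
    """Remove `margin` pixels from the top, bottom, left, and right."""
    if margin <= 0:  # img[0:-0] would be empty, so handle zero explicitly
        return img
    return img[margin:-margin, margin:-margin]


def margin_for_level(full_margin: int, level: int) -> int:
    """Scale the full-resolution crop to a pyramid level (image shrunk by 2**level)."""
    return full_margin // (2 ** level)


def cropped_neg_ncc(shifted: np.ndarray, reference: np.ndarray, margin: int) -> float:
    """Negative zero-mean NCC computed only on the interior left after cropping both."""
    a = crop_border(shifted, margin)
    b = crop_border(reference, margin)
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return 0.0 if denom == 0 else -float(np.sum(a * b) / denom)


# Crop schedule for a 256-pixel full-resolution crop over levels 6 down to 0.
print([margin_for_level(256, level) for level in range(6, -1, -1)])
# [4, 8, 16, 32, 64, 128, 256]
```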

Results

The following are my results on the dataset provided for this project, with displacements given in pixels relative to the blue channel:

castle.tif

Green displacement: [35, 3], red displacement: [98, 4]

cathedral.jpg

Green displacement: [5, 2], red displacement: [12, 3]

emir.tif

Green displacement: [49, 24], red displacement: [101, -80]

harvesters.tif

Green displacement: [60, 17], red displacement: [124, 13]

icon.tif

Green displacement: [41, 17], red displacement: [89, 23]

lady.tif

Green displacement: [55, 8], red displacement: [117, 11]

melons.tif

Green displacement: [82, 11], red displacement: [178, 13]

monastery.jpg

Green displacement: [-3, 2], red displacement: [3, 2]

onion_church.tif

Green displacement: [51, 26], red displacement: [108, 36]

self_portrait.tif

Green displacement: [79, 29], red displacement: [176, 36]

three_generations.tif

Green displacement: [53, 14], red displacement: [112, 11]

tobolsk.jpg

Green displacement: [3, 3], red displacement: [7, 3]

train.tif

Green displacement: [42, 6], red displacement: [87, 32]

workshop.tif

Green displacement: [53, 0], red displacement: [105, -12]

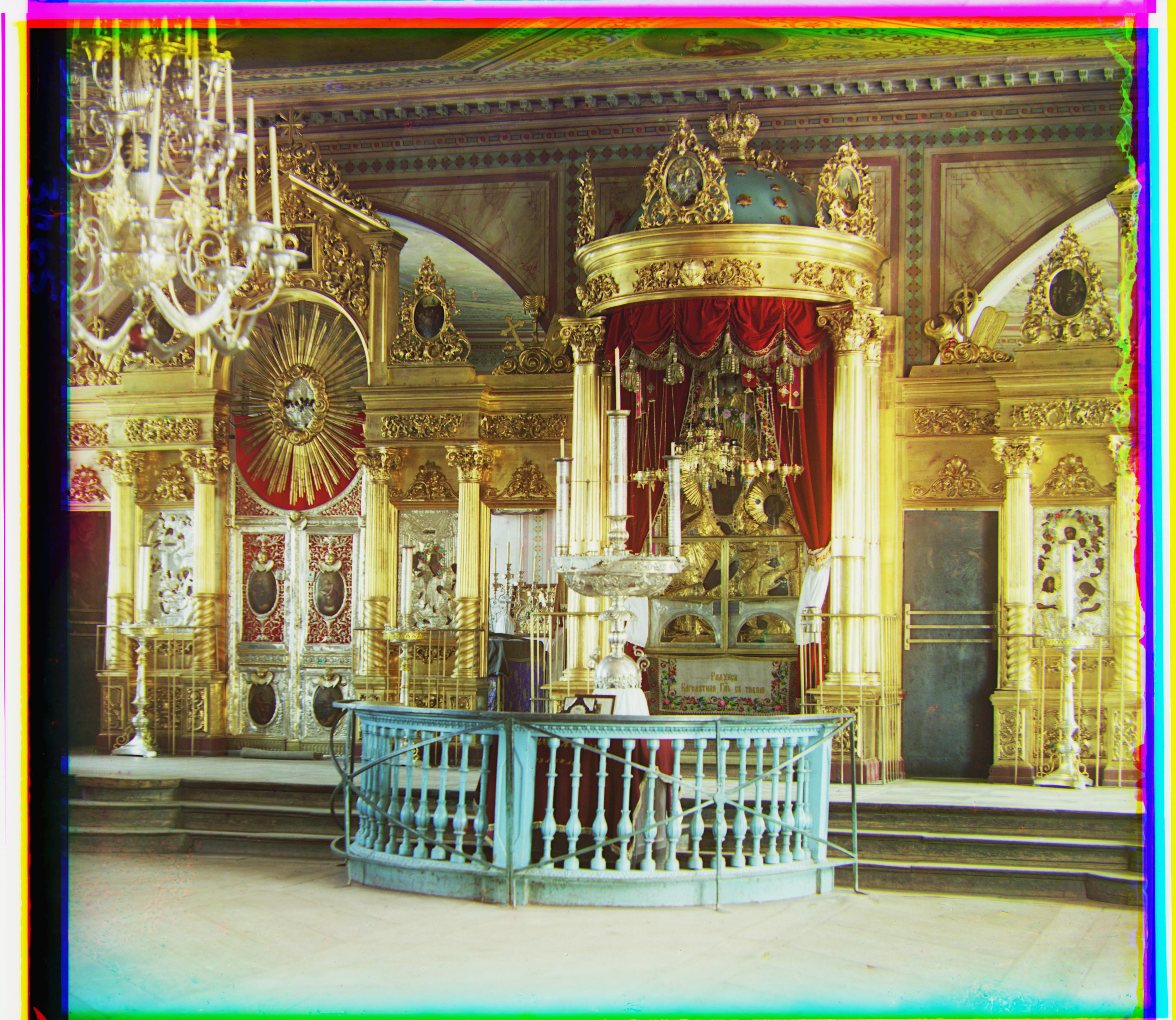

I also ran my algorithm on the following extra images from the Prokudin-Gorskii collection:

cliff.tif

Green displacement: [-3, -2], red displacement: [75, -9]

hut.tif

Green displacement: [57, 31], red displacement: [130, 49]

river.tif

Green displacement: [42, -2], red displacement: [97, 9]

vase.tif

Green displacement: [25, 5], red displacement: [111, 7]

Discussion

I experimented with several different crop values and settled on a base crop of 64 pixels for the JPG images and 256 pixels (scaled per pyramid level) for the TIF images. Almost all of the images (all but emir.tif) aligned reasonably well with these hyperparameter settings, including the extra images from the collection, as can be seen above.

I noticed that emir.tif did not align correctly with this crop level and the NCC metric; instead, it was slightly misaligned. I attributed this to two reasons:

1. The brightness values of the different color channels do not match, so even a correct alignment has a relatively poor NCC score. This mismatch acts as noise and can lead the algorithm to pick an incorrect alignment simply because it happens to have the lowest (noisy) score of all the candidates. The brightness difference is particularly apparent in the emir's dress, where the channels have very different brightness levels (appearing almost inverted).

2. A crop of 256 pixels along each border is too aggressive for this image. Looking closely at emir.tif, the doors along the edges of the image are prominent features, whereas much of the center of the image (apart from the emir himself) is relatively featureless background. Cropping too much therefore removes the very features the algorithm needs to identify the correct displacement. When I reduced the crop to 128 pixels (scaled down accordingly at each pyramid level), the computed displacements became correct and the emir was perfectly aligned.

Below is the picture of the emir, aligned with a smaller crop level (128 pixels) and the NCC difference metric:

As can be seen, with less cropping the border features have more influence on the alignment, and the emir is now perfectly aligned. A final note: I also tried aligning the images by minimizing the sum of squared differences (SSD, the squared L2 distance) and found the results to be equal or inferior to NCC in all cases. This is likely because SSD compares raw intensities, so any global difference in brightness or contrast between channels adds directly to the error, whereas NCC normalizes each channel before comparing them and is therefore insensitive to a global scaling of intensities (and, when the channel means are subtracted, to a global offset as well). Since nearly all of the images in the collection show slight brightness differences between channels, NCC is least affected by this noise and therefore generally performs best.
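To illustrate the brightness argument, the sketch below compares SSD and a zero-mean NCC on a synthetic channel that differs from the reference only by a linear change in exposure. The zero-mean form of NCC is an assumption (the write-up does not say whether channel means were subtracted), and the linear exposure model is a simplification: it does not cover nonlinear differences such as the near-inverted channels in emir.tif.

```python
import numpy as np


def ssd(a: np.ndarray, b: np.ndarray) -> float:
    """Sum of squared differences: grows with any brightness mismatch."""
    return float(np.sum((a - b) ** 2))


def neg_ncc(a: np.ndarray, b: np.ndarray) -> float:
    """Negative zero-mean NCC: unchanged by a positive gain or a constant offset."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return 0.0 if denom == 0 else -float(np.sum(a * b) / denom)


rng = np.random.default_rng(1)
blue = rng.random((32, 32))
# Same scene and perfect alignment, but a dimmer exposure plus an offset (assumed linear model).
green = 0.6 * blue + 0.2

print(ssd(green, blue))      # positive: SSD counts the exposure change as error
print(neg_ncc(green, blue))  # approximately -1.0: NCC reports a perfect match
```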

Bells and Whistles

Automatic contrast stretching

I implemented automatic contrast stretching to improve the perceived sharpness and quality of the images. For each channel of the final color image, I computed the 7th and 93rd percentile pixel intensities and linearly rescaled the channel so that these percentiles map to 0 and 255 respectively, clipping values outside that range. This led to the following results:

Before

After

Before

After

As can be seen, contrast stretching improves the perceived sharpness and quality of both self_portrait.tif and melons.tif, without affecting the channel alignment in any way.
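The percentile-based contrast stretch can be sketched per channel as follows, assuming NumPy, an H × W × 3 input, and an 8-bit output. `contrast_stretch` is a hypothetical helper whose default percentiles are the 7th and 93rd described above. Because it only remaps intensities pixel by pixel, it cannot move any pixel, which is why the channel alignment is unchanged.

```python
import numpy as np


def contrast_stretch(img: np.ndarray, low_pct: float = 7.0, high_pct: float = 93.0) -> np.ndarray:
    """Per channel, linearly map the low/high percentiles to 0/255 and clip the rest."""
    out = np.empty(img.shape, dtype=np.uint8)
    for c in range(img.shape[2]):
        channel = img[..., c].astype(np.float64)
        lo, hi = np.percentile(channel, [low_pct, high_pct])
        span = max(hi - lo, 1e-12)  # guard against division by zero on a flat channel
        scaled = (channel - lo) / span * 255.0
        out[..., c] = np.clip(scaled, 0.0, 255.0).round().astype(np.uint8)
    return out
```

Stretching each channel independently also acts as a mild per-channel white balance; computing one pair of percentiles over all three channels together would instead preserve the original color balance.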