Part 1: Fun with Filters

The first part of this project shows how we can use gradients and convolutions to detect edges and straighten images.

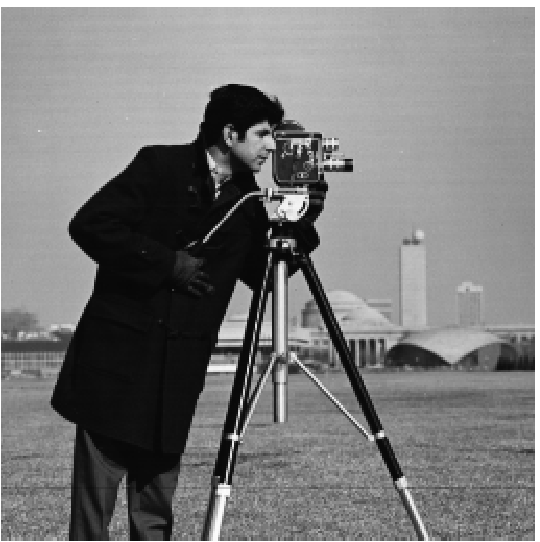

Part 1.1 Finite Difference Operators

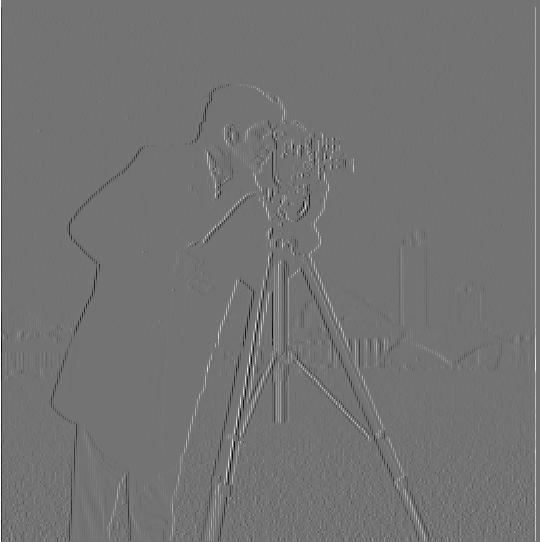

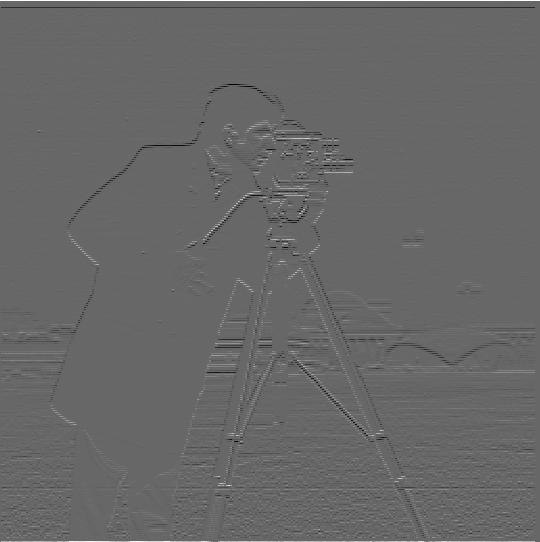

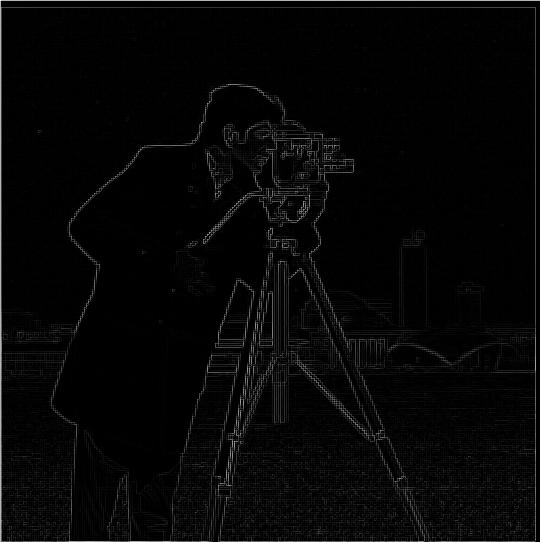

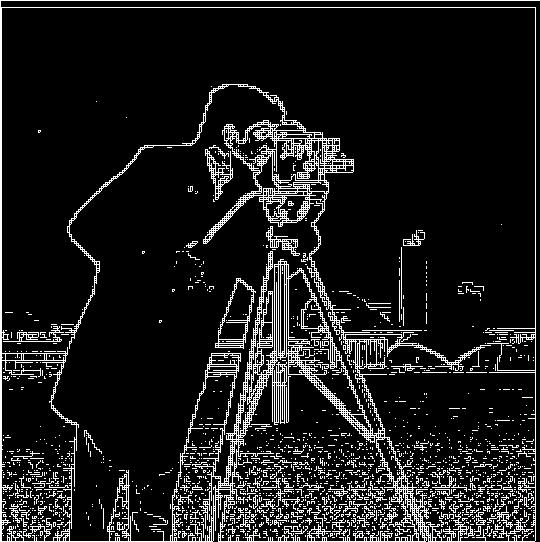

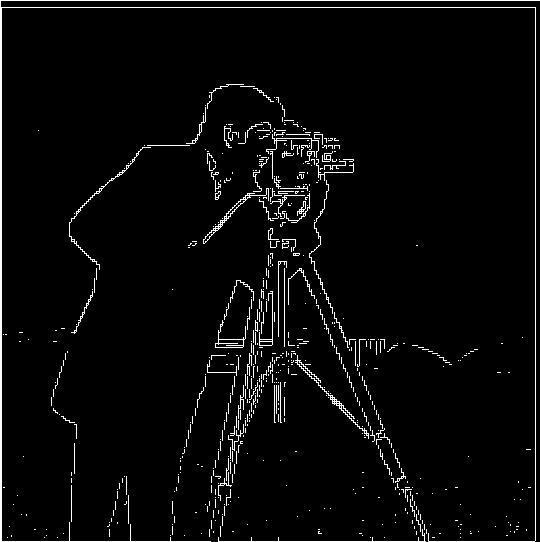

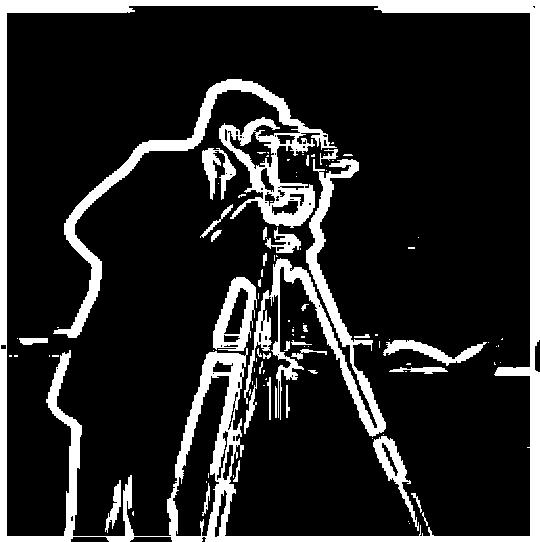

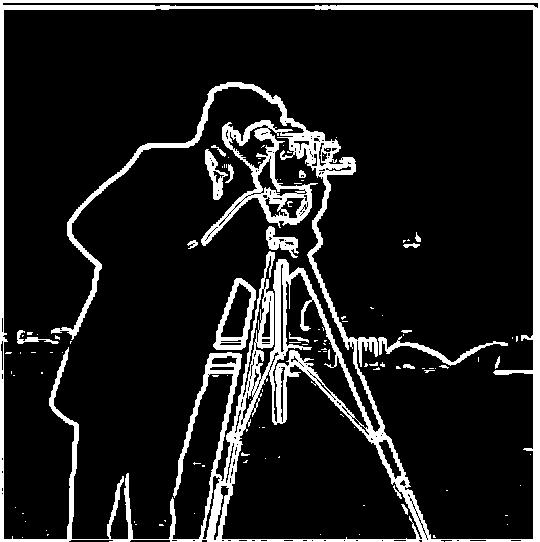

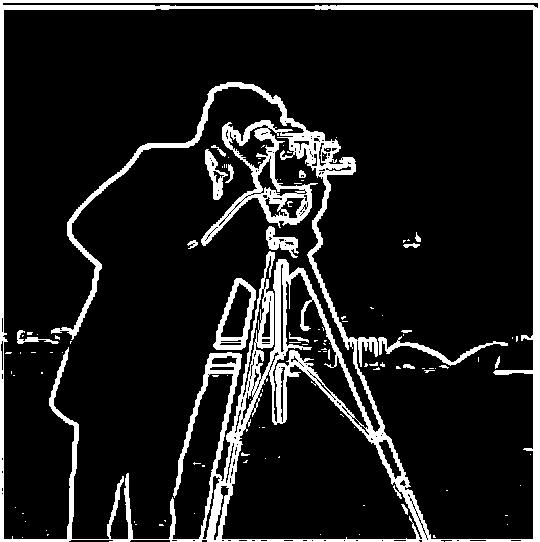

To detect edges, we first compute the gradient of each image, which tells us how quickly and in which direction the intensity changes at each pixel. Below are the original image and its edges.
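This step can be sketched in NumPy as follows. The sketch assumes a grayscale float image and the finite difference operators D_x = [1, −1] and D_y = [1, −1]ᵀ. The helper names and the threshold are illustrative. In practice, `scipy.signal.convolve2d(img, kernel, mode="same")` would usually replace the hand-written `conv2d`. Note that SciPy's default boundary is zero fill, not the edge replication used here.

```python
import numpy as np

# Finite difference operators: D_X responds to left-right intensity changes,
# D_Y to top-bottom changes.
D_X = np.array([[1.0, -1.0]])
D_Y = np.array([[1.0], [-1.0]])


def conv2d(img, kernel):
    """'Same'-size 2-D convolution; borders are padded by repeating edge pixels."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((ph, kh - 1 - ph), (pw, kw - 1 - pw)), mode="edge")
    flipped = kernel[::-1, ::-1]  # convolution flips the kernel
    out = np.zeros(img.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


def edge_map(img, threshold):
    """Return both partial derivatives, the gradient magnitude, and a binary edge image."""
    dx = conv2d(img, D_X)
    dy = conv2d(img, D_Y)
    magnitude = np.sqrt(dx**2 + dy**2)
    return dx, dy, magnitude, magnitude > threshold
```

Consider a toy image whose left half is 0 and right half is 1. Its edge map is true only along the column where the intensity jumps.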

Part 1.2 Derivative of Gaussian (DoG) Filter

Although the finite difference method above gives reasonable edges, the results are rather noisy. We can get cleaner edges by first blurring the image with a Gaussian and then applying the same finite difference operators.
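Convolution is associative, so blurring and then differentiating is equivalent to convolving once with a derivative of Gaussian filter: (img ∗ G) ∗ D_x = img ∗ (G ∗ D_x). The sketch below builds the DoG filters this way. The helper names and sigma are illustrative. The one-step and two-step results agree away from the image border, but they differ slightly near the edges because of padding.

```python
import numpy as np

D_X = np.array([[1.0, -1.0]])
D_Y = np.array([[1.0], [-1.0]])


def gaussian_kernel(sigma):
    """Normalized 2-D Gaussian, built as the outer product of a 1-D Gaussian."""
    radius = int(np.ceil(3 * sigma))
    ax = np.arange(-radius, radius + 1)
    g = np.exp(-(ax**2) / (2 * sigma**2))
    g /= g.sum()
    return np.outer(g, g)


def conv2d(img, kernel, mode="same"):
    """2-D convolution. 'same' pads by repeating edge pixels; 'full' pads with zeros."""
    kh, kw = kernel.shape
    if mode == "full":
        padded = np.pad(img, ((kh - 1, kh - 1), (kw - 1, kw - 1)))
        out_shape = (img.shape[0] + kh - 1, img.shape[1] + kw - 1)
    else:
        ph, pw = kh // 2, kw // 2
        padded = np.pad(img, ((ph, kh - 1 - ph), (pw, kw - 1 - pw)), mode="edge")
        out_shape = img.shape
    flipped = kernel[::-1, ::-1]  # convolution flips the kernel
    out = np.zeros(out_shape)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + out_shape[0], j:j + out_shape[1]]
    return out


def dog_filters(sigma):
    """Derivative of Gaussian filters: G * D_x and G * D_y as single kernels."""
    G = gaussian_kernel(sigma)
    return conv2d(G, D_X, mode="full"), conv2d(G, D_Y, mode="full")
```

Precomputing the DoG kernels means each image only needs one convolution per direction instead of two.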

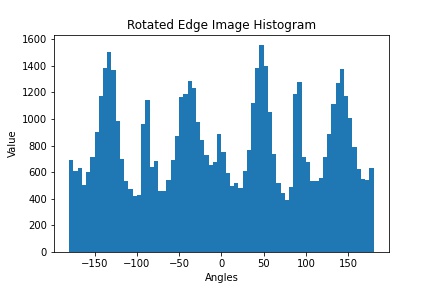

Part 1.3 Image Straightening

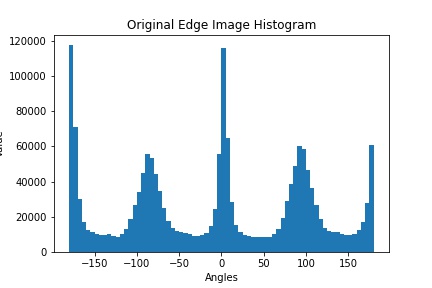

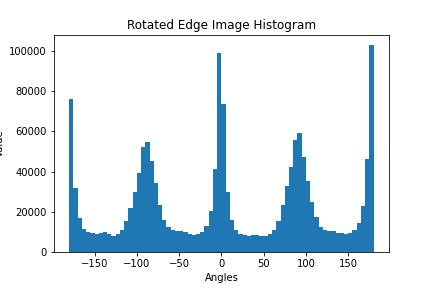

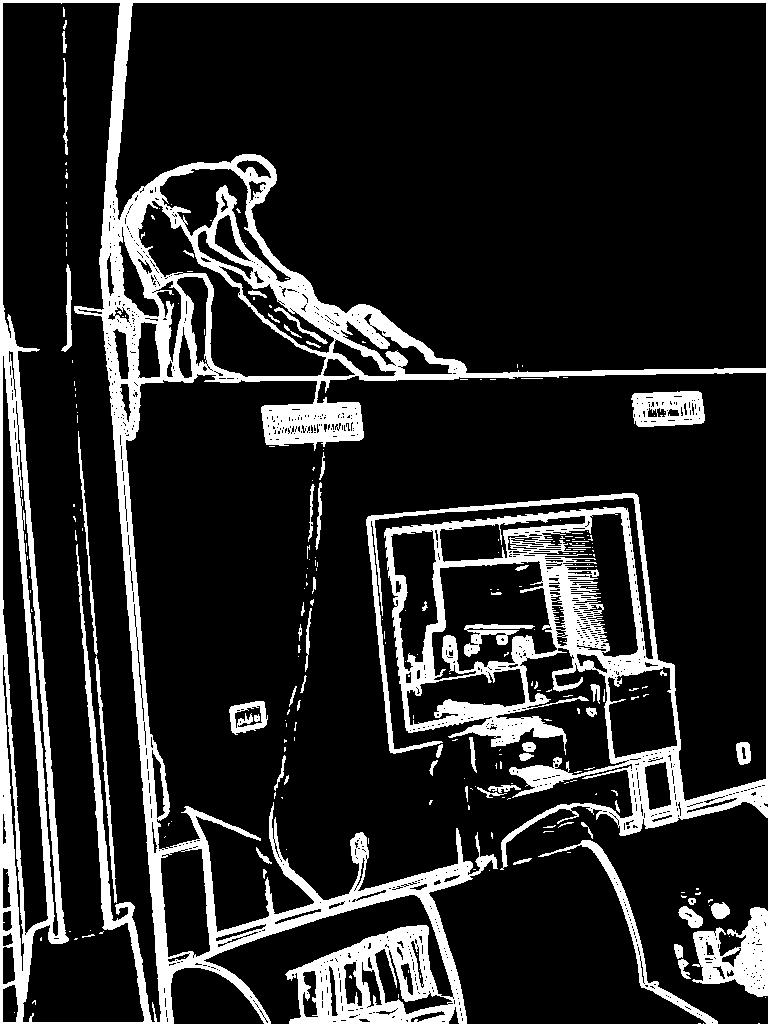

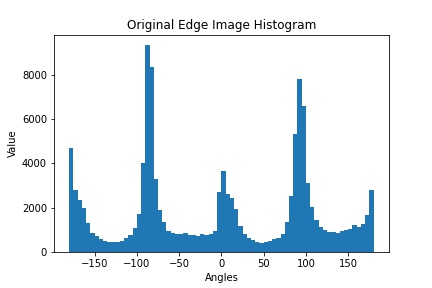

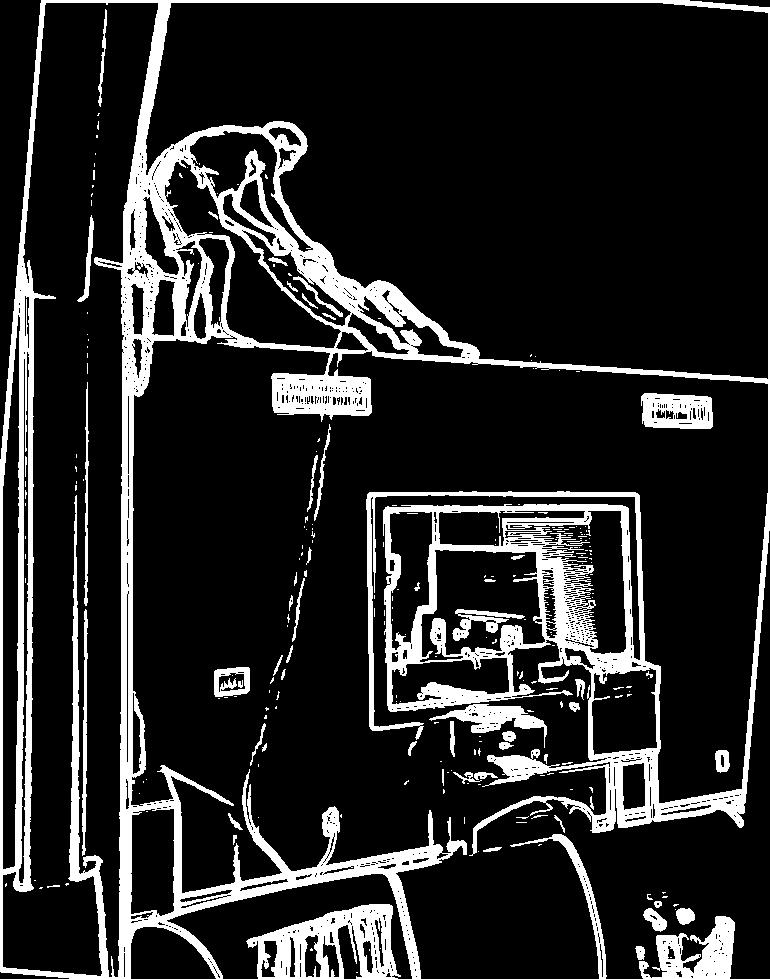

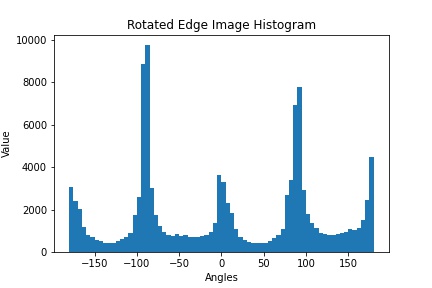

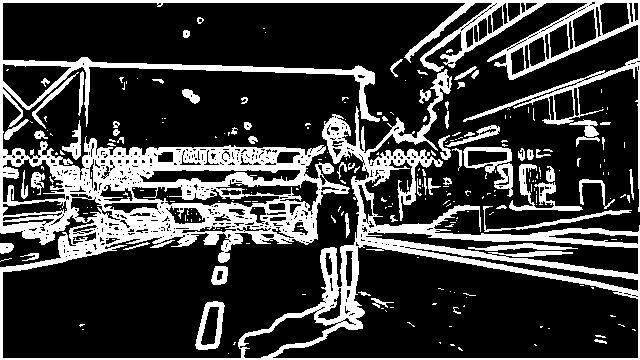

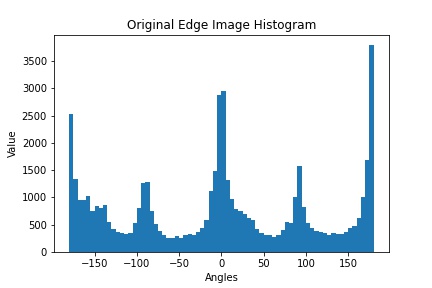

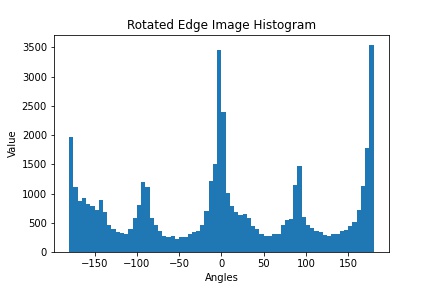

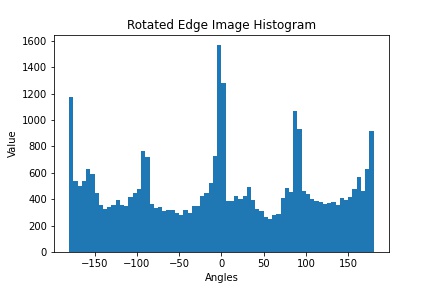

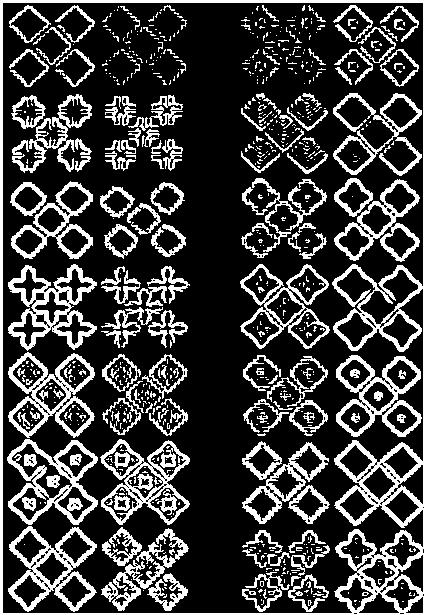

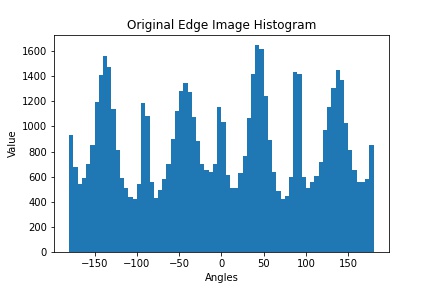

Now that we can detect edges reliably, we can try to straighten images. For many scenes, a straight image maximizes the number of vertical and horizontal edges. In each of the following sections, we display a before and after of the image. Each shows the original image, its edges, and a histogram of the gradient angle at each visible edge pixel. Vertical edges have angles closer to 90 degrees, and horizontal edges have angles closer to 0 or 180 degrees.

Rotated Building
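The straightening search can be sketched as follows, under several assumptions. The input is a grayscale float image. A hand-written `rotate_bilinear` helper stands in for a library rotation. Central differences from `np.gradient` replace the [1, −1] operators. Rather than making a hard count of near-axis angles, `straightness_score` averages cos(4θ) over strong-gradient pixels. This smooth variant equals 1 when every strong edge sits at 0, 90, or 180 degrees and drops to −1 at 45 degrees. It also peaks at the straight orientation instead of plateauing across a tolerance window. Only the central crop is scored, so the border regions filled in by rotation do not count. All names and the ±15° search range are illustrative.

```python
import numpy as np


def rotate_bilinear(img, angle_deg):
    """Rotate a grayscale image about its center with bilinear sampling.

    Out-of-range samples are clamped to the border, so only the central
    region of the result should be trusted.
    """
    height, width = img.shape
    t = np.deg2rad(angle_deg)
    cy, cx = (height - 1) / 2, (width - 1) / 2
    ys, xs = np.mgrid[0:height, 0:width].astype(float)
    # Inverse mapping: for each output pixel, find the input location it samples.
    sx = np.cos(t) * (xs - cx) + np.sin(t) * (ys - cy) + cx
    sy = -np.sin(t) * (xs - cx) + np.cos(t) * (ys - cy) + cy
    sx = np.clip(sx, 0, width - 1.001)
    sy = np.clip(sy, 0, height - 1.001)
    x0, y0 = np.floor(sx).astype(int), np.floor(sy).astype(int)
    fx, fy = sx - x0, sy - y0
    top = img[y0, x0] * (1 - fx) + img[y0, x0 + 1] * fx
    bottom = img[y0 + 1, x0] * (1 - fx) + img[y0 + 1, x0 + 1] * fx
    return top * (1 - fy) + bottom * fy


def straightness_score(img, strong_frac=0.5):
    """Mean of cos(4*theta) over strong-gradient pixels in the central crop.

    cos(4*theta) is 1 for gradient angles of 0, 90, 180, or 270 degrees and -1
    at 45-degree diagonals, so a higher score means more axis-aligned edges.
    """
    h, w = img.shape
    crop = img[h // 4: 3 * h // 4, w // 4: 3 * w // 4]
    gy, gx = np.gradient(crop)  # central differences along rows, then columns
    magnitude = np.hypot(gx, gy)
    strong = magnitude > strong_frac * magnitude.max()
    theta = np.arctan2(gy[strong], gx[strong])
    return float(np.mean(np.cos(4 * theta)))


def straighten(img, angles=np.arange(-15.0, 15.5, 1.0)):
    """Try each candidate rotation and keep the one with the best score."""
    scores = [straightness_score(rotate_bilinear(img, a)) for a in angles]
    best = float(angles[int(np.argmax(scores))])
    return best, rotate_bilinear(img, best)
```

Calling `best, fixed = straighten(img)` returns the chosen angle and the rotated image. To keep the number of rotations small, one can run a coarse search first and then refine with a finer step around `best`.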

Vacuuming a Wall

Joker at the Hospital

Po

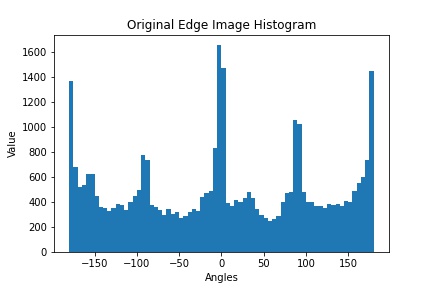

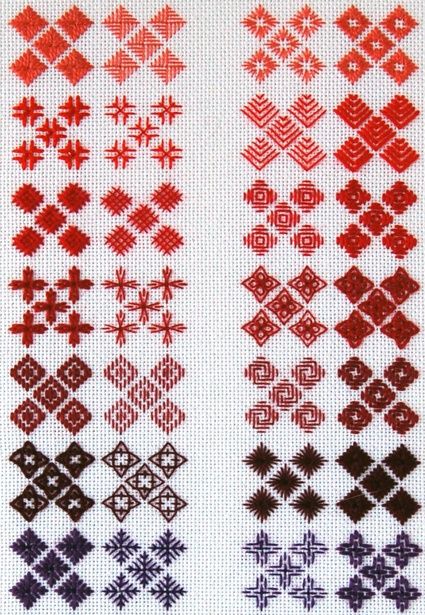

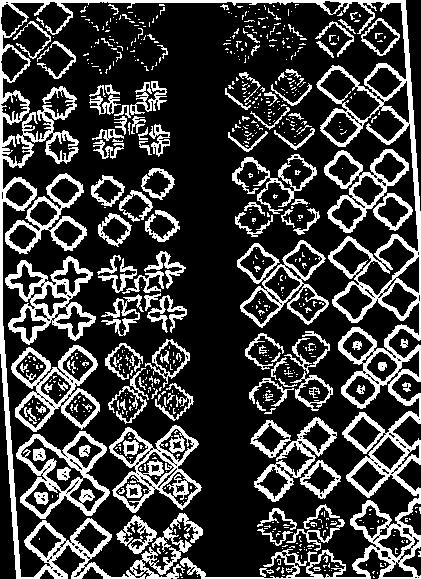

X-Patterns

In the following image, our assumption breaks down. We assume that the straightened image is the one with the most horizontal and vertical edges, but the dominant lines here form diagonal X patterns. As a result, image straightening does not work as well here.

Part 2: Fun with Frequencies

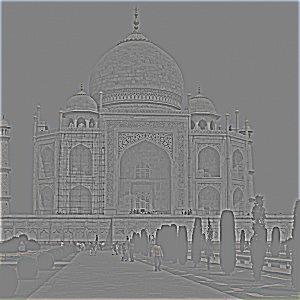

In the previous part, we showed that we could use gradients and edge detectors to find the edges of an image. In this part, we work directly with frequencies so that we can sharpen and combine images.

Part 2.1 Image Sharpening

In this part, we sharpen images. We first compute the low frequencies of the image by low-pass filtering it. Subtracting them from the image leaves only the high frequencies. We then amplify the high frequencies and add them back to the original image. The transformation of each example image is shown below.

Taj Mahal
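The sharpening step above can be sketched as unsharp masking. The sketch assumes float images in [0, 1] and a Gaussian as the low-pass filter. The helper names, sigma, and alpha are illustrative. Since img + α(img − img ∗ G) = img ∗ ((1 + α)δ − αG), the whole operation can also be folded into a single convolution with the kernel from `unsharp_kernel`. The two results match exactly when no clipping occurs.

```python
import numpy as np


def gaussian_kernel(sigma):
    """Normalized 2-D Gaussian, built as the outer product of a 1-D Gaussian."""
    radius = int(np.ceil(3 * sigma))
    ax = np.arange(-radius, radius + 1)
    g = np.exp(-(ax**2) / (2 * sigma**2))
    g /= g.sum()
    return np.outer(g, g)


def conv2d(img, kernel):
    """'Same'-size 2-D convolution; borders are padded by repeating edge pixels."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((ph, kh - 1 - ph), (pw, kw - 1 - pw)), mode="edge")
    flipped = kernel[::-1, ::-1]
    out = np.zeros(img.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


def unsharp_mask(img, sigma=2.0, alpha=1.0):
    """Sharpen by adding back alpha times the high frequencies (img minus its blur)."""
    low = conv2d(img, gaussian_kernel(sigma))  # low frequencies
    high = img - low                           # high frequencies
    return np.clip(img + alpha * high, 0.0, 1.0)


def unsharp_kernel(sigma=2.0, alpha=1.0):
    """Single filter equal to unsharp_mask before clipping: (1 + alpha) * delta - alpha * G."""
    G = gaussian_kernel(sigma)
    delta = np.zeros_like(G)
    delta[G.shape[0] // 2, G.shape[1] // 2] = 1.0  # identity (impulse) kernel
    return (1 + alpha) * delta - alpha * G
```

A larger alpha exaggerates edges more strongly. A larger sigma treats a wider band of frequencies as "detail".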

Joker Burns Money

Part 2.2 Hybrid Images

In this part of the project, we create hybrid images by combining the high frequencies of one image with the low frequencies of another.

Derek and Nutmeg
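The combination can be sketched as follows. It assumes the two images are already aligned, grayscale, float, and the same size. A Gaussian blur serves as the low-pass filter. The high-pass is the image minus its blur. The function name and the two cutoff sigmas are illustrative, not values taken from the figures.

```python
import numpy as np


def gaussian_kernel(sigma):
    """Normalized 2-D Gaussian, built as the outer product of a 1-D Gaussian."""
    radius = int(np.ceil(3 * sigma))
    ax = np.arange(-radius, radius + 1)
    g = np.exp(-(ax**2) / (2 * sigma**2))
    g /= g.sum()
    return np.outer(g, g)


def conv2d(img, kernel):
    """'Same'-size 2-D convolution; borders are padded by repeating edge pixels."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((ph, kh - 1 - ph), (pw, kw - 1 - pw)), mode="edge")
    flipped = kernel[::-1, ::-1]
    out = np.zeros(img.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


def hybrid_image(im_low, im_high, sigma_low, sigma_high):
    """Low frequencies of im_low plus high frequencies of im_high."""
    low = conv2d(im_low, gaussian_kernel(sigma_low))
    high = im_high - conv2d(im_high, gaussian_kernel(sigma_high))
    return low + high
```

`sigma_low` sets how much detail of the low-frequency image survives. `sigma_high` sets how much of the other image counts as high frequency. Both are typically tuned per image pair.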

We start with Derek, a former professor, and Nutmeg, his cat, to see how the hybrid turns out.

Original Images

We then convert the images to the frequency domain.
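A common way to visualize an image in the frequency domain is the log magnitude of its centered 2-D Fourier transform. The sketch below assumes the frequency images are something like this. The function name and the small `eps` (which avoids taking log(0)) are assumptions.

```python
import numpy as np


def log_magnitude_spectrum(img, eps=1e-8):
    """Log magnitude of the centered 2-D FFT; the zero frequency ends up in the middle."""
    spectrum = np.fft.fftshift(np.fft.fft2(img))
    return np.log(np.abs(spectrum) + eps)
```

For color images, one would typically convert to grayscale first. After `fftshift`, low frequencies sit near the center of the plot and high frequencies toward the edges.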

Frequency Images

Final Image:

Steven and Pastor

Original Images

Final Image:

Yoda and Baby Yoda

Original Images

Final Image:

MrBeast and the Most Liked Egg

Original Images

Final Image:

Part 2.3 Gaussian and Laplacian Stacks

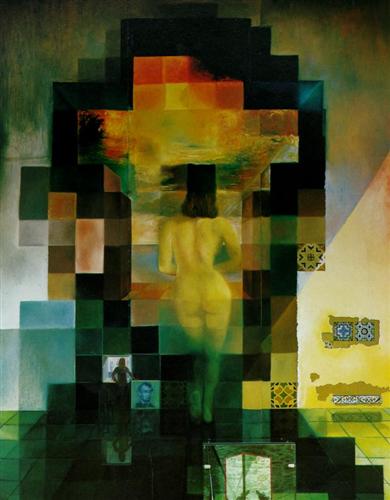

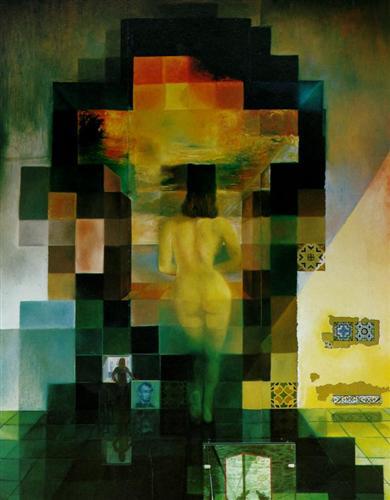

In this part of the project, we build Gaussian and Laplacian stacks. These split an image into frequency bands without downsampling. We use them to examine images that mix high and low frequencies, such as hybrid images. We will be looking through the different layers of each stack.

Lincoln
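The stacks can be sketched as follows. Each Gaussian layer is a blurred copy of the previous one, with no downsampling. Each Laplacian layer is the difference between two consecutive Gaussian layers. The last Gaussian layer is appended as a low-pass residual so that the Laplacian layers sum back to the original image. The helper names, the number of levels, and the fixed sigma per level are illustrative; some implementations double sigma at each level instead.

```python
import numpy as np


def gaussian_kernel(sigma):
    """Normalized 2-D Gaussian, built as the outer product of a 1-D Gaussian."""
    radius = int(np.ceil(3 * sigma))
    ax = np.arange(-radius, radius + 1)
    g = np.exp(-(ax**2) / (2 * sigma**2))
    g /= g.sum()
    return np.outer(g, g)


def conv2d(img, kernel):
    """'Same'-size 2-D convolution; borders are padded by repeating edge pixels."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((ph, kh - 1 - ph), (pw, kw - 1 - pw)), mode="edge")
    flipped = kernel[::-1, ::-1]
    out = np.zeros(img.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


def gaussian_stack(img, levels, sigma):
    """Repeatedly blur without downsampling, so every layer keeps full resolution."""
    kernel = gaussian_kernel(sigma)
    stack = [np.asarray(img, dtype=float)]
    for _ in range(levels - 1):
        stack.append(conv2d(stack[-1], kernel))
    return stack


def laplacian_stack(img, levels, sigma):
    """Band-pass layers G_i - G_{i+1}, plus the last Gaussian layer as a low-pass residual."""
    g = gaussian_stack(img, levels, sigma)
    return [g[i] - g[i + 1] for i in range(levels - 1)] + [g[-1]]
```

Because the differences telescope, `sum(laplacian_stack(img, levels, sigma))` reproduces `img` up to floating-point error. This makes it easy to check that no information is lost.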

Original

Derek and Nutmeg

Let's see what happens when we apply the same Laplacian stack to our hybrid image from above, Derek and Nutmeg.

Original

Part 2.4 Multi-Resolution Images

In this part of the project, we blend two images together using a mask. We first decompose each image into frequency bands with a Laplacian stack. We then combine the two stacks layer by layer through the mask and sum the blended layers to form the result.

Orapple
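The following sketch implements multiresolution blending in the style of Burt and Adelson. It assumes grayscale float images and a float mask in [0, 1], where 1 marks pixels that should come from `im_a`. The mask is blurred with a Gaussian stack at the same levels. This makes the seam wide and soft for low frequencies and narrow for high frequencies. All names, `levels`, and `sigma` are illustrative.

```python
import numpy as np


def gaussian_kernel(sigma):
    """Normalized 2-D Gaussian, built as the outer product of a 1-D Gaussian."""
    radius = int(np.ceil(3 * sigma))
    ax = np.arange(-radius, radius + 1)
    g = np.exp(-(ax**2) / (2 * sigma**2))
    g /= g.sum()
    return np.outer(g, g)


def conv2d(img, kernel):
    """'Same'-size 2-D convolution; borders are padded by repeating edge pixels."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((ph, kh - 1 - ph), (pw, kw - 1 - pw)), mode="edge")
    flipped = kernel[::-1, ::-1]
    out = np.zeros(img.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


def gaussian_stack(img, levels, sigma):
    """Repeatedly blur without downsampling."""
    kernel = gaussian_kernel(sigma)
    stack = [np.asarray(img, dtype=float)]
    for _ in range(levels - 1):
        stack.append(conv2d(stack[-1], kernel))
    return stack


def laplacian_stack(img, levels, sigma):
    """Band-pass layers plus the final low-pass residual; the layers sum to img."""
    g = gaussian_stack(img, levels, sigma)
    return [g[i] - g[i + 1] for i in range(levels - 1)] + [g[-1]]


def blend(im_a, im_b, mask, levels=5, sigma=2.0):
    """Blend each frequency band separately, weighting by a progressively blurrier mask."""
    la = laplacian_stack(im_a, levels, sigma)
    lb = laplacian_stack(im_b, levels, sigma)
    gm = gaussian_stack(mask, levels, sigma)
    return sum(m * a + (1 - m) * b for a, b, m in zip(la, lb, gm))
```

For the Orapple, the mask would be 1 on one half and 0 on the other. For color images, the same function can be applied to each channel.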

Original Images and Mask

Burning New York

Original Images and Mask

NYC Laplacian Stack

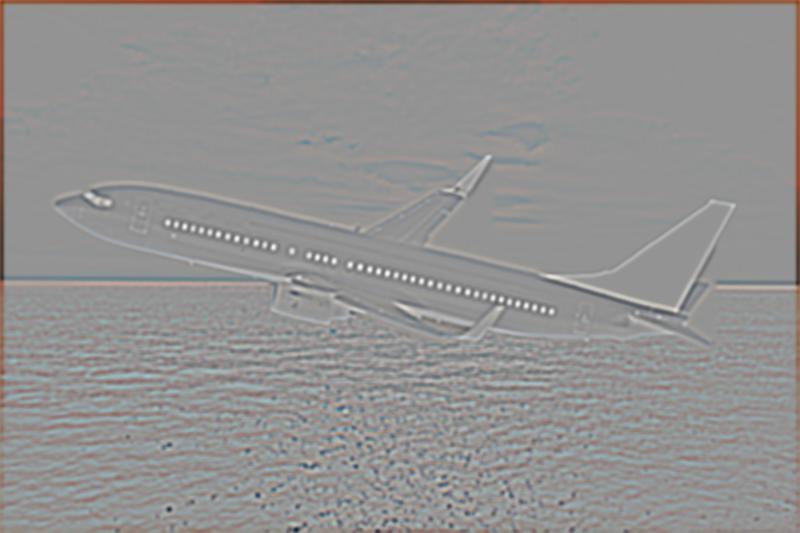

Plane over Ocean

Original Images and Mask

Plane and Ocean Laplacian Stack