Project 2 - Fun with Filters and Frequencies!

by Pauline Hidalgo

Part 1.1

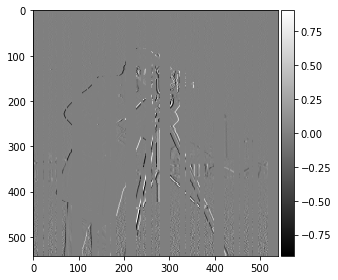

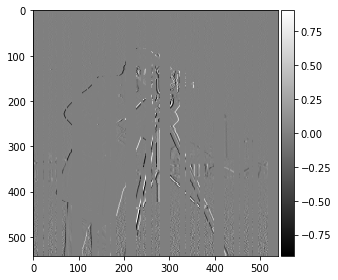

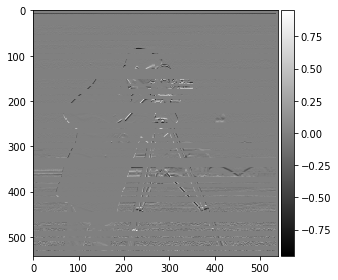

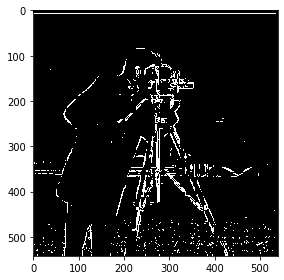

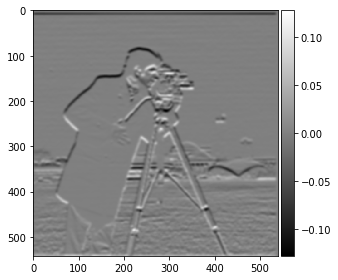

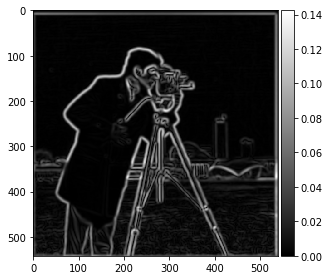

I computed the partial x and y derivatives by convolving the cameraman image with D_x = [1, -1] and D_y = the transpose of D_x, respectively. The gradient magnitude is the square root of the sum of the squared partial derivatives, i.e. sqrt(partial_x^2 + partial_y^2). Given the amount of noise in the image, it was difficult to find a threshold that removed most of the noise while preserving the edges. After some experimentation, I settled on 0.15, since higher thresholds began to remove the man's silhouette; unfortunately, some noise from the grassy area remains.
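The steps described above can be sketched as follows. This is a minimal sketch, not the code behind the results shown: it assumes a grayscale float image scaled to [0, 1] and uses `scipy.signal.convolve2d`; the function name `gradient_edges`, the symmetric boundary handling, and the synthetic step image are illustrative assumptions.

```python
import numpy as np
from scipy.signal import convolve2d


def gradient_edges(image, threshold=0.15):
    """Return (dx, dy, magnitude, edges) for a grayscale float image."""
    d_x = np.array([[1.0, -1.0]])  # finite difference along x (columns)
    d_y = d_x.T                    # finite difference along y (rows)
    # boundary="symm" is an assumption; it avoids false edges at the border.
    dx = convolve2d(image, d_x, mode="same", boundary="symm")
    dy = convolve2d(image, d_y, mode="same", boundary="symm")
    magnitude = np.sqrt(dx**2 + dy**2)
    edges = magnitude > threshold  # binarize the gradient magnitude
    return dx, dy, magnitude, edges


# Synthetic example (hypothetical data): a single vertical step edge.
step = np.zeros((8, 8))
step[:, 4:] = 1.0
dx, dy, mag, edges = gradient_edges(step)
```

On the step image, only the column where the intensity jumps survives the threshold, which is the behavior the binarized edge image relies on.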

Panels: partial derivative w.r.t. x, partial derivative w.r.t. y, gradient magnitude, binarized gradient magnitude (threshold .15)

Part 1.2

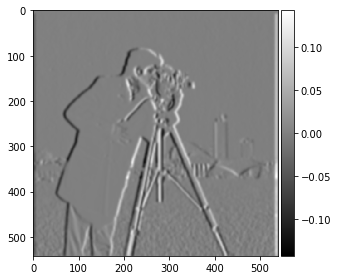

In this section, I first convolved the cameraman image with a 2d gaussian, which was created by taking the outer product of a 1d gaussian with its transpose. I set sigma = 2.5 to sufficiently blur the image and kernel size = 5*sigma so that the gaussian isn't cut off. The edge images are now much less noisy because the gaussian blur removed the noise from the grass, which also made the image much easier to binarize. The edges of the man, the camera stand, and even the background are much less choppy, and slightly thicker as well, because the gaussian spreads each edge out by weighting adjacent pixel values.
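A minimal sketch of the kernel construction and blur described above. The helper names `gaussian_kernel_2d` and `blur` are hypothetical, and rounding 5*sigma up to the next odd integer (13 for sigma = 2.5) is an assumption made here so that the kernel has a center pixel.

```python
import numpy as np
from scipy.signal import convolve2d


def gaussian_kernel_2d(sigma, size=None):
    """2D gaussian built as the outer product of a normalized 1D gaussian."""
    if size is None:
        size = int(np.ceil(5 * sigma))
        size += 1 - size % 2  # assumption: force an odd kernel size
    ax = np.arange(size) - (size - 1) / 2.0
    g1d = np.exp(-ax**2 / (2 * sigma**2))
    g1d /= g1d.sum()          # normalize so the blur preserves brightness
    return np.outer(g1d, g1d)


def blur(image, sigma=2.5):
    """Blur a grayscale image with the 2D gaussian kernel."""
    kernel = gaussian_kernel_2d(sigma)
    return convolve2d(image, kernel, mode="same", boundary="symm")


kernel = gaussian_kernel_2d(2.5)
noisy = np.random.default_rng(0).random((64, 64))  # stand-in for a noisy image
smooth = blur(noisy)
```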

Panels: partial derivative w.r.t. x, partial derivative w.r.t. y, gradient magnitude, binary gradient magnitude (threshold .05)

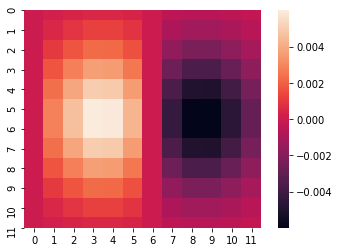

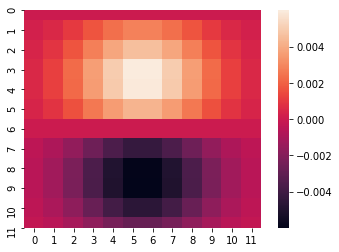

Instead of convolving the image with the gaussian and then again with the finite difference operators, we can convolve the original image once with the partial derivatives of the gaussian (the gaussian convolved with D_x and D_y). Because convolution is associative, this gives the same result.
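The equivalence can be checked numerically. The sketch below is illustrative only: it uses a random array as a stand-in image and `scipy.signal.convolve2d` in its default full, zero-padded mode, where associativity holds up to floating-point error. With `mode="same"` or other boundary options, the two results can differ slightly near the borders.

```python
import numpy as np
from scipy.signal import convolve2d

rng = np.random.default_rng(0)
image = rng.random((32, 32))  # stand-in for the cameraman image

# 13x13 gaussian with sigma = 2.5, built as an outer product.
ax = np.arange(13) - 6
g1d = np.exp(-ax**2 / (2 * 2.5**2))
g1d /= g1d.sum()
gauss = np.outer(g1d, g1d)
d_x = np.array([[1.0, -1.0]])

# Two convolutions: blur first, then take the finite difference.
two_step = convolve2d(convolve2d(image, gauss), d_x)

# One convolution: precompute the derivative-of-gaussian filter.
dog_x = convolve2d(gauss, d_x)
one_step = convolve2d(image, dog_x)

max_diff = np.abs(two_step - one_step).max()
```

Precomputing the derivative-of-gaussian filter means the full-size image is only convolved once per direction.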

Panels: partial derivative of gaussian w.r.t. x, partial derivative of gaussian w.r.t. y, gradient magnitude using derivatives of gaussian

Part 1.3

Implementation details:

- I used the derivatives of the gaussian from part 1.2, which gave cleaner edges and better results.

- I tested 10 angles evenly spaced between -5 and 5 degrees.

- I ensured every rotated image had the same shape as the original by setting the reshape kwarg, and cropped 20% of the pixels from each side before counting the vertical and horizontal edge angles. Note that the images displayed below are uncropped.
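The search described in the list above can be sketched roughly as follows. This is a sketch under stated assumptions, not the original implementation: it uses `scipy.ndimage.rotate` with `reshape=False` and `scipy.ndimage.gaussian_filter` with a derivative order as a stand-in for the derivative-of-gaussian filters from part 1.2. The names `count_aligned_edges` and `straighten`, the relative edge threshold, and the 1 degree tolerance are all hypothetical choices.

```python
import numpy as np
from scipy import ndimage


def count_aligned_edges(image, sigma=2.0, crop=0.2, tol_deg=1.0):
    """Count strong edge pixels whose gradient is nearly horizontal or vertical."""
    # Derivative-of-gaussian responses along x (axis 1) and y (axis 0).
    dx = ndimage.gaussian_filter(image, sigma, order=(0, 1))
    dy = ndimage.gaussian_filter(image, sigma, order=(1, 0))
    # Ignore a 20% border on each side, where rotation leaves empty corners.
    h, w = image.shape
    dh, dw = int(h * crop), int(w * crop)
    dx, dy = dx[dh:h - dh, dw:w - dw], dy[dh:h - dh, dw:w - dw]
    magnitude = np.hypot(dx, dy)
    strong = magnitude > 0.25 * magnitude.max()  # hypothetical edge threshold
    angles = np.degrees(np.arctan2(dy[strong], dx[strong]))
    # Distance (in degrees) to the nearest multiple of 90.
    deviation = np.abs((angles + 45.0) % 90.0 - 45.0)
    return int(np.count_nonzero(deviation < tol_deg))


def straighten(image, candidates):
    """Return the candidate angle with the most aligned edges, and the rotated image."""
    scores = [
        count_aligned_edges(ndimage.rotate(image, angle, reshape=False))
        for angle in candidates
    ]
    best = candidates[int(np.argmax(scores))]
    return best, ndimage.rotate(image, best, reshape=False)


candidates = np.linspace(-5, 5, 10)  # 10 evenly spaced angles, in degrees
```

A call such as `straighten(gray_image, candidates)` would return the chosen angle and the rotated image, where `gray_image` is a hypothetical 2D float array.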

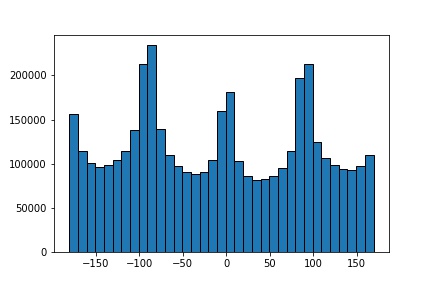

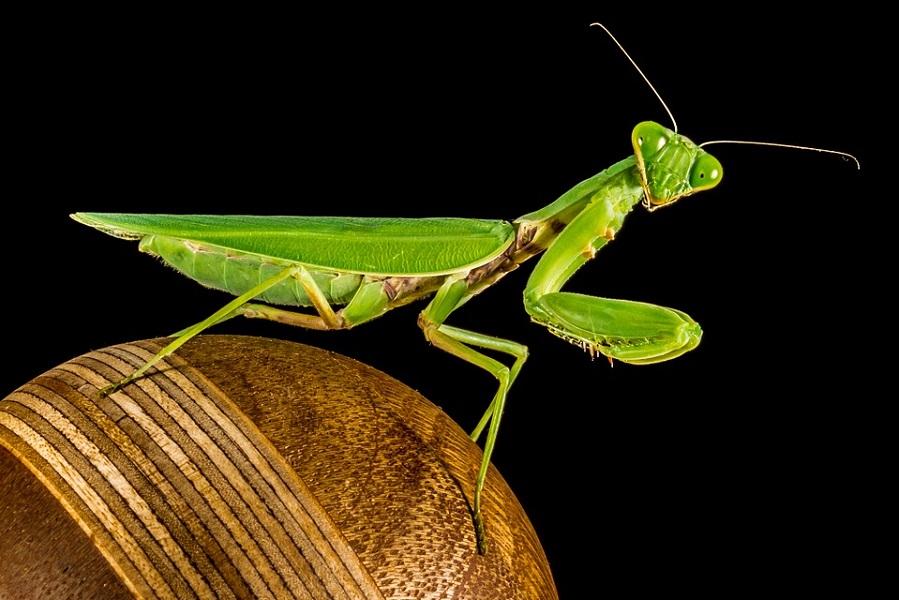

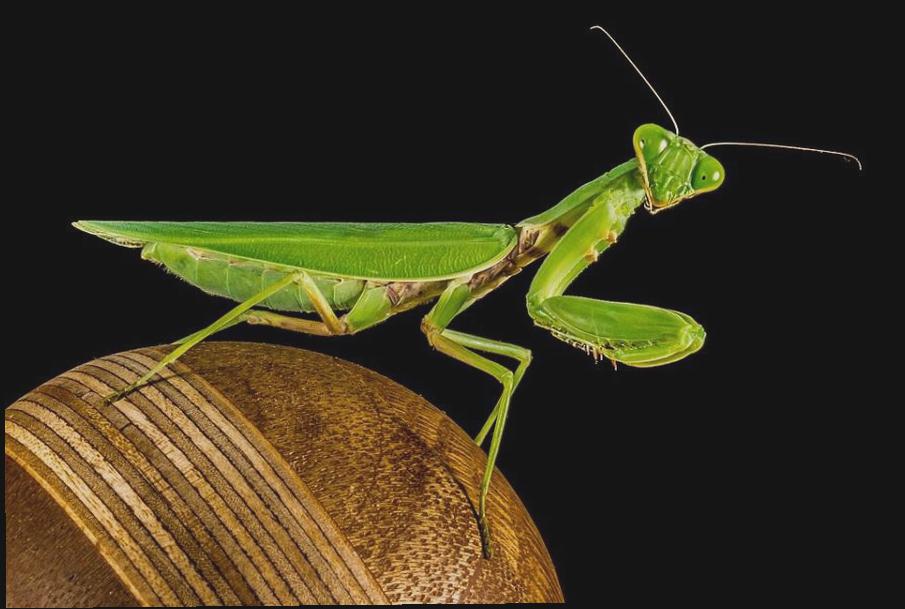

The mantis image serves as my failure case: it should be rotated counterclockwise so that the mantis appears to be standing on flat ground. However, little adjustment is made, since the mantis' back is already horizontal and the ideally straightened image wouldn't have many vertical or horizontal edges either.

original

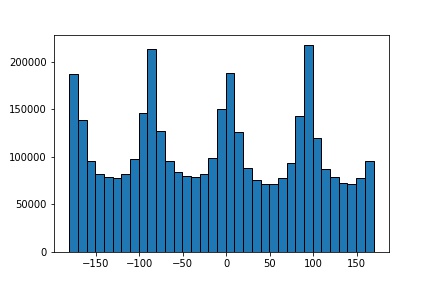

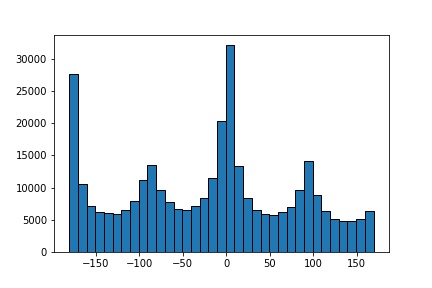

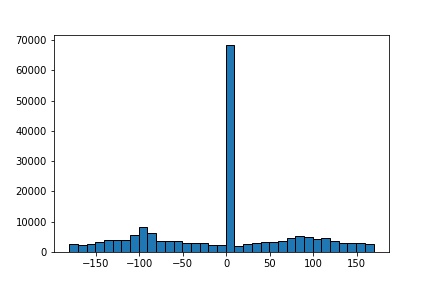

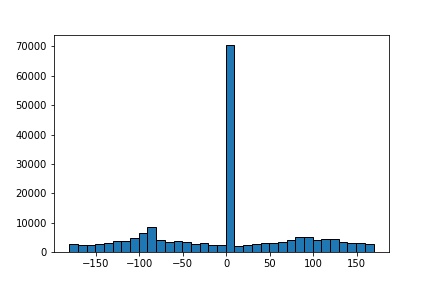

original angle histogram

straightened

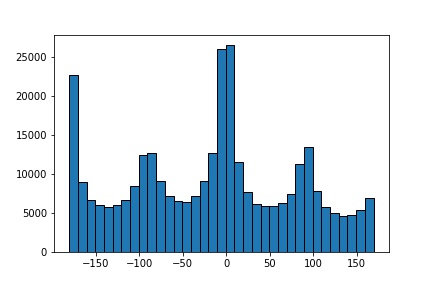

straightened angle histogram

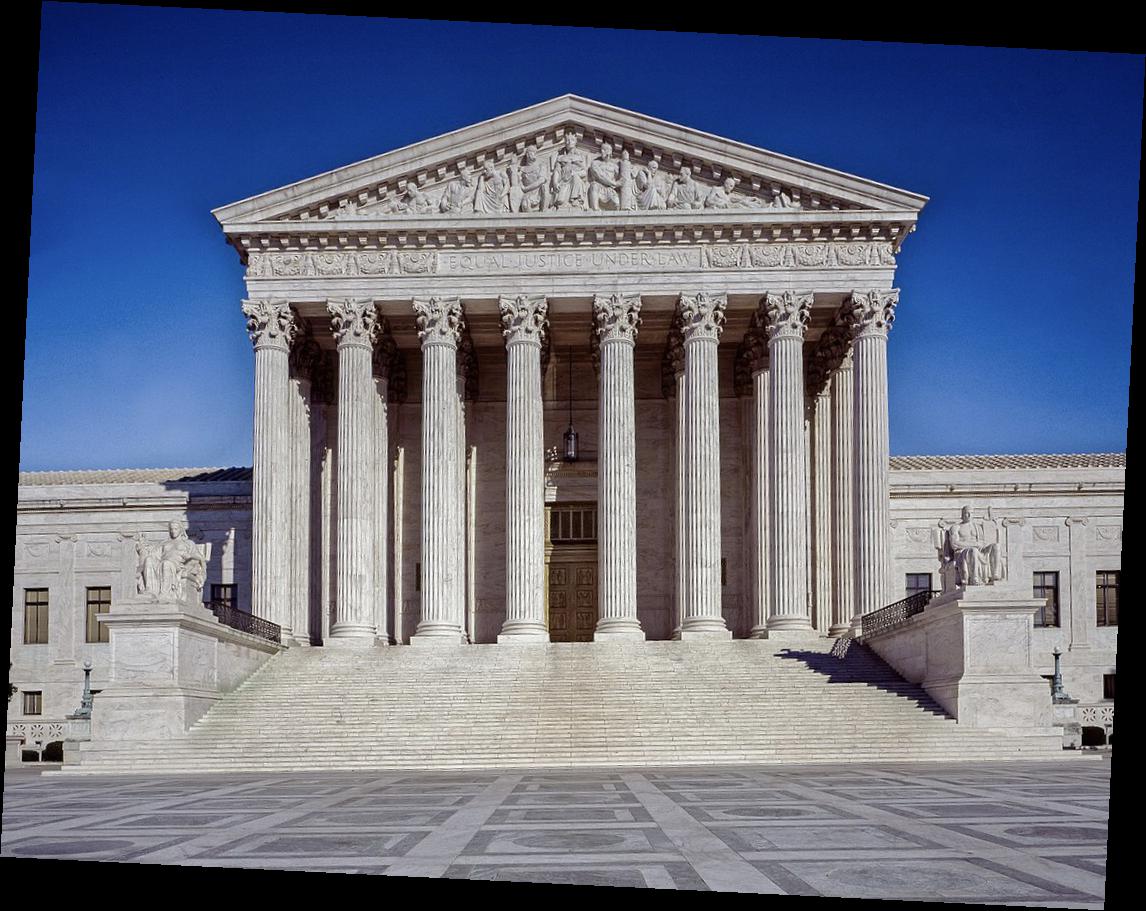

original

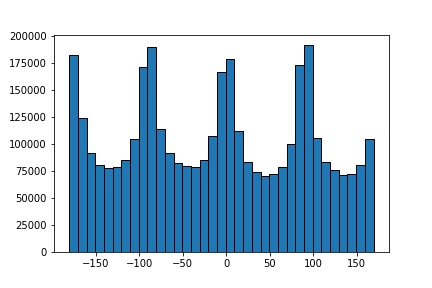

original angle histogram

straightened

straightened angle histogram

original

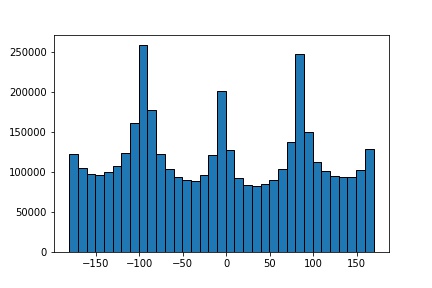

original angle histogram

straightened

straightened angle histogram

original

original angle histogram

"straightened"

straightened angle histogram

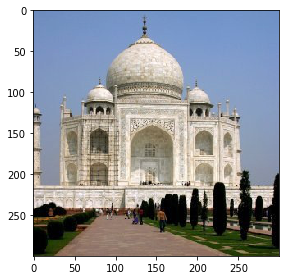

Part 2.1

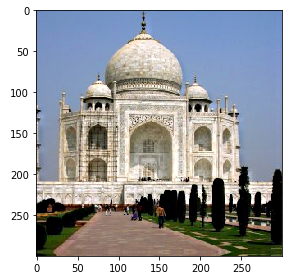

The Taj image was sharpened with alpha = .5 and a gaussian with sigma = 2.5. I then picked a sharp image of Lombard Street in San Francisco, blurred it using a gaussian with sigma = 5, and attempted to resharpen it with an unsharp masking filter with alpha = .5. Information is clearly lost in the blurring step: the resharpened image lacks the detail of the original (for example, the brick lines in the road, the leaves of the plants, and the houses in the distance are not as sharp).
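A minimal sketch of unsharp masking with the parameters quoted above, assuming a grayscale float image and `scipy.ndimage.gaussian_filter` for the blur; the function name `unsharp_mask` and the synthetic step image are illustrative. The output is left unclipped here, so it would need `np.clip` before display.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def unsharp_mask(image, sigma=2.5, alpha=0.5):
    """Sharpen by adding back alpha times the high frequencies."""
    blurred = gaussian_filter(image, sigma)  # low frequencies
    detail = image - blurred                 # high frequencies
    return image + alpha * detail


# Synthetic example (hypothetical data): a step from 0.25 to 0.75.
step = np.full((16, 16), 0.25)
step[:, 8:] = 0.75
sharpened = unsharp_mask(step)

# A flat image has no high frequencies, so it is left unchanged.
flat = unsharp_mask(np.full((16, 16), 0.5))
```

The overshoot on both sides of the step is what makes edges look crisper. It also shows why resharpening cannot restore lost detail: the filter only amplifies high frequencies that are still present in the input.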

Panels: Taj original and sharpened; Lombard Street original, blurred, and resharpened

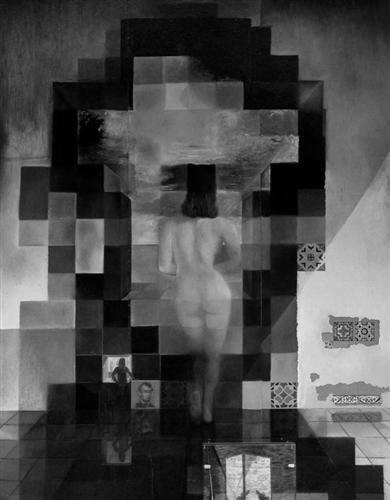

Part 2.2

For all of the input images, I used sigma = 2 for the high pass filter and sigma = 5 for the low pass filter. I also used color to enhance the effect: I created a hybrid on each color channel separately, then averaged the low and high frequency components for that channel, since summing them made the final image too bright.
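One way to sketch this construction, assuming aligned H x W x 3 float images and `scipy.ndimage.gaussian_filter`. The name `hybrid_image` is hypothetical, and reading "average" as `(low + high) / 2` is an interpretation of the description above rather than confirmed code.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def hybrid_image(im_high, im_low, sigma_high=2.0, sigma_low=5.0):
    """Combine the high frequencies of im_high with the low frequencies of im_low."""
    def blur(im, sigma):
        # A sigma of 0 on the last axis filters each color channel separately.
        return gaussian_filter(im, sigma=(sigma, sigma, 0))

    high = im_high - blur(im_high, sigma_high)  # high pass: image minus its blur
    low = blur(im_low, sigma_low)               # low pass: gaussian blur
    return (low + high) / 2.0                   # average instead of sum


rng = np.random.default_rng(0)
a = rng.random((32, 32, 3))  # stand-in for the high frequency input
b = rng.random((32, 32, 3))  # stand-in for the low frequency input
hybrid = hybrid_image(a, b)
```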

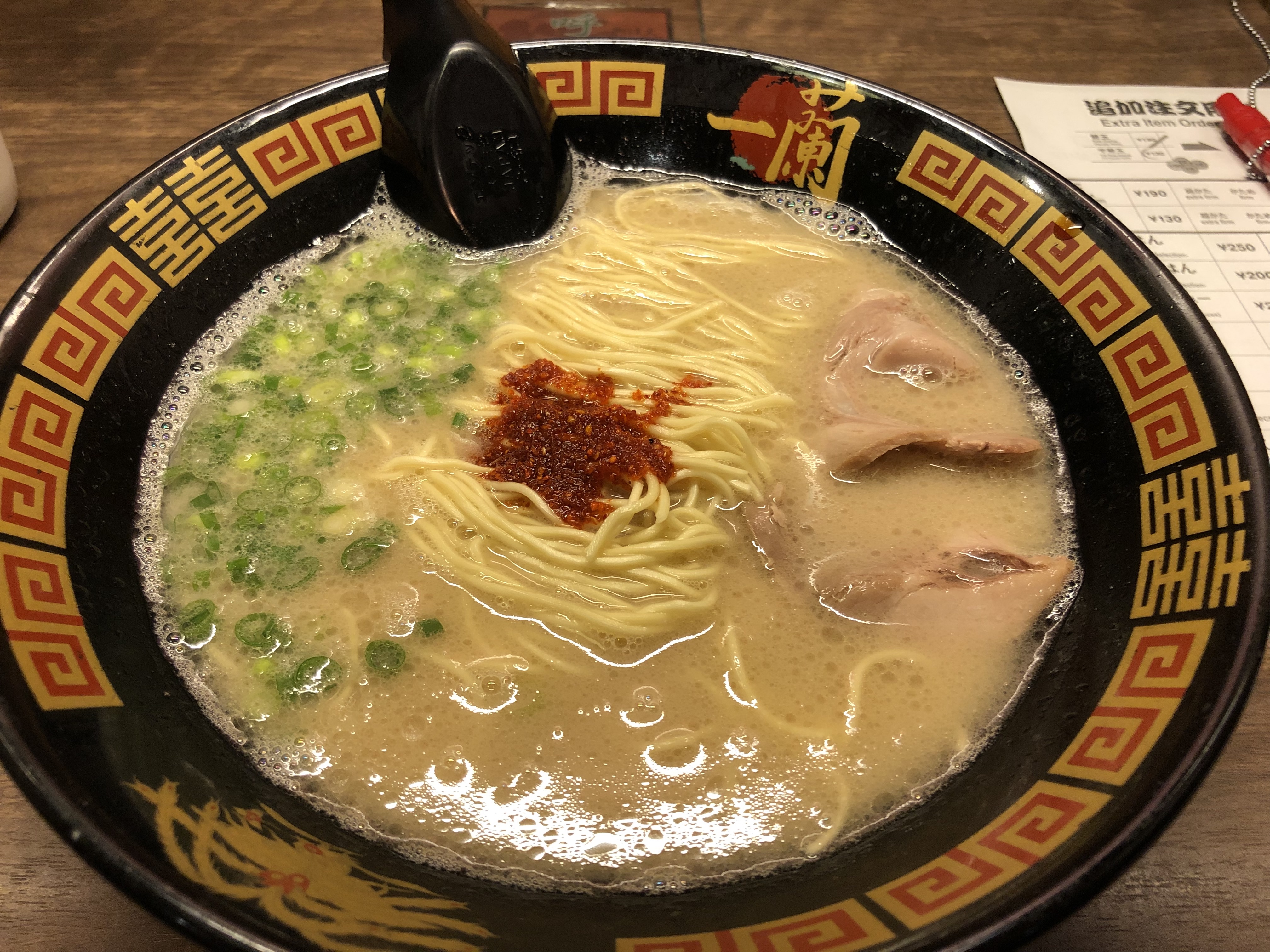

The ramen picture doesn't achieve the same effect of seeing one image from a distance and a different one up close; instead, it just looks like the same bowl of ramen with toppings from both input ramens. This makes sense because the input images are too similar (both have toppings in the higher frequencies and broth in the lower frequencies), so there isn't much distinction between them in the hybrid image.

Panels for each hybrid: high freq input, low freq input, hybrid result; the last set also includes a gray hybrid result

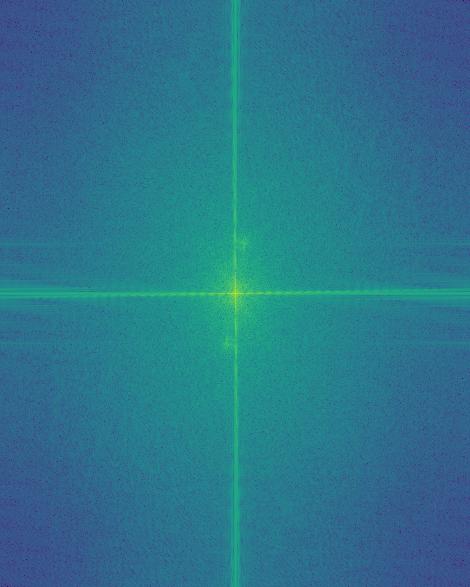

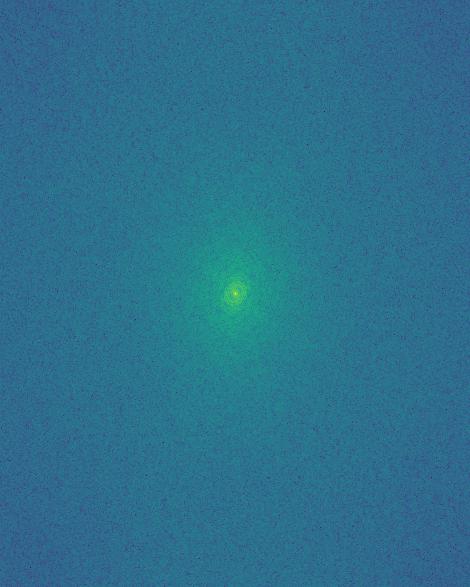

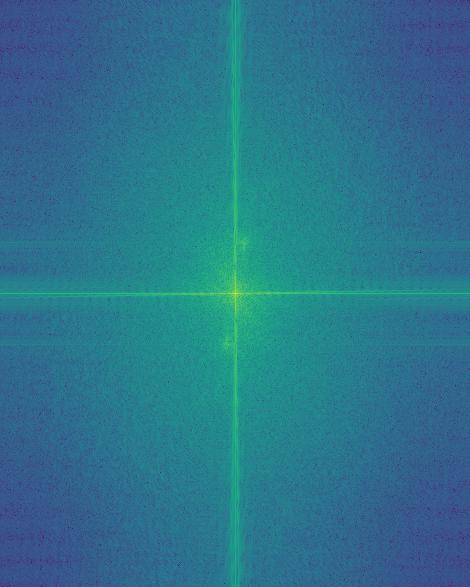

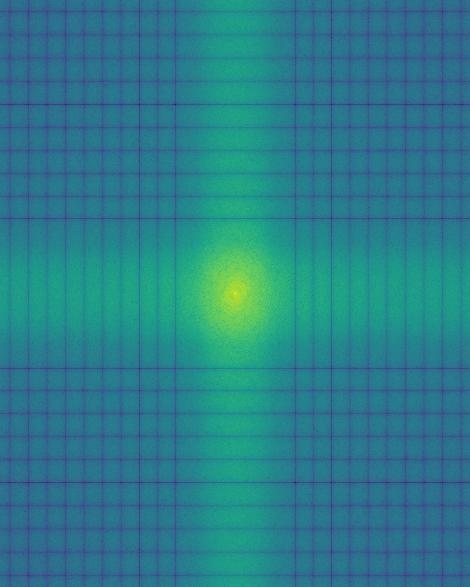

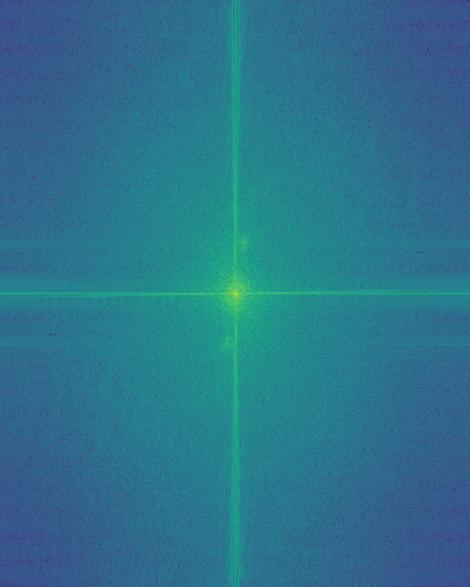

Here is the cat and cinnamon roll fusion in the Fourier domain:

Panels: high freq input FT, low freq input FT, high pass filtered FT, low pass filtered FT, gray hybrid result FT
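Fourier-domain views like these are commonly produced as a log magnitude spectrum. The sketch below shows that standard recipe with NumPy, assuming a grayscale input; the function name and the small `eps` added to avoid `log(0)` are illustrative choices.

```python
import numpy as np


def log_magnitude_spectrum(gray, eps=1e-8):
    """Log magnitude of the 2D FFT, with the zero frequency shifted to the center."""
    spectrum = np.fft.fftshift(np.fft.fft2(gray))
    return np.log(np.abs(spectrum) + eps)  # eps is an assumption to avoid log(0)


# A constant image has all of its energy at the zero frequency (the center).
spectrum = log_magnitude_spectrum(np.ones((8, 8)))
```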

Part 2.3

Each level of the gaussian stack was created by applying a gaussian filter to the level before it, with the original image as the first level. Each level of the laplacian stack is the difference between adjacent levels of the gaussian stack, except the last, which is the final (most blurred) level of the gaussian stack and holds the lowest frequencies. In the figures below, the top row contains the gaussian stack with N = 5 levels and sigma = 2, and the bottom row contains the corresponding laplacian stack.
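A minimal sketch of the two stacks as described above, assuming a grayscale float image and `scipy.ndimage.gaussian_filter`; the function names are illustrative.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def gaussian_stack(image, levels=5, sigma=2.0):
    """Level 0 is the image itself; each later level blurs the previous one."""
    stack = [image]
    for _ in range(levels - 1):
        stack.append(gaussian_filter(stack[-1], sigma))
    return stack


def laplacian_stack(image, levels=5, sigma=2.0):
    """Differences of adjacent gaussian levels, plus the most blurred level."""
    g = gaussian_stack(image, levels, sigma)
    lap = [g[i] - g[i + 1] for i in range(levels - 1)]
    lap.append(g[-1])  # lowest frequencies
    return lap


image = np.random.default_rng(0).random((32, 32))  # stand-in image
g_stack = gaussian_stack(image)
l_stack = laplacian_stack(image)
```

Because the differences telescope, summing every level of the laplacian stack recovers the original image, which is the property the blending in part 2.4 relies on.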

Here are the gaussian and laplacian stacks for the cat and cinnamon roll hybrid from part 2.2.

Part 2.4

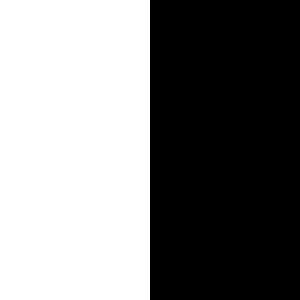

To blend two images using a mask, I computed the laplacian stacks for the inputs and a gaussian stack for the mask. I combined the laplacian stacks level by level with a weighted sum, using the corresponding level of the mask's gaussian stack as the weights. To get the final image, I summed all of the levels of the blended stack. For a color image, this approach is simply applied to each color channel separately, and the results are stacked.
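The blending procedure can be sketched as below. This is an illustration rather than the original code: it assumes float images of equal size, a 2D float mask with values in [0, 1] (1 selects the first image), and the N = 5, sigma = 2 settings from part 2.3. The names `blend` and `blend_color` are hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def gaussian_stack(image, levels=5, sigma=2.0):
    stack = [image]
    for _ in range(levels - 1):
        stack.append(gaussian_filter(stack[-1], sigma))
    return stack


def laplacian_stack(image, levels=5, sigma=2.0):
    g = gaussian_stack(image, levels, sigma)
    return [g[i] - g[i + 1] for i in range(levels - 1)] + [g[-1]]


def blend(im_a, im_b, mask, levels=5, sigma=2.0):
    """Blend two grayscale images using a progressively blurred mask."""
    lap_a = laplacian_stack(im_a, levels, sigma)
    lap_b = laplacian_stack(im_b, levels, sigma)
    weights = gaussian_stack(mask, levels, sigma)
    # Weighted sum at each level, then collapse the stack by summing levels.
    blended = [w * a + (1.0 - w) * b for w, a, b in zip(weights, lap_a, lap_b)]
    return np.sum(blended, axis=0)


def blend_color(im_a, im_b, mask, **kwargs):
    """Apply the grayscale blend to each color channel, then restack."""
    channels = [
        blend(im_a[..., c], im_b[..., c], mask, **kwargs)
        for c in range(im_a.shape[-1])
    ]
    return np.stack(channels, axis=-1)
```

A vertical seam like the apple and orange example would use a mask that is 1 on the left half and 0 on the right half.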

Panels: apple, orange, mask, blended; oatmeal raisin cookie, chocolate chip cookie, mask, blended

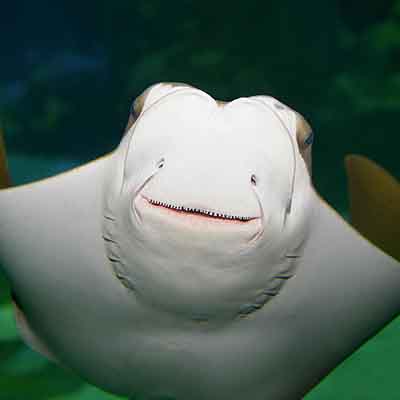

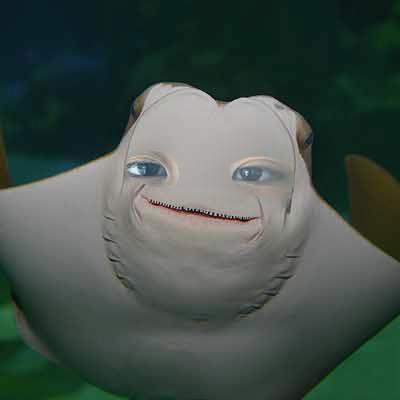

Panels: human, stingray, mask, blended

Finally, here are the laplacian stacks for the above result and each of the masked components:

Overall, I really enjoyed this assignment and thought it was really cool how the laplacian stack breaks down the frequencies and explains the optical illusion in the hybrid images like Lincoln + Gala and cat + cinnamon roll!