Project 2 -- Fun with Filters and Frequencies

By Myles Domingo

Overview

In this project, we use 2D convolutions and filtering to detect edges, straighten and sharpen images, and build hybrid images and image stacks.

1.1 Finite Difference Operators

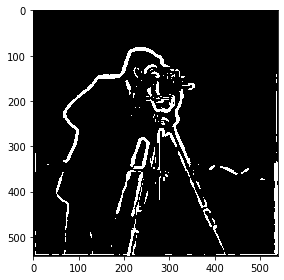

To calculate the gradient magnitude of our cameraman image, we take the partial derivatives in x and y. We convert our image to grayscale and convolve it with dx = [1, -1] and dy = [ [1], [-1] ]. This gives us two images: the x-derivative highlights vertical edges and the y-derivative highlights horizontal edges.

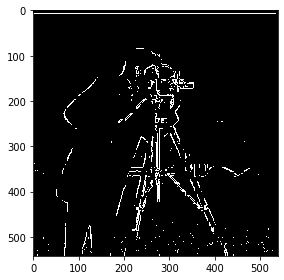

To render the gradient magnitude image, we take the Euclidean norm of our partial derivatives, such that gradient magnitude = sqrt((df/dx)^2 + (df/dy)^2). We binarize the output with a threshold of 0.20, which reduces noise while capturing most of the edges in the image.
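The steps above can be sketched as follows. This is a minimal illustration, not the project's actual code: it assumes a grayscale float image scaled to [0, 1], uses `scipy.signal.convolve2d` with symmetric boundary handling (an assumption), and the helper name `gradient_magnitude` is hypothetical.

```python
import numpy as np
from scipy.signal import convolve2d

def gradient_magnitude(gray, threshold=0.20):
    """Return (df/dx, df/dy, magnitude, binary edge map) for a grayscale image."""
    dx = np.array([[1.0, -1.0]])      # horizontal finite difference
    dy = np.array([[1.0], [-1.0]])    # vertical finite difference
    # boundary="symm" mirrors the image at the border (an assumption, to avoid
    # spurious edges where the image meets zero padding).
    gx = convolve2d(gray, dx, mode="same", boundary="symm")
    gy = convolve2d(gray, dy, mode="same", boundary="symm")
    mag = np.sqrt(gx ** 2 + gy ** 2)  # Euclidean norm of the two partials
    edges = mag > threshold           # binarize
    return gx, gy, mag, edges

# Synthetic stand-in for the cameraman image: dark left half, bright right half.
img = np.zeros((8, 8))
img[:, 4:] = 1.0
gx, gy, mag, edges = gradient_magnitude(img)
```

On this test image only the x-derivative responds, and the binarized map marks a single column where the intensity jumps.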

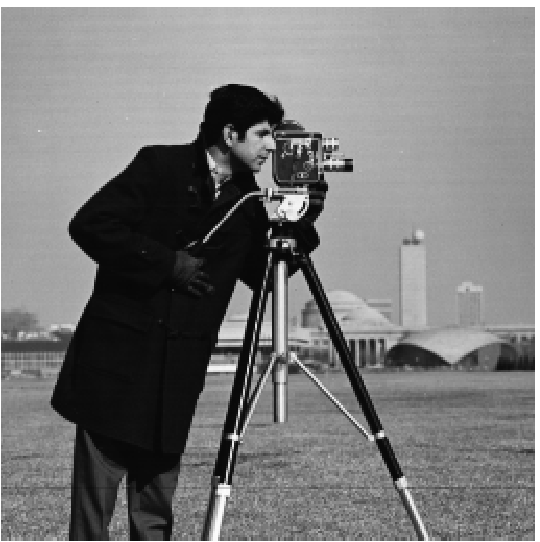

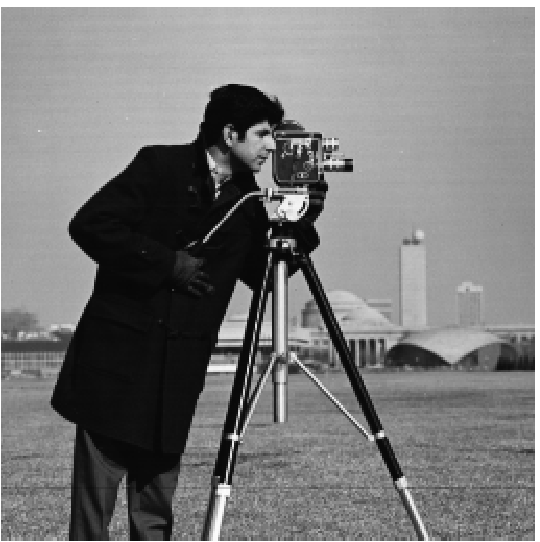

Original Image

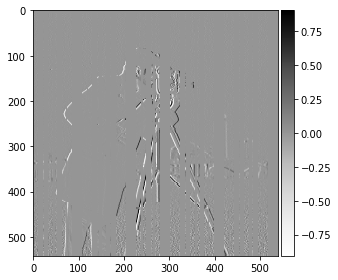

Partial derivative with respect to x

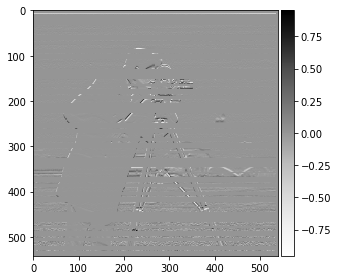

Partial derivative with respect to y

Gradient Magnitude

Binarized Gradient Magnitude, threshold = 0.20

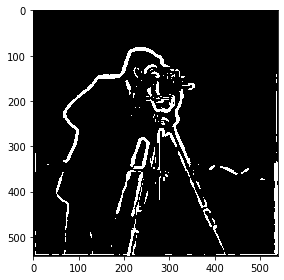

1.2 Derivative of Gaussian Operator

Our initial gradient magnitude image remains noisy. To reduce the noise, we first convolve the original cameraman image with a Gaussian filter G, with kernel size = 10 and sigma = 5. When we perform the same operations on the blurred image, the resulting gradient magnitude image has far less noise and smoother, thicker edges. Since blurring weakens the gradient values, we lower the cutoff threshold when we binarize the image so that we still capture most of the edges.

We can also generate the same gradient magnitude image with a single convolution per direction by taking the derivative of our Gaussian filter G and applying the result to our image. This works because convolution is linear and associative.
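The equivalence of the two approaches can be checked numerically. The sketch below is an illustration under assumptions: the Gaussian kernel is built by hand as an outer product (the helper `gaussian_kernel` is hypothetical), a random array stands in for the cameraman image, and full-size convolutions are used because associativity holds exactly only when no output is cropped.

```python
import numpy as np
from scipy.signal import convolve2d

def gaussian_kernel(size=10, sigma=5.0):
    """Normalized 2D Gaussian kernel, built as the outer product of a 1D Gaussian."""
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-ax ** 2 / (2.0 * sigma ** 2))
    g /= g.sum()
    return np.outer(g, g)

dx = np.array([[1.0, -1.0]])
G = gaussian_kernel(size=10, sigma=5.0)

rng = np.random.default_rng(0)
img = rng.random((32, 32))  # stand-in for the grayscale image

# Two steps: blur the image, then differentiate the blurred result.
two_step = convolve2d(convolve2d(img, G), dx)

# One step: differentiate the Gaussian once, then convolve the image with it.
dog_x = convolve2d(G, dx)
one_step = convolve2d(img, dog_x)
```

With `mode="same"` the two results would agree in the interior but could differ near the border, since each cropped convolution discards part of the output.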

Original Image

Gaussian Filter applied

Gradient Magnitude with Derivatives of Gaussian

Binarized Gradient Magnitude, threshold = 0.04

1.3 Image Straightening

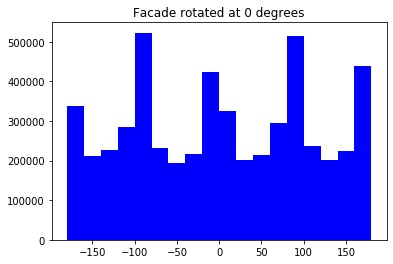

In most cases, an image is straight when a significant share of its edges are vertical or horizontal. To create an auto straightener, we set an angle window of [-10, 10] degrees and find the rotation angle that yields the largest number of edges oriented at -180, -90, 0, 90, or 180 degrees. These edge orientations can be found by calculating atan2(df/dy, df/dx).

To avoid counting the new edges that rotation creates at the image border, we center crop 15% of the image and count only the edges within the crop. Most input images have worked well within the angle window provided; the only cases where this does not work are images with purposefully slanted objects or images that need a rotation outside of the angle window.
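A rough sketch of this search is shown below. Several details are assumptions rather than the project's settings: the crop is interpreted as removing 15% from each side, a light Gaussian blur is applied before taking gradients, the angular tolerance and magnitude threshold are arbitrary, the search uses 1-degree steps, and the helper names are hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter, rotate

def straight_edge_count(gray, crop=0.15, tol_deg=0.5, mag_thresh=0.05):
    """Count strong edge pixels whose gradient angle is near a multiple of 90 degrees."""
    h, w = gray.shape
    ch, cw = int(h * crop), int(w * crop)
    core = gray[ch:h - ch, cw:w - cw]            # ignore borders created by rotation
    gy, gx = np.gradient(gaussian_filter(core, 1.0))
    mag = np.hypot(gx, gy)
    ang = np.degrees(np.arctan2(gy, gx))         # in [-180, 180]
    # Angular distance to the nearest of -180, -90, 0, 90, 180 degrees.
    off_axis = np.abs((ang + 45.0) % 90.0 - 45.0)
    return int(np.count_nonzero((mag > mag_thresh) & (off_axis < tol_deg)))

def best_rotation(gray, window=10):
    """Try every integer angle in [-window, window] and keep the best-scoring one."""
    angles = np.arange(-window, window + 1)
    counts = [straight_edge_count(rotate(gray, float(a), reshape=False))
              for a in angles]
    return int(angles[int(np.argmax(counts))])

# Synthetic check: tilt a lightly blurred checkerboard by 5 degrees, then recover it.
yy, xx = np.mgrid[0:96, 0:96]
straight = gaussian_filter(((yy // 12 + xx // 12) % 2).astype(float), 1.5)
tilted = rotate(straight, 5.0, reshape=False)
best = best_rotation(tilted)
```

For the tilted checkerboard, the search should land on a correction close to -5 degrees, the rotation that undoes the tilt.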

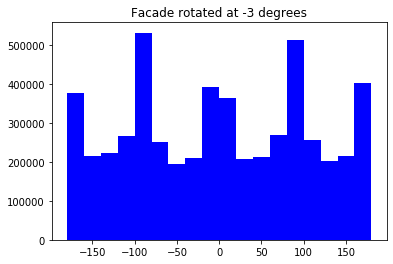

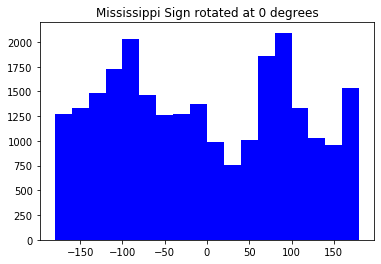

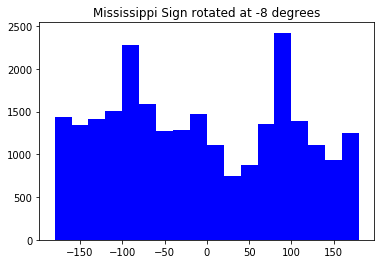

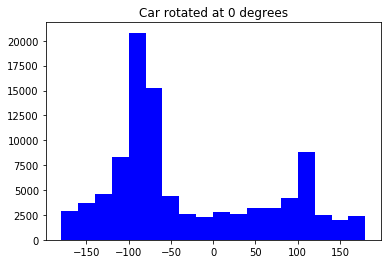

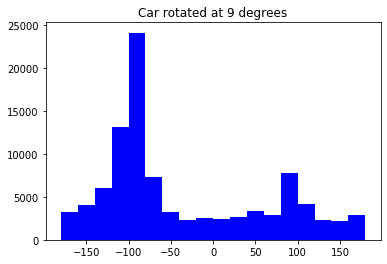

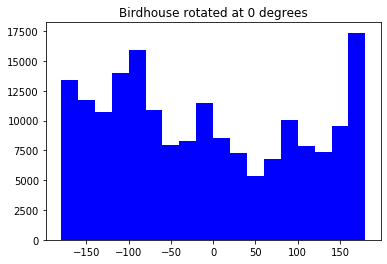

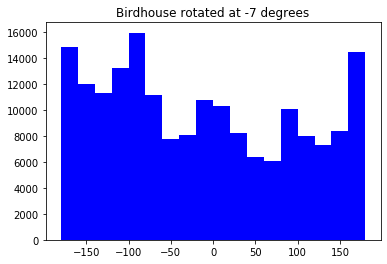

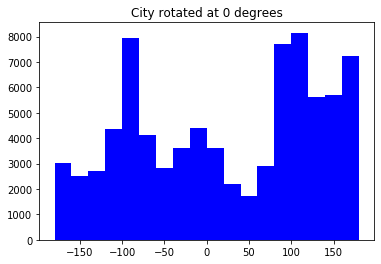

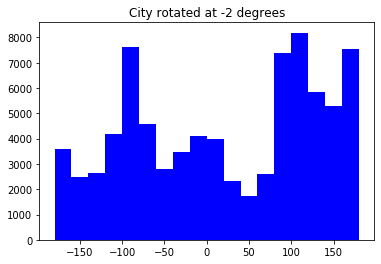

Note: We see that this is the optimal rotation angle not only visually from the images, but also in our histograms. There is a significant increase in the number of edges at -180, -90, 0, and 90 degrees after rotation. When comparing the histograms, be mindful of the y-scale.

Facade, Original

Facade, Rotated -3 degrees

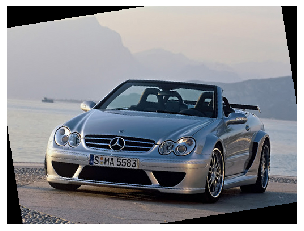

Car, Original

Car, Rotated -8 degrees

Car, Original

Car, Rotated 9 degrees

Birdhouse, Original

Birdhouse, Rotated -7 degrees

City, Original

City, Rotated -2 degrees

Note: This final image is a failure case. It is already straight, but the building has diagonal lines that register as false straight edges under our scoring system.

2.1 Image Sharpening

We can sharpen an image by applying a low-pass filter, subtracting the blurred result from the original image to isolate the high frequencies, and adding those high frequencies back to the original image. Combined into a single operation, this is the unsharp mask filter.
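As a minimal sketch of the sharpening step, assuming a grayscale float image in [0, 1] and using `scipy.ndimage.gaussian_filter` as the low-pass filter: the function name `unsharp_mask` and the parameters `sigma` and `alpha` are illustrative choices, not values from this project.

```python
import numpy as np
from scipy.ndimage import gaussian_filter

def unsharp_mask(gray, sigma=2.0, alpha=1.0):
    """Sharpen by adding back alpha times the high-frequency detail."""
    blurred = gaussian_filter(gray, sigma)   # low frequencies
    high = gray - blurred                    # high frequencies
    return np.clip(gray + alpha * high, 0.0, 1.0)

# A flat image has no detail to boost; a step edge gains contrast.
flat = np.full((16, 16), 0.5)
step = np.full((16, 16), 0.25)
step[:, 8:] = 0.75
sharp_flat = unsharp_mask(flat)
sharp_step = unsharp_mask(step)
```

Equivalently, the result is (1 + alpha) * image - alpha * blurred, which is why the whole operation can be folded into a single filter.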

Taj, Original

Taj, with a sharpen filter

Flower, Original

Flower, applied Gaussian blur

Flower, re-applied sharpen filter

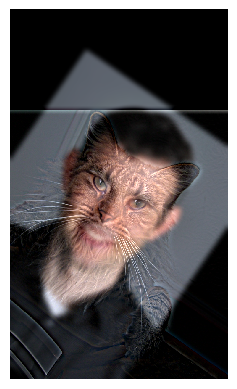

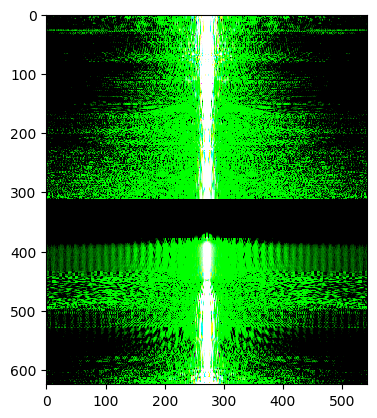

2.2 Hybrid Images

We can create hybrid images, whose interpretation changes with viewing distance, by combining a low-pass-filtered version of one image with a high-pass-filtered version of another. We align the images on dominant features, such as nose bridges, eyes, and ears, and scale them so that these points coincide.

We also experiment with cutoff frequencies by modifying the sigma of our Gaussian filter to increase or decrease the visual dominance of our low-pass and high-pass components.
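The combination can be sketched as below, assuming the two images are already aligned, have the same shape, and hold float values. The helper names `blur` and `hybrid_image` are hypothetical; for color images the sketch blurs each channel separately by setting sigma to 0 along the channel axis.

```python
import numpy as np
from scipy.ndimage import gaussian_filter

def blur(img, sigma):
    """Gaussian blur; for H x W x C images, do not blur across the channel axis."""
    s = (sigma, sigma, 0) if img.ndim == 3 else sigma
    return gaussian_filter(img, s)

def hybrid_image(im_low, im_high, sigma_low=10.0, sigma_high=5.0):
    """Low frequencies of im_low plus high frequencies of im_high."""
    low = blur(im_low, sigma_low)
    high = im_high - blur(im_high, sigma_high)
    return low + high   # clip to [0, 1] before displaying

rng = np.random.default_rng(0)
a = rng.random((32, 32, 3))   # stand-ins for two aligned color images
b = rng.random((32, 32, 3))
hybrid = hybrid_image(a, b)
same = hybrid_image(a, a, sigma_low=3.0, sigma_high=3.0)
```

A quick sanity check: when both inputs are the same image and both sigmas match, the low and high parts sum back to the original image.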

Bells and Whistles: I introduced color into the hybrid images by separating each image into its color channels and performing the low-pass and high-pass convolutions on each channel. I personally think color looks better on both components when the color palettes are somewhat similar; otherwise, it looks better on the low-frequency component, as it provides a sturdy base for the image.

Low Pass Filter, sigma = 10

High Pass Filter, sigma = 5

Low Pass Filter, sigma = 5

High Pass Filter, sigma = 10

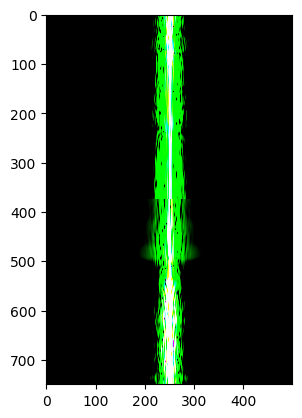

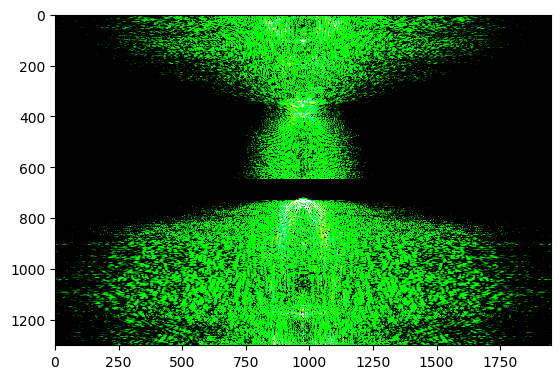

Fourier Analysis: Here are frequency analyses of these images in the Fourier domain. We can see the additive relationship between the low-pass and high-pass frequency content.
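One common way to produce such plots is the log magnitude of the shifted 2D FFT. The sketch below assumes grayscale inputs; the function name and the small constant added before the logarithm are illustrative. It also checks the additive relationship, which holds for the complex spectra (the Fourier transform is linear) rather than for the log magnitudes themselves.

```python
import numpy as np

def log_magnitude_spectrum(gray):
    """Log magnitude of the 2D FFT, with the zero frequency shifted to the center."""
    spectrum = np.fft.fftshift(np.fft.fft2(gray))
    return np.log(np.abs(spectrum) + 1e-8)   # small constant avoids log(0)

rng = np.random.default_rng(0)
low = rng.random((16, 16))    # stand-in for the low-pass component
high = rng.random((16, 16))   # stand-in for the high-pass component
hybrid = low + high

spectrum_of_sum = np.fft.fft2(hybrid)
sum_of_spectra = np.fft.fft2(low) + np.fft.fft2(high)
plot_ready = log_magnitude_spectrum(hybrid)
```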

Low Pass Filter

High Pass Filter

Hybrid Image

Low Pass Filter, sigma = 10

High Pass Filter, sigma = 8

Note: I consider this image a failure case. The shapes of the face and the animal are too compositionally different, and the black eyes of the seal are dominated by the portrait's eyes regardless of sigma and viewing distance.

2.3 Laplacian and Gaussian Stacks

We create a Gaussian stack by applying a Gaussian filter to the same image multiple times. Unlike a pyramid, a stack does not downsample the image, so every level keeps the original dimensions.

Gaussian Stack, N = 5 Lincoln

Note: I failed to create a Laplacian stack because I believe my implementation of Gaussian stacks is too slow, so iterating over the levels and calculating the Laplacian for each one takes too much time. Normally, each level of the Laplacian stack is the difference between the current level and the next level of the Gaussian stack.
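For reference, the intended construction can be sketched as follows. This is not the implementation used in this project: it assumes a grayscale float image, uses `scipy.ndimage.gaussian_filter` (which applies the blur as a sequence of 1D passes and may be fast enough for this purpose), and the function names and sigma are illustrative.

```python
import numpy as np
from scipy.ndimage import gaussian_filter

def gaussian_stack(gray, n=5, sigma=2.0):
    """Level 0 is the image; each later level blurs the previous one again."""
    stack = [gray]
    for _ in range(n - 1):
        stack.append(gaussian_filter(stack[-1], sigma))
    return stack

def laplacian_stack(g_stack):
    """Differences of consecutive Gaussian levels, plus the final blurred level."""
    levels = [g_stack[i] - g_stack[i + 1] for i in range(len(g_stack) - 1)]
    levels.append(g_stack[-1])   # keeping the residual makes the stack sum to the image
    return levels

rng = np.random.default_rng(0)
img = rng.random((32, 32))       # stand-in for the Lincoln image
g_stack = gaussian_stack(img, n=5)
l_stack = laplacian_stack(g_stack)
reconstructed = sum(l_stack)
```

Because the differences telescope, summing every level of the Laplacian stack recovers the original image, which is a convenient correctness check.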

Attempt at Creating Laplacian Stack, N = 5

Challenges and Conclusion

Even though it is unfinished, I really enjoyed implementing this project. The main thing I learned is how many use cases a Gaussian filter has, all of them driven by a simple convolution. The main challenge was understanding the underlying Python libraries, including how to plot images, separate color channels, and handle convolutions of arrays of different dimensions.