Project 3: Face Morphing

Thanakul Wattanawong: 3034194999

For this project I had to showcase a variety of morphing techniques.

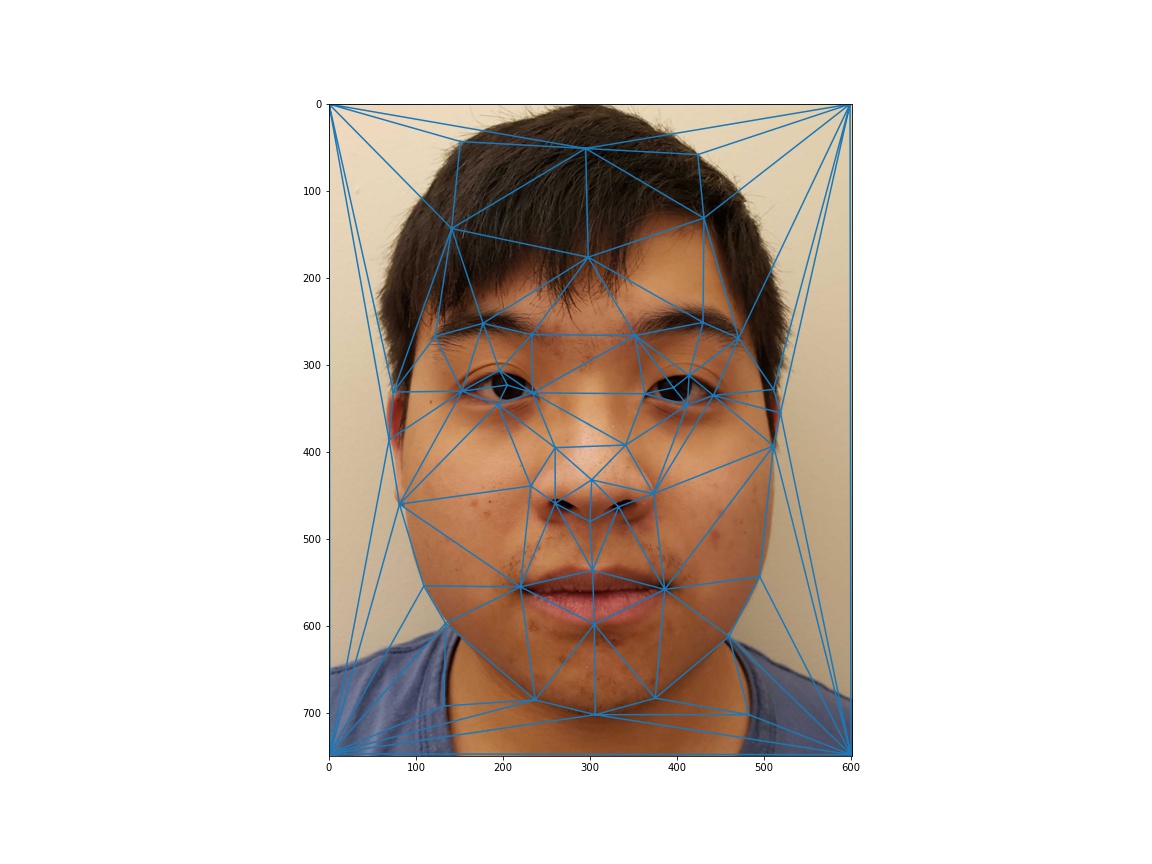

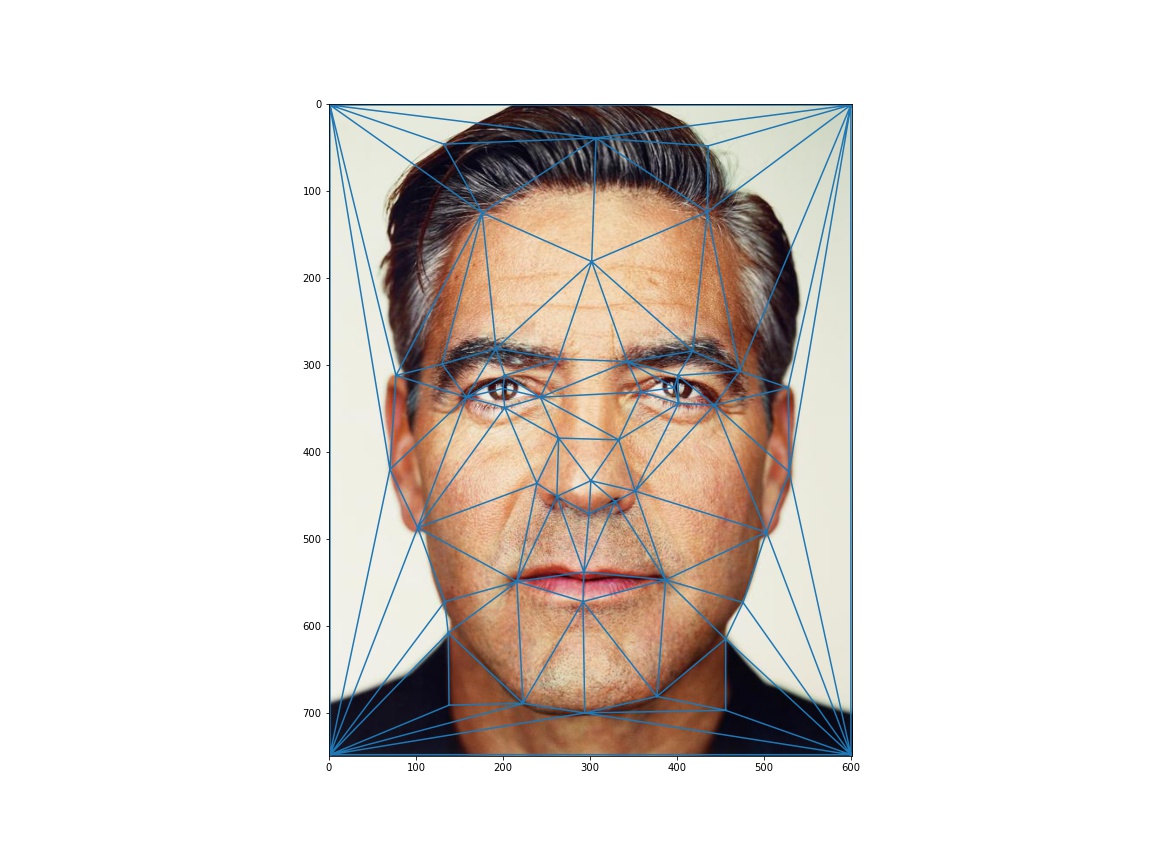

First, I aligned my images in Photoshop to make the later morphing smoother. Then I used cpinput to define correspondence points between my portrait and George’s, and scipy’s Delaunay function to compute the triangulations. The results are below:
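Here is a minimal sketch of the triangulation step. It assumes the triangulation is computed once on the mean of the two point sets. That is a common choice, but the text above doesn't say which shape was triangulated. It also assumes points are stored as (x, y) rows in matching order. The helper name and example points are made up.

```python
import numpy as np
from scipy.spatial import Delaunay


def triangulate_mean(points_a, points_b):
    """Triangulate the mean of two corresponding (N, 2) point sets.

    Returns an (M, 3) array of vertex indices. The indices refer to point
    order rather than coordinates, so the same triangles can be reused on
    both images: triangle i in one image corresponds to triangle i in the other.
    """
    mean_pts = (np.asarray(points_a, float) + np.asarray(points_b, float)) / 2.0
    return Delaunay(mean_pts).simplices


# Made-up correspondences: four image corners plus one interior point.
pts_a = np.array([[0, 0], [10, 0], [0, 10], [10, 10], [5, 5]], float)
pts_b = np.array([[0, 0], [10, 0], [0, 10], [10, 10], [6, 4]], float)
tris = triangulate_mean(pts_a, pts_b)
print(tris.shape)  # (4, 3)
```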

For the midway face, I first computed the element-wise average of the correspondence points I defined. Next, for each triangle I solved for the affine transformation matrix and used it to inverse-warp the triangles from both my image and George’s into the average shape, interpolating with RectBivariateSpline over all three color channels. Finally, I cross-dissolved the two warped images by averaging their color values. Here are the results:
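Below is a minimal sketch of the per-triangle affine solve and inverse warp. It makes a few assumptions. Images are float arrays of shape (H, W, 3). Points are (x, y) rows. Interpolation uses a bilinear RectBivariateSpline (kx = ky = 1). The helper names (`affine_from_triangles`, `points_in_triangle`, `warp`, `midway_face`) are hypothetical and not taken from the project code.

```python
import numpy as np
from scipy.interpolate import RectBivariateSpline
from scipy.spatial import Delaunay


def affine_from_triangles(src_tri, dst_tri):
    """3x3 matrix A such that A @ [x, y, 1] maps each src vertex onto its dst vertex."""
    src = np.hstack([src_tri, np.ones((3, 1))])  # rows are [x, y, 1]
    dst = np.hstack([dst_tri, np.ones((3, 1))])
    # A @ s_i = d_i for every vertex  <=>  src @ A.T = dst
    return np.linalg.solve(src, dst).T


def points_in_triangle(coords, tri_pts, eps=1e-9):
    """Boolean mask of which (x, y) coords lie inside the triangle tri_pts."""
    unit = np.array([[0, 0], [1, 0], [0, 1]], float)
    T = affine_from_triangles(tri_pts, unit)  # maps points to (u, v) in the unit triangle
    uv = (np.column_stack([coords, np.ones(len(coords))]) @ T.T)[:, :2]
    return (uv[:, 0] >= -eps) & (uv[:, 1] >= -eps) & (uv.sum(axis=1) <= 1 + eps)


def warp(img, src_pts, dst_pts, tri):
    """Inverse-warp img from the src_pts geometry into the dst_pts geometry."""
    h, w, c = img.shape
    ys, xs = np.mgrid[0:h, 0:w]
    coords = np.column_stack([xs.ravel(), ys.ravel()]).astype(float)
    # One bilinear spline per color channel; axes are (row, col) = (y, x).
    splines = [RectBivariateSpline(np.arange(h), np.arange(w), img[:, :, ch], kx=1, ky=1)
               for ch in range(c)]
    out = np.zeros((h * w, c))
    for t in tri:
        inside = points_in_triangle(coords, dst_pts[t])
        if not inside.any():
            continue
        # Map each destination pixel back to its location in the source image.
        A = affine_from_triangles(dst_pts[t], src_pts[t])
        src = np.column_stack([coords[inside], np.ones(inside.sum())]) @ A.T
        sx = np.clip(src[:, 0], 0, w - 1)
        sy = np.clip(src[:, 1], 0, h - 1)
        for ch, spline in enumerate(splines):
            out[inside, ch] = spline.ev(sy, sx)
    return out.reshape(h, w, c)


def midway_face(img_a, img_b, pts_a, pts_b):
    """Warp both images into the average shape, then average their colors."""
    mid = (pts_a + pts_b) / 2.0
    tri = Delaunay(mid).simplices
    return 0.5 * warp(img_a, pts_a, mid, tri) + 0.5 * warp(img_b, pts_b, mid, tri)
```

Inverse warping (destination pixel to source location) is what keeps the output free of holes. Every output pixel gets exactly one sampled value, whereas pushing source pixels forward can leave gaps.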

Here I made an animation morphing me into George. I set warp_frac and dissolve_frac to increase linearly from 0 to 1, though it might be interesting to try other schedules and see whether the results improve. Here is the result:
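This sketch shows how the per-frame parameters can be generated under the linear schedule described above. `smoothstep` is a hypothetical alternative in the spirit of varying the function; the project did not use it. Each frame would warp both images into `intermediate_shape(...)` with a triangle-wise inverse warp (not shown here) and then combine them with `cross_dissolve(...)`. All function names are illustrative.

```python
import numpy as np


def linear(t):
    return t


def smoothstep(t):
    # Hypothetical alternative: slower at both ends, faster in the middle.
    return t * t * (3 - 2 * t)


def morph_schedule(num_frames, ease=linear):
    """(warp_frac, dissolve_frac) for each frame, both running from 0 to 1."""
    t = ease(np.linspace(0.0, 1.0, num_frames))
    return [(float(f), float(f)) for f in t]


def intermediate_shape(pts_a, pts_b, warp_frac):
    """Shape at warp_frac: 0 gives pts_a, 1 gives pts_b."""
    return (1 - warp_frac) * pts_a + warp_frac * pts_b


def cross_dissolve(img_a, img_b, dissolve_frac):
    """Blend two images that have already been warped into the same shape."""
    return (1 - dissolve_frac) * img_a + dissolve_frac * img_b
```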

Link in case the above image is not animated: https://giphy.com/gifs/z8MHKuIux4cRvkudeO

I chose the Danish computer scientist dataset for this part, specifically the male faces. After reading in all the images, I computed the average of all their shapes and then morphed each face into it. Here is the result, along with some of the faces morphed into the average:
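The averaging step reduces to an element-wise mean over shapes, followed by a mean over the faces once they have been warped into that shape. The sketch below assumes every face has the same number of points in the same order. The warp itself is not shown, and the helper names are hypothetical.

```python
import numpy as np


def average_shape(shapes):
    """Element-wise mean of a list of (N, 2) point arrays with the same ordering."""
    return np.mean(np.stack([np.asarray(s, float) for s in shapes]), axis=0)


def average_face(warped_images):
    """Mean of images that have already been warped into the average shape."""
    return np.mean(np.stack(warped_images), axis=0)
```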

You can see the fourth image is a little weird; I assume his huge forehead had something to do with it. In addition, the perspective seems slightly off, suggesting he was not fully facing the camera.

I also had to compute my face warped into the average geometry and the average face warped into my geometry:

There were some artifacts in the right image, but the center of the face is mostly intact. The eyes are slightly further from the nose and the cheeks are a little wider. I assume the artifacts come from the triangles connected to the dummy points in the corners, as you can see from this post-morph illustration:
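Dummy corner points are usually appended to the facial landmarks so that the triangulation covers the whole image, background included. Triangles that join a landmark to a distant corner are long and thin, so small landmark differences get stretched over large areas. That is consistent with the artifacts described above. The sketch below assumes exactly the four image corners are used; the writeup doesn't say which dummy points it used. `add_corner_points` is a hypothetical helper.

```python
import numpy as np


def add_corner_points(points, height, width):
    """Append the four image corners, as (x, y), to an (N, 2) array of landmarks."""
    corners = np.array([[0, 0], [width - 1, 0],
                        [0, height - 1], [width - 1, height - 1]], float)
    return np.vstack([np.asarray(points, float), corners])
```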

I chose to exaggerate the shape in this section. To do this, I computed the delta between my points and those of the average Danish male computer scientist, then added various multiples K of that delta back to the mean shape before morphing. Here are the results for several values of K (where K = 1 morphs back to my face’s shape):
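The exaggeration step is a single linear extrapolation: target = mean + K · (mine − mean). K = 0 gives the mean shape, K = 1 gives my own shape, |K| > 1 exaggerates, and negative K moves away from my features. The function name below is hypothetical. The resulting points would then serve as the target shape for the warp, which is not shown.

```python
import numpy as np


def caricature_shape(my_pts, mean_pts, k):
    """Mean shape plus K times my offset from it (K = 0: mean, K = 1: my shape)."""
    my_pts = np.asarray(my_pts, float)
    mean_pts = np.asarray(mean_pts, float)
    delta = my_pts - mean_pts
    return mean_pts + k * delta
```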

Results (left to right, top to bottom): K = -1.5, K = 1.5, K = -2, K = 2.

Some triangles invert, which I think happens because the scaled points start crossing over one another. Still, you can see some of my features, such as the cheeks and wider eyes, being exaggerated or de-exaggerated depending on the magnitude and sign of K.

According to the paper described in lecture, a good way to change features is to compute two prototype (mean) faces and use the difference between them to transform arbitrary input faces within that subspace.
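For shape, the prototype idea comes down to adding the vector from one prototype to the other onto an input face. This sketch covers only the shape half and omits the analogous appearance step. It assumes all three point sets share the same ordering. `transform_with_prototypes` and its `alpha` strength parameter are hypothetical names.

```python
import numpy as np


def transform_with_prototypes(input_pts, proto_from, proto_to, alpha=1.0):
    """Move an input shape along the direction from one prototype shape to the other."""
    input_pts = np.asarray(input_pts, float)
    direction = np.asarray(proto_to, float) - np.asarray(proto_from, float)
    return input_pts + alpha * direction
```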

However, as a student on a deadline, I wondered whether we could get somewhere similar with a bit less work. Ideally, by choosing the averaged dataset carefully, you’d be able to perform shape and appearance transfer with only one set of correspondences, between an image and an average that differs from it only in the feature we want to change.

For this part I used my friend Andrew Aikawa and an average of the girl group TWICE, where the primary difference is gender.

Original images:

The shape transfer is simple, and doing it the way described in this project would produce quite acceptable results if it weren’t for the glasses:

Blindly warping the appearance from the average also works well enough.

Performing both and cross-dissolving at 0.5, we get the following result. This is the same as the midway face step, except that the shape is taken entirely from the average instead of halfway between the two faces.

A major problem with cross-dissolving here is that, since only the faces form a subspace, the hair and clothing from both images overlap and both remain visible. One idea is to use a mask to select only the region we want to merge.

Luckily, the natural way of defining correspondences for this project already gives us labeled data on where the face is:

So it’s natural to form a mask of the region we want and work from there. I created a mask of the face, Gaussian-blurred it to avoid sharp edges, and then cross-dissolved that region at various ratios:
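Here is a minimal sketch of the mask and cutout. It assumes the mask is the convex hull of the face landmarks, with the dummy corner points excluded, since including them would make the hull cover the whole image. It also assumes an arbitrary blur width `sigma`. The writeup doesn't specify either choice, and `face_mask` and `cutout` are hypothetical names.

```python
import numpy as np
from scipy.ndimage import gaussian_filter
from scipy.spatial import Delaunay


def face_mask(face_pts, height, width, sigma=5.0):
    """Soft mask: ~1 inside the convex hull of face_pts, fading to 0 outside."""
    ys, xs = np.mgrid[0:height, 0:width]
    coords = np.column_stack([xs.ravel(), ys.ravel()]).astype(float)
    # find_simplex returns -1 for points outside the triangulation (its convex hull).
    inside = Delaunay(np.asarray(face_pts, float)).find_simplex(coords) >= 0
    hard = inside.reshape(height, width).astype(float)
    return gaussian_filter(hard, sigma=sigma)  # blur to soften the mask edge


def cutout(img, mask):
    """Keep the masked region of an (H, W, 3) image; everything else goes to black."""
    return img * mask[..., None]
```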

Panels: Mask, Cutout.

Merged images at cross-dissolve ratios of 0.2, 0.35, 0.5, 0.65, and 0.8:

You’ll notice there are still some dark regions, which come from the black areas of the masked cutout. This could probably be fixed with proper normalization during the merge, and if the 189 midterm weren’t on Tuesday I would do it.
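Here is one possible version of that fix. It assumes the dark regions come from blending the full image against a cutout whose pixels outside the mask are zero, which is the `naive_blend` form below. That form pulls pixels toward black wherever the mask is below 1. Weighting each pixel's blend by the mask itself instead leaves pixels outside the mask untouched. This is a standard per-pixel alpha blend offered as a sketch, not what the project did, and both function names are hypothetical.

```python
import numpy as np


def naive_blend(base, overlay, mask, ratio):
    """Cross-dissolve against a black-background cutout: darkens wherever mask < 1."""
    return (1 - ratio) * base + ratio * (overlay * mask[..., None])


def masked_blend(base, overlay, mask, ratio):
    """Per-pixel alpha blend: pixels outside the mask keep the base image unchanged."""
    alpha = ratio * mask[..., None]
    return (1 - alpha) * base + alpha * overlay
```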

I think 0.35 looks the best: we get much of the fairer skin, larger lips, and symmetrical facial features all for free. Additionally, the Dali-esque glasses have kind of faded out.

I participated in a class morph video with Roma Desai, Vanessa Lin, Jason Wang, Jenny Song, Ankit Agarwal, Won Ryu, Briana Zhang, April Sin, Tushar Sharma, Michael Wang, Ja (Thanakul) Wattanawong, William Loo, Gary Yang, and others. Gary is handling the full video, so please check out his page. My contribution is below:

Link in case the above does not work: https://giphy.com/gifs/y6Uq80QbzlkR6i6m5e

This was again a very fun project! Doing all the labeling manually got a little tedious at times, but it seems like the next project will remedy that. It’s also interesting to see how qualities such as perfect symmetry start to emerge from a large average of imperfect faces.