This project explores several face-morphing concepts and produces several "morph" animations from my face to someone else's face.

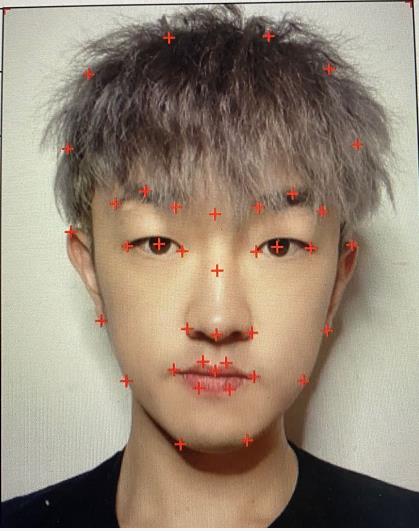

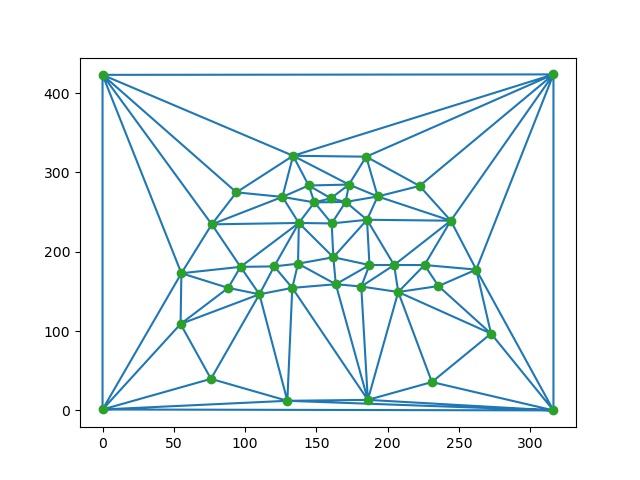

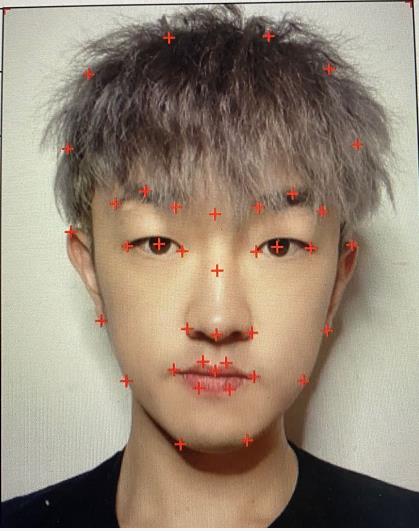

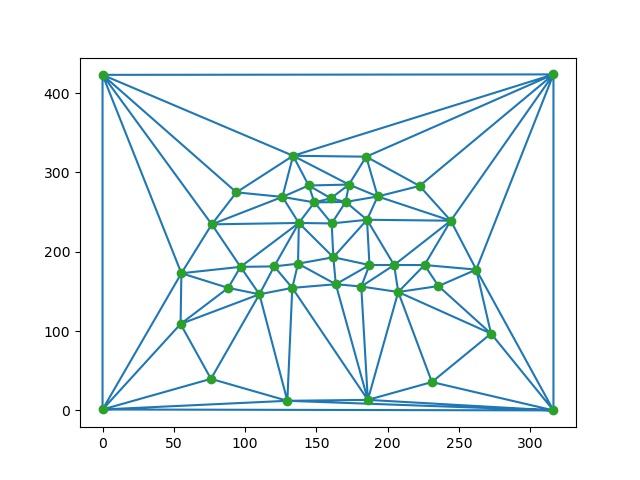

In all images I used for face morphing in the following sections, I took 42 points in total: 38 points on the face and 4 points at the corners of the image. Then, to reduce the amount of manual work, I logged each point after setting it manually the first time. An example image, its key points, and the log are shown as follows:

I used this pattern for most of the morphing below, except for the part that uses Brazil's dataset. For that one, to get the correct correspondence, I used the 46 points in the order annotated in the dataset.

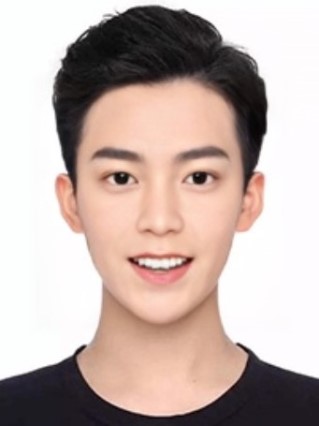

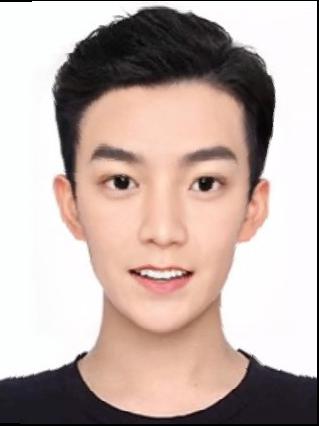

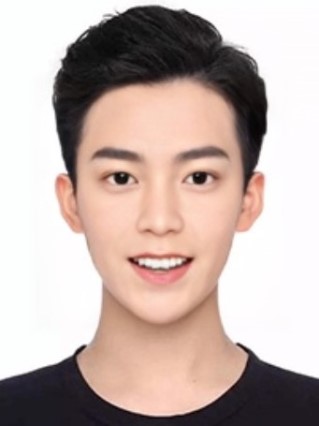
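The point logging described above can be sketched roughly as follows. This is only an illustration: the helper names (`add_corner_points`, `save_points`, `load_points`) and the JSON log format are assumptions, not the format actually used in this project.

```python
import json
import numpy as np

def add_corner_points(face_points, width, height):
    """Append the four image corners to an array of (x, y) face points."""
    corners = np.array(
        [[0, 0], [width - 1, 0], [0, height - 1], [width - 1, height - 1]],
        dtype=float,
    )
    return np.vstack([np.asarray(face_points, dtype=float), corners])

def save_points(points, path):
    """Write the points to a JSON log (hypothetical format) for later reuse."""
    with open(path, "w") as f:
        json.dump(np.asarray(points).tolist(), f)

def load_points(path):
    """Read the points back so they do not have to be clicked again."""
    with open(path) as f:
        return np.array(json.load(f), dtype=float)
```

With 38 manually selected face points, `add_corner_points` returns a 42-by-2 array, matching the point count used here.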

To compute the mid-way face, I first computed the average shape by averaging the key points of my source and target face images. Then I warped both faces into that shape and averaged their colors. The process is shown as follows:
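The building blocks of the mid-way face can be sketched as below. The sketch assumes a triangle-based piecewise affine warp, which the text above does not state explicitly. The function names (`average_shape`, `compute_affine`, `cross_dissolve`) are hypothetical, and the step that triangulates the points and resamples pixels is left out.

```python
import numpy as np

def average_shape(src_pts, dst_pts):
    """Average two (K, 2) keypoint arrays to get the mid-way shape."""
    return (np.asarray(src_pts, float) + np.asarray(dst_pts, float)) / 2.0

def compute_affine(tri_src, tri_dst):
    """Return the 3x3 matrix A such that A @ [x, y, 1] maps tri_src onto tri_dst.

    Each triangle is a (3, 2) array of (x, y) vertices. Assumes a
    triangle-based warp, which is an assumption of this sketch.
    """
    # Columns are the homogeneous vertices [x, y, 1] of each triangle.
    src = np.vstack([np.asarray(tri_src, float).T, np.ones(3)])
    dst = np.vstack([np.asarray(tri_dst, float).T, np.ones(3)])
    return dst @ np.linalg.inv(src)

def cross_dissolve(img_a, img_b, frac=0.5):
    """Blend two already-warped images; frac=0.5 averages the colors."""
    return (1 - frac) * np.asarray(img_a, float) + frac * np.asarray(img_b, float)
```

In a full pipeline, one such matrix would be computed per triangle and used to look up source pixels for every pixel inside the corresponding triangle of the average shape.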

Using warp_frac and dissolve_frac to control shape warping and cross-dissolve respectively, I generated several morph sequence videos, which show one face morphing into another. Some examples are shown as follows. They are representative because the first is an ordinary morph, the second is a morph across genders, and the third is a morph with a lot of noise (in both the subject and the background):

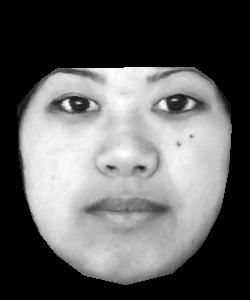
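As a rough sketch of how warp_frac and dissolve_frac could drive a morph sequence: the names `morph_frame`, `morph_sequence`, and `warp_fn` are hypothetical, the linear schedule and the default frame count are assumptions, and `warp_fn(image, from_pts, to_pts)` stands in for whatever warping routine is used.

```python
import numpy as np

def morph_frame(im1, im2, pts1, pts2, warp_frac, dissolve_frac, warp_fn):
    """Build one frame: warp both images to an intermediate shape, then blend."""
    pts1 = np.asarray(pts1, float)
    pts2 = np.asarray(pts2, float)
    # warp_frac = 0 keeps the first shape, warp_frac = 1 gives the second.
    pts_mid = (1 - warp_frac) * pts1 + warp_frac * pts2
    warped1 = warp_fn(im1, pts1, pts_mid)
    warped2 = warp_fn(im2, pts2, pts_mid)
    # dissolve_frac controls how much of the second image's color is used.
    return (1 - dissolve_frac) * warped1 + dissolve_frac * warped2

def morph_sequence(im1, im2, pts1, pts2, warp_fn, num_frames=45):
    """Sweep both fractions linearly from 0 to 1 (assumed schedule)."""
    fracs = np.linspace(0.0, 1.0, num_frames)
    return [morph_frame(im1, im2, pts1, pts2, t, t, warp_fn) for t in fracs]
```

Keeping the two fractions as separate parameters means shape and color can also be varied independently, for example by holding dissolve_frac at 0 to change only the geometry.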

In this part, I used Brazil's dataset and its pre-annotated keypoints to compute the mean face. I first parsed all keypoint positions from the log file provided in the dataset. Then I computed the average face shape over the whole population (200 people) for each of the 2 sets (normal facial expression and smile). Next I warped every face to the average shape of its set. Finally, I computed the average face of the population and got the following results:
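The two averaging steps can be sketched as follows, assuming the keypoints have already been parsed into an array and the faces have already been warped to the mean shape. The function names `mean_shape` and `mean_face` are hypothetical, and parsing the dataset's log file is not shown because its format is not described here.

```python
import numpy as np

def mean_shape(all_points):
    """Average an (N, K, 2) stack of keypoints over N people to get a (K, 2) shape."""
    return np.mean(np.asarray(all_points, dtype=float), axis=0)

def mean_face(warped_images):
    """Average the pixel values of images already warped to the same shape."""
    return np.mean(np.stack(warped_images).astype(float), axis=0)
```

The same two functions can be applied to any subset of the population, such as one expression set at a time.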

As we can see, only the face region remains in each warped image, and every face is correctly warped to the same shape. The background became black because the dataset's keypoints do not include the corners of the image. Finally, the average face I got is as follows:

Also, I split the dataset into two subsets by gender, and the gender-specific means are shown as follows:

Then I warped my face into the average geometry and vice versa. To do so, I re-labeled the keypoints of my face image so that they match the dataset's keypoint labels.

In this section, I created caricatures of my face by extrapolating from the population mean with different values of alpha. Also, to see the effect, I computed gender-specific means and extrapolated from each of them.
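One common way to write this extrapolation is sketched below. The exact convention for alpha is an assumption (here alpha = 0 gives the mean shape, alpha = 1 gives my own shape, and alpha > 1 exaggerates my differences from the mean), and the function name `caricature_shape` is hypothetical.

```python
import numpy as np

def caricature_shape(my_pts, mean_pts, alpha):
    """Move my keypoints away from (alpha > 1) or toward (alpha < 1) the mean shape."""
    my_pts = np.asarray(my_pts, dtype=float)
    mean_pts = np.asarray(mean_pts, dtype=float)
    return mean_pts + alpha * (my_pts - mean_pts)
```

The face image is then warped into the extrapolated shape, so the choice of mean (overall or gender-specific) only changes the direction in which the features are exaggerated.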

As we can see, there is no obvious difference between the results from the gender-specific means and those from the overall mean. This may be because: 1) our dataset is not large enough to be inclusive; 2) the chosen keypoints ignore some important gender features (such as hair).

As shown in the previous question, using the images in set B turns my selfie into a "smiling picture".