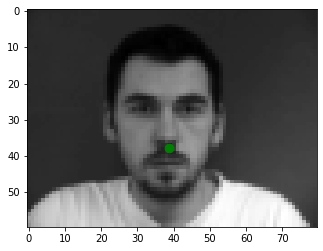

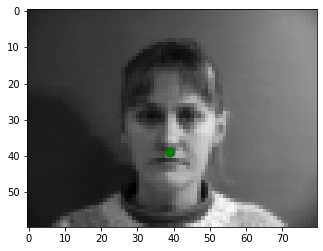

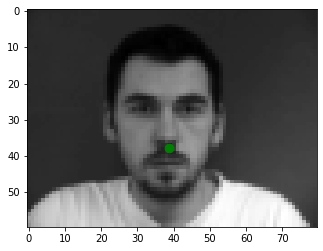

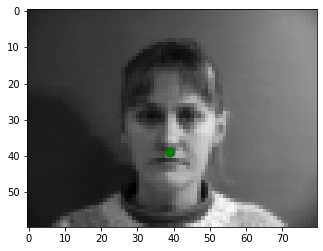

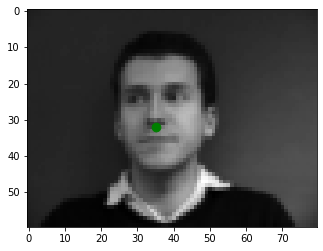

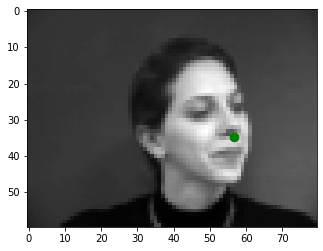

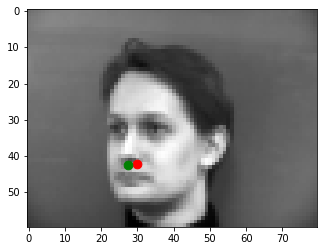

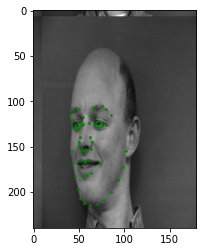

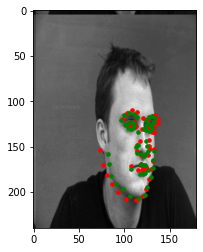

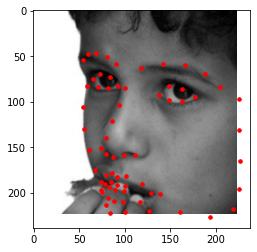

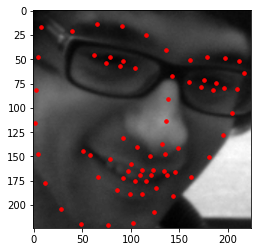

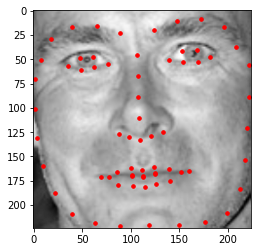

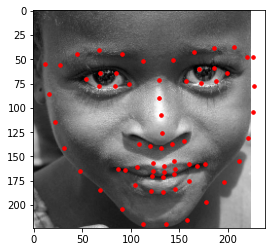

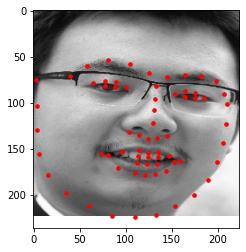

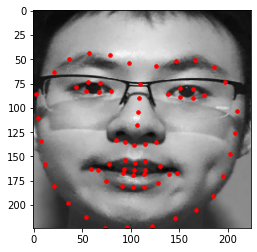

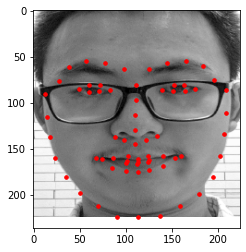

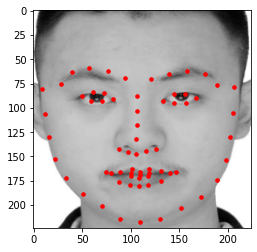

I used PyTorch's `Dataset` class and `DataLoader` to load the images and their ground-truth keypoints into the pipeline. Here are sampled images from my dataloader, visualized with their ground-truth keypoints.
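The following is a minimal sketch of such a `Dataset`/`DataLoader` setup, not the exact code used here. The class name `KeypointDataset`, the in-memory `(H, W)` image arrays, and the `[0, 1]`-normalized `(x, y)` keypoints are all assumptions for illustration. The random arrays stand in for the real data.

```python
import numpy as np
import torch
from torch.utils.data import Dataset, DataLoader


class KeypointDataset(Dataset):
    """Hypothetical dataset pairing grayscale images with (K, 2) keypoint arrays.

    Assumes images are already loaded as float arrays of shape (H, W) and
    keypoints are stored as (x, y) pairs normalized to [0, 1].
    """

    def __init__(self, images, keypoints):
        assert len(images) == len(keypoints)
        self.images = images
        self.keypoints = keypoints

    def __len__(self):
        return len(self.images)

    def __getitem__(self, idx):
        # Add a channel dimension so each image is (1, H, W), as nn.Conv2d expects
        image = torch.as_tensor(self.images[idx], dtype=torch.float32).unsqueeze(0)
        points = torch.as_tensor(self.keypoints[idx], dtype=torch.float32)
        return image, points


# Placeholder data (random), not the real dataset
rng = np.random.default_rng(0)
images = rng.random((8, 60, 80))
keypoints = rng.random((8, 1, 2))  # one keypoint (the nose) per image
loader = DataLoader(KeypointDataset(images, keypoints), batch_size=4, shuffle=True)
batch_images, batch_points = next(iter(loader))
print(batch_images.shape, batch_points.shape)
```

The default collate function stacks the per-item tensors, so each batch arrives as `(batch, 1, H, W)` images and `(batch, K, 2)` keypoints.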

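```python
import torch.nn as nn
import torch.nn.functional as F


class NoseNet(nn.Module):
    def __init__(self):
        super(NoseNet, self).__init__()
        # Three conv layers on a single-channel (grayscale) input
        self.conv1 = nn.Conv2d(1, 32, 7)
        self.conv2 = nn.Conv2d(32, 24, 5)
        self.conv3 = nn.Conv2d(24, 12, 3)
        # Fully connected head on the flattened 12 x 4 x 7 feature map
        self.fc1 = nn.Linear(12 * 4 * 7, 6 * 4 * 7)
        # Two outputs: the (x, y) coordinates of the nose keypoint
        self.fc2 = nn.Linear(6 * 4 * 7, 2)

    def forward(self, x):
        # Each block: conv -> ReLU -> 2x2 max pool
        x = F.max_pool2d(F.relu(self.conv1(x)), 2)
        x = F.max_pool2d(F.relu(self.conv2(x)), 2)
        x = F.max_pool2d(F.relu(self.conv3(x)), 2)
        x = x.view(-1, self.num_flat_features(x))
        x = F.relu(self.fc1(x))
        x = self.fc2(x)
        return x

    def num_flat_features(self, x):
        size = x.size()[1:]  # all dimensions except the batch dimension
        num_features = 1
        for s in size:
            num_features *= s
        return num_features
```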
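The `12 * 4 * 7` input size of `fc1` depends on the image resolution. The write-up doesn't state that resolution, so the sketch below assumes a 60x80 (H x W) grayscale input, which is one size consistent with a 4x7 map after the last pool. It traces the spatial size through each unpadded conv and 2x2 max pool using the floor formula PyTorch applies by default.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial size after an nn.Conv2d / F.max_pool2d layer (no dilation, floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_nosenet(h, w):
    # conv1 (7x7) -> pool, conv2 (5x5) -> pool, conv3 (3x3) -> pool
    for kernel in (7, 5, 3):
        h, w = conv_out(h, kernel), conv_out(w, kernel)
        h, w = conv_out(h, 2, stride=2), conv_out(w, 2, stride=2)
    return h, w


h, w = trace_nosenet(60, 80)  # assumed 60x80 (H x W) input
print(h, w, 12 * h * w)  # 4 7 336
```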

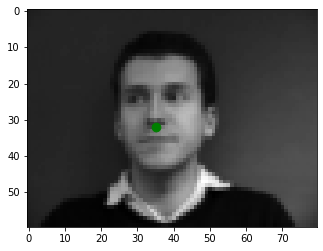

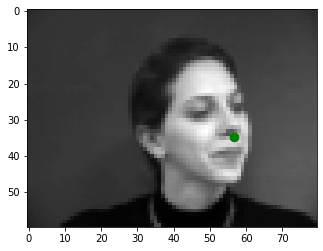

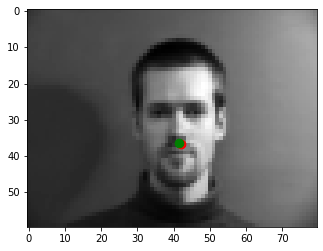

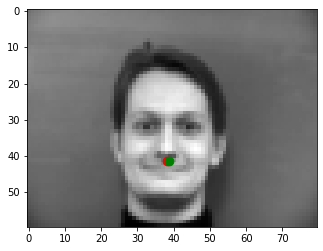

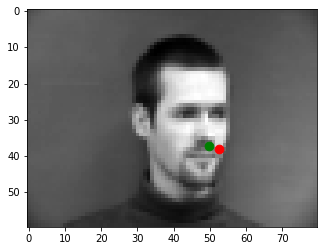

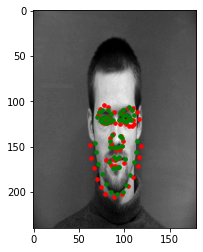

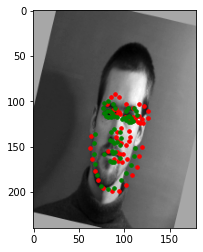

Here are two examples where the model succeeded and two where it failed. I think the model fails because it cannot reliably detect noses on angled faces: for the same person, the model consistently misses the nose when the face is turned. The two images on the left are the successful cases and the two on the right are the failures.
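One way to pick successful and failed examples systematically is to rank images by the pixel distance between the predicted and ground-truth nose. The sketch below does that. The helper names, the `threshold` cutoff, and the coordinates are all made up for illustration and are not results from this project.

```python
import numpy as np


def keypoint_errors(pred, true):
    """Euclidean distance (in pixels) between predicted and true points.

    pred, true: arrays of shape (N, 2) holding one (x, y) nose point per image.
    """
    return np.linalg.norm(np.asarray(pred) - np.asarray(true), axis=1)


def split_by_error(pred, true, threshold):
    # threshold is a hypothetical cutoff in pixels, chosen by inspection
    errors = keypoint_errors(pred, true)
    order = np.argsort(errors)  # best predictions first
    good = [int(i) for i in order if errors[i] <= threshold]
    bad = [int(i) for i in order if errors[i] > threshold]
    return good, bad, errors


# Made-up predictions and labels for four images
pred = np.array([[40.0, 30.0], [41.0, 29.0], [55.0, 35.0], [20.0, 28.0]])
true = np.array([[40.0, 31.0], [40.0, 30.0], [42.0, 31.0], [38.0, 30.0]])
good, bad, errors = split_by_error(pred, true, threshold=5.0)
print(good, bad)  # [0, 1] [2, 3]
```

Looking at the highest-error images, for example checking whether they share a head pose, is a quick way to test a hypothesis like the angled-face one above.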


```python
import torch.nn as nn
import torch.nn.functional as F


class FaceNet(nn.Module):
    def __init__(self):
        super(FaceNet, self).__init__()
        # Six 3x3 conv layers on a single-channel (grayscale) input
        self.conv1 = nn.Conv2d(1, 128, 3)
        self.conv2 = nn.Conv2d(128, 64, 3)
        self.conv3 = nn.Conv2d(64, 32, 3)
        self.conv4 = nn.Conv2d(32, 16, 3)
        self.conv5 = nn.Conv2d(16, 16, 3)
        self.conv6 = nn.Conv2d(16, 16, 3)
        # Fully connected head on the flattened 16 x 9 x 5 feature map
        self.fc1 = nn.Linear(16 * 9 * 5, 8 * 9 * 5)
        # 116 outputs: (x, y) for each of 58 keypoints
        self.fc2 = nn.Linear(8 * 9 * 5, 116)

    def forward(self, x):
        # conv1-conv4: conv -> ReLU -> 2x2 max pool
        x = F.max_pool2d(F.relu(self.conv1(x)), 2)
        x = F.max_pool2d(F.relu(self.conv2(x)), 2)
        x = F.max_pool2d(F.relu(self.conv3(x)), 2)
        x = F.max_pool2d(F.relu(self.conv4(x)), 2)
        # conv5, conv6: conv -> ReLU, no pooling
        x = F.relu(self.conv5(x))
        x = F.relu(self.conv6(x))
        x = x.view(-1, self.num_flat_features(x))
        x = F.relu(self.fc1(x))
        x = self.fc2(x)
        return x

    def num_flat_features(self, x):
        size = x.size()[1:]  # all dimensions except the batch dimension
        num_features = 1
        for s in size:
            num_features *= s
        return num_features
```
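As with NoseNet, the `16 * 9 * 5` size of `fc1` is tied to the input resolution, and the 116 outputs of `fc2` correspond to 58 (x, y) keypoints. The resolution isn't stated, so this sketch assumes a 240x180 (H x W) grayscale input. That is one size that produces a 9x5 map after `conv6`. The code traces it through four conv+pool blocks followed by two unpooled convs.

```python
def out_size(size, kernel, stride=1):
    """Spatial size after an unpadded conv or pool (floor mode)."""
    return (size - kernel) // stride + 1


def trace_facenet(h, w):
    # conv1-conv4: 3x3 conv followed by a 2x2 max pool
    for _ in range(4):
        h, w = out_size(h, 3), out_size(w, 3)
        h, w = out_size(h, 2, 2), out_size(w, 2, 2)
    # conv5, conv6: 3x3 conv with no pooling
    for _ in range(2):
        h, w = out_size(h, 3), out_size(w, 3)
    return h, w


h, w = trace_facenet(240, 180)  # assumed 240x180 (H x W) input
print(h, w, 16 * h * w)  # 9 5 720
```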

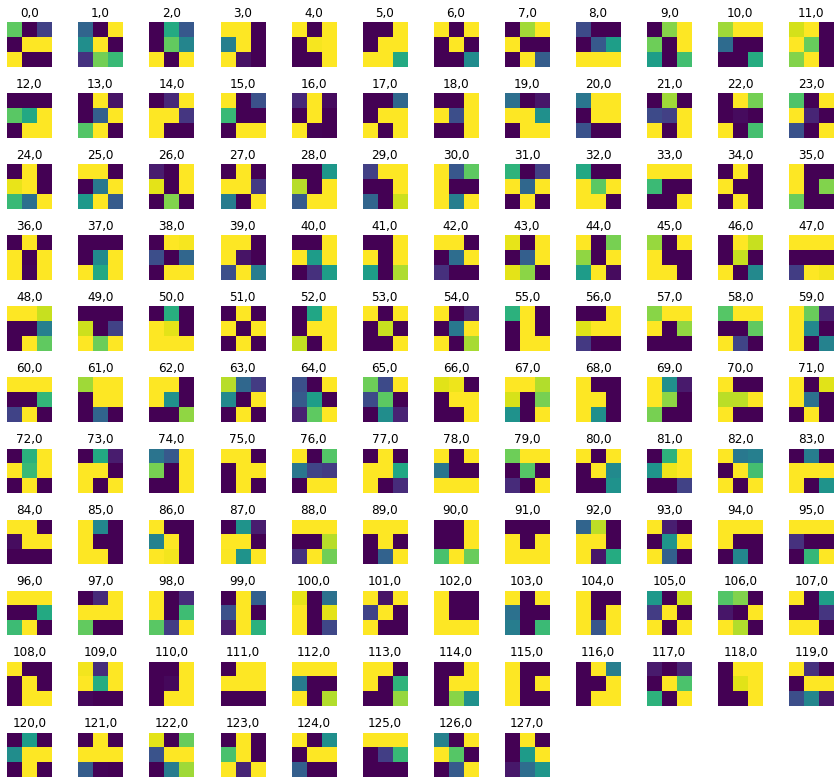

Here are the learned filters.
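Filter images like these are usually made by tiling a conv layer's weights into a grid. The sketch below does this for a first-layer `nn.Conv2d` with one input channel, normalizing each filter to [0, 1] on its own. The helper name `filters_to_grid` and the choice of layer are assumptions. The layer here is randomly initialized with the same shape as FaceNet's `conv1`, not the trained weights.

```python
import torch
import torch.nn as nn


def filters_to_grid(conv, ncols=8, pad=1):
    """Tile the filters of a single-input-channel Conv2d into one 2D image.

    Each (k, k) filter is min-max normalized to [0, 1] separately; the
    padding between filters is filled with 1 (white).
    """
    w = conv.weight.detach()[:, 0]  # (out_channels, k, k)
    n, k, _ = w.shape
    flat = w.reshape(n, -1)
    lo = flat.min(dim=1, keepdim=True).values
    hi = flat.max(dim=1, keepdim=True).values
    w = ((flat - lo) / (hi - lo + 1e-8)).reshape(n, k, k)
    nrows = (n + ncols - 1) // ncols
    grid = torch.ones(nrows * (k + pad) - pad, ncols * (k + pad) - pad)
    for i in range(n):
        r, c = divmod(i, ncols)
        top, left = r * (k + pad), c * (k + pad)
        grid[top:top + k, left:left + k] = w[i]
    return grid


conv1 = nn.Conv2d(1, 128, 3)  # same shape as FaceNet's conv1, untrained here
grid = filters_to_grid(conv1, ncols=16)
print(grid.shape)  # torch.Size([31, 63])
# To display: matplotlib.pyplot.imshow(grid.numpy(), cmap="gray")
```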

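```python
import torch.nn as nn
from torchvision import models

net = models.resnet18()
# Accept single-channel (grayscale) input instead of 3-channel RGB
net.conv1 = nn.Conv2d(1, 64, kernel_size=7, stride=2, padding=3, bias=False)
# 136 outputs: (x, y) for each of 68 keypoints
net.fc = nn.Linear(512, 136)
```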
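The modified ResNet returns a flat vector of 136 numbers per image. This sketch turns those into 68 (x, y) pixel coordinates. It assumes the outputs are interleaved as `x0, y0, x1, y1, ...` and normalized to [0, 1] relative to the image width and height. Both are assumptions, so adjust the reshape and scaling if training used a different layout or normalization. `outputs_to_keypoints` is a hypothetical helper.

```python
import numpy as np


def outputs_to_keypoints(outputs, width, height):
    """Reshape (N, 136) network outputs into (N, 68, 2) pixel coordinates.

    Assumes interleaved (x, y) pairs normalized to [0, 1].
    """
    points = np.asarray(outputs, dtype=float).reshape(-1, 68, 2)
    return points * np.array([width, height])  # scale x by width, y by height


outputs = np.full((2, 136), 0.5)  # placeholder predictions
points = outputs_to_keypoints(outputs, width=224, height=224)
print(points.shape, points[0, 0])
```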

I incorporated the keypoint detection into Project 3's morph function and generated a video that morphs between the four images of myself shown in Part 3 above. Here is the link to the video if the embed below is not loading. This works by changing Project 3's `load_points` function so that it uses the model trained in Part 3 to detect the keypoints and return them.
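Below is a minimal sketch of what such a `load_points` replacement could look like. The name `load_points_from_model`, the `predict_fn` wrapper around the trained network, the [0, 1] coordinate normalization, and the optional image corners are all assumptions for illustration. They are not Project 3's actual code. Corner points are a common addition for triangulation-based morphing and can be dropped if the morph code already handles the image border.

```python
import numpy as np


def load_points_from_model(image, predict_fn, add_corners=True):
    """Hypothetical stand-in for a morphing pipeline's load_points().

    image:      (H, W) or (H, W, 3) array.
    predict_fn: assumed callable that takes a grayscale (H, W) image and
                returns 68 (x, y) keypoints normalized to [0, 1].
    """
    h, w = image.shape[:2]
    gray = image.mean(axis=2) if image.ndim == 3 else image
    points = np.asarray(predict_fn(gray), dtype=float).reshape(-1, 2)
    points = points * np.array([w, h])  # normalized -> pixel coordinates
    if add_corners:
        # Optional image corners so the triangulation covers the whole frame
        corners = np.array([[0, 0], [w - 1, 0], [0, h - 1], [w - 1, h - 1]], dtype=float)
        points = np.vstack([points, corners])
    return points


dummy_predict = lambda gray: np.full((68, 2), 0.5)  # placeholder for the real model
pts = load_points_from_model(np.zeros((100, 80, 3)), dummy_predict)
print(pts.shape)  # (72, 2)
```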

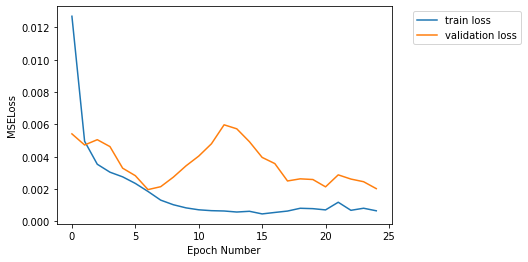

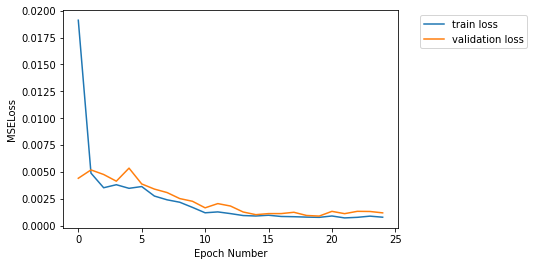

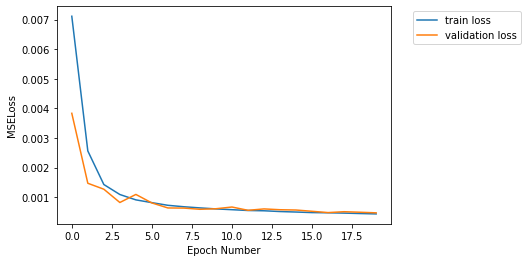

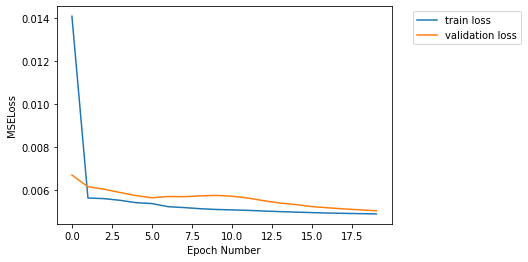

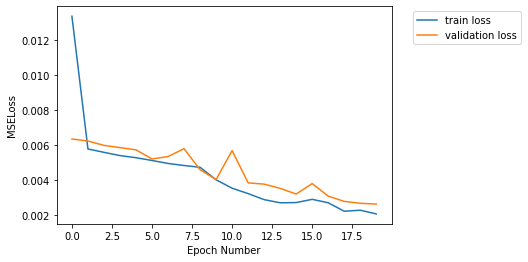

I trained the Part 2 network on 300 images from the iBUG dataset and validated on 50, once with anti-aliased max pooling and once without. With anti-aliased max pooling, training tends to be smoother but slower. Below are the two training/validation loss plots: the left one is trained with anti-aliased max pooling and the right one without. Both plots show training and validation loss over 20 epochs; the x-axis ticks were set automatically to reduce clutter.
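The write-up doesn't say which anti-aliased max pool implementation was used. The sketch below shows one common formulation, the "MaxBlurPool" idea from Zhang (2019), "Making Convolutional Networks Shift-Invariant Again". It takes a dense 2x2 max with stride 1, then applies a fixed 3x3 binomial blur with stride 2. With reflection padding of 1, the output size matches `F.max_pool2d(x, 2)`, so it can stand in for the pooling calls in FaceNet. The class name `MaxBlurPool2d` is our own.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class MaxBlurPool2d(nn.Module):
    """Anti-aliased 2x max pooling: dense max (stride 1), then blur + subsample."""

    def __init__(self, channels):
        super().__init__()
        k = torch.tensor([1.0, 2.0, 1.0])
        kernel = torch.outer(k, k)
        kernel = kernel / kernel.sum()  # normalized 3x3 binomial low-pass filter
        # One copy of the blur kernel per channel, for a depthwise convolution
        self.register_buffer("kernel", kernel.expand(channels, 1, 3, 3).clone())
        self.channels = channels

    def forward(self, x):
        x = F.max_pool2d(x, kernel_size=2, stride=1)  # max without subsampling
        x = F.pad(x, (1, 1, 1, 1), mode="reflect")
        # Blur and subsample by 2 in one depthwise strided convolution
        return F.conv2d(x, self.kernel, stride=2, groups=self.channels)


pool = MaxBlurPool2d(128)
x = torch.randn(2, 128, 238, 178)  # e.g. after FaceNet's conv1 on an assumed 240x180 input
y = pool(x)
print(y.shape)  # torch.Size([2, 128, 119, 89])
```

To use it in FaceNet, one would create one pool per block in `__init__` (for example `self.pool1 = MaxBlurPool2d(128)`) and replace `F.max_pool2d(F.relu(self.conv1(x)), 2)` with `self.pool1(F.relu(self.conv1(x)))`. The extra blur convolution adds compute, which is one plausible reason for slower training.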
