Facial Keypoint Detection with Neural Networks

By Ruochen(Chloe) Liu

Overview

In this project, I use neural networks to automatically detect facial keypoints, with PyTorch as the deep learning framework.

Part 1: Nose Tip Detection

In the first part, I use the IMM Face Database to train an initial toy model for nose tip detection. I first define a custom dataset (torch.utils.data.Dataset), writing my own __init__ and __getitem__ methods to initialize and access the data. I then wrap it in a data loader (torch.utils.data.DataLoader).
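The dataset-plus-loader pattern described above can be sketched roughly as follows. This is a minimal illustration, not my actual code: the class name `NoseTipDataset`, the 60x80 image size, and the random stand-in tensors are all assumptions made so the example runs without the IMM files.

```python
import torch
from torch.utils.data import Dataset, DataLoader


class NoseTipDataset(Dataset):
    """Hypothetical dataset pairing each image with one (x, y) nose-tip keypoint."""

    def __init__(self, images, keypoints, transform=None):
        self.images = images        # indexable collection of C x H x W arrays
        self.keypoints = keypoints  # indexable collection of (x, y) pairs
        self.transform = transform  # optional callable applied to the image

    def __len__(self):
        return len(self.images)

    def __getitem__(self, idx):
        image = torch.as_tensor(self.images[idx], dtype=torch.float32)
        point = torch.as_tensor(self.keypoints[idx], dtype=torch.float32)
        if self.transform is not None:
            image = self.transform(image)
        return image, point


# Random stand-in data (assumed shapes), used only to show the mechanics.
images = torch.rand(8, 1, 60, 80)
keypoints = torch.rand(8, 2)

dataset = NoseTipDataset(images, keypoints)
loader = DataLoader(dataset, batch_size=4, shuffle=True)
batch_images, batch_points = next(iter(loader))
```

Each batch drawn from `loader` is a pair of tensors: the stacked images and the matching keypoints.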

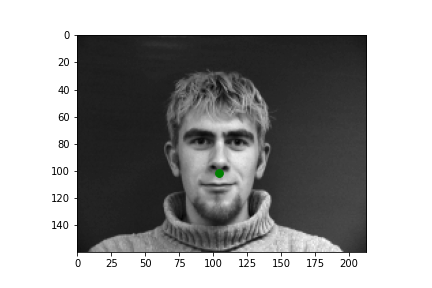

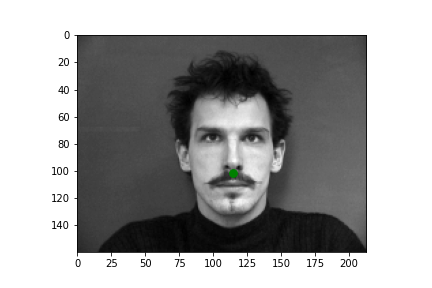

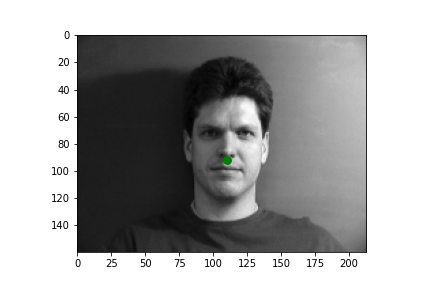

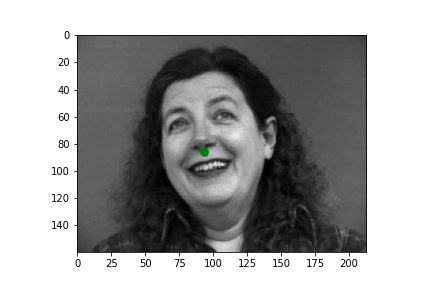

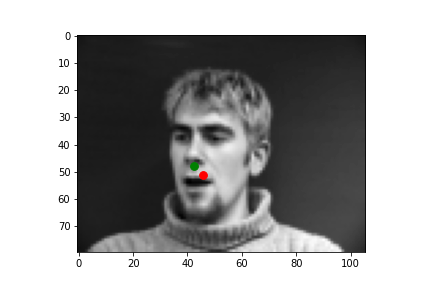

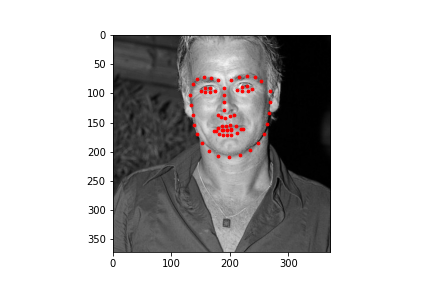

Below are some sample images with corresponding ground-truth keypoints.

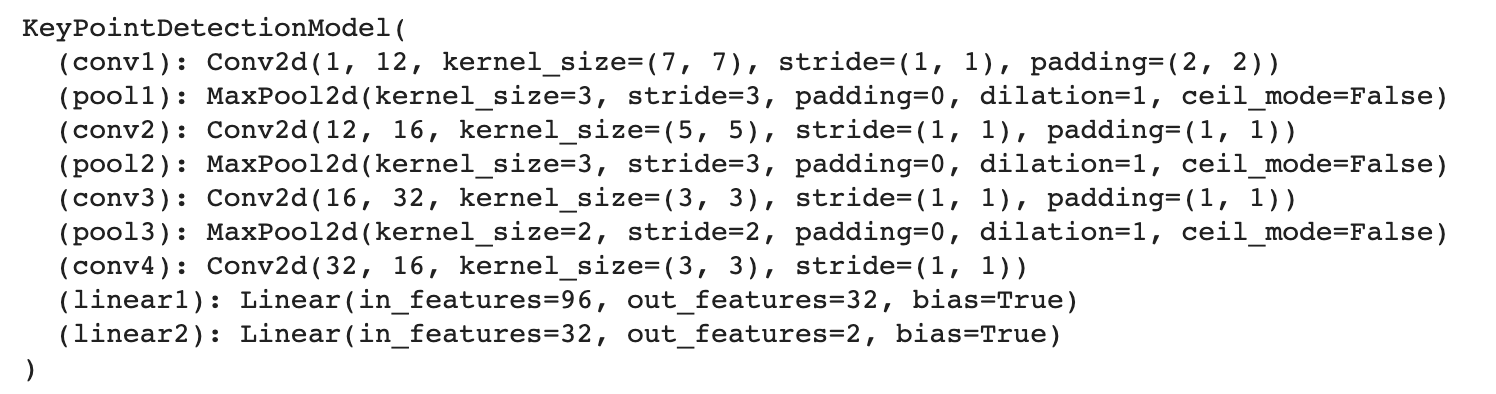

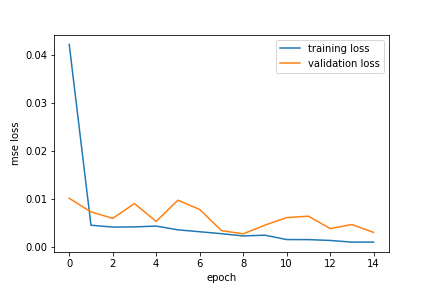

I then define my own CNN model with 3 convolution layers, each followed by a max pooling layer and a rectified linear unit (ReLU), and 2 fully connected layers at the end. I use the mean squared error loss (torch.nn.MSELoss) as the prediction loss and Adam (torch.optim.Adam) as the optimizer with a learning rate of 1e-3. I train my model for 15 epochs.
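As a rough sketch of a network with this shape, the block below builds 3 convolution layers (each followed by max pooling and ReLU) and 2 fully connected layers, then runs one optimization step with MSELoss and Adam at a learning rate of 1e-3. The channel counts, kernel sizes, hidden width, and the 1x60x80 input size are assumptions for illustration; the exact values I used are in the architecture figure.

```python
import torch
import torch.nn as nn


class NoseNet(nn.Module):
    """Hypothetical 3-conv, 2-FC network predicting one (x, y) keypoint."""

    def __init__(self):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(1, 12, kernel_size=3, padding=1),
            nn.MaxPool2d(2),   # 60x80 -> 30x40
            nn.ReLU(),
            nn.Conv2d(12, 24, kernel_size=3, padding=1),
            nn.MaxPool2d(2),   # 30x40 -> 15x20
            nn.ReLU(),
            nn.Conv2d(24, 32, kernel_size=3, padding=1),
            nn.MaxPool2d(2),   # 15x20 -> 7x10
            nn.ReLU(),
        )
        self.regressor = nn.Sequential(
            nn.Flatten(),
            nn.Linear(32 * 7 * 10, 128),
            nn.ReLU(),
            nn.Linear(128, 2),  # (x, y) of the nose tip
        )

    def forward(self, x):
        return self.regressor(self.features(x))


model = NoseNet()
criterion = nn.MSELoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)

# One training step on random stand-in data (assumed shapes).
images = torch.rand(4, 1, 60, 80)
targets = torch.rand(4, 2)

optimizer.zero_grad()
predictions = model(images)
loss = criterion(predictions, targets)
loss.backward()
optimizer.step()
```

A full training run would repeat this step over every batch of the data loader for each of the 15 epochs.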

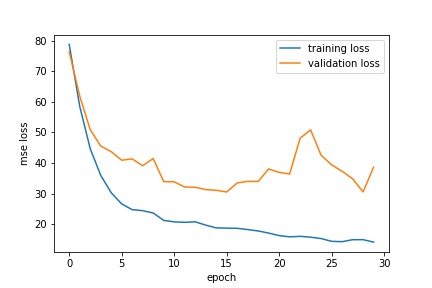

Below are the details of my network and the training and validation curves.

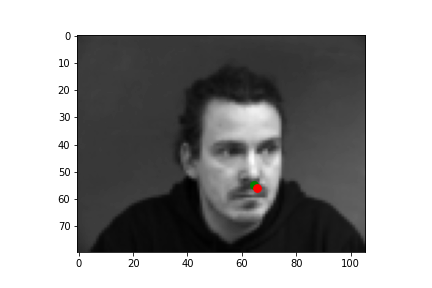

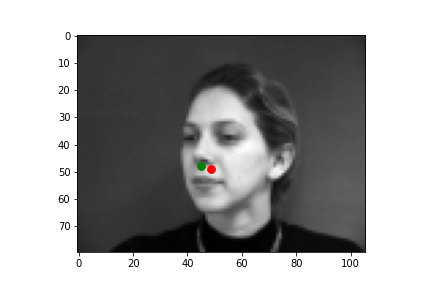

Here are some of the images and the predictions of my CNN model. The predictions in the first row are quite successful, while the ones in the second row are poor. This is probably because the dataset is relatively small: if the person faces forward in most of the training images, the model does not generalize well to images where the face is turned in a different direction, and so it gives poor results.

Part 2: Full Facial Keypoints Detection

In this section I move on to detecting all 58 facial keypoints/landmarks in the same dataset. The procedure is much the same as in the previous part, but here I also apply data augmentation: I randomly change the brightness and saturation of the image (torchvision.transforms.ColorJitter) and randomly rotate the face by an angle between -15 and 15 degrees, updating the keypoints to reflect the rotation. This helps prevent the model from overfitting.
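The keypoint update for a rotation can be sketched as below. This is an illustrative NumPy version, assuming keypoints are stored as (x, y) pixel coordinates and that the image is rotated counter-clockwise about a known center (the convention used by torchvision.transforms.functional.rotate for positive angles). The helper name `rotate_keypoints` and the sample coordinates are hypothetical, and the exact pixel-center convention may differ slightly from the one the image rotation uses.

```python
import numpy as np


def rotate_keypoints(points, angle_deg, center):
    """Rotate (x, y) pixel keypoints counter-clockwise (as displayed) about center."""
    theta = np.deg2rad(angle_deg)
    cos_t, sin_t = np.cos(theta), np.sin(theta)
    offsets = np.asarray(points, dtype=np.float64) - np.asarray(center, dtype=np.float64)
    dx, dy = offsets[:, 0], offsets[:, 1]
    # The image y axis points down, so a counter-clockwise turn on screen
    # has the opposite sine signs from the usual math-convention rotation.
    new_x = center[0] + dx * cos_t + dy * sin_t
    new_y = center[1] - dx * sin_t + dy * cos_t
    return np.stack([new_x, new_y], axis=1)


# Draw one random angle in [-15, 15] degrees and apply it to sample keypoints.
rng = np.random.default_rng(0)
angle = rng.uniform(-15.0, 15.0)
keypoints = np.array([[30.0, 20.0], [50.0, 40.0]])
center = (40.0, 30.0)
rotated = rotate_keypoints(keypoints, angle, center)
```

The same `angle` would then be passed to the image rotation so that the image and its keypoints stay aligned. Color jitter needs no such update because it does not move any pixels.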

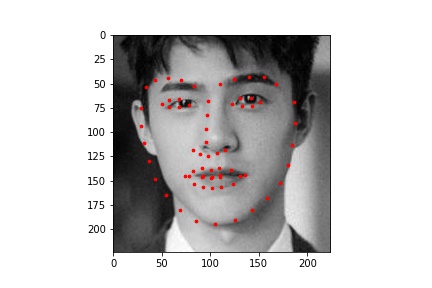

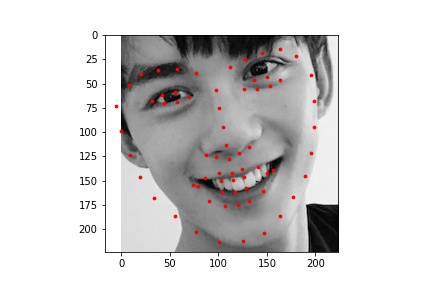

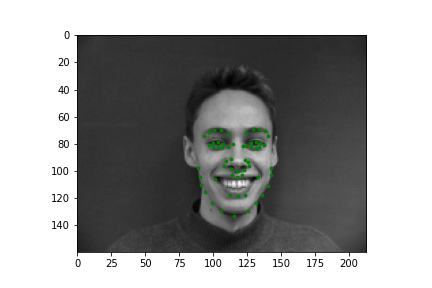

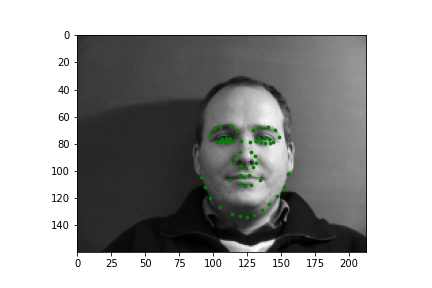

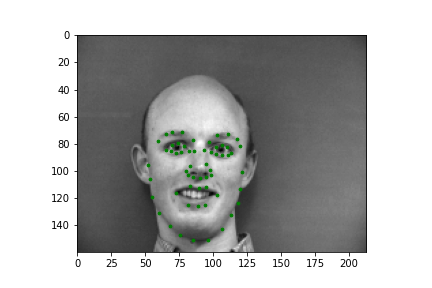

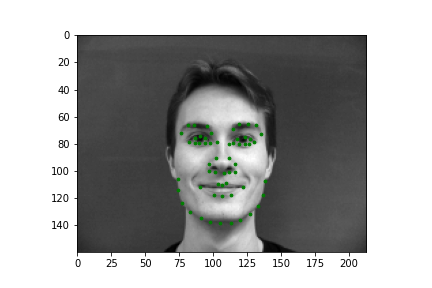

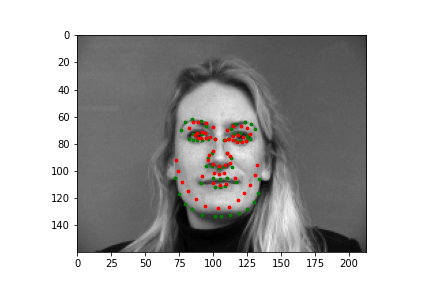

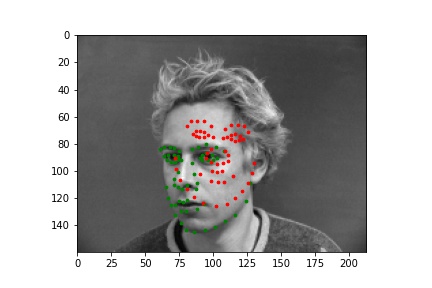

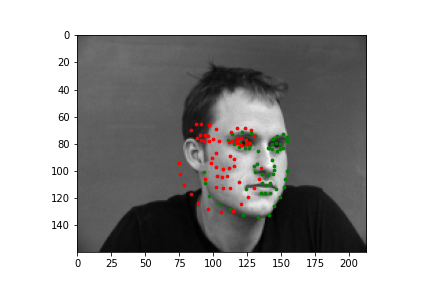

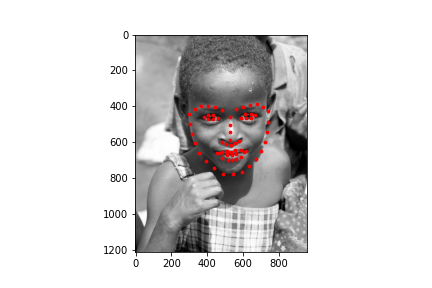

Below are some sample images with corresponding ground-truth keypoints.

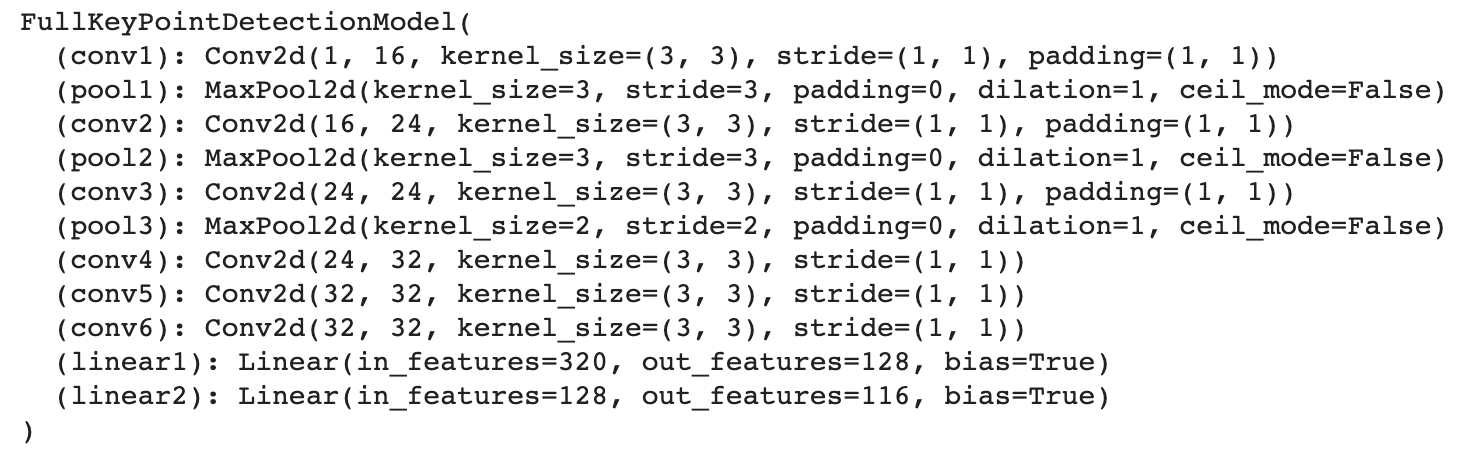

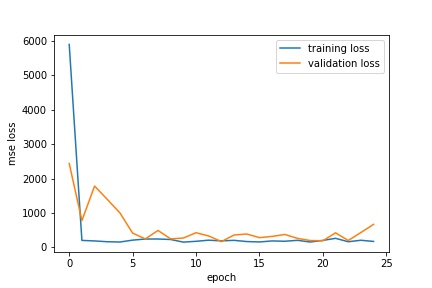

In this part, I define a more complex CNN model with 4 convolution layers, 3 max pooling layers, and 2 fully connected layers. Again, I use the mean squared error loss (torch.nn.MSELoss) as the prediction loss and Adam (torch.optim.Adam) with a learning rate of 1e-3. I train the model for 25 epochs. The details of the model architecture and the training curves are as follows:

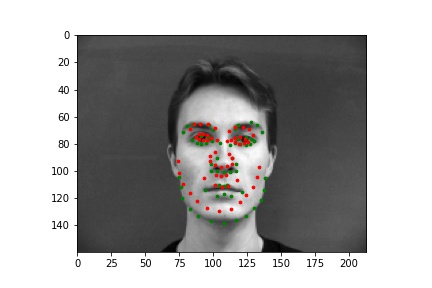

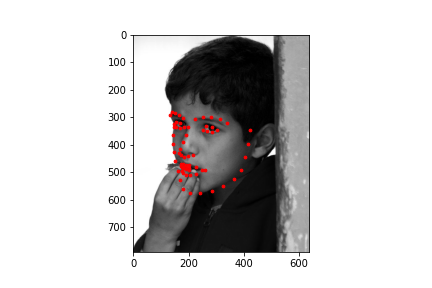

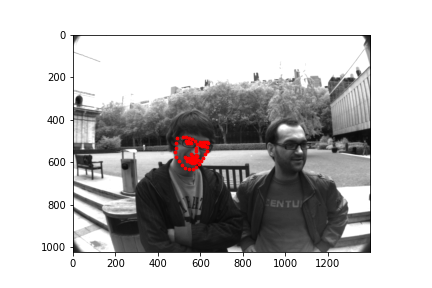

Here are some sample images and my model's predictions. The first two look good, while the two in the second row are off. Again, this is probably because there is not enough data: if most faces in the training set are centered and facing straight ahead, my model may struggle on test images that do not follow this pattern.

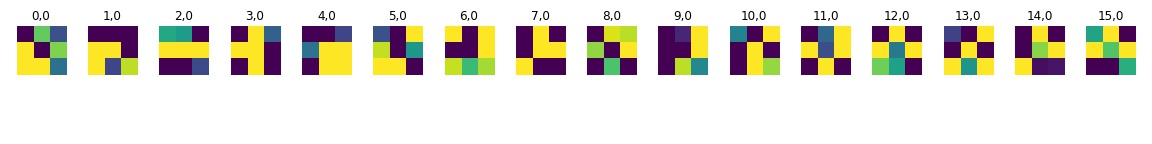

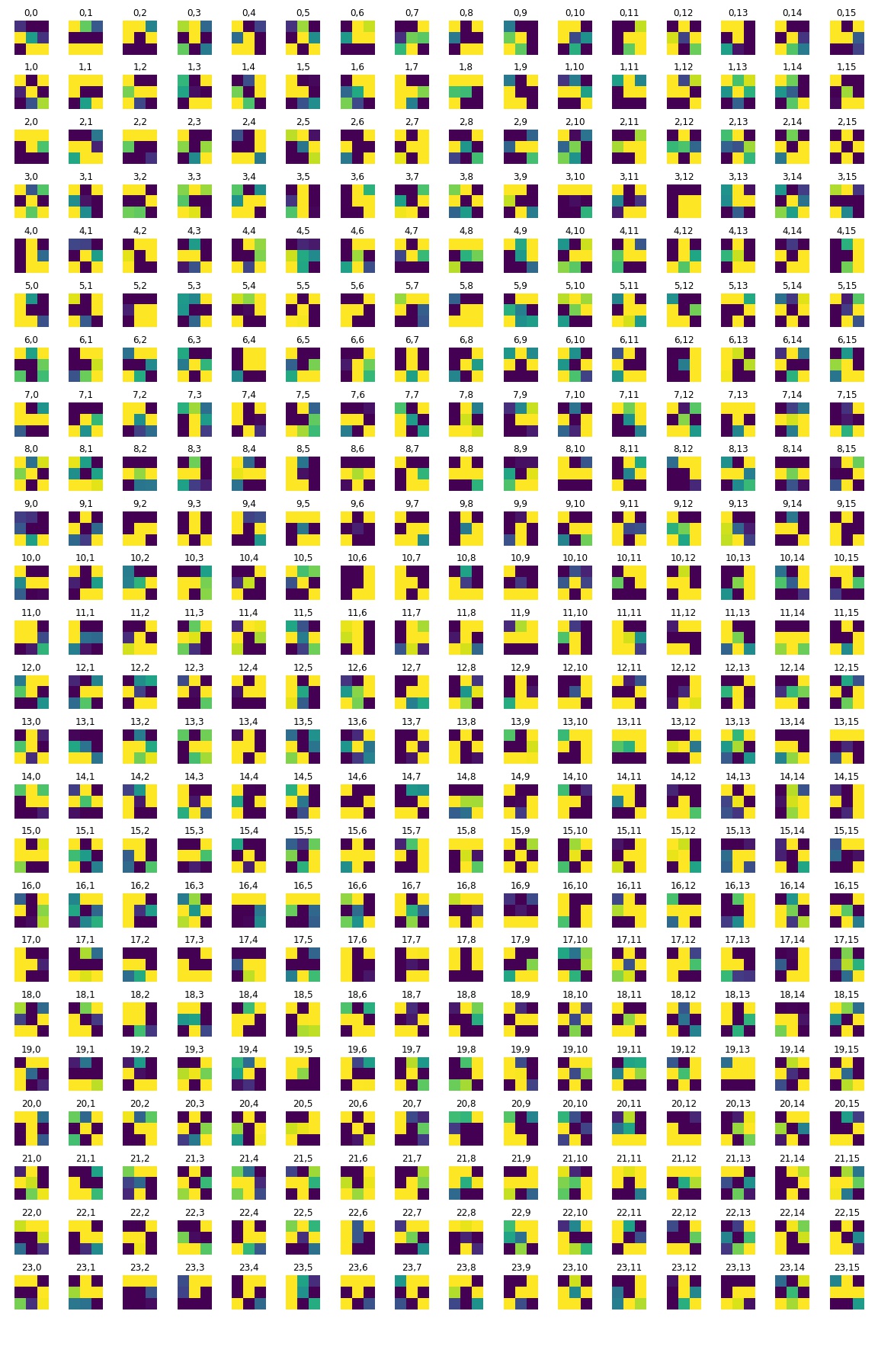

Below is a visualization of the learned filters for the first two convolution layers in my model.

Part 3: Train With Larger Dataset

For this part, I use a larger dataset, specifically the ibug face in the wild dataset, to train a facial keypoint detector. I perform the same data augmentation as in the previous part and use the pretrained ResNet18 model. I change the dimensions of the first and last layers of this model to fit my training set and leave all other parameters unchanged. I train it for 25 epochs with a learning rate of 0.005. During training, I keep track of the lowest epoch loss and save the best model. I then evaluate my model on a test set; according to Kaggle, the score of my predictions is 10.64230.

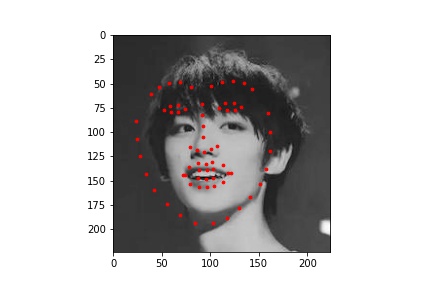

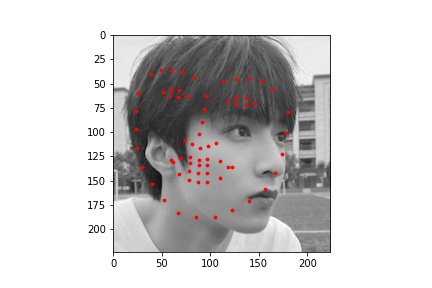

Here are some sample images from the test set and my model's predictions.

Finally, I picked some images from my own collection and tried my model on them. The model works quite well on the first two images but not as well on the last two. This is probably because I cropped the first two images in exactly the same way as the IMM training set, while for the last two I left more background, which may make it harder for the model to predict correctly.