The goal of this project is to create a neural network that can detect facial keypoints. We do this by constructing convolutional neural networks (CNNs) and training them on sets of labeled facial data.

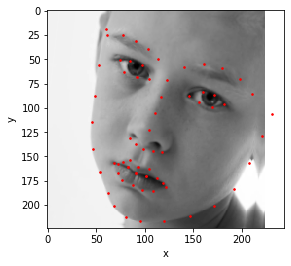

We first approach the simpler problem of building a nose detector. We need to construct a data pipeline that allows for efficient batch training and preprocessing. To accomplish this, we make use of PyTorch's Dataset and DataLoader classes. For preprocessing, I converted all images to grayscale and converted the pixel values from uint8 in [0, 255] to normalized floats between -0.5 and 0.5. Afterwards, all images were downsampled to 80x60. Below I visualize some samples from this dataloader, with the images and their keypoints shown.
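The preprocessing steps above can be sketched as a small PyTorch Dataset. This is only an illustration under my own assumptions: the class name `NoseDataset`, the in-memory list of RGB arrays, the channel-average grayscale conversion, bilinear resizing, and keypoints stored as relative (x, y) coordinates are all hypothetical choices, not necessarily what the project code does.

```python
import numpy as np
import torch
import torch.nn.functional as F
from torch.utils.data import Dataset, DataLoader


class NoseDataset(Dataset):
    """Hypothetical in-memory dataset: RGB uint8 images plus one nose keypoint each."""

    def __init__(self, images, noses, out_hw=(60, 80)):
        # images: list of (H, W, 3) uint8 arrays
        # noses: list of (x, y) pairs in relative [0, 1] coordinates (assumption)
        self.images = images
        self.noses = noses
        self.out_hw = out_hw  # an 80x60 image, stored as (height, width)

    def __len__(self):
        return len(self.images)

    def __getitem__(self, idx):
        rgb = torch.from_numpy(self.images[idx]).float()
        gray = rgb.mean(dim=2)        # channel average as a simple grayscale
        gray = gray / 255.0 - 0.5     # [0, 255] -> [-0.5, 0.5]
        gray = gray[None, None]       # (1, 1, H, W), the layout interpolate expects
        small = F.interpolate(gray, size=self.out_hw, mode="bilinear",
                              align_corners=False)[0]
        nose = torch.tensor(self.noses[idx], dtype=torch.float32)
        return small, nose            # (1, 60, 80) image and (2,) keypoint


# Usage with random stand-in images (not real data)
images = [np.random.randint(0, 256, (480, 640, 3), dtype=np.uint8) for _ in range(8)]
noses = [(0.5, 0.5)] * 8
loader = DataLoader(NoseDataset(images, noses), batch_size=4, shuffle=True)
batch_images, batch_noses = next(iter(loader))
```

Because the keypoints in this sketch are relative coordinates, they do not need to change when the image is downsampled.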

For my network I used 3 convolutional layers, each followed by a ReLU and then a MaxPool. The network ends with two fully connected layers, the first of which is followed by a ReLU. The flow of the network is as follows:

input -> Conv2d(1, 12, kernel_size=(7, 7), stride=(1, 1)) -> ReLU -> MaxPool (2x2)

-> Conv2d(12, 24, kernel_size=(5, 5), stride=(1, 1)) -> ReLU -> MaxPool (2x2)

-> Conv2d(24, 36, kernel_size=(3, 3), stride=(1, 1)) -> ReLU -> MaxPool (2x2)

-> FullyConnected(1008, 800) -> ReLU

-> FullyConnected(800, 2) -> output
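The flow above maps fairly directly onto a PyTorch module. The sketch below is my own transcription of the listed layers; the class name `NoseNet` and the split into `features` and `head` are hypothetical, and I assume the input is a batch of 1-channel images of height 60 and width 80, which is what makes the flattened size come out to 1008.

```python
import torch
import torch.nn as nn


class NoseNet(nn.Module):
    """Sketch of the nose detector: 3 conv blocks followed by 2 fully connected layers."""

    def __init__(self):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(1, 12, kernel_size=7), nn.ReLU(), nn.MaxPool2d(2),
            nn.Conv2d(12, 24, kernel_size=5), nn.ReLU(), nn.MaxPool2d(2),
            nn.Conv2d(24, 36, kernel_size=3), nn.ReLU(), nn.MaxPool2d(2),
        )
        self.head = nn.Sequential(
            nn.Flatten(),
            nn.Linear(1008, 800), nn.ReLU(),  # 1008 = 36 channels * 4 * 7
            nn.Linear(800, 2),                # (x, y) of the nose
        )

    def forward(self, x):
        return self.head(self.features(x))


net = NoseNet()
dummy = torch.zeros(4, 1, 60, 80)          # batch of 4 grayscale 80x60 images
feat_shape = net.features(dummy).shape     # feature map just before flattening
out = net(dummy)
```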

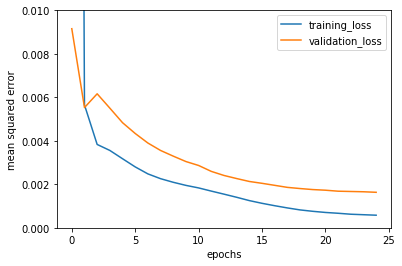

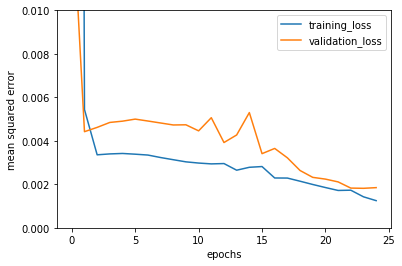

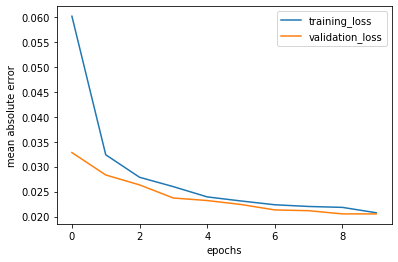

The dataset was split into training and validation sets, with the first 32 persons in the dataset used for training and the last 8 used for validation. I trained using Adam with a learning rate of 1e-4 and a batch size of 4. The training and validation loss at each epoch is shown below.
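A rough sketch of this split and training setup is given here. Several things are assumptions of mine and not stated in the writeup: the MSE loss, the 6 images per person, the random stand-in tensors, and the tiny linear stand-in model (used only so the sketch runs quickly on its own).

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Random stand-in data: 40 persons, 6 images each (per-person count is an assumption)
n_persons, per_person = 40, 6
images = torch.rand(n_persons * per_person, 1, 60, 80) - 0.5
noses = torch.rand(n_persons * per_person, 2)
person_id = torch.arange(n_persons).repeat_interleave(per_person)

train_mask = person_id < 32   # first 32 persons for training
val_mask = ~train_mask        # last 8 persons for validation
train_loader = DataLoader(TensorDataset(images[train_mask], noses[train_mask]),
                          batch_size=4, shuffle=True)
val_loader = DataLoader(TensorDataset(images[val_mask], noses[val_mask]),
                        batch_size=4)

model = nn.Sequential(nn.Flatten(), nn.Linear(60 * 80, 2))  # stand-in, not the real net
criterion = nn.MSELoss()                                    # assumed loss
optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)

train_losses, val_losses = [], []
for epoch in range(2):
    model.train()
    running = 0.0
    for x, y in train_loader:
        optimizer.zero_grad()
        loss = criterion(model(x), y)
        loss.backward()
        optimizer.step()
        running += loss.item() * x.size(0)
    train_losses.append(running / len(train_loader.dataset))

    model.eval()
    running = 0.0
    with torch.no_grad():  # no gradients needed for validation
        for x, y in val_loader:
            running += criterion(model(x), y).item() * x.size(0)
    val_losses.append(running / len(val_loader.dataset))
```

Splitting by person, not by image, keeps every photo of a validation subject out of the training set.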

Below are two images in which the nose detector worked relatively well. Red represents the ground truth point, while green is the output of my neural network.

Below, my nose detector fails because the subjects' faces are tilted or rotated. This is consistent with the net predicting well when people face the camera directly. The training dataset is biased towards images where the subject is directly facing the camera, so it makes sense that the net doesn't handle the two cases below well.

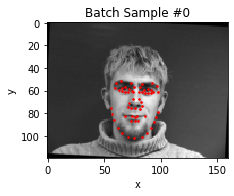

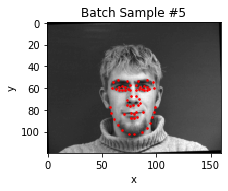

We now turn our attention to full facial keypoint detection. The process is similar to the previous part, except that we only downsampled by a factor of 4 and augmented our dataset by adding rotated copies (between +/- 3 degrees) of the source data to the dataset. Some examples from the augmented dataset are shown below.
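The rotation augmentation has to move the keypoints together with the pixels. Here is a minimal NumPy sketch of that idea, assuming keypoints in pixel (x, y) coordinates, rotation about the image center, and nearest-neighbor sampling. The function name `rotate_sample` and these choices are mine for illustration and may differ from what the project code does.

```python
import numpy as np


def rotate_sample(image, keypoints, degrees):
    """Rotate a (H, W) image and its (N, 2) pixel keypoints (x, y) about the image center."""
    h, w = image.shape
    center = np.array([(w - 1) / 2.0, (h - 1) / 2.0])
    t = np.deg2rad(degrees)
    c, s = np.cos(t), np.sin(t)

    # Forward map for keypoints: p' = R (p - center) + center
    rot = np.array([[c, -s], [s, c]])
    new_keypoints = (keypoints - center) @ rot.T + center

    # Inverse map for pixels: each output pixel reads the input at R^T (p' - center) + center
    ys, xs = np.mgrid[0:h, 0:w]
    dx, dy = xs - center[0], ys - center[1]
    src_x = np.rint(c * dx + s * dy + center[0]).astype(int)
    src_y = np.rint(-s * dx + c * dy + center[1]).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)

    out = np.zeros_like(image)  # pixels that map outside the source stay 0
    out[valid] = image[src_y[valid], src_x[valid]]
    return out, new_keypoints


# Usage: keep the original sample and add a few rotated copies
rng = np.random.default_rng(0)
image = np.zeros((120, 160), dtype=np.float32)
keypoints = np.array([[100.0, 40.0]])
angles = rng.uniform(-3.0, 3.0, size=3)
augmented = [(image, keypoints)] + [rotate_sample(image, keypoints, a) for a in angles]
```

Using the same rotation matrix for the keypoints and its transpose for the pixel lookup keeps the two consistent, whichever direction a positive angle ends up meaning on screen.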

For this task, I used 6 convolutional layers, each with a ReLU activation, with a MaxPool after every other layer, and ended with 3 fully connected layers. The particulars of the architecture are outlined below:

input -> Conv2d(1, 12, kernel_size=(7, 7), stride=(1, 1)) -> ReLU -> MaxPool (2x2)

-> Conv2d(12, 24, kernel_size=(7, 7), stride=(1, 1)) -> ReLU

-> Conv2d(24, 36, kernel_size=(7, 7), stride=(1, 1)) -> ReLU -> MaxPool (2x2)

-> Conv2d(36, 48, kernel_size=(5, 5), stride=(1, 1)) -> ReLU

-> Conv2d(48, 60, kernel_size=(5, 5), stride=(1, 1)) -> ReLU -> MaxPool (2x2)

-> Conv2d(60, 60, kernel_size=(5, 5), stride=(1, 1)) -> ReLU

-> FullyConnected(1440, 800) -> ReLU

-> FullyConnected(800, 800) -> ReLU

-> FullyConnected(800, 58 * 2) -> output
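The input size of the first fully connected layer follows from the convolution and pooling arithmetic. The plain Python sketch below traces the spatial size through the layers, assuming no padding (the Conv2d default), 2x2 pooling with stride 2, and a 120x160 input for this network. The 120x160 size is my assumption, since the text only says the images were downsampled by a factor of 4; it is consistent with the 1440 above, just as the 80x60 nose input is consistent with 1008.

```python
def conv_out(size, kernel, stride=1):
    """Output length of an unpadded convolution along one dimension."""
    return (size - kernel) // stride + 1


def trace(hw, layers):
    """Follow (height, width) through a list of ("conv", k) and ("pool", k) layers."""
    h, w = hw
    for kind, k in layers:
        if kind == "conv":
            h, w = conv_out(h, k), conv_out(w, k)
        elif kind == "pool":
            h, w = h // k, w // k  # floor division, as MaxPool does by default
    return h, w


nose_layers = [("conv", 7), ("pool", 2), ("conv", 5), ("pool", 2),
               ("conv", 3), ("pool", 2)]
face_layers = [("conv", 7), ("pool", 2), ("conv", 7), ("conv", 7), ("pool", 2),
               ("conv", 5), ("conv", 5), ("pool", 2), ("conv", 5)]

nose_hw = trace((60, 80), nose_layers)      # final feature map of the nose network
face_hw = trace((120, 160), face_layers)    # final feature map of the keypoint network
nose_flat = 36 * nose_hw[0] * nose_hw[1]    # channels * height * width
face_flat = 60 * face_hw[0] * face_hw[1]
```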

I use the same train/validation split as in the previous section. My optimizer was Adam with a learning rate of 1e-3 and a batch size of 36. The loss of this network is shown below.

We can see that the loss decreases dramatically in the first few epochs. It overcomes some bumps before finally bottoming out. Below I visualize the final filters learned by the network. I sampled one filter from each convolutional layer of my network, with the layer number labeled in the title.
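Sampling one filter per convolutional layer can be sketched as follows. The stack below only mirrors the layer sizes listed earlier and is untrained, so the kernels are random; the variable names and the choice of a random output and input channel per layer are my own assumptions about how the sampling might be done.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Untrained stand-in with the same conv layer sizes as the keypoint network
convs = nn.Sequential(
    nn.Conv2d(1, 12, 7), nn.ReLU(), nn.MaxPool2d(2),
    nn.Conv2d(12, 24, 7), nn.ReLU(),
    nn.Conv2d(24, 36, 7), nn.ReLU(), nn.MaxPool2d(2),
    nn.Conv2d(36, 48, 5), nn.ReLU(),
    nn.Conv2d(48, 60, 5), nn.ReLU(), nn.MaxPool2d(2),
    nn.Conv2d(60, 60, 5), nn.ReLU(),
)

sampled = []
conv_layers = [m for m in convs if isinstance(m, nn.Conv2d)]
for layer_number, conv in enumerate(conv_layers, start=1):
    # weight has shape (out_channels, in_channels, kH, kW); pick one 2D slice
    out_idx = torch.randint(conv.out_channels, (1,)).item()
    in_idx = torch.randint(conv.in_channels, (1,)).item()
    kernel = conv.weight[out_idx, in_idx].detach().clone()
    sampled.append((layer_number, kernel))
```

Each `kernel` is a small 2D tensor that can be shown as a grayscale image, for example with matplotlib's `imshow`, using `layer_number` as the title.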

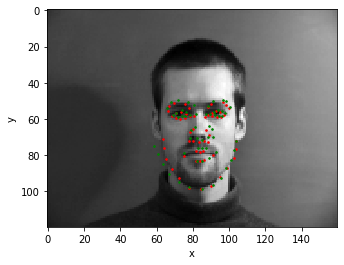

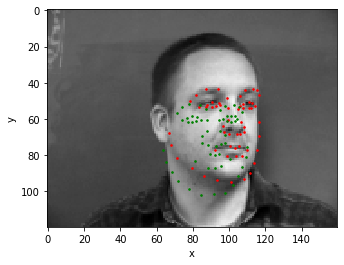

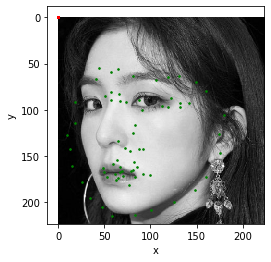

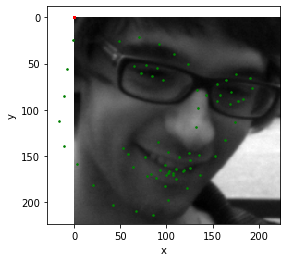

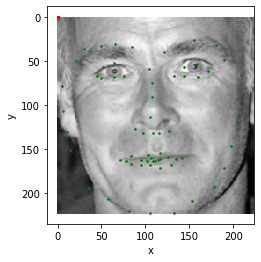

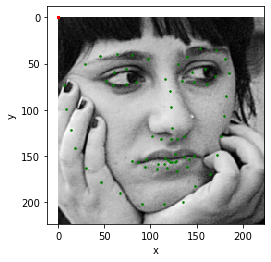

Below are two images in which the full keypoint detector worked relatively well. Red represents the ground truth point, while green is the output of my neural network.

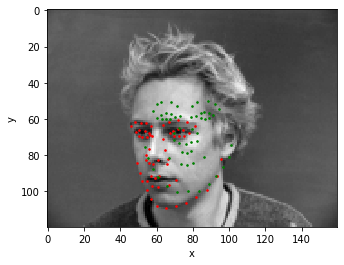

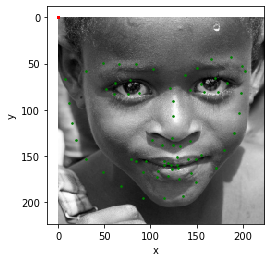

Similar to my nose detector, my facial keypoint detector fails on faces that are turned away from the camera. Again, this is because the training set is skewed towards images where the subject is facing the camera.

For this section we now train on a much larger dataset of 6666 images. For this dataset, we had to crop the images using the specified bounding boxes and resize them to 224x224. I also augment this dataset using a combination of scaling and rotation, now with 6 different angles between +/- 15 degrees. A couple of results of the dataloader for the training set are shown below.
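Cropping and resizing changes the coordinate frame, so the keypoints need the same shift and scale as the pixels. Here is a dependency-light NumPy sketch, assuming the bounding box is given as (left, top, width, height) in pixels and lies fully inside the image, and using nearest-neighbor resizing for simplicity. The function name and bounding box format are hypothetical, not taken from the dataset's documentation.

```python
import numpy as np


def crop_and_resize(image, keypoints, bbox, out_size=224):
    """Crop a (H, W) image to bbox, resize to out_size x out_size, and remap keypoints."""
    left, top, width, height = bbox  # assumed format, in pixels
    crop = image[top:top + height, left:left + width]

    # Nearest-neighbor resize: pick a source row and column for each output pixel
    rows = (np.arange(out_size) * height / out_size).astype(int)
    cols = (np.arange(out_size) * width / out_size).astype(int)
    resized = crop[rows][:, cols]

    # Shift keypoints into the crop, then scale x and y separately
    scale = np.array([out_size / width, out_size / height])
    new_keypoints = (keypoints - np.array([left, top])) * scale
    return resized, new_keypoints


# Usage on a blank stand-in image with one keypoint at the center of the box
image = np.zeros((300, 400), dtype=np.float32)
keypoints = np.array([[106.0, 86.0]])
resized, new_keypoints = crop_and_resize(image, keypoints, bbox=(50, 30, 112, 112))
```

In this example the box is 112 pixels wide, so the resize scales by 2 and the centered keypoint lands at (112, 112) in the 224x224 output.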

For this task, I used ResNet18. The only modifications were changing the input layer to accept grayscale images and the output layer to produce the 68 keypoints (two coordinates each). My batch size was 36, with an Adam optimizer with a learning rate of 1e-3. The training loss is shown below.

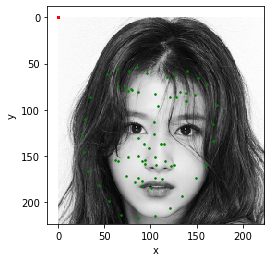

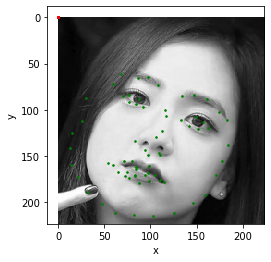

I tested this network on a few test images of K-pop stars, the results of which I show below.

The detector works okay. It fails for the photo where Irene is facing away, since much of the left side of her face is turned away. The photo of Sana also underperforms because she has hair covering the outline of her face. The photo of Jisoo performs comparatively well, given that the face in the photo is facing the camera with no obstructions.

I also ran this on some of the test dataset, the results of which are shown below.

After submitting to Kaggle, my best result gave me a mean absolute error of 14.26.
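For reference, mean absolute error can be computed as below. This sketch assumes the average is taken over every predicted coordinate; I am not certain that matches exactly how the Kaggle leaderboard aggregates it, and the numbers are made-up toy values.

```python
import numpy as np


def mean_absolute_error(pred, target):
    """Average of |pred - target| over all coordinates."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    return float(np.mean(np.abs(pred - target)))


# Toy example with two keypoints (not real predictions)
pred = np.array([[10.0, 20.0], [30.0, 40.0]])
target = np.array([[12.0, 19.0], [27.0, 44.0]])
mae = mean_absolute_error(pred, target)
```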

From this project I learned that the number of epochs and the batch size are important hyperparameters to validate. With too many epochs, the network can end up memorizing the training set instead of learning the underlying features.

Thank you to the CS194 staff for making such a creative project, even if it was very frustrating at times (90% time spent preparing data pipelines, 9% doing machine learning/hyperparameters, 1% crying). I was really excited to use PyTorch since I've never touched a neural network framework before. I must admit I walked away amazed after finishing... amazed that it worked at all... amazed that I got it to work while still not being sure exactly why it should work aha....