Overview of the Project

The goal of this project was to create convolutional neural networks using Pytorch in order automatically predict landmark points on faces of a given dataset.

Part 1: Nose Tip Detection

The first part of the project was to detect the nose point from the IMM data set. This dataset contains 240 images of 40 people where each person has 6 different pictures of themselves and each image contains 58 labeled facial keypoints. I used DataLoader to load and visualize the images and the corresponding ground truth nose points from a DataLoader. Some examples are shown below.

|

|

|

The first step was to normalize the images, which would help the model converge faster. I split the data into training set of 192 images and validation set of 48 images and convert each image to grayscale. The next step was to implement a convolutional network with 3 convolutional layers and 2 fully connected layers. Each convolutional layer is followed with a Rectilinear Unit (ReLU) as non-linearity and a max-pooling layer. For training, I used a mean squared error loss (MSELoss) for the loss function and an Adam optimizer with a learning rate of 0.001. Then, I moved on to training the data for 25 epochs with a batch size of 6.

|

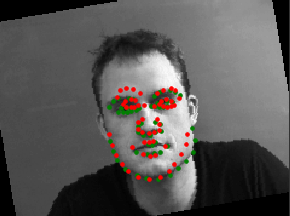

Now, we can see how well the CNN model predicted the nose tip. The ground truth nose points are in green and the predicted nose points are in red. Here, we can see the predicted and ground truth points are relatively close to eachother, signaling that the model did a good job.

|

|

|

The CNN did not perform well on some images shown below. I believe the these predictions are not successful because the positioning of the head and the face is different from the well predicted images as well as the shadows. Some of these images have people facing in different directions, which removed some features from the model that the CNN could use to predict the nose tip.

|

|

|

Part 2: Full Facial Keypoints Detection

In this part, I used a similar approach to the previous part inn order to detect all 58 facial keypoints of an input image by expanding my CNN and including all the facial keypoints, instead of just the nose tip. First, I adjusted my DataSet to extract and read all facial key points. After passing it into my dataloader, I was able to visualize these ground truth facial keypoints.

|

|

|

I added 1 more convolutional layer to my neural net along with 1 more max pool. I used optim.Adam again with a learning rate of 1e-3. I performed data augemntation and transformation on the images by using colorjitter, random rotation, and random translation. Data augmentation is used to prevent the model from overfitting as the random adjustment of the images on each epoch prevents the model from training on the same images various different times and therefore, preventing overfitting. I used the batch size of 6 for the training and the validation dataset similar to the previous part. I have displayed my network structure and the graph of the training and validation loss at each epoch. As we can observe, the losses are decreasing at almost every epoch and therefore, we can assimilate that the model is training well.

|

|

The ground truth facial key points are shown in green and the predicted facial key points in red. Here, I'm displaying some images where the model predicts well.

|

|

|

In this section, I am displaying some images where the model doesn't predict the key points very well. We can observe that the keypoints in the images where people were looking to our left are not predicting well, while the images with well predicted points contain people looking in the middle or sometimes to our right. I believe this is true because my model did not train on enough images where people are looking to our left and therefore, couldn't accurately predict the keypoints.

|

|

|

These are some of the filters that were learned by the neural net during the trianing process. Here, I have only shown filters for the first 2 layers.

|

|

Part 3: Train with Larger Dataset

The objective of this part was to train a predefined model on an extremely large dataset, therefore, I used Google colab to train the model. Here, I've displayed some images and their ground truth key points after image transformation was applied.

|

|

|

|

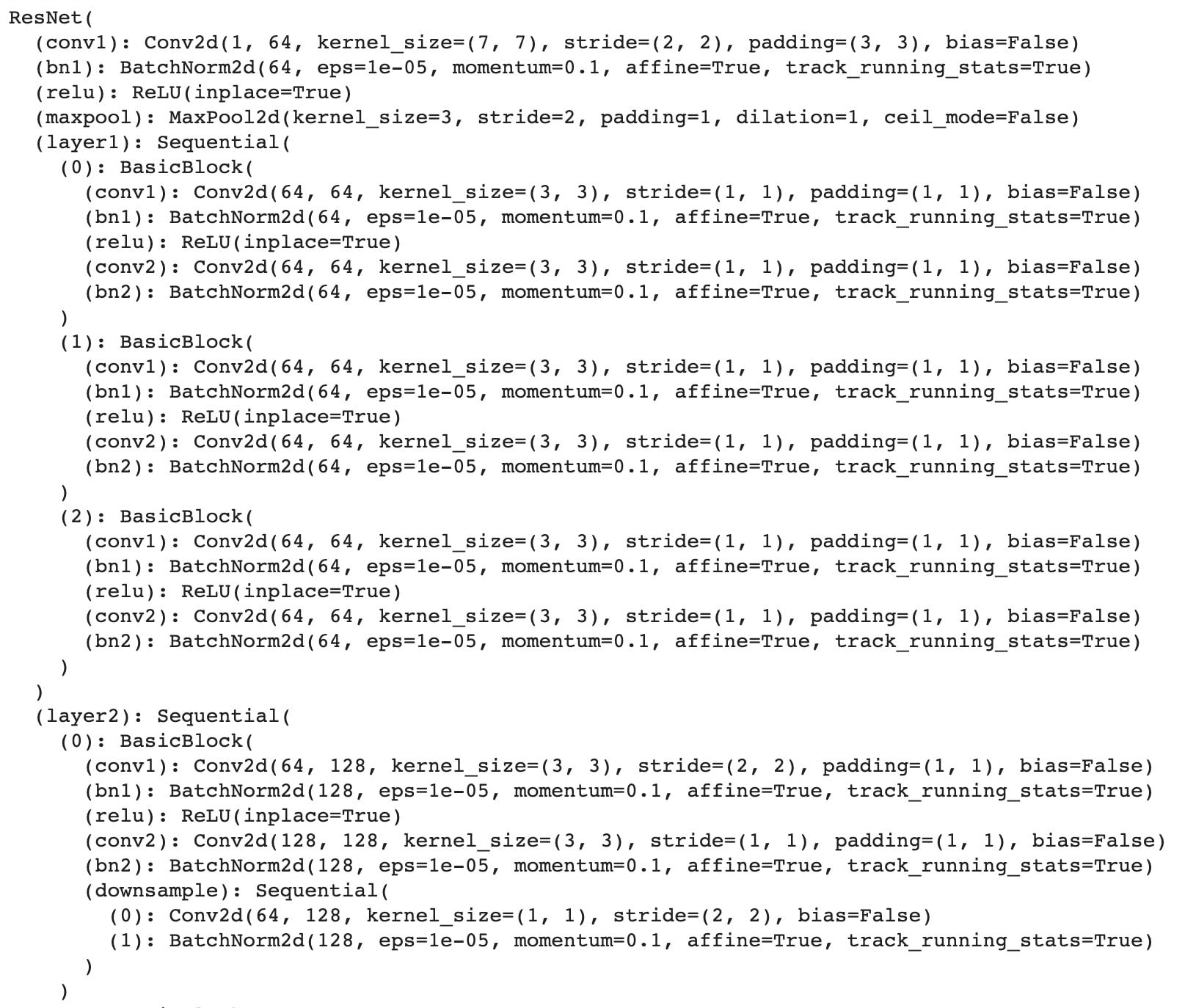

I used ResNet-34, one of the torch premade models, instead of my own neural network. I tweaked the ResNet-34 model and changed the size of the input channel to 1 and the size of the output channel to 136 (68*2), for the x and y value of the 68 facial key points of each image. The following images show my ResNet-34 structure.

|

|

|

|

I used GPU to speed up the training over 20 epochs. I used similar data augemntation techniques from the previous part as well as the same optim.Adam optimizer. My batch size for the training and validation set was 11 and I used a learning rate of 1e-3. I have dispayed the graph of the training and validation loss at each epoch.

|

The model produced the following predictions on the test set:

|

|

|

|

|

|

The following predictions are produced from my images.

|

|

|

|

Bells and Whistles

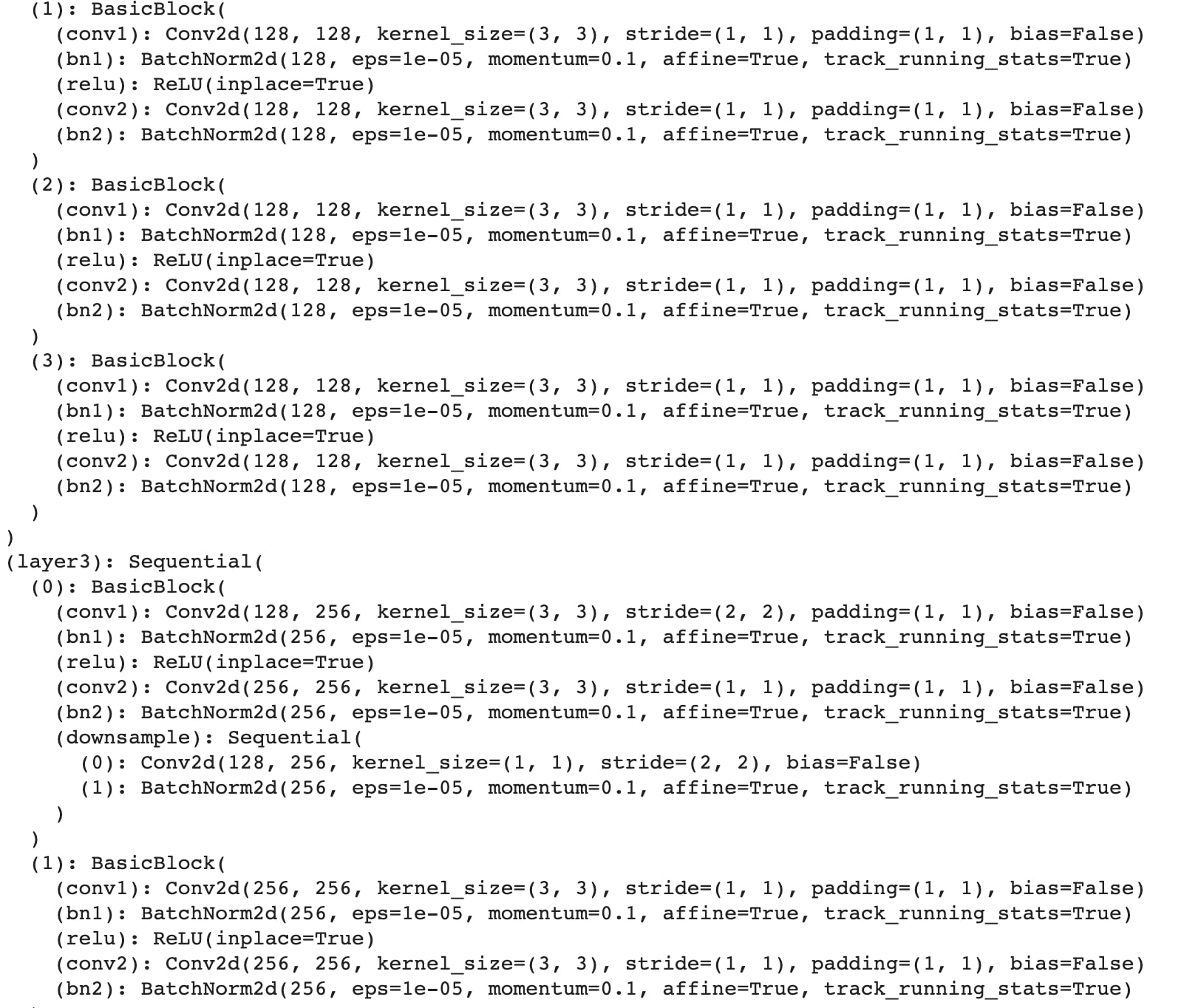

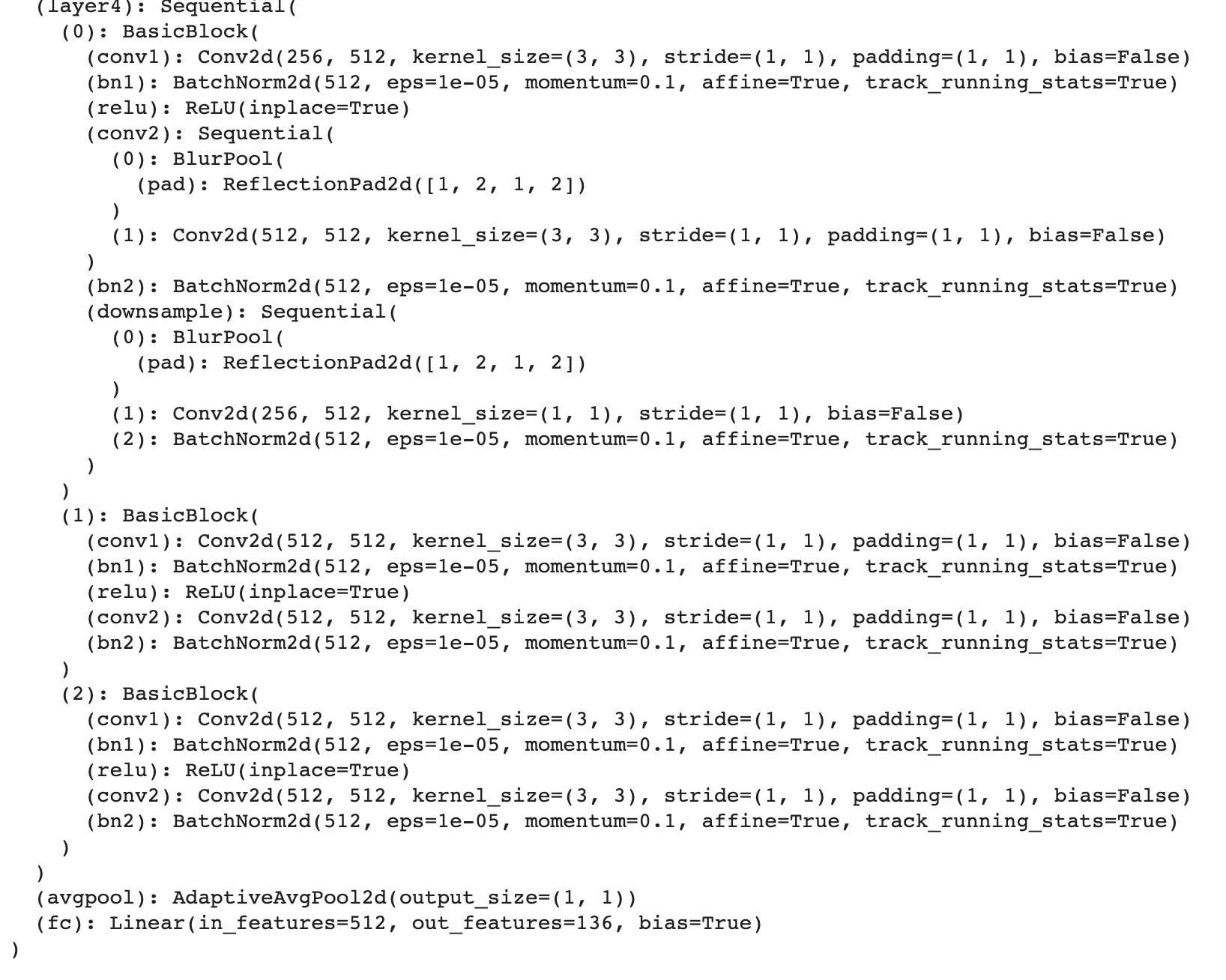

For the bells and whistles part, I used Richard Zhang's anti-aliased max pool. I switched the model from ResNet-34 to anti-aliased ResNet-34. I used the same learning rate of 1e-3 and batch size of 11 as part 3 and retrained the model. The following image shows the network structure.

|

|

|

|

|

The following graph displays the losses after training on 10 epochs. This graph is different from the one in part 3 as the training loss seems to start at a very small number compared to the validation loss.

|

Here, I am displaying the predicted key points on images from the test set that the anti-aliased ResNet-34 model produced after 10 epochs. As shown, it performs pretty well on the images. The predictions are very similar to the original ResNet-34 trained on 20 epochs.

|

|

|

Website inspiration- Charley Huang, acg.