Part 1

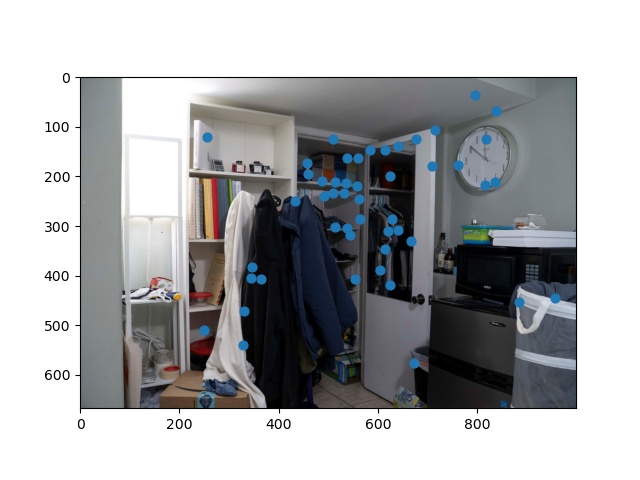

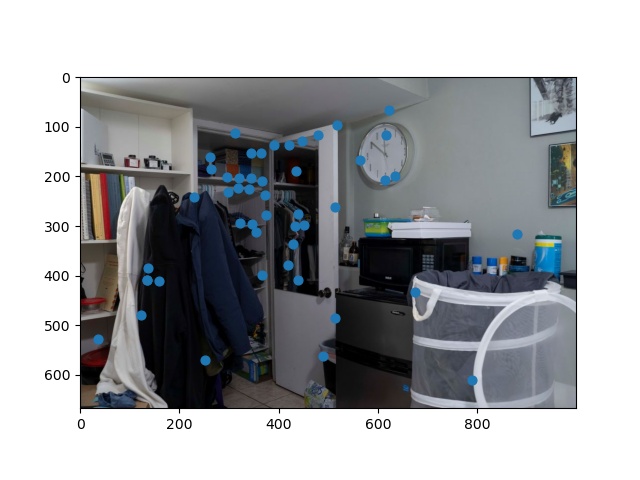

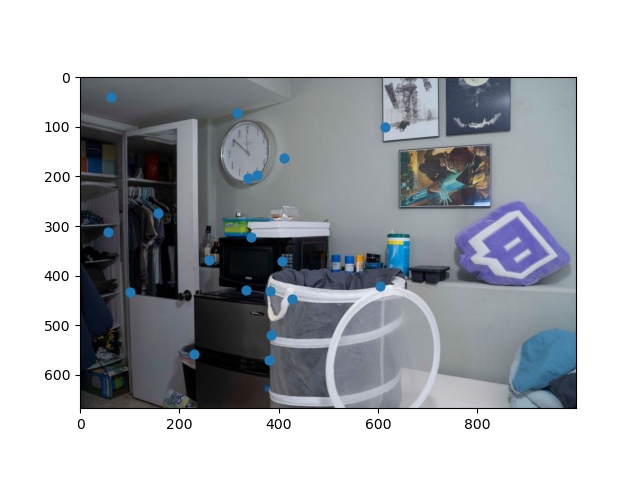

Shoot the Pictures

I used my DSLR (well, mirrorless, technically) to take three photos of my room, all with the same exposure settings. I also tried to keep the sensor as level as possible between shots using the camera's built-in gyro. Before using the images in this project, I downsampled them substantially, from 6000x4000 to 1000x666, to reduce processing time.
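The writeup doesn't say how the downsampling was done. One simple option, shown here only as a hypothetical sketch, is to average non-overlapping 6x6 pixel blocks: 6000 / 6 = 1000 columns and 4000 / 6 ≈ 666.7 rows, so the last few rows are cropped off to give 666. The function name `block_downsample` is illustrative, not from the project.

```python
import numpy as np

def block_downsample(img, factor):
    """Hypothetical helper: downsample an H x W (x C) image by averaging
    non-overlapping factor x factor blocks. Rows/columns that don't fill a
    whole block are cropped off (e.g. 4000 rows -> 3996 -> 666 for factor 6)."""
    h, w = img.shape[:2]
    h_crop, w_crop = (h // factor) * factor, (w // factor) * factor
    img = img[:h_crop, :w_crop].astype(float)
    # Split each spatial axis into (num_blocks, factor) and average within blocks
    new_shape = (h_crop // factor, factor, w_crop // factor, factor) + img.shape[2:]
    return img.reshape(new_shape).mean(axis=(1, 3))
```

For a 6000x4000 RGB photo (a NumPy array of shape `(4000, 6000, 3)`), `block_downsample(img, 6)` returns an array of shape `(666, 1000, 3)`. Block averaging also acts as a crude low-pass filter, which reduces aliasing compared with simply keeping every sixth pixel.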

Recover Homographies

Nothing too interesting in this part: I used techniques discussed in lecture and online to recover the homographies by setting up a linear system Ah = b and solving for h. Then, I used h to warp the images.
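As a sketch of what the Ah = b system can look like (assuming the common convention that the bottom-right entry of the 3x3 homography is fixed to 1, so h holds the remaining eight unknowns): each correspondence (x, y) → (x', y') contributes two rows, obtained by multiplying out x' = (ax + by + c) / (gx + hy + 1) and the analogous equation for y'. With more than four correspondences the system is overdetermined, so it is solved in the least-squares sense. The function name `compute_homography` is hypothetical, and this is not necessarily the exact formulation used in the project.

```python
import numpy as np

def compute_homography(src, dst):
    """Hypothetical helper: least-squares homography H (3x3, H[2, 2] = 1)
    such that dst ~ H @ src, from N >= 4 (x, y) correspondences."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    if src.shape != dst.shape or src.shape[0] < 4:
        raise ValueError("need at least 4 matching (x, y) pairs")
    rows, rhs = [], []
    for (x, y), (xp, yp) in zip(src, dst):
        # x' * (g x + h y + 1) = a x + b y + c, rearranged to be linear in h
        rows.append([x, y, 1, 0, 0, 0, -x * xp, -y * xp])
        # y' * (g x + h y + 1) = d x + e y + f
        rows.append([0, 0, 0, x, y, 1, -x * yp, -y * yp])
        rhs.extend([xp, yp])
    A = np.array(rows)
    b = np.array(rhs)
    h, *_ = np.linalg.lstsq(A, b, rcond=None)
    # Append the fixed ninth entry and reshape into the 3x3 matrix
    return np.append(h, 1.0).reshape(3, 3)
```

A useful sanity check when the mosaic doesn't line up is the reprojection error: push the source keypoints through the recovered H, divide by the third coordinate, and compare with the destination keypoints. Errors much larger than a pixel or two usually point to swapped (x, y)/(row, col) order or mismatched keypoint pairs.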

Warp Images

With the homography calculated, I simply applied it to the image (and the associated keypoints).
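The writeup doesn't describe the warping code itself. A common approach is inverse warping: transform the image's four corners to find the output bounding box, then map every output pixel back through the inverse of H and sample the source image there, which avoids the holes that forward-mapping each source pixel would leave. The sketch below assumes NumPy, nearest-neighbour sampling, and that H maps source (x, y) to output (x, y); `apply_homography` and `warp_image` are hypothetical names. The same `apply_homography` can be used to move the keypoints.

```python
import numpy as np

def apply_homography(H, pts):
    """Map an (N, 2) array of (x, y) points through H (hypothetical helper)."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]  # divide out the projective scale

def warp_image(img, H):
    """Inverse-warp img by H (hypothetical helper, nearest-neighbour sampling).
    Returns the warped image, a boolean mask of pixels that came from the source,
    and the (x, y) offset of the output's top-left corner in H's output frame."""
    h, w = img.shape[:2]
    corners = np.array([[0, 0], [w - 1, 0], [w - 1, h - 1], [0, h - 1]], dtype=float)
    warped_corners = apply_homography(H, corners)
    x_min, y_min = np.floor(warped_corners.min(axis=0)).astype(int)
    x_max, y_max = np.ceil(warped_corners.max(axis=0)).astype(int)

    # Grid of output pixel coordinates covering the warped bounding box
    xs, ys = np.meshgrid(np.arange(x_min, x_max + 1), np.arange(y_min, y_max + 1))
    out_pts = np.stack([xs.ravel(), ys.ravel()], axis=1)

    # Inverse mapping: where in the source does each output pixel come from?
    src_pts = apply_homography(np.linalg.inv(H), out_pts)
    sx = np.rint(src_pts[:, 0]).astype(int)
    sy = np.rint(src_pts[:, 1]).astype(int)
    valid = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)

    out_h, out_w = xs.shape
    out = np.zeros((out_h * out_w,) + img.shape[2:], dtype=img.dtype)
    out[valid] = img[sy[valid], sx[valid]]
    return (out.reshape((out_h, out_w) + img.shape[2:]),
            valid.reshape(out_h, out_w),
            (x_min, y_min))
```

Nearest-neighbour sampling keeps the sketch short; bilinear interpolation (for example via `scipy.ndimage.map_coordinates`) gives smoother results at the cost of an extra dependency.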

Image Rectification

Below are two rectified images, warped so that a tile and a keyboard, respectively, appear straight-on. Because of the initial downsampling and the resampling during warping, the images are unfortunately a little blurry. The oranges and blues in my image also came out swapped, a strange bug that I was not able to pinpoint in time.

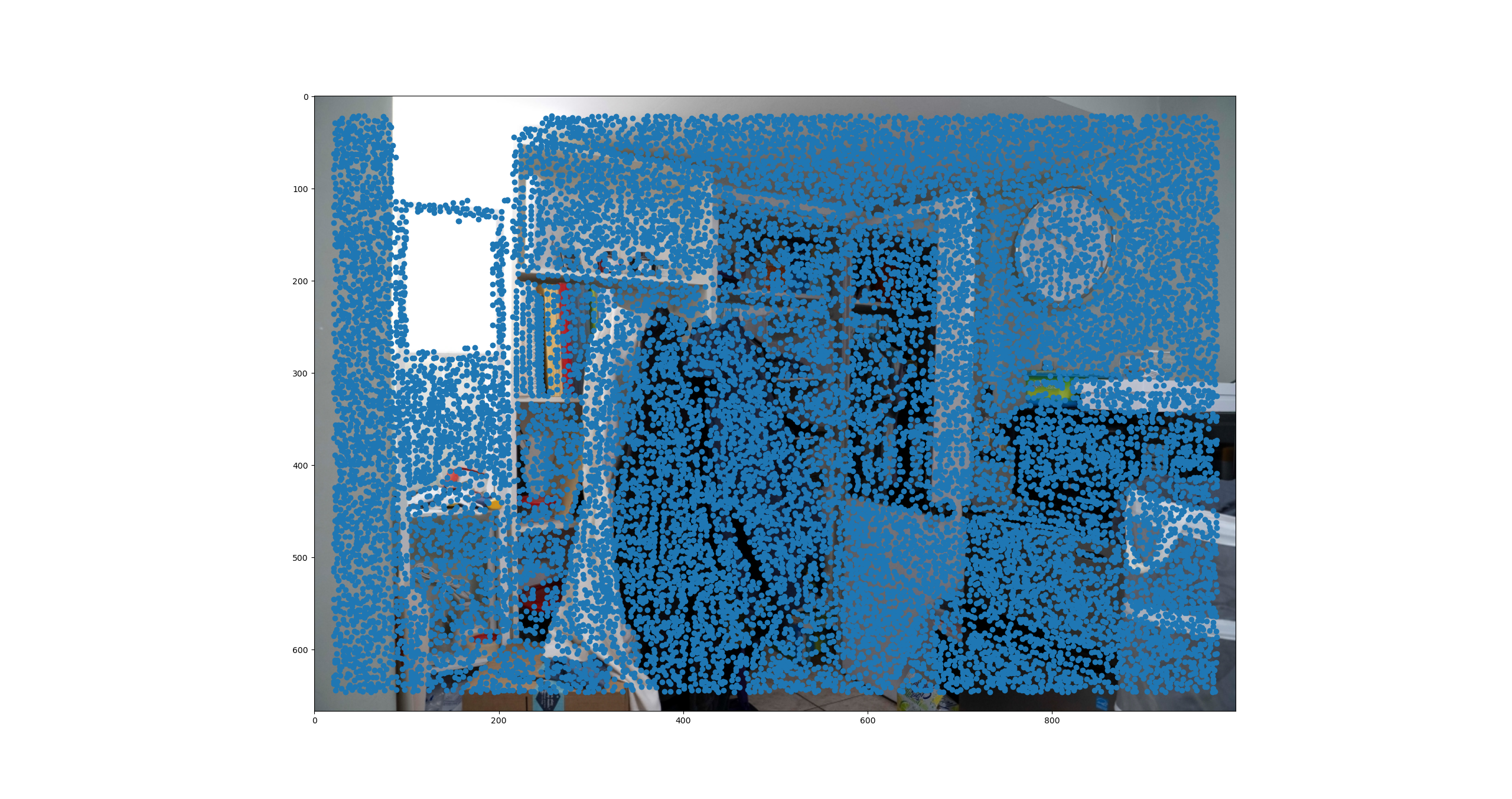
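Rectification is a special case of the homography fit: the four corners of an object known to be rectangular (such as the tile) are mapped onto the corners of an axis-aligned rectangle. With exactly four correspondences the eight-unknown system is square, so it can be solved directly rather than by least squares. This is a hypothetical sketch; `rectify_homography`, the corner ordering, and the example coordinates are illustrative assumptions, not taken from the project.

```python
import numpy as np

def rectify_homography(corners, width, height):
    """Hypothetical helper: homography mapping four image points, given as (x, y)
    clockwise from top-left, onto the corners of a width x height rectangle."""
    src = np.asarray(corners, dtype=float)
    dst = np.array([[0, 0], [width - 1, 0], [width - 1, height - 1], [0, height - 1]],
                   dtype=float)
    A = np.zeros((8, 8))
    b = np.zeros(8)
    for i, ((x, y), (xp, yp)) in enumerate(zip(src, dst)):
        # Same two rows per correspondence as in the general Ah = b fit
        A[2 * i] = [x, y, 1, 0, 0, 0, -x * xp, -y * xp]
        A[2 * i + 1] = [0, 0, 0, x, y, 1, -x * yp, -y * yp]
        b[2 * i], b[2 * i + 1] = xp, yp
    # Exactly 4 correspondences (no three collinear) -> square, solvable 8x8 system
    h = np.linalg.solve(A, b)
    return np.append(h, 1.0).reshape(3, 3)

# Hypothetical clicked corners of a square tile seen at an angle
H = rectify_homography([[120, 80], [410, 95], [430, 360], [100, 340]], 300, 300)
```

Warping the photo with this H (for instance with an inverse-warping routine) makes the tile appear as a 300x300 square; everything else in the plane of the tile is straightened along with it.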

Mosaic Blend

Finally, we blend the images into a mosaic. Unfortunately, I ran into a couple of difficulties here, which I believe stem from a bug in my homography calculation. Although some alignment is visible, the two images are clearly not stacked properly, despite several attempts to fix both the blending (I tried a mask, averaging, and max()) and the alignment (manually overlapping one keypoint from each image onto the same pixel).
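For reference, the averaging strategy mentioned above can be sketched as follows: paste each warped image onto a shared canvas, accumulate pixel values and per-pixel coverage counts, and divide in the overlap. This is a hypothetical sketch, not the project's code; it assumes each layer comes with a boolean validity mask and a top-left offset that has already been shifted into non-negative canvas coordinates, and that every layer fits inside the canvas. The name `paste_and_average` is illustrative.

```python
import numpy as np

def paste_and_average(layers, canvas_shape):
    """Hypothetical helper. layers: list of (img, mask, (x_off, y_off)) where img is
    h x w x C, mask is an h x w bool array of valid pixels, and the offsets are the
    non-negative canvas coordinates of img's top-left corner. canvas_shape is
    (H, W, C) and must be large enough to hold every layer."""
    acc = np.zeros(canvas_shape, dtype=float)           # running sum of pixel values
    count = np.zeros(canvas_shape[:2], dtype=float)     # how many layers cover each pixel
    for img, mask, (x0, y0) in layers:
        h, w = mask.shape
        region = (slice(y0, y0 + h), slice(x0, x0 + w))
        acc[region][mask] += img[mask]                   # acc[region] is a view, so acc updates
        count[region] += mask
    out = np.zeros(canvas_shape, dtype=float)
    covered = count > 0
    out[covered] = acc[covered] / count[covered][:, None]  # average where layers overlap
    return out
```

Swapping the sum-and-divide for `np.maximum` gives the max() variant. Plain averaging tends to leave visible seams at the overlap boundary even when alignment is correct; feathering the masks with a distance-based alpha, or a Laplacian-pyramid blend, is a common next step.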

What You've Learned

Honestly, despite the challenges, I found this to be a pretty neat project. The rectification in particular was really cool to see, as I had previously believed it was impossible, or at least infeasibly difficult, to rectify an image.