CS 194-26: Computational Photography, Project 5A & B

Ritika Shrivastava, cs194-26-afe

Background

In this project, I will be stitching images together into mosaics!

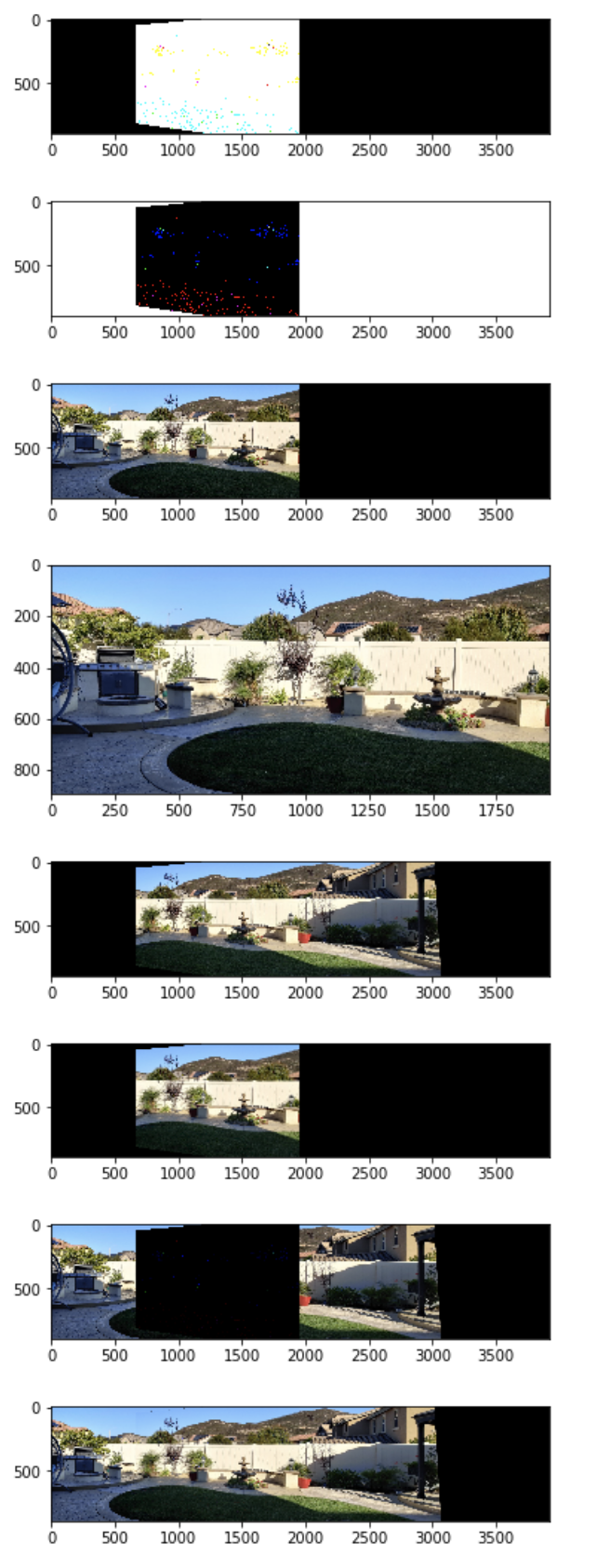

Part 1: Shooting images

I shot these few images.

Part 2: Recover Homographies

To recover the homographies, I selected 4 corresponding points in each pair of images above and then calculated the homography using the equation below.
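A hedged sketch of one common way to set up this system: fix the bottom-right entry of H to 1, which leaves 8 unknowns. Each correspondence (x, y) to (x', y') then contributes two linear equations, so 4 points determine H exactly and extra points give a least-squares fit. The names `compute_homography` and `apply_homography` are hypothetical and may not match the code actually used for this project.

```python
import numpy as np

def compute_homography(src, dst):
    """Estimate a 3x3 H with dst ~ H @ src from N >= 4 point pairs.

    src, dst: (N, 2) arrays of (x, y) coordinates. H[2, 2] is fixed to 1,
    leaving 8 unknowns h11..h32.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    A, b = [], []
    for (x, y), (xp, yp) in zip(src, dst):
        # x' (h31 x + h32 y + 1) = h11 x + h12 y + h13, rearranged to be linear in h
        A.append([x, y, 1, 0, 0, 0, -x * xp, -y * xp])
        b.append(xp)
        # the same rearrangement for y'
        A.append([0, 0, 0, x, y, 1, -x * yp, -y * yp])
        b.append(yp)
    # exact for 4 pairs, least squares when more are given
    h, *_ = np.linalg.lstsq(np.array(A), np.array(b), rcond=None)
    return np.append(h, 1.0).reshape(3, 3)

def apply_homography(H, pts):
    """Map (N, 2) points through H and divide out the homogeneous coordinate."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

src = [(0, 0), (100, 0), (100, 100), (0, 100)]
dst = [(10, 5), (120, 0), (110, 130), (0, 95)]
H = compute_homography(src, dst)
print(apply_homography(H, src))  # approximately equal to dst
```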

Part 3: Warping images

Then I used the homographies to warp the images into their new projections. The results can be seen in the next section.
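A hedged sketch of the warping step using inverse mapping: every output pixel is sent back through H⁻¹ and sampled from the source image, which avoids the holes that forward-mapping each source pixel would leave. For brevity it uses nearest-neighbor sampling (bilinear interpolation would look smoother). The function name `warp_image` and its `out_shape`/`offset` arguments are hypothetical, and it assumes H maps source (x, y) coordinates to output (x, y) coordinates.

```python
import numpy as np

def warp_image(img, H, out_shape, offset=(0, 0)):
    """Inverse-warp img by H onto a canvas of shape out_shape = (rows, cols).

    offset = (ox, oy) shifts destination coordinates so that warped pixels with
    negative coordinates still land on the canvas. Pixels whose pre-image falls
    outside img are left at 0.
    """
    rows, cols = out_shape[:2]
    ox, oy = offset
    # canvas pixel (c, r) corresponds to point (c - ox, r - oy) in H's output frame
    xs, ys = np.meshgrid(np.arange(cols) - ox, np.arange(rows) - oy)
    dest = np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)])
    # pull every destination pixel back into the source image
    src = np.linalg.inv(H) @ dest
    sx = np.round(src[0] / src[2]).astype(int)
    sy = np.round(src[1] / src[2]).astype(int)
    valid = (sx >= 0) & (sx < img.shape[1]) & (sy >= 0) & (sy < img.shape[0])
    # works for grayscale (H, W) and color (H, W, C) images
    out = np.zeros((rows * cols,) + img.shape[2:], dtype=img.dtype)
    out[valid] = img[sy[valid], sx[valid]]
    return out.reshape((rows, cols) + img.shape[2:])
```

To size the canvas, one would typically push the four image corners through H first and use their bounding box for `out_shape` and `offset`.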

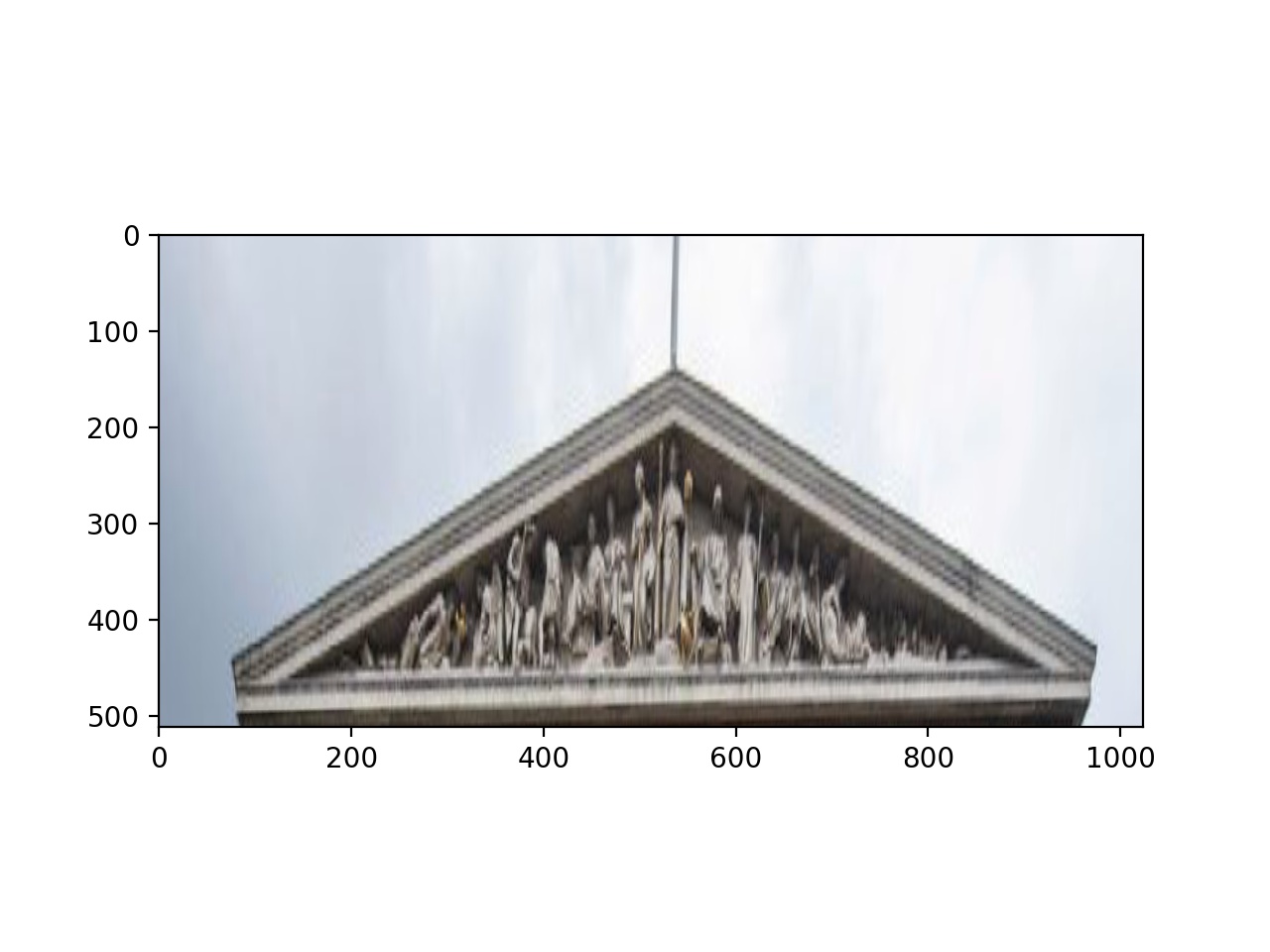

Part 4: Image Rectification

In this part, I worked on “rectifying” images. I took images I found online that contained planar surfaces and warped them so that the plane is fronto-parallel. I got the results below.
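A hedged sketch of how a rectifying homography can be set up: four clicked corners of a planar object are mapped to the corners of an axis-aligned rectangle whose width and height are picked by hand to approximate the object's real aspect ratio. The function name `rectification_homography`, the corner ordering, and the SVD-based direct linear transform (an alternative to fixing H[2, 2] = 1 and solving least squares) are assumptions. The image itself would then be warped with this H just as in Part 3.

```python
import numpy as np

def rectification_homography(corners, width, height):
    """H sending four image points of a planar rectangle to a fronto-parallel one.

    corners: (4, 2) (x, y) points ordered top-left, top-right, bottom-right,
    bottom-left. width, height: chosen size of the rectified rectangle.
    """
    target = np.array([[0, 0], [width, 0], [width, height], [0, height]], dtype=float)
    rows = []
    for (x, y), (u, v) in zip(np.asarray(corners, dtype=float), target):
        # u (h7 x + h8 y + h9) - (h1 x + h2 y + h3) = 0, and likewise for v
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # h spans the null space of the 8x9 system: the last right singular vector
    _, _, vt = np.linalg.svd(np.array(rows))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]
```

For very large images, normalizing the point coordinates before solving (Hartley normalization) improves the conditioning of this system.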

I also wanted to take a closer look at some of the details in these images. The left side is the original image and the right side is the rectified image.

Part A: End result!

Part B: Feature Matching!

In the previous part of the project, we manually selected the points used to compute the homography. This is a tiring process that requires a lot of zooming in, so to simplify things a bit, let's automate feature matching!

To do this, I will follow the steps in the paper “Multi-Image Matching using Multi-Scale Oriented Patches” by Brown et al., with several simplifications. (link: https://inst.eecs.berkeley.edu/~cs194-26/fa20/hw/proj5/Papers/MOPS.pdf)

This breaks down into 5 main steps:

- Detecting Corner Features in an image

- Extracting a feature descriptor for each feature point

- Matching these feature descriptors between two images

- Using a robust method (RANSAC) to compute a homography

- Proceeding as in the first part to produce a mosaic

Let's get started with the fun!

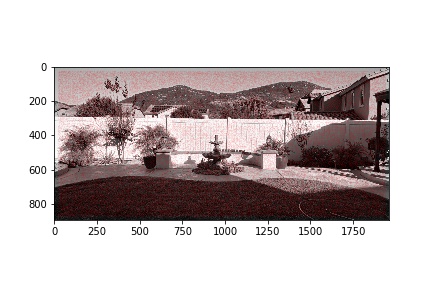

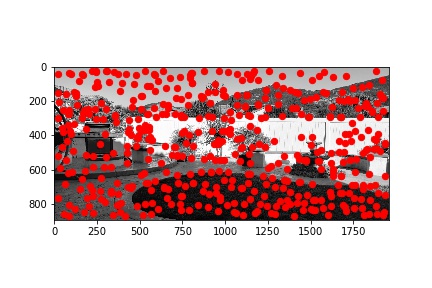

Part B.1: Detecting corner features

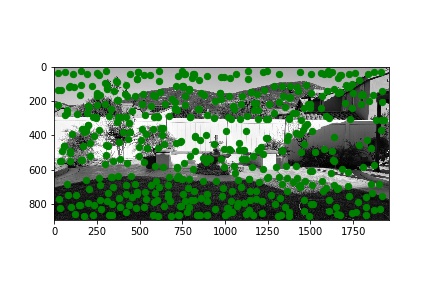

For this part of the project, I used the Harris interest point detector provided by the instructors. It detected far too many corners, and I had to make the dots small just so that the image would still be visible. All the red dots seen below are the points it returned.
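The instructor-provided detector is not shown here. For reference, a NumPy-only sketch of what a Harris detector computes: the structure tensor of the image gradients is averaged over a Gaussian window, the response R = det(M) - k·trace(M)² is evaluated at every pixel, and every positive 3x3 local maximum is returned. The parameters `sigma`, `k`, and the border width `edge` are assumed values, not the instructors' settings. Keeping every local maximum is also why a raw detector returns so many points.

```python
import numpy as np

def gaussian_kernel1d(sigma):
    """Normalized 1-D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(3 * sigma + 0.5))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    return k / k.sum()

def smooth(img, sigma):
    """Separable Gaussian blur with zero padding at the borders."""
    k = gaussian_kernel1d(sigma)
    img = np.apply_along_axis(np.convolve, 1, img, k, mode="same")
    return np.apply_along_axis(np.convolve, 0, img, k, mode="same")

def harris_corners(gray, sigma=1.0, k=0.05, edge=20):
    """Return the Harris response R and (row, col) coords of its positive 3x3 local maxima."""
    iy, ix = np.gradient(gray.astype(float))
    # structure-tensor entries, averaged over a Gaussian window
    sxx = smooth(ix * ix, sigma)
    syy = smooth(iy * iy, sigma)
    sxy = smooth(ix * iy, sigma)
    R = (sxx * syy - sxy ** 2) - k * (sxx + syy) ** 2
    # compare each pixel with its 8 neighbours (pad with -inf so border pixels can win)
    padded = np.pad(R, 1, mode="constant", constant_values=-np.inf)
    h, w = R.shape
    neigh_max = np.max([padded[dr:dr + h, dc:dc + w] for dr in range(3) for dc in range(3)], axis=0)
    is_peak = (R >= neigh_max) & (R > 0)
    # drop a border band where a descriptor patch would not fit
    is_peak[:edge, :] = False
    is_peak[h - edge:, :] = False
    is_peak[:, :edge] = False
    is_peak[:, w - edge:] = False
    return R, np.argwhere(is_peak)
```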

Writing a feature descriptor for every one of these points would be excessive, so I implemented the Adaptive Non-Maximal Suppression (ANMS) described in Section 3 of the paper. Its purpose is to keep the strongest corners while spreading them evenly across the image. Simply keeping the top 500 corners would leave the points clustered together, but ANMS gives a good distribution, as seen below.

This resulted in a good number of points for feature descriptor extraction.
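A hedged sketch of ANMS as described in the paper: each corner's suppression radius is the distance to the nearest corner that is sufficiently stronger (f_i < c_robust · f_j), and the corners with the largest radii are kept. The value c_robust = 0.9 follows the paper; `num_points=500` echoes the count mentioned above, and the function name is hypothetical. It builds an N x N distance matrix, so memory grows quadratically with the number of corners.

```python
import numpy as np

def anms(coords, strengths, num_points=500, c_robust=0.9):
    """Return indices of the num_points corners with the largest suppression radii.

    coords: (N, 2) corner positions. strengths: (N,) corner responses.
    """
    coords = np.asarray(coords, dtype=float)
    strengths = np.asarray(strengths, dtype=float)
    # pairwise squared distances, shape (N, N)
    d2 = ((coords[:, None, :] - coords[None, :, :]) ** 2).sum(axis=-1)
    # dominates[i, j] is True when corner j is sufficiently stronger than corner i
    dominates = strengths[:, None] < c_robust * strengths[None, :]
    np.fill_diagonal(dominates, False)  # a corner never suppresses itself
    d2 = np.where(dominates, d2, np.inf)
    radii = np.sqrt(d2.min(axis=1))  # inf for corners nothing suppresses
    # largest radius first; stable sort keeps ties in input order
    return np.argsort(-radii, kind="stable")[:num_points]
```

For example, with corners at (0, 0), (1, 0), (100, 0) and strengths 10, 8, 6, keeping two points picks indices 0 and 2: the weaker but isolated corner beats the stronger one sitting right next to the best corner.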

Part B.2: Extracting a feature descriptor for each feature point

For this part of the code, I sampled a 40x40 patch around each feature point above and then downsampled it to an 8x8 patch. I saved the patches in a dictionary with the point as the key and the patch as the value.
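A hedged sketch of this step: cut a 40x40 window around each (row, col) point, shrink it to 8x8 by averaging non-overlapping 5x5 blocks (this blurs and subsamples in one step; the exact downsampling used here is not stated), and store it in a dictionary keyed by the point. The bias/gain normalization (zero mean, unit standard deviation) comes from the MOPS paper and is an assumption about this implementation. The function name is hypothetical; points whose window would leave the image are skipped.

```python
import numpy as np

def extract_descriptors(gray, coords, window=40, size=8):
    """Map each (row, col) point to a normalized size x size descriptor patch."""
    assert window % size == 0, "window must be a multiple of size"
    half = window // 2
    step = window // size
    descriptors = {}
    for r, c in coords:
        r, c = int(r), int(c)
        # skip points whose window would leave the image
        if r - half < 0 or c - half < 0 or r + half > gray.shape[0] or c + half > gray.shape[1]:
            continue
        patch = gray[r - half:r + half, c - half:c + half].astype(float)
        # average each step x step block: 40x40 -> 8x8
        small = patch.reshape(size, step, size, step).mean(axis=(1, 3))
        # bias/gain normalization as in the MOPS paper
        small = (small - small.mean()) / (small.std() + 1e-8)
        descriptors[(r, c)] = small
    return descriptors
```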

Part B.3: Matching Feature Descriptors

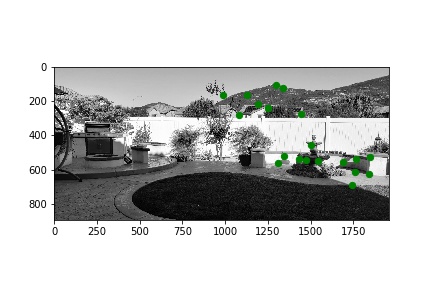

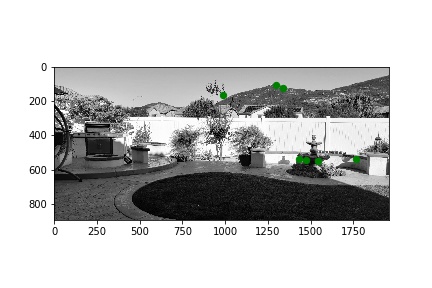

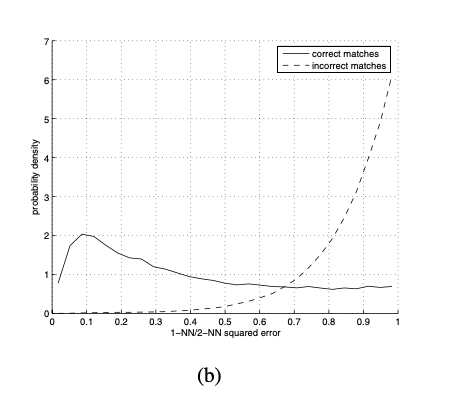

For this part, I matched the feature descriptors from the previous part by comparing each descriptor with every descriptor in the other image. I calculated the sum of squared differences (SSD) between the two patches and kept the two best matches. I then accepted a match only if the ratio of the smallest error to the second-smallest error was less than 0.3, a threshold I took from Figure 6b in the paper (seen below). The results can also be seen below.
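A hedged sketch of this matching rule, assuming descriptors are stored as `{point: patch}` dictionaries as described in Part B.2: compute the SSD from every descriptor in image 1 to every descriptor in image 2, then keep a pair only when the best SSD is less than 0.3 times the second-best. The function name is hypothetical.

```python
import numpy as np

def match_descriptors(desc1, desc2, ratio=0.3):
    """Return (point_in_image1, point_in_image2) pairs that pass the 1-NN/2-NN ratio test."""
    pts1, pts2 = list(desc1), list(desc2)
    if not pts1 or len(pts2) < 2:
        return []
    f1 = np.array([desc1[p].ravel() for p in pts1])
    f2 = np.array([desc2[p].ravel() for p in pts2])
    # SSD between every descriptor in image 1 and every descriptor in image 2
    ssd = ((f1[:, None, :] - f2[None, :, :]) ** 2).sum(axis=-1)
    matches = []
    for i, row in enumerate(ssd):
        best, second = np.argsort(row)[:2]
        # accept only if the best match is much better than the runner-up
        if row[best] < ratio * row[second]:
            matches.append((pts1[i], pts2[best]))
    return matches
```

The ratio test rejects ambiguous descriptors: a patch that looks almost equally like two different patches in the other image is dropped instead of being matched to either.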

Part B.4: Writing 4-point RANSAC

To implement this, I used the RANSAC loop taught in class.

- Select four feature pairs (at random)

- Compute homography H (exact)

- Compute inliers where dist(p', Hp ) < ε

- Keep the largest set of inliers

- Re-compute least-squares H estimate on all of the inliers

I repeated the first four steps 500 times before the final least-squares refit. ;)
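A hedged sketch of the RANSAC loop above. Matched points are (N, 2) arrays of (x, y) coordinates, homographies are fit by least squares with H[2, 2] fixed to 1, and the inlier threshold `eps` (in pixels) and the random `seed` are assumed values that the write-up does not state. The function names are hypothetical.

```python
import numpy as np

def fit_homography(src, dst):
    """Least-squares H (with H[2, 2] = 1) from N >= 4 pairs of (x, y) points."""
    A, b = [], []
    for (x, y), (xp, yp) in zip(src, dst):
        A.append([x, y, 1, 0, 0, 0, -x * xp, -y * xp])
        b.append(xp)
        A.append([0, 0, 0, x, y, 1, -x * yp, -y * yp])
        b.append(yp)
    h, *_ = np.linalg.lstsq(np.array(A, float), np.array(b, float), rcond=None)
    return np.append(h, 1.0).reshape(3, 3)

def project(H, pts):
    """Apply H to (N, 2) points and divide out the homogeneous coordinate."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

def ransac_homography(src, dst, n_iters=500, eps=2.0, seed=0):
    """4-point RANSAC: returns (H refit on the best inlier set, boolean inlier mask)."""
    src, dst = np.asarray(src, float), np.asarray(dst, float)
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(n_iters):
        # select four pairs at random and fit H exactly to them
        idx = rng.choice(len(src), size=4, replace=False)
        H = fit_homography(src[idx], dst[idx])
        # inliers are pairs whose projection lands within eps pixels of the match
        err = np.linalg.norm(project(H, src) - dst, axis=1)
        inliers = err < eps
        # keep the largest inlier set seen so far
        if inliers.sum() > best.sum():
            best = inliers
    if best.sum() < 4:
        raise ValueError("fewer than 4 inliers; cannot refit a homography")
    # least-squares refit on all inliers of the best model
    return fit_homography(src[best], dst[best]), best
```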

I got the following results from RANSAC.

Part B.5: Mosaic time!

For this part, I reused my code from Part A and got the following mosaic.

What I Learned

I really enjoyed this project. I found automating the feature detection process very fun. It paralleled how we found correspondences in the face projects: manually in Project 3, then automatically in Project 4. This project was also interesting because afterwards I tried making the same panoramas with my phone, and I could see some of the feature-matching steps playing out.

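Overall, this was a fun project, and I learned that for the best results it's good to avoid repeating patterns in the images, since repeated structures produce many near-identical descriptors that make feature matching ambiguous.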

In the future, I would try to improve the blending process.

Below is the blending process I used.