CS194-26 Final Project

Michael Park - Fall 2020

Objective

The final project consists of two different image processing methods. The first is an attempt to recreate the neural network model from the paper A Neural Algorithm of Artistic Style by Gatys, Ecker, and Bethge (https://arxiv.org/pdf/1508.06576.pdf). The second is my take on the image quilting algorithm, a technique to seamlessly "quilt" a pattern into a larger image.

Reimplement the Neural Algorithm of Artistic Style

The essesnse of generating style transfer images lies in the fact that an image can be separated into its

style and content representations, which can be independently combined to produce images with different

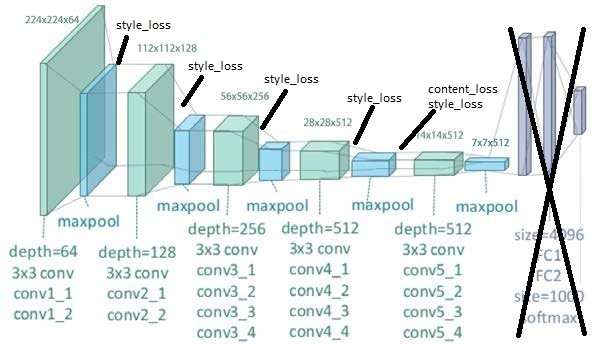

styles. To achieve this, I followed the paper and used the pretrained VGG-19 model. As suggested, I used the

feature space provided by 16 convolutional and 5 pooling layers without the fully connected layers. After

every convolutional layer, I constructed StyleLoss and ContentLoss layers as shown

below, which calculate metrics that represent similarities between convolutional activation values. For the

content loss, I used the MSELoss, the squared-error loss between two feature representations. For

the style loss, I implemented a method to compute gram matrices. Minimizing the squared-error loss of entries

of gram matrices gives us a stylistically similar representation.
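The two losses can be illustrated with a minimal numpy sketch. It is not the project's actual StyleLoss and ContentLoss layers: the function names are hypothetical, the activations are assumed to be a single (channels, height, width) array, and dividing the Gram matrix by the element count is an assumed normalization.

```python
import numpy as np

def gram_matrix(features):
    """Gram matrix of a (channels, height, width) activation array."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)  # one row per feature map
    # Assumed normalization: divide by the number of activation values.
    return flat @ flat.T / (c * h * w)

def content_loss(generated, target):
    """Mean squared error between two feature representations."""
    return float(np.mean((generated - target) ** 2))

def style_loss(generated, target):
    """Mean squared error between the entries of the two Gram matrices."""
    diff = gram_matrix(generated) - gram_matrix(target)
    return float(np.mean(diff ** 2))
```

Because the Gram matrix sums over all spatial positions, rearranging the pixels of an activation map leaves the style loss unchanged while the content loss grows. This is one way to see why the Gram matrix captures texture rather than layout.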

Modified VGG-19


As seen above, I retrieved the style loss after all five convolutional layers, and the content loss after the last convolutional layer (a choice the paper does not specifically suggest). I used the L-BFGS optimizer and ran the optimization for 500 epochs. I initially experimented with different weightings of the style and content representations, and eventually settled on a large weight for the style representation.

Before using the images, some preprocessing was needed. I first resized and center-cropped the images so that the style and content images had consistent dimensions. Then, I normalized all images by the mean and standard deviation used to train the VGG-19 network.
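The cropping and normalization steps can be sketched roughly as follows. The sketch assumes the image has already been resized and loaded as a (height, width, 3) uint8 array, and the mean and standard deviation are the ImageNet statistics commonly used with pretrained VGG models. Both the values and the function names are assumptions, not taken from the project code.

```python
import numpy as np

# Assumed values: ImageNet channel statistics often paired with pretrained VGG.
ASSUMED_MEAN = np.array([0.485, 0.456, 0.406])
ASSUMED_STD = np.array([0.229, 0.224, 0.225])

def center_crop(image, size):
    """Crop a (height, width, 3) array to a centered size x size square."""
    h, w = image.shape[:2]
    if h < size or w < size:
        raise ValueError("image is smaller than the requested crop")
    top = (h - size) // 2
    left = (w - size) // 2
    return image[top:top + size, left:left + size]

def normalize(image, mean=ASSUMED_MEAN, std=ASSUMED_STD):
    """Scale uint8 pixels to [0, 1], then standardize each RGB channel."""
    scaled = image.astype(np.float64) / 255.0
    return (scaled - mean) / std
```

Applying the same crop size to the style and content images keeps their activations the same shape at every layer.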

Below are the results. I first performed neural style transfer on the Neckarfront image, as in the paper, so that the results could be compared:

Neckarfront


Shipwreck


Starry Night


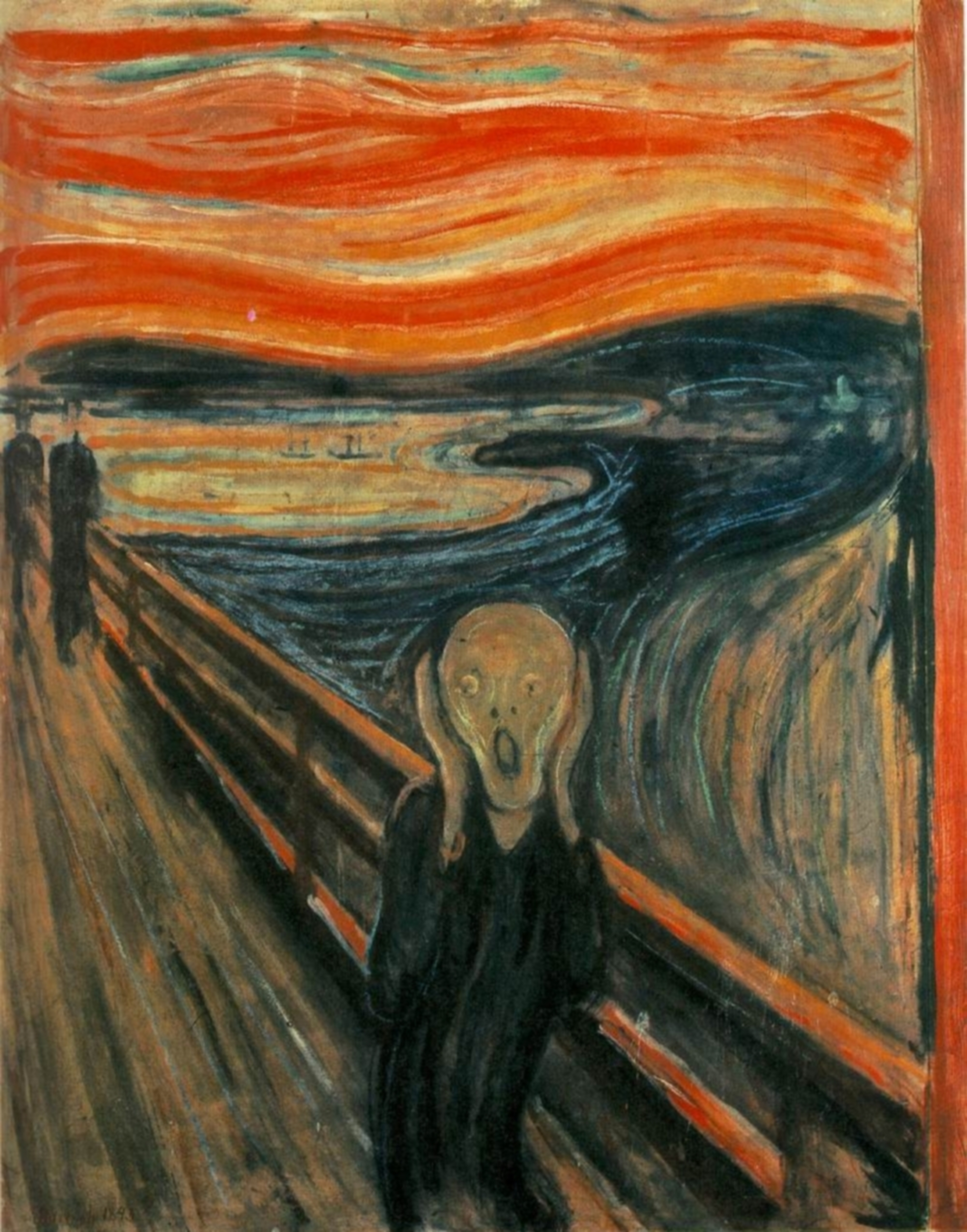

Der Schrei


While I believe that the resulting images do indeed reflect a stylistic transfer of the input images, there are subtle differences between the images above and those shown in the paper. Images generated by my implementation seem to replicate the textures and colors well. However, unlike those in the paper, they do not fully reflect the defining patterns of the style images. This may be because I begin the transfer from a copy of the input content image rather than from a blank image or white noise.

Below are style transfers done for my own images:


Below are the results using my own style images:

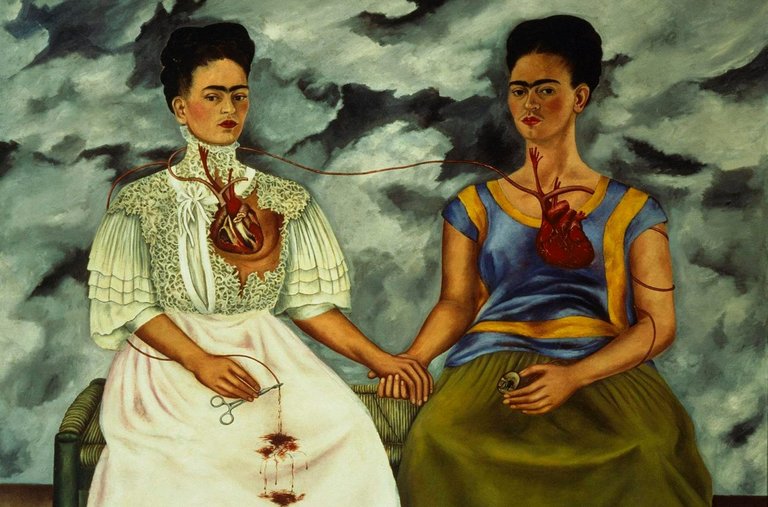

Frida Kahlo


Cave Painting


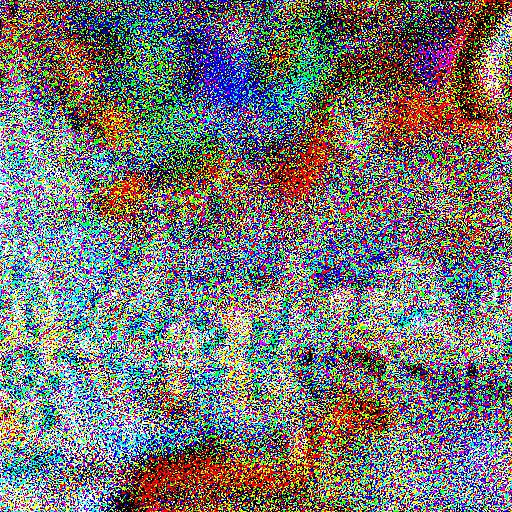

Unfortunately, some images failed. For these, gradient descent seems to diverge, producing an image whose style representation resembles noise. Tuning the hyperparameters to prevent overfitting may mitigate this, but I am not entirely sure what causes it.

Failed Neckarfront


Image Quilting

For this task, I explored different methods of using repetitive image patterns to form image "quilts". To do so, I primarily used PIL.Image and numpy to crop and paste parts of images.

First, I generated a quilt using random sampling. I simply used a random number generator to sample patches of the patterned image for quilting. Below are the results:
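Random-sampling quilting can be sketched in a few lines of numpy. The function names, the square output, and the clipping of patches at the border are assumptions made for illustration, not details from the project code.

```python
import numpy as np

def random_patch(texture, patch_size, rng):
    """Cut a patch_size x patch_size window at a uniformly random position."""
    h, w = texture.shape[:2]
    top = rng.integers(0, h - patch_size + 1)
    left = rng.integers(0, w - patch_size + 1)
    return texture[top:top + patch_size, left:left + patch_size]

def quilt_random(texture, patch_size, out_size, seed=0):
    """Tile an out_size x out_size quilt with independently sampled patches."""
    rng = np.random.default_rng(seed)
    out = np.zeros((out_size, out_size) + texture.shape[2:], dtype=texture.dtype)
    for top in range(0, out_size, patch_size):
        for left in range(0, out_size, patch_size):
            patch = random_patch(texture, patch_size, rng)
            # Clip the patch where it would run past the output border.
            rows = min(patch_size, out_size - top)
            cols = min(patch_size, out_size - left)
            out[top:top + rows, left:left + cols] = patch[:rows, :cols]
    return out
```

Since neighbouring patches are chosen independently, nothing ties their edges together, which is why seams are clearly visible with this method.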


Next, I used the overlapping patches technique to choose patches less randomly, for smoother stitching. I calculated the Sum of Squared Differences (SSD) between the overlapping portion of the previous patch and that of every possible patch. From these SSDs, I took the minimum value, multiplied it by a tolerance factor (1.05 in my case), and then randomly sampled a patch from the candidates with SSDs below that value. Using this sampling technique, I filled the resulting quilt from the top left towards the bottom right.
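The selection step can be sketched as below for a single left-to-right overlap on a grayscale texture. The helper names are hypothetical, and the comparison uses `<=` rather than a strict `<` (an assumption) so that the best match stays eligible when the minimum SSD is zero. Handling the overlap with the row above would add a second strip to the SSD in the same way.

```python
import numpy as np

def candidate_patches(texture, patch_size):
    """All patch_size x patch_size windows of a grayscale texture, as floats."""
    h, w = texture.shape
    return [texture[r:r + patch_size, c:c + patch_size].astype(np.float64)
            for r in range(h - patch_size + 1)
            for c in range(w - patch_size + 1)]

def pick_patch(prev_patch, candidates, overlap, rng, tolerance=1.05):
    """Sample a candidate whose left strip nearly matches prev_patch's right strip."""
    target = np.asarray(prev_patch, dtype=np.float64)[:, -overlap:]
    ssd = np.array([np.sum((cand[:, :overlap] - target) ** 2)
                    for cand in candidates])
    # Keep every candidate within the tolerance of the best SSD.
    eligible = np.flatnonzero(ssd <= ssd.min() * tolerance)
    return candidates[rng.choice(eligible)]
```

A tolerance of exactly 1.0 would always return one of the best matches and tends to repeat the same patches. A larger tolerance trades seam quality for variety.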

Below are the results. Note that the black borders are caused by overlapping the patches:


Lastly, I attempted to find a min-cut seam to smooth out the visible artifacts in the quilted images. To do so, I came up with an algorithm that finds the min-cost contiguous path from the left to the right side of the overlapping regions of the patches (***Bells and Whistles). This gives the cut that "costs" the least. For the cost metric, I continued to use the SSD. I then generated binary masks that cut the patches to the appropriate shapes before stitching.
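One common way to find such a path is dynamic programming over a per-pixel cost array, sketched below. This is a standard formulation used here as a stand-in, not necessarily the algorithm I wrote for the project. The function names are hypothetical, the path is assumed to move at most one row up or down per column, and the mask convention (rows below the seam come from the new patch) is also an assumption.

```python
import numpy as np

def min_cost_seam(cost):
    """Return one row index per column tracing the cheapest left-to-right path."""
    rows, cols = cost.shape
    total = cost.astype(np.float64).copy()
    for c in range(1, cols):
        prev = total[:, c - 1]
        up = np.concatenate(([np.inf], prev[:-1]))   # arriving from row r - 1
        down = np.concatenate((prev[1:], [np.inf]))  # arriving from row r + 1
        total[:, c] += np.minimum(prev, np.minimum(up, down))
    # Backtrack from the cheapest end point, staying within one row per step.
    seam = np.empty(cols, dtype=int)
    seam[-1] = int(np.argmin(total[:, -1]))
    for c in range(cols - 2, -1, -1):
        r = seam[c + 1]
        lo, hi = max(r - 1, 0), min(r + 2, rows)
        seam[c] = lo + int(np.argmin(total[lo:hi, c]))
    return seam

def seam_mask(seam, rows):
    """Boolean mask that is True strictly below the seam in each column."""
    return np.arange(rows)[:, None] > seam[None, :]
```

Here `cost` would be the squared difference between the two overlapping strips at each pixel, and the mask selects which strip supplies each pixel in the stitched result.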

Below are the results for the given images and my own images. Unfortunately, for some images, my technique does not seem to produce "organic" results. This is most likely because my patterns are not detailed enough, which makes it difficult to find varied patches that fall under the threshold.
