CS194-26 Project 5

Light Field Camera

This is the first part of the final project. We have a set of images captured over a plane orthogonal to the optical axis, arranged on a 17 * 17 grid, which gives 289 images in the example used. The images differ from one another only by a slight shift, and the effects we want come mainly from shifting and averaging them in different ways. We are trying to reproduce those effects with the lightfield data.

First, we do the depth refocusing. Basically, we change which depth of the scene appears in focus. We define a center coordinate for the image set, which is the average of all the image coordinates. Next, we shift each image by its offset from that center, scaled by a shifting weight. We repeat this for every image in the set. Lastly, we take the average of the shifted images as the output. That results in one refocused image. To form a sequence of focal points, I sweep the shifting weight over a range, which is -3 to 3 in my example. The following images are samples of the change from one focal point to another, and the gif shows the process of changing the focal point.
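The shift-and-average steps above can be sketched as follows. This is a minimal sketch and not the exact code used for the results: the function name `refocus`, the `(row, col)` coordinate layout, and the use of integer shifts with `np.roll` (which wraps around at the borders) are all assumptions made for illustration. A fuller version would likely use sub-pixel shifts, and the sign of the shift depends on how the grid coordinates are defined.

```python
import numpy as np

def refocus(images, coords, alpha):
    """Shift every image by its scaled offset from the center, then average.

    images: array of shape (N, H, W) or (N, H, W, C)
    coords: array of shape (N, 2), the (row, col) position of each image
            on the capture grid (assumed layout)
    alpha:  shifting weight (swept from -3 to 3 in the write-up)
    """
    images = np.asarray(images, dtype=np.float64)
    coords = np.asarray(coords, dtype=np.float64)
    center = coords.mean(axis=0)  # center = average coordinate
    out = np.zeros_like(images[0])
    for img, pos in zip(images, coords):
        # Integer shift for simplicity (assumption); np.roll wraps at the edges.
        dy, dx = np.rint(alpha * (pos - center)).astype(int)
        out += np.roll(img, shift=(dy, dx), axis=(0, 1))
    return out / len(images)
```

With `alpha = 0` no image is shifted, so the output is the plain average of the set. Sweeping `alpha` over a range such as `np.linspace(-3, 3, 13)` gives the frames for a refocusing gif.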

Perspective 1

Perspective 2

Process of changing focal point

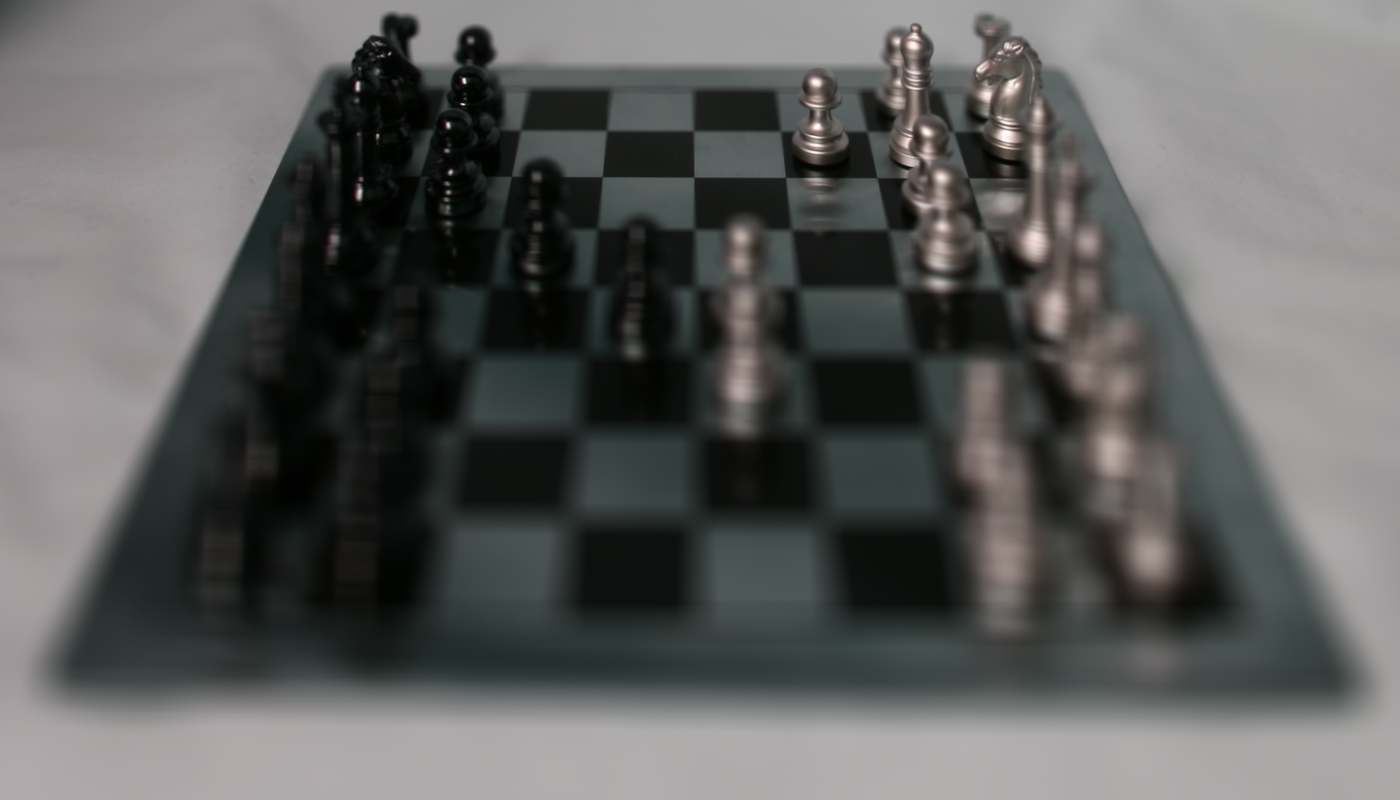

Next, we adjust the aperture. Our goal is to change the aperture while keeping the same focal point. As in the refocusing, we calculate the center coordinate. But this time, instead of shifting each image by its offset from the center, we define a radius around the center, keep only the images of the original set that fall inside it, and average them as in the last part. A small radius acts like a small aperture and a large radius like a large one. Examples are provided below, where the focal point is always kept on the top of the chess board.
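A minimal sketch of the radius-based averaging, under the same assumptions as before: the name `adjust_aperture` and the `(row, col)` coordinate layout are hypothetical, and the images are assumed to be already aligned to the desired focal point (for example by the shift step from the refocusing part).

```python
import numpy as np

def adjust_aperture(images, coords, radius):
    """Average only the images whose grid position lies within `radius`
    of the center coordinate.

    images: array of shape (N, H, W) or (N, H, W, C)
    coords: array of shape (N, 2), the (row, col) grid positions (assumed layout)
    radius: aperture radius in grid units
    """
    images = np.asarray(images, dtype=np.float64)
    coords = np.asarray(coords, dtype=np.float64)
    center = coords.mean(axis=0)
    dist = np.linalg.norm(coords - center, axis=1)
    keep = dist <= radius  # boolean mask of images inside the aperture
    if not keep.any():
        raise ValueError("radius excludes every image")
    return images[keep].mean(axis=0)
```

A radius of 0 keeps at most the image exactly at the center (if the grid has one there), while a radius that covers the whole grid averages all the images.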

Perspective 1

Perspective 2

Process of changing aperture

Bells and Whistles

For the bells and whistles, I tried using my own data to produce the light field camera effect. I used a set of 4 * 4 = 16 images of a banana that I shot with my phone camera, shifting the camera between shots. Honestly, the shifting was not done precisely, so my result looks bad. I guess you can tell the algorithm is somewhat correct because the parts that are not affected much by the movement, such as the background table, look fine, but the actual object, the banana, does not look so good. My result might have failed because the shifts in angle were inconsistent and the data set is not large enough. I also saw that 2 of the intermediate results were zeroed out. A possible improvement would be to use a better camera and keep the movement between shots fixed.

Original banana

Refocus (Failed)

Adjust Aperture (Failed)

A Neural Algorithm of Artistic Style

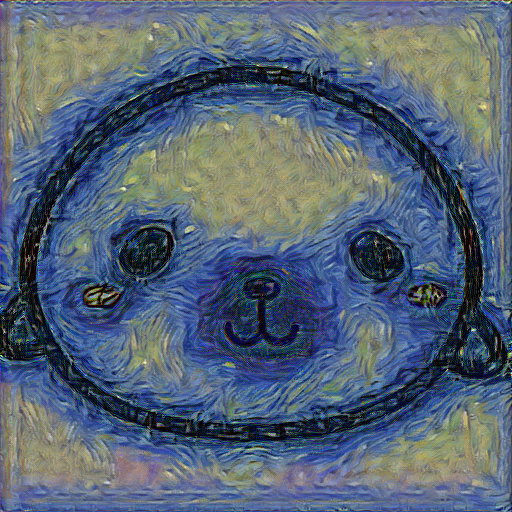

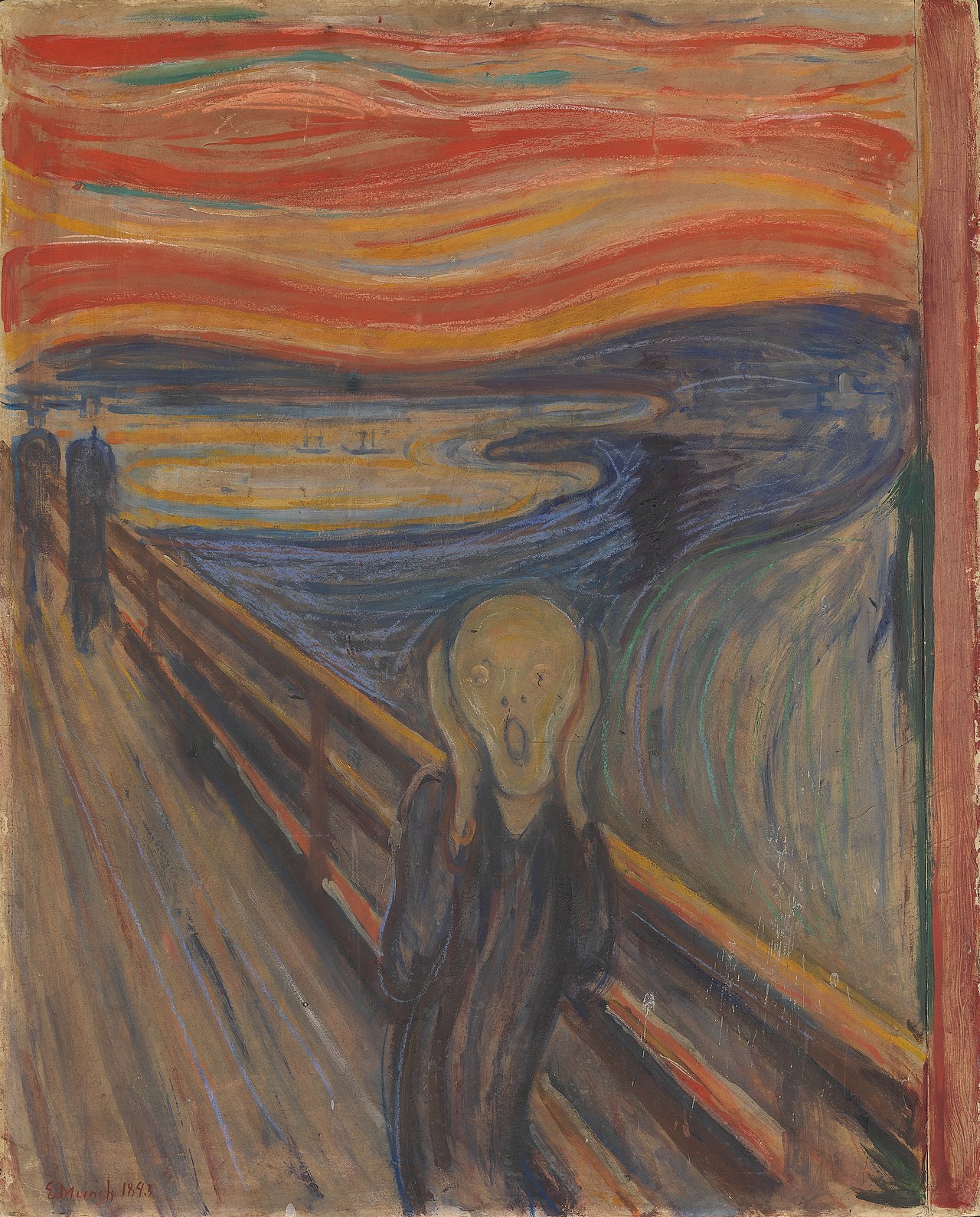

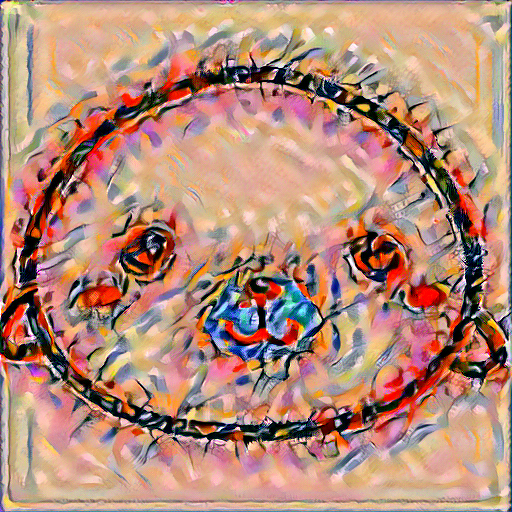

This is the second part of my final project, which is a reimplementation of the paper A Neural Algorithm of Artistic Style. As described in the paper, we use VGG19 as the network and LBFGS as the optimizer. We define two losses, the style loss and the content loss, where the style refers to the input artwork and the content refers to the content image we input. We weight the two losses differently because we are mainly interested in the style loss. I run the optimization for 300 iterations. For the following sample images, I use the same artworks as the paper for the style input, and the seal image that I used for all my 194 webpages as the content input (the original image is on the left hand side).
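The two losses can be sketched on plain arrays as follows. This is only an illustration of the loss terms as the paper defines them for a single layer, not my actual training code: in the real pipeline the feature maps come from VGG19 layers and the total loss is minimized over the image pixels with LBFGS. The function names and the default weights `content_weight` and `style_weight` are placeholders chosen for this sketch.

```python
import numpy as np

def gram_matrix(features):
    """features: (C, H, W) feature map -> (C, C) matrix of channel correlations."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T

def content_loss(gen, content):
    """Half the squared error between generated and content feature maps."""
    return 0.5 * np.sum((gen - content) ** 2)

def style_loss(gen, style):
    """Squared error between Gram matrices, normalized by 4 * C^2 * (H*W)^2."""
    c, h, w = gen.shape
    diff = gram_matrix(gen) - gram_matrix(style)
    return np.sum(diff ** 2) / (4.0 * c ** 2 * (h * w) ** 2)

def total_loss(gen, content, style, content_weight=1.0, style_weight=1e3):
    # Placeholder weights (assumption); the style term is weighted more heavily.
    return (content_weight * content_loss(gen, content)
            + style_weight * style_loss(gen, style))
```

In the paper the style loss is summed over several layers, each with its own weight, while the content loss uses a single layer. The single-layer version here keeps the example short.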

Starry Night

Starry Night on seal

The Shipwreck of the Minotaur

The Shipwreck of the Minotaur on seal

Der Schrei

Der Schrei on seal

Femme nue assise

Femme nue assise on seal

Composition VII

Composition VII on seal

Conclusion

In the final project, I feel I learned a lot. The light field camera part is an interesting application of changing the focal point of an image, which I have seen in a lot of apps. It is exciting that I can write it myself and apply it to images from my own collection. Next, the neural algorithm can apply artistic effects to other images, and that is so cool. This is my first class where I coded a real-life application of ML (well, I truly don't think PacMan from 188 is a "real-life" application), so seeing the interaction between artwork and ML is exciting to me.