Following hints from Piazza, I reimplemented NST (neural style transfer) using the VGG16 network from PyTorch. The hyperparameters I used are:

the default LR and, as the main difference from the paper, only the first conv layer for the content. For the style, I use the first 5 conv layers, as in the paper.
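As a rough illustration of the losses involved, here is a minimal NumPy sketch of a content loss and a Gram-matrix style loss computed on feature maps. The function names, the `(C, H, W)` layout, and the normalization by `C * H * W` are assumptions for illustration; this is not the exact code I ran, which works on VGG16 activations in PyTorch.

```python
import numpy as np

def gram_matrix(features):
    """Gram matrix of a (C, H, W) feature map, normalized by its size."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)      # one row per channel
    return flat @ flat.T / (c * h * w)     # (C, C) channel correlations

def content_loss(gen_feat, content_feat):
    """Mean squared error between generated and content feature maps."""
    return np.mean((gen_feat - content_feat) ** 2)

def style_loss(gen_feats, style_feats):
    """Sum over layers of the MSE between Gram matrices."""
    return sum(
        np.mean((gram_matrix(g) - gram_matrix(s)) ** 2)
        for g, s in zip(gen_feats, style_feats)
    )
```

In this sketch, `gen_feats` and `style_feats` would hold one feature map per chosen style layer, while the content loss would use a single layer.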

I use these 3 images (Van Gogh, Picasso, and The Scream) as the style images, shown below:

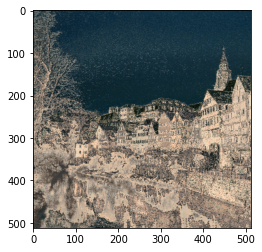

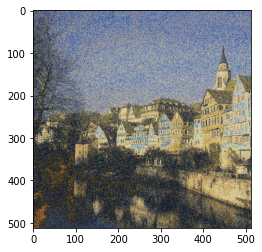

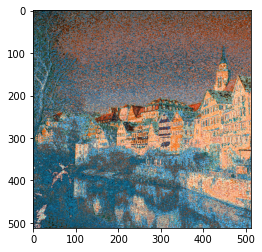

I transfer these styles onto the following image and get the following results. They don't capture as much of the brush style as the paper's results do, but they have a particle-like effect resembling the uneven distribution of paint on a brush:

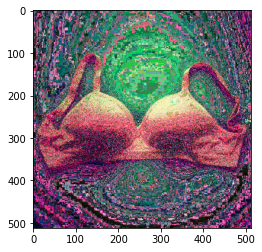

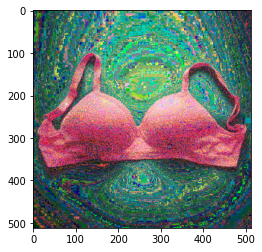

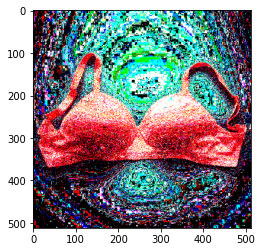

For my own style, I chose this tropics painting and mapped it onto a bra, a vaccine, and a bottle image.

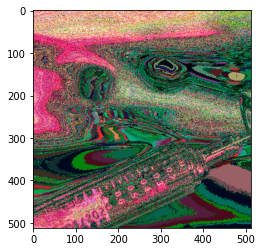

For the vaccine and bottle, the results are as shown below (bottle being a failure case, since the image is almost uniformly white/transparent):

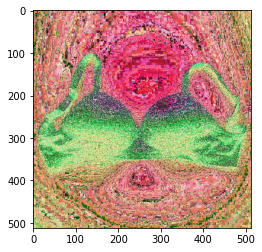

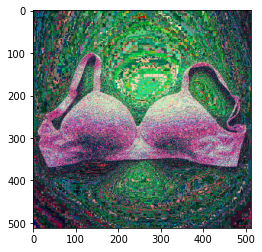

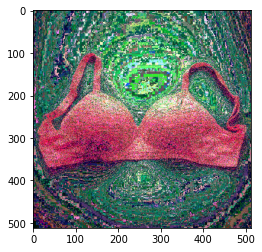

The bra works very well as content for the tropics style. Shown below are some intermediate results from the training process (tuning for this image may be why the more brush-like strokes from the paper don't look as nice).

The yellow and white show up, and interestingly the teal gets converted to a lighter version of the dark green in the style image.

Using light field data, we can simulate depth refocusing and aperture adjustment on a scene. We download the lego and chess datasets.

We can shift each image relative to the center image and average the results; varying the shift amount changes which depth is in focus. Shown below is a GIF of the depth refocusing.
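The shift-and-average idea can be sketched roughly as follows. This is a minimal version under assumptions: `images` is an `(N, H, W, C)` array, `uv` holds each image's grid position, `alpha` is a hypothetical parameter scaling the shift, and shifts are rounded to whole pixels with `np.roll` (which wraps around at the borders). The sign of the shift depends on the dataset's coordinate convention.

```python
import numpy as np

def refocus(images, uv, alpha):
    """Shift each sub-aperture image toward the center view, then average.

    images: (N, H, W, C) array; uv: (N, 2) grid positions; alpha: shift scale.
    """
    center = uv.mean(axis=0)               # assumed center of the camera grid
    out = np.zeros_like(images[0], dtype=np.float64)
    for img, (u, v) in zip(images, uv):
        du = int(round(alpha * (u - center[0])))   # horizontal shift in pixels
        dv = int(round(alpha * (v - center[1])))   # vertical shift in pixels
        out += np.roll(img, (dv, du), axis=(0, 1))
    return out / len(images)
```

Sweeping `alpha` over a range of values and saving each result as a frame would give a refocusing animation like the GIF.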

We can also simulate changing the aperture by averaging only the images within a given radius of the center image; a larger radius simulates a larger aperture.
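A minimal sketch of the aperture simulation, assuming the same `(N, H, W, C)` image array and `(N, 2)` grid positions `uv` as inputs (both names are hypothetical), with `radius` measured in grid units:

```python
import numpy as np

def adjust_aperture(images, uv, radius):
    """Average the sub-aperture images within `radius` of the grid center."""
    center = uv.mean(axis=0)
    dist = np.linalg.norm(uv - center, axis=1)   # distance of each view to center
    mask = dist <= radius
    if not mask.any():                           # fall back to the closest view
        mask = dist == dist.min()
    return images[mask].mean(axis=0)
```

With `radius = 0` only the center view is used (a pinhole-like image), and increasing the radius blurs everything away from the focal plane.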