This project looks very artistic and good-looking so I chose to work on this one. In this project, I re-implemented Gatys et.al.'s paper: A Neural Algorithm of Artistic Style, using feature maps in CNN to learn the content from the content image and style from the style image. Each convolution layer of a CNN learns some features of the image, and the paper identifies the layers that learns the style/content features. We then directly visualize these information and reconstruct the image from direct outputs of these layers.

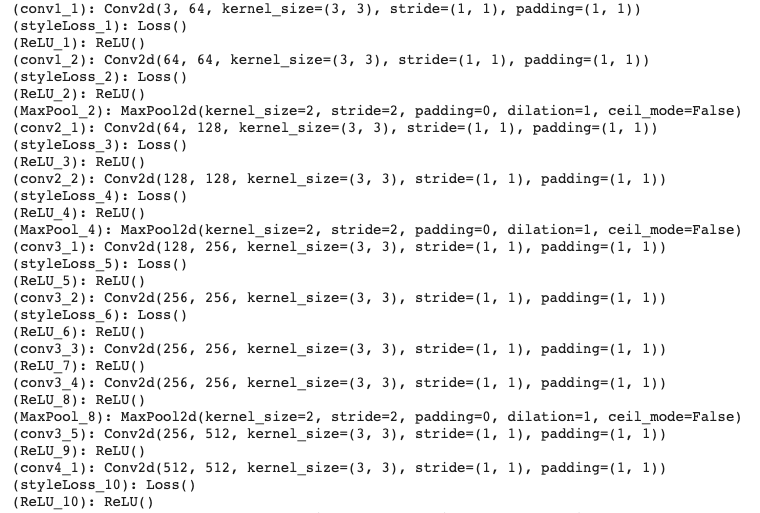

I used the same VGG-19 model as the paper did, which ahas 16 convolution layers. I labeled the convolution layers as follows, and select (conv1_1, conv1_2, conv2_1, conv2_2, conv3_1, conv3_2 conv4_1, conv5_1) as style layers (added 3 style layers after experimentation), and conv4_2 as the content layer. After each of these layers, I added a respective content loss or style loss layer.

|

Here is a full architecture of the modified network:

|

|

I defined the loss function for the network as a weighted sum of the content losses and style loss. The content loss is the mean square error of layer outputs from the original iamge and generated image. The style loss is computed by first finding the gram matrices of the corresponding connvolution layers, then computing the mean square error. The neural network is trained by back propagating on the total loss, with the style weight ratio: 1/10000 or 1/100000. I used the LBFGS optimizer and trained with 1000 number of steps.

Here are some results after re-implementing Neckarfront. The reasons that my results may differ from the paper's results can be: 1. The original art works I find online can have coloring differences from the works the paper uses (e.g. the Starry Night I used is a lot more bright), 2. I used a slightly different structure and different parameters.

|

|

|

|

|

|

|

|

|

|

|

|

Here are some other images from my collection:

|

|

|

|

|

|

Here is a failure case. It fails because the houses in the style image appears in the region of sky of the resulting style transfer, probably because the style image has too few things and thus too few features. Specifically, the neural network is unable to learn the style of "sky" from the style image which doesn't have a region for sky.

|

|

|

This is one of my favorite projects of this class. It's just amazing how the researchers realize which layers capture style vs content features and it would be interesting to learn about that possibly after the class.

In this project, I created the effect of light field cameras using techniques of depth refocusing and aperture adjustment, introduced in Ng et al.'s paper: Light Field Photography with a Hand-held Plenoptic Camera. We mimic the images taken from a light field camera with varying focus length and aperture size using images taken from a normal camera with grid-like displacement, orthogonal to the optical axis. The example data we used are from the Stanford Light Field Archive dataset, with 17*17 images per set.

If we move the camera around without changing the optical axis direction, the positions of objects from far away don't change much, but the positions of nearby objects may change significantly. We utilize this fact to reproduce the effect of refocusing on different depths of the image by shifting the images to match on different depths.

Given the 17*17 images, we identify the center image to be the (8,8) image and shift an image (i,j) by "f * (i-8, j-8)", where "f" is a hyperparameter that we manually choose to represent different focus depth. We may customize the range of alpha for each set of images. We then average the shifted images to get the refocused images at different depths, and combine them to generate the gif.

Here are some outputs I generated using the Stanford dataset:

|

|

|

|

We can also simulate variable aperture sizes of a light field camera, which result in varying extents of blurry parts. Intuitively, if we include more images in the shifting and averaging process, the displacement differences are larger, so the more blurrer the result will be.

The implementation to aperture adjustment is similar to depth refocusing. I used a hyperparameter to indicate different aperture radius. If for image (x, y), the displacement required to shit to the center is within the radius specified, the image will be selected as part of the set of images that are used to create the image with the aperture of the specified radius.

Here are some successful results I produced:

|

|

|

|

I took some real life pictures in a 5*5 grid, trying to reproduce the effects achieved in previous parts. However, due to technical constraints (using only my phone and a ruler to measure the grid), the pictures often deviate from desires grid points and cannot maintain a fixed angle. The results are quite blurry, and rather poor compared to the above dataset. This should be the main reason that this turned out to be a failure case. Another possible reason is that I used only a 5*5 grid of pictures instead of 17*17 grid of pictures, and not sufficient perspectives are provided by the limited data.

This is probably my worst performing results of this semester :(

|

|

|

|

|

Thia is also a fun project. It's quite surprising how the effect of a camera can be achieved wih simple operations and 10ish lines of code. It's also fun to learn about how cameras work.