Image-to-Image Translation with Modified CycleGANs

by Huy HoangIntroduction

In this project, I use a modified version of the CycleGAN model proposed in this paper to learn the style filter of my target dataset and apply it to my source dataset. My motivation for using this approach as opposed to the Neural Style Transfer approach proposed by Gatys et. al was that I wanted to learn the mapping between 2 image collections by capturing the correspondences between higher-level features (e.g. faces, trees, etc.), rather than just between 2 images.

Preparing Data for Training

In order to get the necessary data for training, I wrote 2 web scrapers using Python and Selenium. The first web scraper fetches images from WikiArt and the second one fetches images from Instagram. For this project, I decided to use Gustav Klimt paintings for my target dataset and photos by @by.hokim and @sakaitakahiro_ on Instagram for my source dataset.

Here are some photos:

Model Overview

The CycleGAN models first assumes some underlying relationship between the 2 image domains and tries to find a mapping G: X -> Y such that there's some discernible pairing between an individual input x and output y. For this purpose, it proposes that image translation should be "cycle consistent", meaning that if there exists a mapping G: X -> Y, we should have a mapping F: Y -> X that is effectively the inverse of G. In other words, when an input image x is fed into G and the result is then fed into F, we should end up with something similar to the original image x.

The original model uses a combination of cycle consistency loss that tries to make sure F(G(x)) = x and G(F(y)) = y and adversarial losses of each mapping function to make sure they can generate images that look similar to those in the target domain.

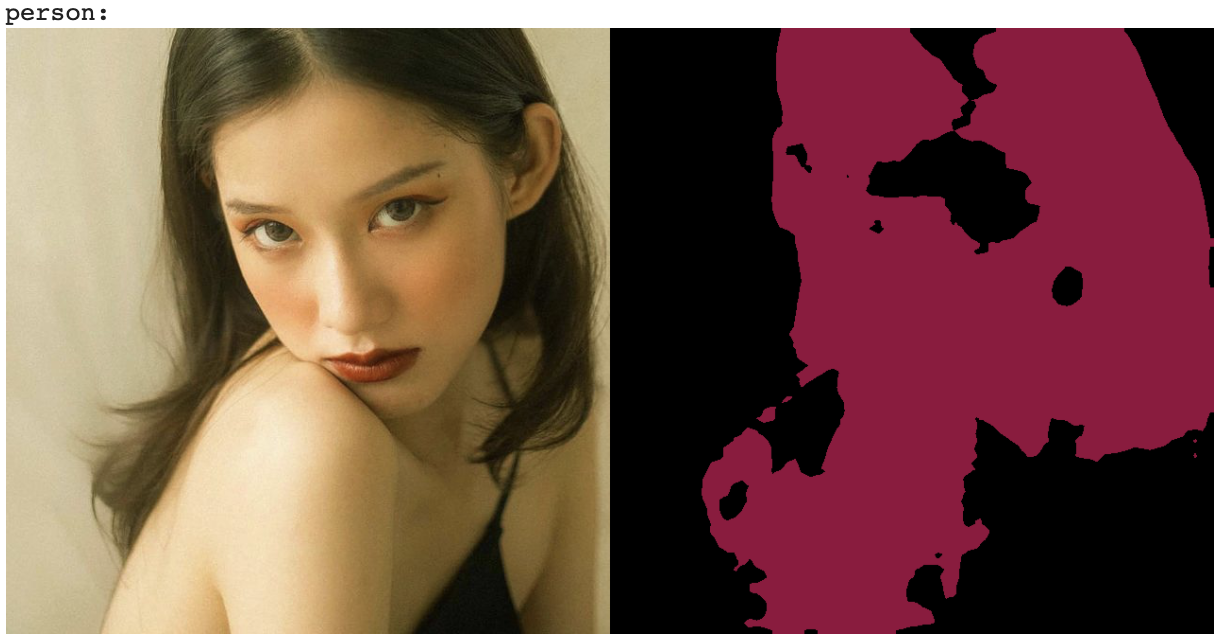

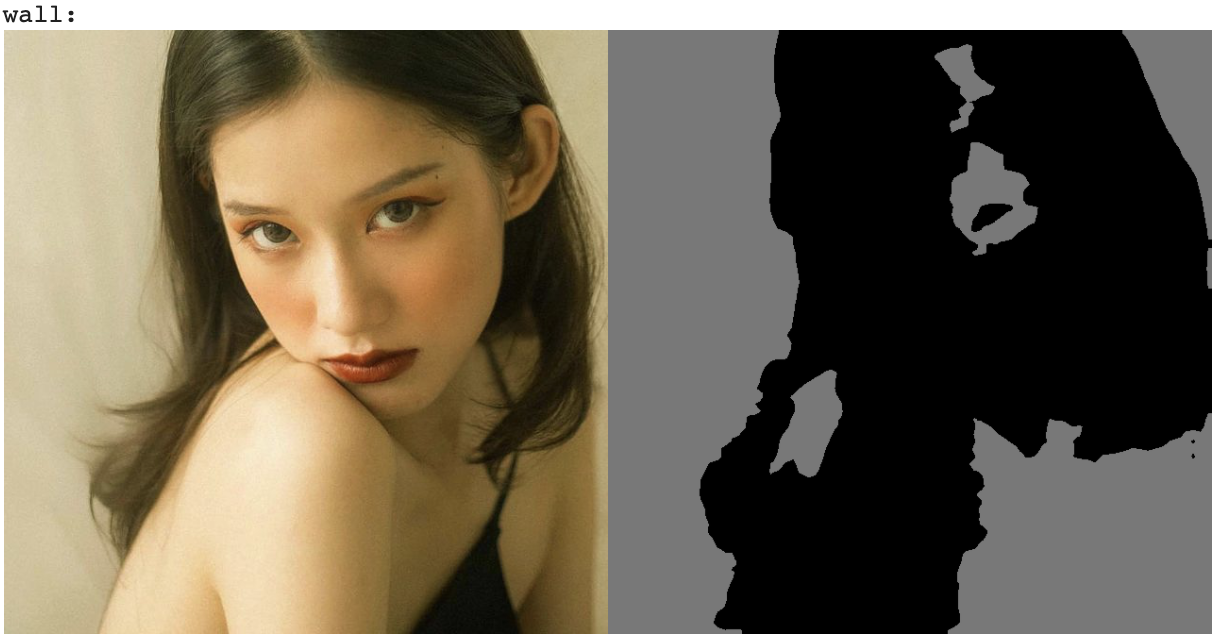

In addition to these 2 loss functions, I added another loss function based on a pretrained semantic segmentation network in order to prevent the model from changing the images too much that makes it unrecognizable. I found a PyTorch implementation of a pretrained model based on the MIT ADE20K dataset which worked decently in parsing my portrait photos. The models return a semantic segmentation mask that shows the predicted category for each pixel in the image. Below is an example of the result -- the first photo shows what the network perceives as a person and the second a wall.

Lastly, I wanted to prevent the network from making too many changes to higher-level features so I reduced the depth of the default generator & discriminator network. This ensures that the network focuses more on low-level features like color and simple textures.

Results

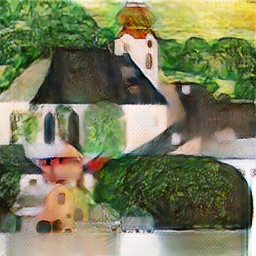

Below are some results where I try to translate the style of my target dataset (Gustav Klimt paintings) to my source dataset (photos by @by.hokim and @sakaitakahiro_ on Instagram) and vice-versa.

Here are the results for translating the style of these portrait photos to Gustav Klimt paintings

Observations

When translating styles from the portrait photos to Klimt paintings, I noticed a smoothing effect that it has on the final results, in addition to a change in color tone. This is expected as most @sakaitakahiro_ images has a slight green tint and unsurprisingly, the portrait photos are sharper and smoother than the paintings.

When translating styles from Klimt paintings to the portrait photos, I saw that my results varied greatly. I suspect that this might be because the Klimt paintings were of much lower resolution and a lot of them were not portraits. This mismatch in content of the images between the source and target dataset might be the reason why my results were not as pleasing as I had hoped.

Conclusion

Given more time and resources, I'd like to train my model for much longer than just 100 epochs and finetune it more to achieve better results. Additionally, picking datasets whose content are more similar would deliver better images as well. Overall, working on this project was a really fun experience and I learned a lot from it.