Tony Lian

Email: longlian at berkeley

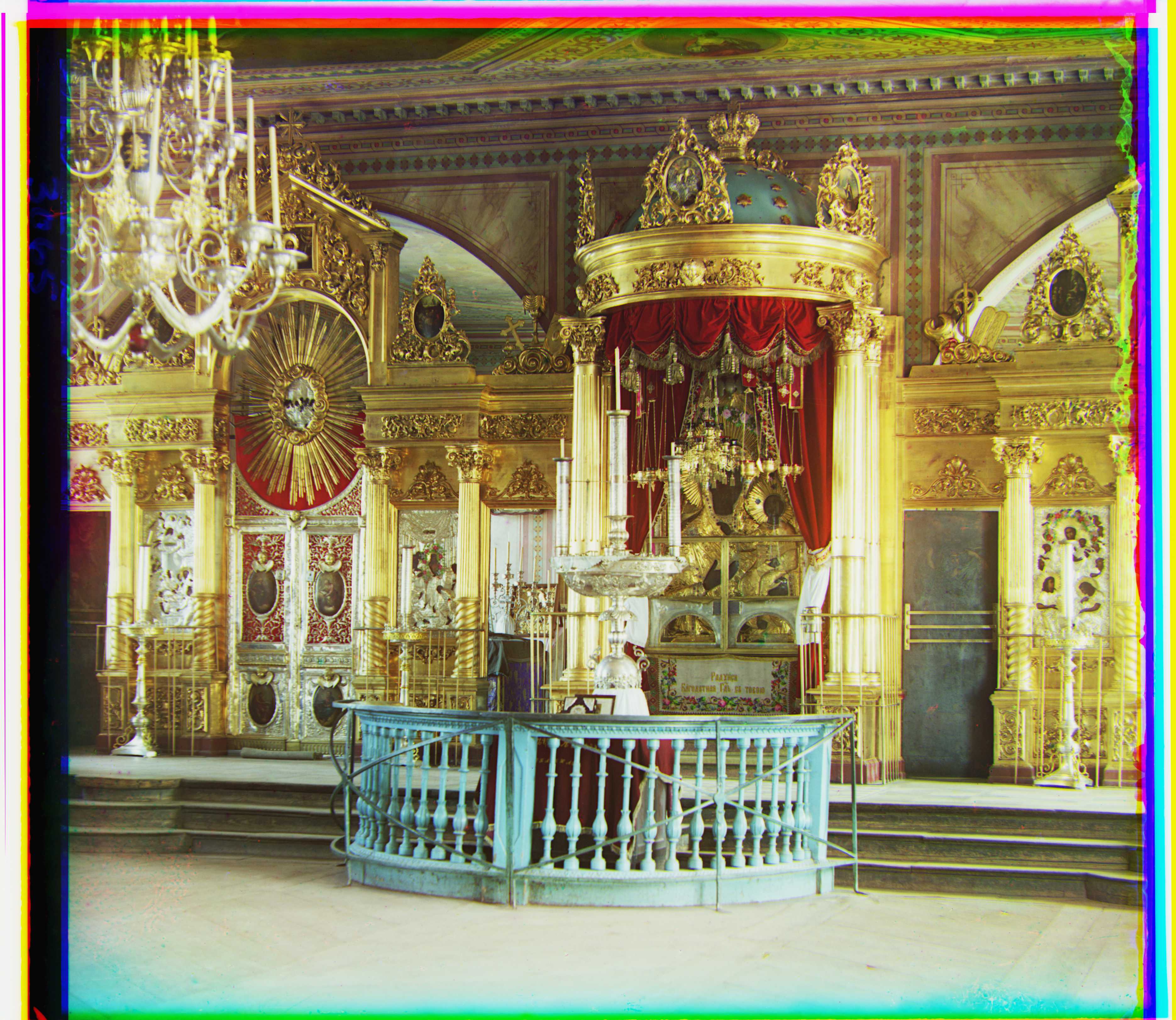

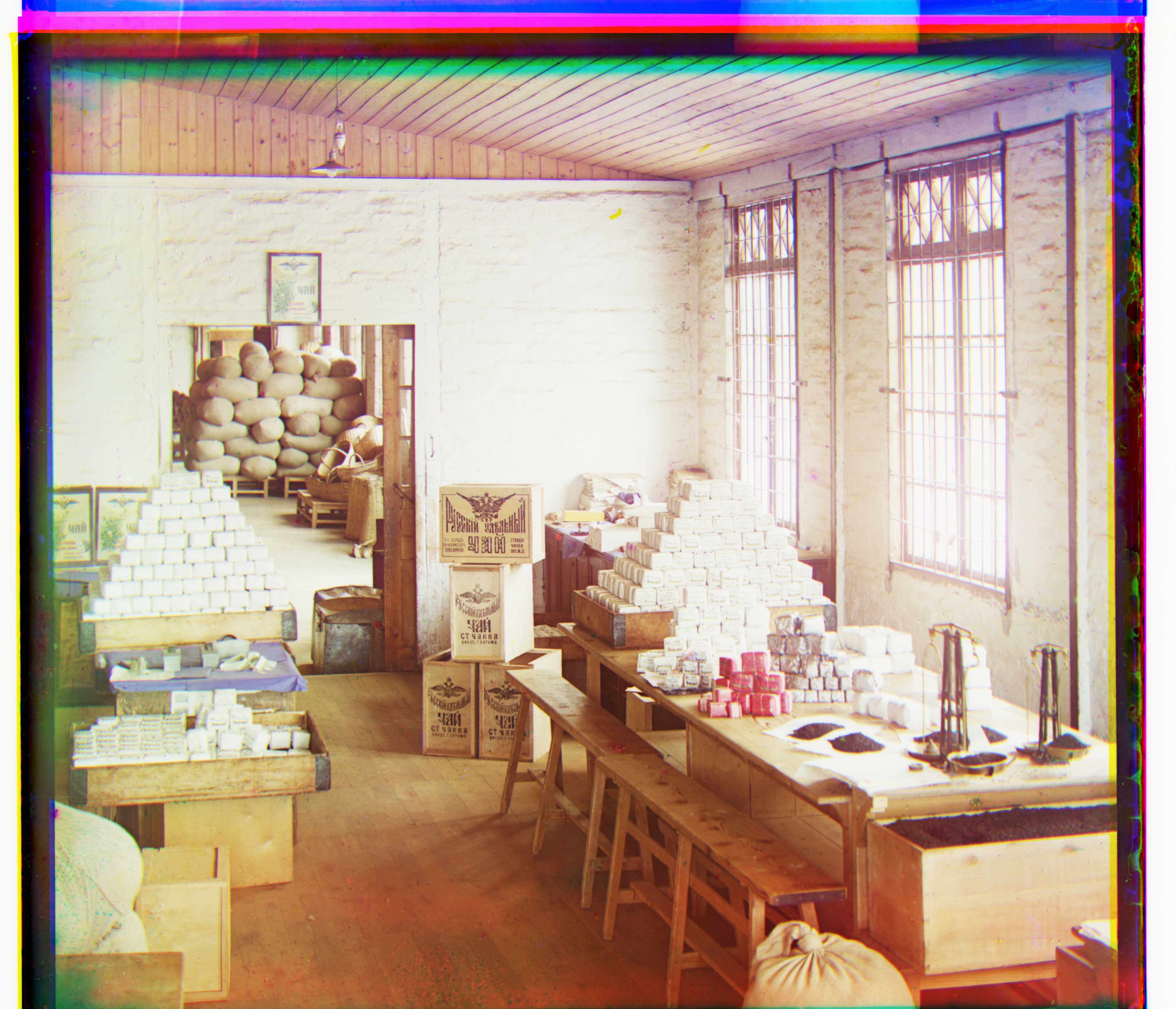

This project reconstructs color images from Sergei Mikhailovich Prokudin-Gorskii's photo collection. Each original photo was captured as three separate exposures through red, green, and blue filters. The goal is to align these three channels and stack them into a single color image.

For the single-resolution version, I use a simple metric: normalized cross-correlation (NCC) with mean subtraction. I first subtract each channel's mean from that channel, then L2-normalize both channels and take their dot product. I search over offsets from -15 to 15 pixels, aligning G to B and then R to B. The metric is evaluated only on the central 80% of the image (10% is cropped from each of the four sides before evaluation). This works well on the small images. However, a range of -15 to 15 is far too small for the large .tif images, and enlarging it slows the search down considerably, since the number of candidate offsets grows with the square of the range. Therefore, I need a multi-resolution version.
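A minimal sketch of this single-scale search in Python with NumPy. The function names (`ncc`, `center_crop`, `align_exhaustive`) are hypothetical, and shifting with `np.roll` (which wraps pixels around the edges) is an assumption; the write-up does not say how channels are shifted.

```python
import numpy as np


def ncc(a, b):
    """Zero-mean NCC: subtract each mean, L2-normalize, take the dot product."""
    a = a - a.mean()
    b = b - b.mean()
    a = a / (np.linalg.norm(a) + 1e-12)  # epsilon guards against flat channels
    b = b / (np.linalg.norm(b) + 1e-12)
    return float(np.sum(a * b))


def center_crop(img, frac=0.1):
    """Keep the central region by cropping `frac` of height/width from every side."""
    h, w = img.shape
    dh, dw = int(h * frac), int(w * frac)
    return img[dh:h - dh, dw:w - dw]


def align_exhaustive(channel, reference, radius=15):
    """Return the (dy, dx) roll of `channel` that maximizes NCC with `reference`."""
    ref = center_crop(reference)
    best_score, best_shift = -np.inf, (0, 0)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # np.roll wraps pixels around; the center crop largely hides wrapped rows/cols.
            shifted = np.roll(channel, (dy, dx), axis=(0, 1))
            score = ncc(center_crop(shifted), ref)
            if score > best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift
```

With `b`, `g`, `r` as 2-D float arrays for the three plates, `align_exhaustive(g, b)` and `align_exhaustive(r, b)` give the G-to-B and R-to-B offsets.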

For the multi-resolution version, I use a Gaussian pyramid, built by resizing with Gaussian blurring. The pyramid has 5 scales, and each scale is 2x smaller than the previous (larger) one. The search proceeds recursively from the coarsest scale to the finest: at the coarsest scale the offset range is -15 to 15, and at every other scale it is only -4 to 4, which keeps the running time per image low. The offset found at a coarser level is multiplied by 2 and used as the starting offset at the next finer level. This drastically speeds up alignment of large images, because each level receives a good (if coarse) initialization and only needs to search a small neighborhood around it.
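A sketch of the coarse-to-fine search, assuming SciPy's `scipy.ndimage.gaussian_filter` (with a hypothetical sigma of 1) followed by 2x subsampling as the "resize with Gaussian blurring". The helper names are hypothetical, the recursion is written as a loop, and `np.roll` is again assumed for shifting.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def ncc(a, b):
    """Zero-mean NCC of two equally sized arrays."""
    a = a - a.mean()
    b = b - b.mean()
    return float(np.sum(a * b) / (np.linalg.norm(a) * np.linalg.norm(b) + 1e-12))


def center_crop(img, frac=0.1):
    h, w = img.shape
    dh, dw = int(h * frac), int(w * frac)
    return img[dh:h - dh, dw:w - dw]


def build_pyramid(img, num_scales, sigma=1.0):
    """Level 0 is the full image; each next level is blurred, then halved."""
    levels = [img]
    for _ in range(num_scales - 1):
        levels.append(gaussian_filter(levels[-1], sigma)[::2, ::2])
    return levels


def search(channel, reference, center, radius):
    """Exhaustive NCC search in a (2*radius+1)^2 window around `center`."""
    ref = center_crop(reference)
    best_score, best = -np.inf, center
    for dy in range(center[0] - radius, center[0] + radius + 1):
        for dx in range(center[1] - radius, center[1] + radius + 1):
            shifted = np.roll(channel, (dy, dx), axis=(0, 1))
            score = ncc(center_crop(shifted), ref)
            if score > best_score:
                best_score, best = score, (dy, dx)
    return best


def align_pyramid(channel, reference, num_scales=5, coarse_radius=15, fine_radius=4):
    ch_pyr = build_pyramid(channel, num_scales)
    ref_pyr = build_pyramid(reference, num_scales)
    # Wide search only at the coarsest (smallest) level.
    offset = search(ch_pyr[-1], ref_pyr[-1], (0, 0), coarse_radius)
    for level in range(num_scales - 2, -1, -1):  # next-coarsest down to full resolution
        offset = (2 * offset[0], 2 * offset[1])  # coarse offset doubles at the finer scale
        offset = search(ch_pyr[level], ref_pyr[level], offset, fine_radius)
    return offset
```

Only the coarsest level pays for the wide window; every finer level evaluates at most 81 candidate offsets.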

Note: offsets are reported as (G-to-B offset, R-to-B offset).

emir.tif does not align well because of how the initial offset is inherited from the coarser level of the pyramid. Any error at a coarse level is doubled at each finer level, while the finer levels search only 4 pixels in each direction. At some point the estimate drifts off, and once it is too far away the small search window can never bring it back, which produces the misalignment in the image. To fix this, we can use fewer levels and larger search ranges: with 3 scales and a search range of 8, the image aligns much better.
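To make the error-accumulation argument concrete, assume the coarsest of $L$ levels is searched within $\pm R$ pixels and every finer level within $\pm r$ pixels, with the offset doubled between levels. Measured in full-resolution pixels, the largest offset the pyramid can reach is

$$
d_{\max} = R \cdot 2^{L-1} + r \sum_{k=0}^{L-2} 2^{k} = R \cdot 2^{L-1} + r\,(2^{L-1} - 1)
$$

For $L = 5$, $R = 15$, $r = 4$ this is $15 \cdot 16 + 4 \cdot 15 = 300$ pixels, so reach is not the limit; recovery is. Idealizing each search as always moving toward the true offset, a residual error of $e_k$ pixels at level $k$ becomes $2e_k$ at level $k-1$, which the $\pm r$ window reduces to

$$
e_{k-1} = \max(0,\; 2e_k - r)
$$

The error therefore shrinks when $e_k < r$ but grows at every subsequent level once $e_k > r$, matching the behavior described above. Fewer levels mean fewer doublings, and a larger $r$ raises the threshold for recovery.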

To crop the borders automatically, I use the observation that for a row or column of pixels that belongs to the boundary, the sum of absolute differences between neighboring pixels within that row/column is large. To find the boundaries along the y-axis, I compute the absolute difference between each pixel and the pixel to its right, sum these values along each row, and smooth the resulting per-row profile with a moving average. I then apply a threshold and take the minimum and maximum positions whose values exceed it. The same procedure, using differences between vertically adjacent pixels summed along each column, gives the boundaries along the x-axis.
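A sketch of this border detector with NumPy. The moving-average window (5) and the threshold rule (half of the smoothed profile's maximum) are hypothetical choices, since the write-up does not give its values; `boundary_range` and `auto_crop` are illustrative names.

```python
import numpy as np


def moving_average(x, window=5):
    """Smooth a 1-D profile with a box filter of the given width."""
    return np.convolve(x, np.ones(window) / window, mode="same")


def boundary_range(img, axis, window=5, threshold_ratio=0.5):
    """Return (first, last) index along `axis` whose smoothed profile exceeds the threshold.

    axis=0: one value per row, from |pixel - right neighbor| (y-axis bounds).
    axis=1: one value per column, from |pixel - pixel below| (x-axis bounds).
    """
    neighbor_axis = 1 - axis
    diffs = np.abs(np.diff(img, axis=neighbor_axis))
    profile = moving_average(diffs.sum(axis=neighbor_axis), window)
    threshold = threshold_ratio * profile.max()  # hypothetical threshold rule
    positions = np.nonzero(profile > threshold)[0]
    return int(positions.min()), int(positions.max())


def auto_crop(img):
    """Crop a 2-D image to the detected y- and x-axis boundaries (inclusive)."""
    top, bottom = boundary_range(img, axis=0)
    left, right = boundary_range(img, axis=1)
    return img[top:bottom + 1, left:right + 1]
```

Running the detector on each channel separately (or on their mean) is a design choice the write-up leaves open.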

Example (left is original, right is cropped):

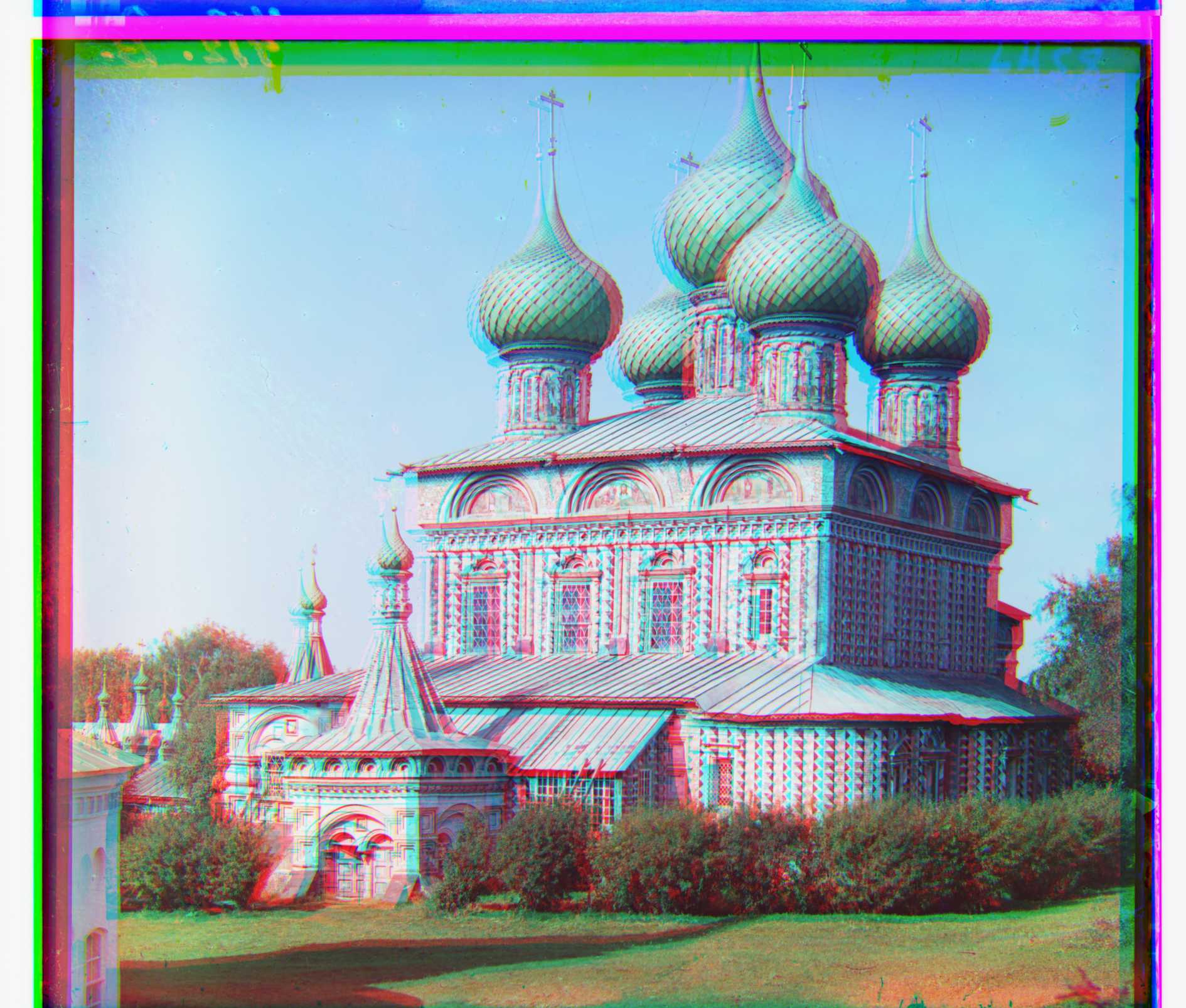

The skimage library provides many edge detection functions in skimage.filters. Here I align on the outputs of two of them, sobel and roberts, instead of on raw pixels. Roberts works much better than sobel. My reasoning is that sobel edge maps are smooth and their edges are not sharp enough, so many alignments score similarly and the metric does not reward pixel-perfect alignment. Roberts edge maps are much crisper, so a high metric value requires pixel-perfect alignment. I present two of the alignments, emir and onion_church. Emir improves a lot with roberts, while onion_church looks as good with roberts as with the original.
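A sketch of aligning on edge maps instead of raw pixels, using `skimage.filters.roberts` and `skimage.filters.sobel`, which both return an edge-magnitude image for a 2-D array. The NCC search mirrors the single-scale version described earlier; the helper name `align_on_edges` and the use of `np.roll` are assumptions.

```python
import numpy as np
from skimage.filters import roberts, sobel


def ncc(a, b):
    """Zero-mean NCC of two equally sized arrays."""
    a = a - a.mean()
    b = b - b.mean()
    return float(np.sum(a * b) / (np.linalg.norm(a) * np.linalg.norm(b) + 1e-12))


def align_on_edges(channel, reference, edge_fn=roberts, radius=15, frac=0.1):
    """Exhaustive NCC search over edge maps; returns the best (dy, dx) roll of `channel`."""
    ch_edges = edge_fn(channel)
    ref_edges = edge_fn(reference)
    h, w = ref_edges.shape
    dh, dw = int(h * frac), int(w * frac)
    ref_c = ref_edges[dh:h - dh, dw:w - dw]  # evaluate on the central region only
    best_score, best_shift = -np.inf, (0, 0)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            shifted = np.roll(ch_edges, (dy, dx), axis=(0, 1))
            score = ncc(shifted[dh:h - dh, dw:w - dw], ref_c)
            if score > best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift
```

`align_on_edges(g, b, edge_fn=sobel)` swaps in the sobel filter. Only the inputs to the metric change; the search itself is the same.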

Left to right: original, sobel, roberts

Emir: the roberts result is a large improvement over the original.

Onion church: the original is already very good, so the improvement from roberts is small. However, sobel does not work well here.