Overview

In this project, the goal was to turn the digitized Prokudin-Gorskii glass plate images into a single RGB color image. To do this, the red, green, and blue channels were separated, aligned, and stacked.

Part 1: Exhaustive Search

Implementation Approach

For Part 1, I used exhaustive search to align the JPG images into a color image. My algorithm divides each glass plate image into its three channels (r, g, and b) by slicing the image horizontally into three equal parts. I first aligned the r channel to the b channel, then the g channel to the b channel. After these two alignments, I stacked the three aligned channels to produce the final output.
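The slicing and stacking steps can be sketched roughly as follows. This is a minimal illustration rather than the project code: the function name `split_channels` is hypothetical, and the top-to-bottom plate order (blue, green, red) is an assumption based on how the Prokudin-Gorskii scans are usually laid out.

```python
import numpy as np

def split_channels(plate):
    """Split a vertically stacked plate scan into three equal-height channels."""
    height = plate.shape[0] // 3  # any leftover rows at the bottom are dropped
    # Assumed plate order, top to bottom: blue, green, red.
    b = plate[:height]
    g = plate[height:2 * height]
    r = plate[2 * height:3 * height]
    return r, g, b

# Toy 9x4 "plate" standing in for a real scan.
plate = np.arange(9 * 4, dtype=np.float64).reshape(9, 4)
r, g, b = split_channels(plate)

# Once r and g have been aligned to b, the channels are stacked along a new last axis.
rgb = np.dstack([r, g, b])
```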

Looking more closely at the exhaustive search and alignment procedure: I searched over a window of [-15, 15] pixels, rolled the image by each candidate displacement using np.roll, cropped the borders, and chose the displacement with the lowest sum of squared differences (SSD).
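A minimal sketch of this search is shown below. It is not the project code: the name `align_exhaustive`, the `(dy, dx)` row/column ordering, and the 10% border crop are all assumptions chosen for illustration.

```python
import numpy as np

def align_exhaustive(channel, reference, radius=15, border=0.1):
    """Return the (dy, dx) roll of `channel` that minimizes SSD against `reference`."""
    h, w = reference.shape
    by, bx = int(h * border), int(w * border)  # rows/columns ignored at each edge
    ref_crop = reference[by:h - by, bx:w - bx]
    best_score, best_shift = np.inf, (0, 0)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # np.roll wraps pixels around the edges; cropping keeps them out of the score.
            shifted = np.roll(channel, (dy, dx), axis=(0, 1))
            score = np.sum((shifted[by:h - by, bx:w - bx] - ref_crop) ** 2)
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift
```

Scoring only the cropped interior is one way to keep the wrapped-around rows and the dark plate borders from dominating the SSD; the crop has to be at least as wide as the largest shift for the wrapped pixels to be excluded completely.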

Red: [9, 0], Green: [2, 0]


Red: [6, 3], Green: [3, 2]


Red: [4, -1], Green: [-1, 0]


Part 2: Image Pyramid

For Part 2, I implemented an image pyramid to allow for faster search on the high-resolution glass plate scans. To do this, I adapted my exhaustive search code. First, I wrote a function to construct the image pyramid: using im.resize, I repeatedly halved the image resolution until reaching a minimum resolution of 30x30 pixels, saving each layer into a list. After defining this function, I constructed the image pyramids for the r, g, and b channels.
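The pyramid construction might look roughly like the sketch below. To keep it dependency-free, it swaps im.resize for a simple 2x2 block average in NumPy, which is not the resize call used in the project; the names `downsample_by_2` and `build_pyramid` are hypothetical.

```python
import numpy as np

def downsample_by_2(image):
    """Halve each dimension by averaging non-overlapping 2x2 blocks."""
    h2, w2 = image.shape[0] // 2, image.shape[1] // 2
    trimmed = image[:h2 * 2, :w2 * 2]  # drop an odd trailing row/column if present
    return trimmed.reshape(h2, 2, w2, 2).mean(axis=(1, 3))

def build_pyramid(image, min_size=30):
    """Return [full resolution, half, quarter, ...], coarsest level last.

    Halving stops before either side would fall below `min_size` pixels.
    """
    levels = [image]
    while min(levels[-1].shape) // 2 >= min_size:
        levels.append(downsample_by_2(levels[-1]))
    return levels
```

Storing the levels from finest to coarsest means the alignment step can simply walk the list in reverse.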

With the image pyramids for all of the channels, I then aligned the pyramids. I first ran exhaustive search on the lowest-resolution image. After landing on an x, y displacement, I scaled the values up by 2 (the scaling factor used in the image pyramid) and ran exhaustive search over a smaller window in the next-higher-resolution image.

I repeated this from the lowest-resolution image up to the highest-resolution (original) image to arrive at the final x, y displacement. I aligned both the g channel and the r channel image pyramids to the b channel image pyramid in this way. Finally, using the resulting displacement values, I stacked the aligned images to produce the final color image.
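The coarse-to-fine procedure described above can be sketched as follows. This is an illustration under stated assumptions, not the project code: the helper names, the `(dy, dx)` ordering, the refinement radius of 2 pixels, the 10% border crop, and the block-average downsampling (used here in place of im.resize) are all choices made for the example.

```python
import numpy as np

def halve(image):
    """Halve each dimension by averaging 2x2 blocks (stand-in for a resize call)."""
    h2, w2 = image.shape[0] // 2, image.shape[1] // 2
    return image[:h2 * 2, :w2 * 2].reshape(h2, 2, w2, 2).mean(axis=(1, 3))

def pyramid(image, min_size=30):
    """Return levels from full resolution down to the coarsest, coarsest last."""
    levels = [image]
    while min(levels[-1].shape) // 2 >= min_size:
        levels.append(halve(levels[-1]))
    return levels

def search(channel, reference, center, radius, border=0.1):
    """Exhaustive SSD search over shifts within `radius` of `center`."""
    h, w = reference.shape
    by, bx = int(h * border), int(w * border)
    ref_crop = reference[by:h - by, bx:w - bx]
    best_score, best_shift = np.inf, center
    for dy in range(center[0] - radius, center[0] + radius + 1):
        for dx in range(center[1] - radius, center[1] + radius + 1):
            shifted = np.roll(channel, (dy, dx), axis=(0, 1))
            score = np.sum((shifted[by:h - by, bx:w - bx] - ref_crop) ** 2)
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift

def align_pyramid(channel, reference, coarse_radius=15, fine_radius=2):
    """Estimate the shift at the coarsest level, then double and refine it level by level."""
    chan_levels, ref_levels = pyramid(channel), pyramid(reference)
    shift = search(chan_levels[-1], ref_levels[-1], (0, 0), coarse_radius)
    # Walk from the second-coarsest level back up to the original resolution.
    for chan, ref in zip(chan_levels[-2::-1], ref_levels[-2::-1]):
        shift = search(chan, ref, (2 * shift[0], 2 * shift[1]), fine_radius)
    return shift
```

Because each level only corrects the doubled estimate by a few pixels, the total number of SSD evaluations stays small even when the final displacement at full resolution is over a hundred pixels.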

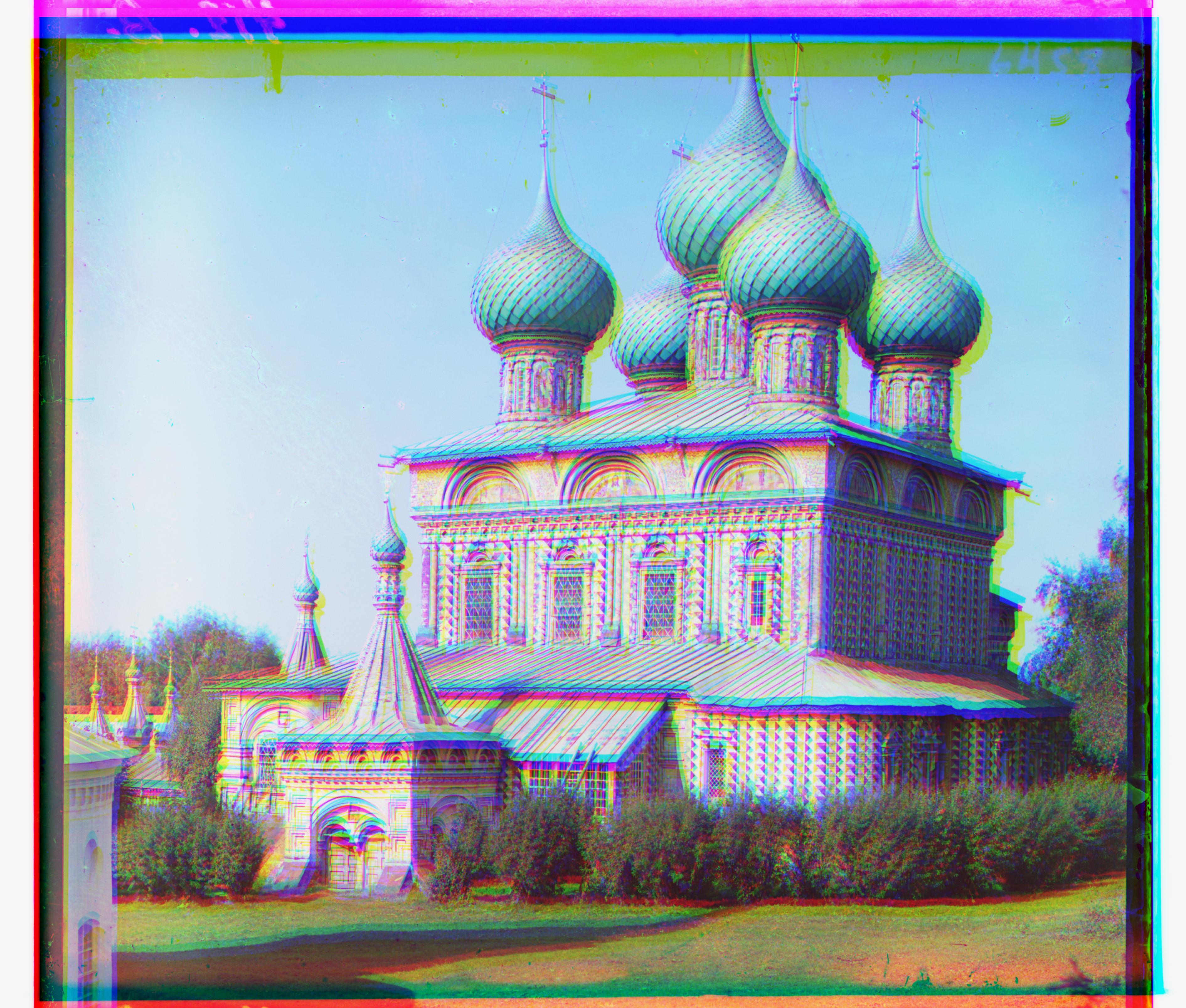

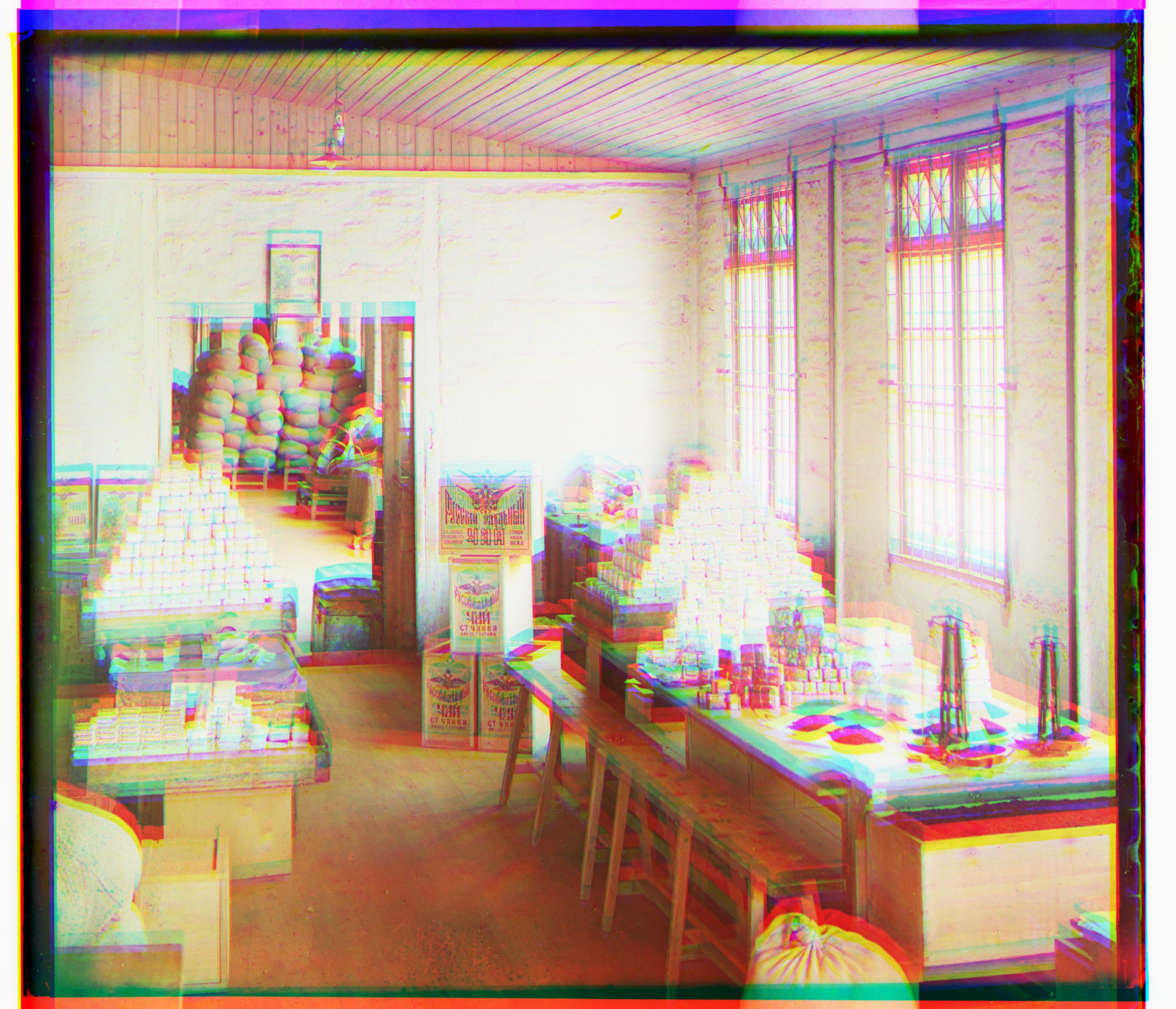

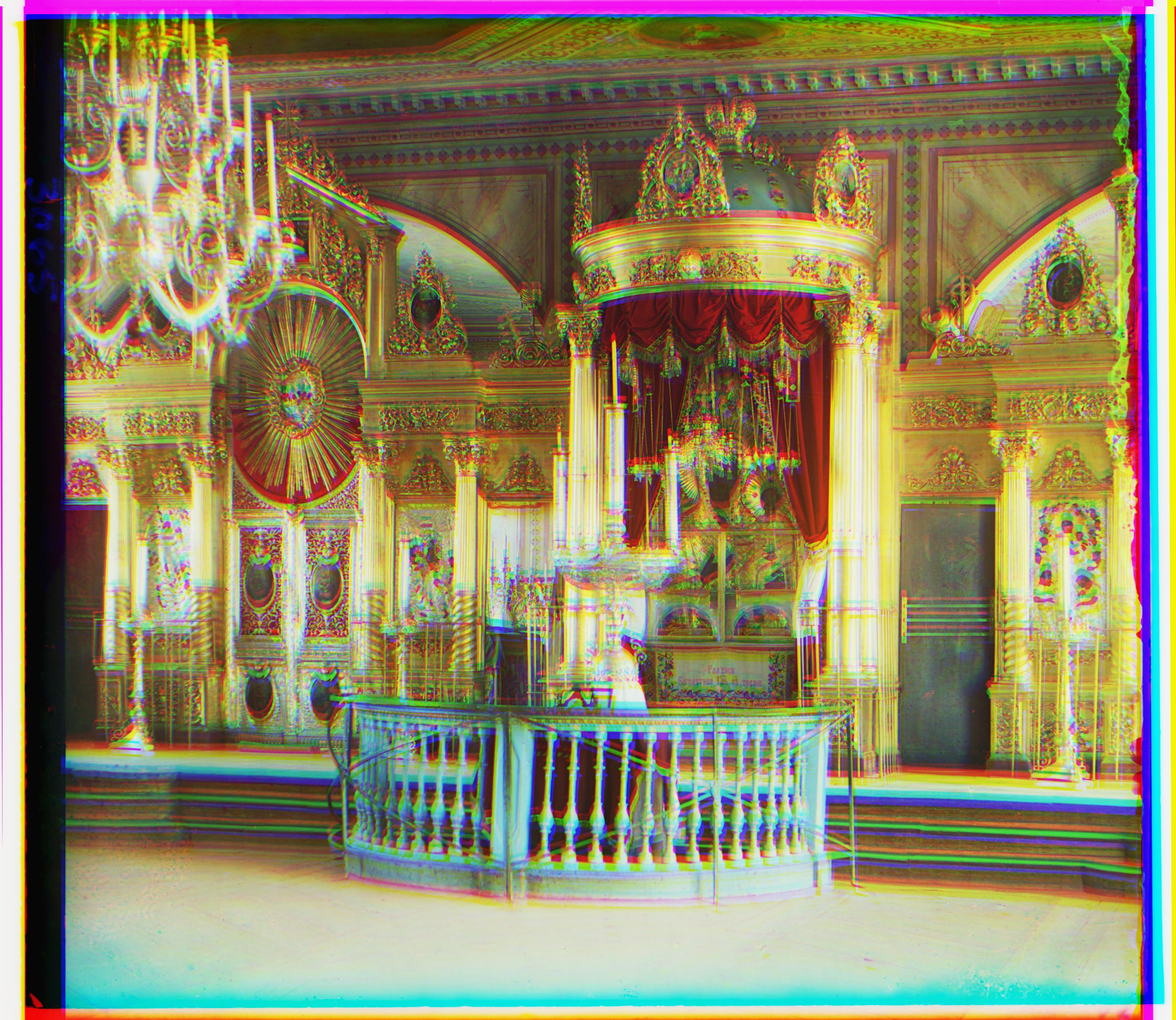

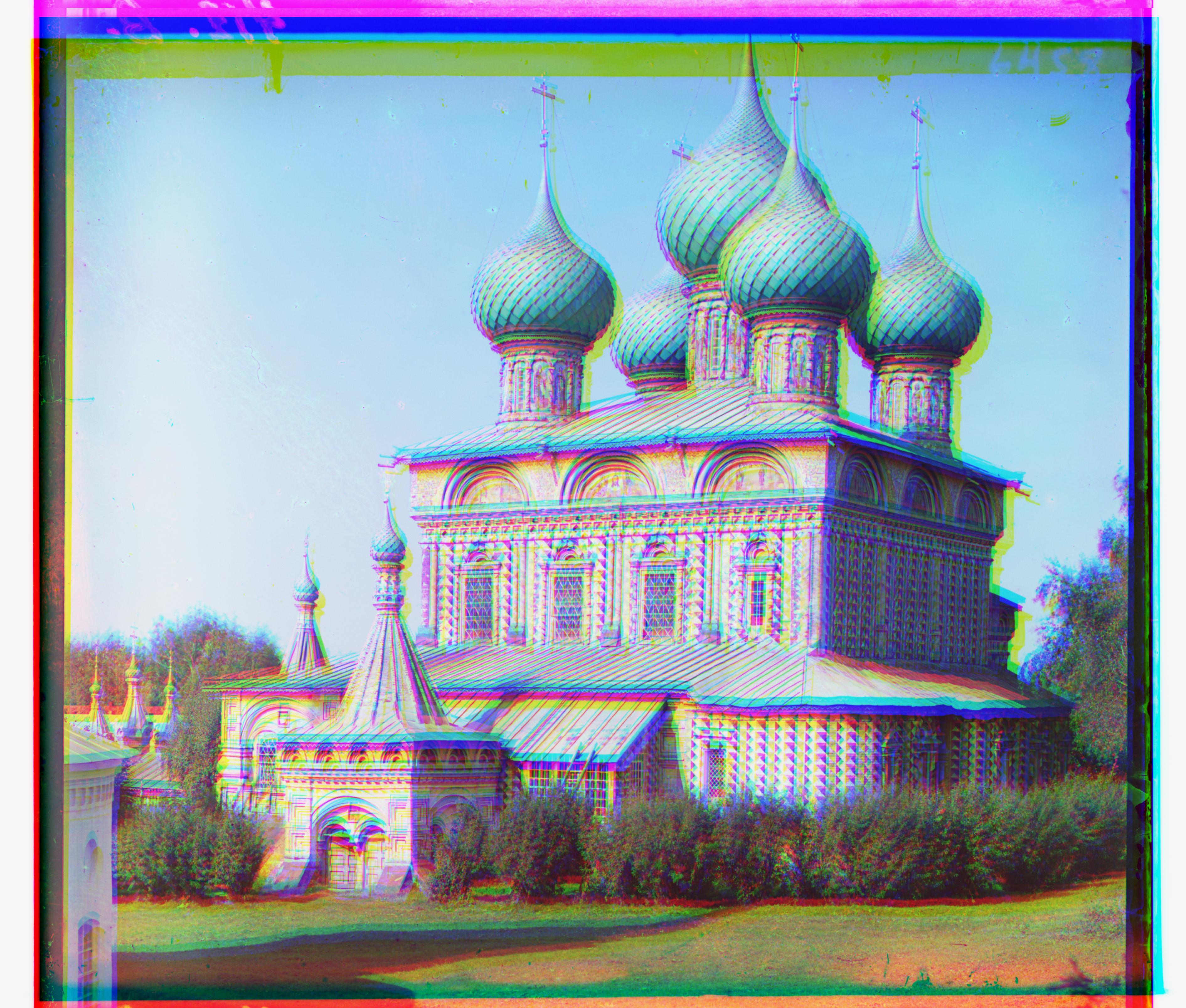

Red: [88, 3], Green: [39, 1]


Red: [25, -4], Green: [0, -3]


Red: [61, -9], Green: [29, -4]


Red: [128, 7], Green: [-1, 3]


Red: [47, -1], Green: [29, 3]


Red: [45, 11], Green: [20, 8]


Red: [64, -3], Green: [24, -1]


Red: [131, 1], Green: [25, 1]


Red: [54, 17], Green: [25, -1]


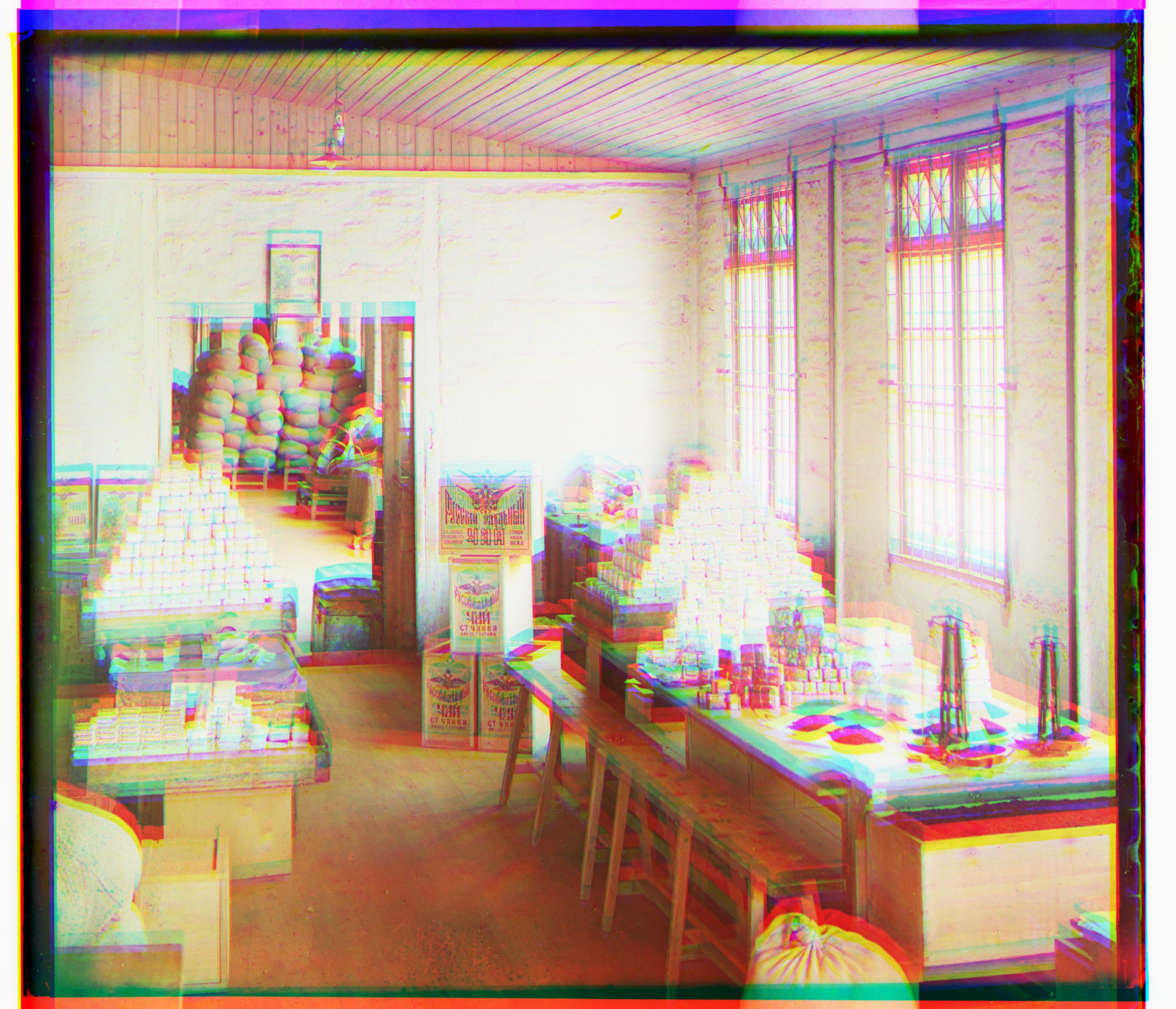

Red: [53, 1], Green: [55, -3]


Red: [31, -8], Green: [27, -3]


Red: [258, -10], Green: [-9, -1]


Problems Encountered

In this project, I encountered problems with image clarity. For both my exhaustive search and image pyramid approaches, the output was initially not as clear as the sample images (i.e., it looked correct but was blurry).

Moreover, for a reason I could not determine, my code performed worse when I cropped the images. I went to office hours (OH) to try to fix these issues but, unfortunately, was not able to identify or fix the cause.

While debugging, I plugged in displacement values from past students' samples and found that the SSD for those values was greater than the SSD for the displacements my code output. The reason for this still eludes me.

To debug, I tried several things: tuning how much I cropped the images, experimenting with rolling and cropping, using known-correct values as a reference to guide my debugging, and zeroing out pixels when rolling in an attempt to get a more accurate output.
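For reference, zeroing out the pixels that np.roll would otherwise wrap around can be done along the lines of the sketch below. The function name `shift_zero_fill` and the `(dy, dx)` argument order are hypothetical, and this is one possible way to write it rather than the version used while debugging.

```python
import numpy as np

def shift_zero_fill(image, dy, dx):
    """Shift a 2-D image by (dy, dx), filling vacated pixels with zeros instead of wrapping."""
    h, w = image.shape
    out = np.zeros_like(image)
    if abs(dy) >= h or abs(dx) >= w:
        return out  # the whole image is shifted out of frame
    # Matching source and destination windows for the part that stays in frame.
    src_rows = slice(max(0, -dy), min(h, h - dy))
    dst_rows = slice(max(0, dy), min(h, h + dy))
    src_cols = slice(max(0, -dx), min(w, w - dx))
    dst_cols = slice(max(0, dx), min(w, w + dx))
    out[dst_rows, dst_cols] = image[src_rows, src_cols]
    return out
```

Note that zero-filled pixels still contribute to an SSD computed over the full frame, so the score is only comparable across displacements if the same interior region is scored each time.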