Overview

Given three unaligned grayscale images from the Prokudin-Gorskii photo collection, each capturing the blue, green, or red color channel, the goal is to align them and combine them into a single color image. The objective is straightforward, but aligning the three channels becomes computationally expensive at high resolution. The choice of preprocessed image features, alignment metric, and implementation hyperparameters is key to producing a good result.
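The final step can be sketched as follows. This is a minimal NumPy example that assumes the three channels are same-shaped 2-D arrays and that each offset is a (dy, dx) = (row, column) shift applied with `np.roll`. The function name `compose` is hypothetical and is not taken from the original implementation.

```python
import numpy as np

def compose(b, g, r, g_shift, r_shift):
    """Shift green and red by (dy, dx) offsets, then stack into an RGB image.

    Blue is the fixed reference channel. np.roll wraps pixels around the
    border, so the edges of the result are typically cropped afterwards.
    """
    g_aligned = np.roll(g, g_shift, axis=(0, 1))
    r_aligned = np.roll(r, r_shift, axis=(0, 1))
    # Channel order in the output is R, G, B (last axis).
    return np.dstack([r_aligned, g_aligned, b])
```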

Single-Scale Exhaustive Search Results

A naive baseline for aligning two channels is to exhaustively test every (row, column) offset vector within a fixed window and keep the offset with the best similarity score. In all experiments (both naive and multi-scale), we hold the blue channel fixed and align the green and red channels to it. The window used for single-scale experiments is [-15, 15] × [-15, 15]. The similarity metric is the sum of squared differences (SSD). We chose it because it was fast to compute and produced better qualitative results than normalized cross-correlation (NCC). We also tried binary cross entropy as a metric. It produced qualitative results similar to SSD but was significantly more expensive to compute. All search algorithms in this project therefore use SSD and select the offset that minimizes the SSD between the two channels.
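The single-scale search can be sketched in NumPy. The sketch assumes both channels are same-shaped 2-D arrays and applies shifts with `np.roll`, which wraps pixels around the border. The names `ssd` and `align_exhaustive` are hypothetical. For brevity, the sketch scores the full arrays and leaves out the cropping step.

```python
import numpy as np

def ssd(a, b):
    """Sum of squared differences between two equally sized arrays."""
    diff = a.astype(np.float64) - b.astype(np.float64)
    return float(np.sum(diff * diff))

def align_exhaustive(moving, reference, radius=15, center=(0, 0)):
    """Return the (dy, dx) shift of `moving` that minimizes SSD against `reference`.

    Every offset in [cy - radius, cy + radius] x [cx - radius, cx + radius]
    is tried, so radius=15 corresponds to the [-15, 15] x [-15, 15] window.
    """
    cy, cx = center
    best_score, best_shift = np.inf, center
    for dy in range(cy - radius, cy + radius + 1):
        for dx in range(cx - radius, cx + radius + 1):
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))
            score = ssd(shifted, reference)
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift
```

For example, if `green = np.roll(blue, (-3, 5), axis=(0, 1))`, then `align_exhaustive(green, blue)` recovers the shift `(3, -5)` that undoes the misalignment.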

The borders of each grayscale channel are often black or otherwise uninformative, and they can interfere with alignment. We therefore compute the offset on a cropped version of the image features (the center 70% of the image). To improve the visual quality of the output, we also apply a fixed-margin crop. It shaves 20 pixels off each edge of the low-resolution JPG images and, in later experiments, 300 pixels off each edge of the high-resolution TIFF images.
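The two crops can be sketched as small NumPy helpers. The names `center_crop`, `margin_crop`, and `cropped_ssd` are hypothetical. The sketch assumes the 70% is taken along each dimension, with the same margin removed from opposite sides.

```python
import numpy as np

def center_crop(img, keep=0.7):
    """Keep the central `keep` fraction of each dimension (70% by default)."""
    h, w = img.shape[:2]
    top = int(round(h * (1 - keep) / 2))
    left = int(round(w * (1 - keep) / 2))
    return img[top:h - top, left:w - left]

def margin_crop(img, margin):
    """Shave a fixed number of pixels off every edge (e.g. 20 for JPG, 300 for TIFF)."""
    return img[margin:img.shape[0] - margin, margin:img.shape[1] - margin]

def cropped_ssd(a, b, keep=0.7):
    """SSD evaluated only on the central region, ignoring the noisy borders."""
    diff = center_crop(a, keep).astype(np.float64) - center_crop(b, keep).astype(np.float64)
    return float(np.sum(diff * diff))
```

In this split, `cropped_ssd` is used only to score candidate offsets. `margin_crop` is applied once to the final color image.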

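A downside of this approach appears with higher-resolution images, where the offset can be large. The search window must grow to cover that offset, and the number of candidate offsets grows quadratically with the window radius. As a result, searching the full window becomes slow.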
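To make the cost concrete: a square window of radius $r$ contains $(2r+1)^2$ candidate offsets, and each one requires a full SSD evaluation over the cropped image. The radius of 180 below is an illustrative value, chosen because it is close to the largest offsets in the multi-scale results.

$$
N(r) = (2r + 1)^2, \qquad N(15) = 31^2 = 961, \qquad N(180) = 361^2 = 130{,}321
$$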

| Image | Red Channel Offset | Green Channel Offset |
|---|---|---|
| Monastery | (2, 3) | (2, -3) |
| Tobolsk | (3, 6) | (3, 3) |
| Cathedral | (3, 12) | (2, 5) |

Multi-scale Pyramid Results

To improve the efficiency of the alignment algorithm, we implement an image pyramid. We first run the naive algorithm over a [-10, 10] × [-10, 10] search window on a downsampled version of the image (e.g., 1/16 scale). We then multiply the resulting displacement vector by a preset common ratio. The scaled vector becomes the center of the search window at the next resolution, which is larger than the previous one by that same ratio. Repeating this process over multiple scales lets the search cover a much larger range of offsets without evaluating every possible offset vector at full resolution. The results below use a 3-level image pyramid, with the exhaustive search run at 1/16, 1/4, and full resolution (common ratio of 4).
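A coarse-to-fine version can be sketched in NumPy under a few assumptions. Downsampling is done by block averaging, which is our choice; the text does not say how the images were downsampled. The image dimensions are assumed to be divisible by 16, and the cropping step is omitted for brevity. All function names are hypothetical.

```python
import numpy as np

def ssd(a, b):
    diff = a.astype(np.float64) - b.astype(np.float64)
    return float(np.sum(diff * diff))

def search(moving, reference, center, radius):
    """Exhaustive SSD search over a square window around `center` = (dy, dx)."""
    best_score, best_shift = np.inf, center
    for dy in range(center[0] - radius, center[0] + radius + 1):
        for dx in range(center[1] - radius, center[1] + radius + 1):
            score = ssd(np.roll(moving, (dy, dx), axis=(0, 1)), reference)
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift

def downsample(img, factor):
    """Block-average downsampling by an integer factor (trailing rows/cols dropped)."""
    h = (img.shape[0] // factor) * factor
    w = (img.shape[1] // factor) * factor
    blocks = img[:h, :w].reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))

def align_pyramid(moving, reference, levels=3, ratio=4, radius=10):
    """Search at 1/ratio**(levels - 1) scale first, then refine at each finer level."""
    shift = (0, 0)
    for level in range(levels - 1, -1, -1):
        factor = ratio ** level  # 16, 4, 1 for the default settings
        m = downsample(moving, factor) if factor > 1 else moving
        r = downsample(reference, factor) if factor > 1 else reference
        shift = search(m, r, shift, radius)
        if level > 0:
            # Scale the estimate up to the next (finer) resolution.
            shift = (shift[0] * ratio, shift[1] * ratio)
    return shift
```

With a radius of 10 and a ratio of 4 over three levels, the largest reachable offset is $10 \cdot 16 + 10 \cdot 4 + 10 = 210$ pixels. The search evaluates only $3 \times 21^2 = 1{,}323$ candidate offsets in total, and most of them are scored on much smaller images.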

This algorithm produces colorized images for all of the provided samples except the photograph of the emir. This is likely because the emir's BGR channels have very different pixel intensities: his clothing is much brighter in the red channel than in the blue channel. As a result, minimizing SSD on raw pixel intensities does not yield an aligned image. We mitigate this with improved features in later experiments.

| Image | Red Channel Offset | Green Channel Offset |

|---|---|---|

| Monastery | (2, 3) | (2, -3) |

| Tobolsk | (3, 7) | (3, 3) |

| Cathedral | (3, 12) | (2, 5) |

| Workshop | (-12, 105) | (-1, 53) |

| Emir | (15, -38) | (24, 48) |

| Church | (-4, 58) | (4, 25) |

| Three Generations | (13, 109) | (14, 50) |

| Melons | (13, 177) | (10, 81) |

| Onion Church | (37, 108) | (27, 51) |

| Train | (32, 85) | (6, 42) |

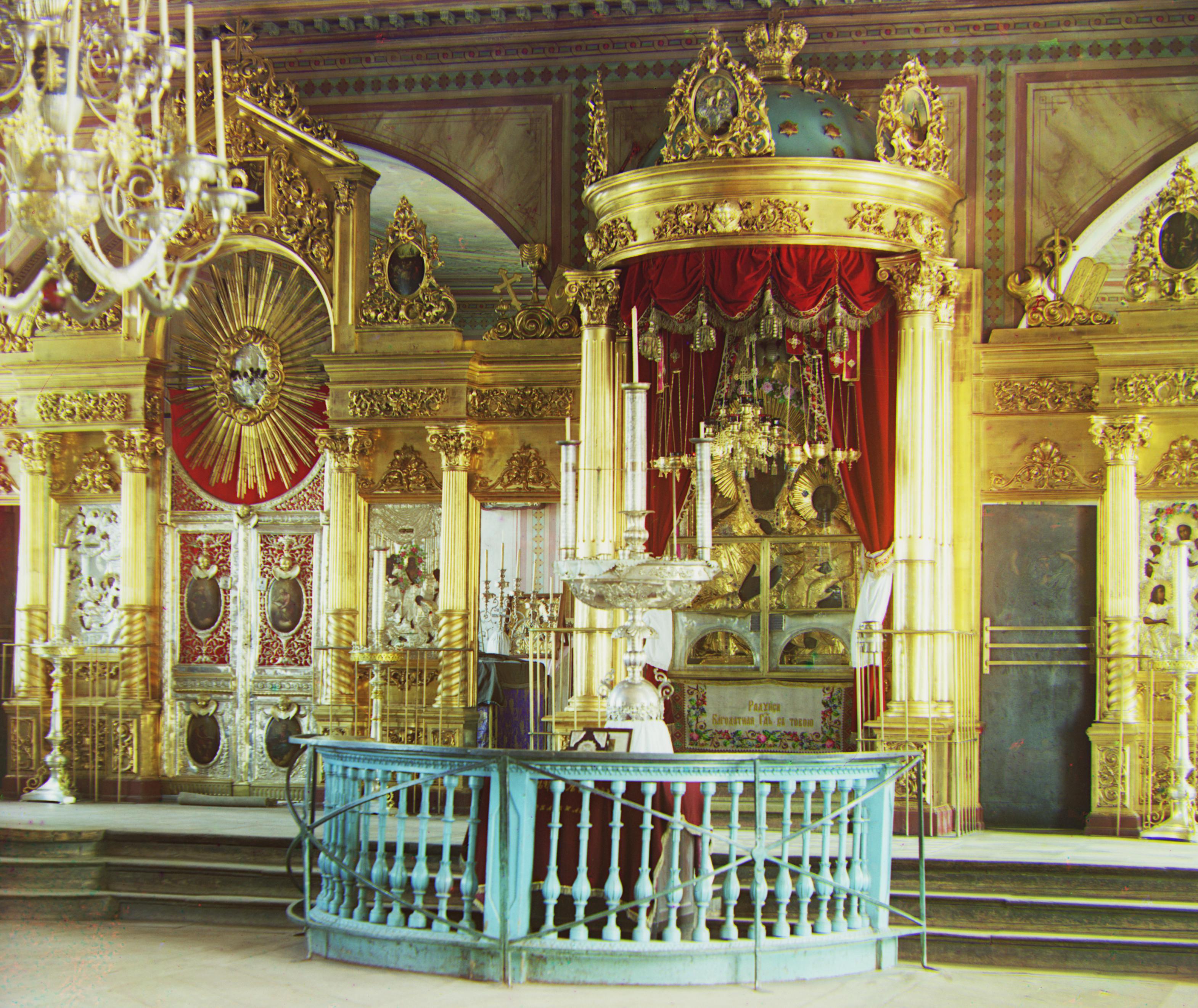

| Icon | (23, 89) | (17, 41) |

| Self Portrait | (37, 175) | (29, 78) |

| Harvesters | (15, 123) | (17, 59) |

| Lady | (12, 111) | (8, 51) |

Better Image Features

To improve the generated color images, we use a Sobel filter to generate edge features and run the multi-scale image pyramid algorithm directly on these preprocessed images. This matters most for the emir, where raw pixel intensities match poorly across channels. The hyperparameters are identical to the previous implementation. The only change is the preprocessing function, which goes from a crop to a crop followed by a Sobel filter. This implementation does improve the emir's result, but it causes a regression on the melon image. This is likely because the melon image's edge features vary enough across channels that minimizing SSD on them is less informative than minimizing SSD on pixel intensity. This mirrors the problem the original multi-scale pyramid implementation had with the emir's photo.
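The Sobel preprocessing can be sketched with plain NumPy slicing. The text does not say whether the author used the gradient magnitude or the separate x/y responses, or how borders were handled. This sketch assumes the magnitude with replicated borders. `sobel_magnitude` is a hypothetical name. Library routines such as `skimage.filters.sobel` compute a similar edge map, though their scaling may differ.

```python
import numpy as np

def sobel_magnitude(img):
    """Gradient magnitude from the 3x3 Sobel kernels, using array slicing.

    Borders are handled by replicating edge pixels (np.pad mode="edge"),
    so the output has the same shape as the input.
    """
    p = np.pad(img.astype(np.float64), 1, mode="edge")
    # Horizontal derivative, kernel [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]].
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2])
    # Vertical derivative, the transpose of the kernel above.
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:])
    return np.hypot(gx, gy)
```

Each channel would be passed through this function (after cropping) before the pyramid search. This way, SSD compares edge locations rather than raw brightness.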

Additional Samples

| Image | Red Channel Offset | Green Channel Offset |

|---|---|---|

| Room | (45, 88) | (30, 40) |

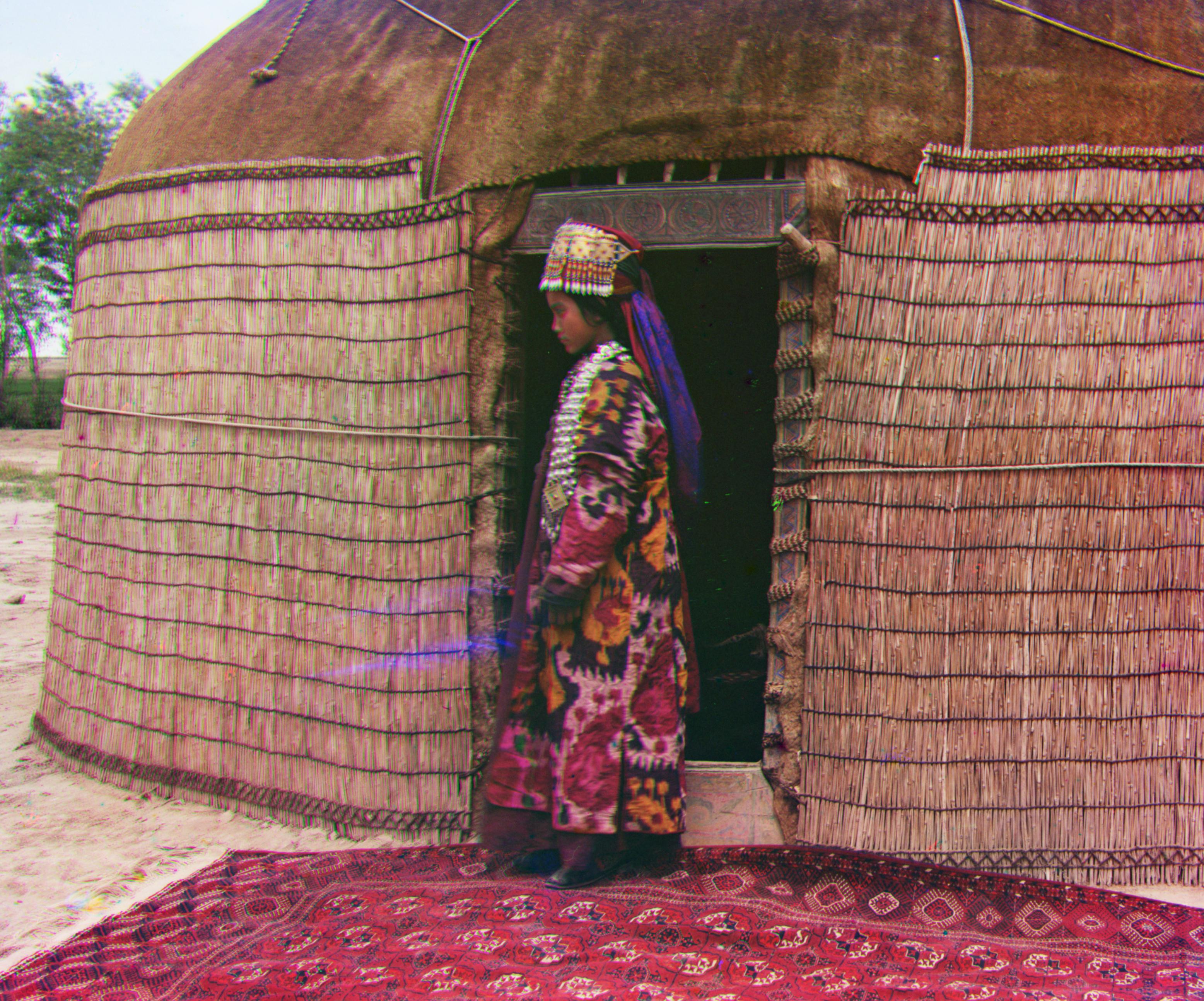

| Nomad | (51, 68) | (43, 23) |

| Cross | (2, 47) | (7, 21) |

| Windmills | (24, 126) | (16, 55) |