The project is simply a script that takes a vertically stacked image file containing the three colour channels of a photograph as black and white plates, and composites them into a single RGB image.
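As a rough illustration of the splitting and compositing step, here is a minimal NumPy sketch. The function names `split_plate` and `composite` are made up for this example, and the top-to-bottom order of blue, green, red is an assumption about the input files rather than something taken from my actual script.

```python
import numpy as np

def split_plate(plate):
    """Cut a vertically stacked plate into three equal-height images."""
    height = plate.shape[0] // 3  # leftover rows at the bottom are dropped
    top = plate[:height]
    middle = plate[height:2 * height]
    bottom = plate[2 * height:3 * height]
    return top, middle, bottom

def composite(r, g, b):
    """Stack three single-channel images into one (H, W, 3) RGB image."""
    return np.dstack([r, g, b])

# Tiny synthetic plate: 9 rows, so each channel is 3 rows tall.
plate = np.arange(9 * 4, dtype=np.float64).reshape(9, 4)
b, g, r = split_plate(plate)  # ASSUMED order, top to bottom: blue, green, red
rgb = composite(r, g, b)
print(rgb.shape)
```

Forgetting one of the three arguments to the stacking call is exactly the kind of mistake that produces a very blue picture.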

The naive implementation simply tries every possible alignment within a set range of pixel displacement (in our case, 15 pixels) and uses a heuristic to rate how well aligned the images are. The heuristic is a normalised sum of squared differences between the pixel values of the channels being compared. To my surprise, this worked rather well, given that the alignment required no more than 15 pixels of displacement in any given direction and the image itself had fairly small dimensions.
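The exhaustive search can be sketched roughly as follows. This is not my exact code: `ssd_score` and `align_exhaustive` are illustrative names, `np.roll` (which wraps pixels around the edges) stands in for whatever shifting is actually used, and the score is computed on an interior crop so the wrapped border does not influence it. Dividing by the pixel count (here via `np.mean`) is the "normalised" part, and a lower score means a better alignment.

```python
import numpy as np

def ssd_score(a, b):
    """Mean squared difference between two images; lower is better."""
    diff = a.astype(np.float64) - b.astype(np.float64)
    return np.mean(diff ** 2)

def align_exhaustive(moving, reference, max_shift=15):
    """Try every (dy, dx) in [-max_shift, max_shift] and keep the best one."""
    m = max_shift
    ref_crop = reference[m:-m, m:-m]  # ignore borders affected by wrap-around
    best_score = np.inf
    best_shift = (0, 0)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))
            score = ssd_score(shifted[m:-m, m:-m], ref_crop)
            if score < best_score:  # keep the LOWEST score
                best_score, best_shift = score, (dy, dx)
    return best_shift

# Synthetic check: displace a random image, then recover the displacement.
rng = np.random.default_rng(0)
reference = rng.random((64, 64))
moving = np.roll(reference, (-3, 5), axis=(0, 1))
shift = align_exhaustive(moving, reference)  # the shift that undoes the roll
print(shift)
```

Scoring a fixed-size crop also means every candidate shift is judged on the same number of pixels, so no shift can look better merely by discarding more of the image.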

The implementation was simple. We first aligned the g channel to the r channel (note that this oddly specific choice of order was a result of mistyping rgb and pure, unexcused laziness. No, I am not sorry), while altering the b channel image in exactly the same way as the r channel, irrespective of its actual alignment. We then aligned the b channel to the r channel, and applied to the g channel the same modification that the b channel received in the previous iteration.

The first of many issues we ran into was a consistent inability of the heuristic to correctly align our images. After much pain, we noticed that the "aligned" image was actually marginally worse off than the unaligned one, meaning that some type of modification was being made, just very VERY badly. It turned out the solution was simply inverting the heuristic, as we had mistakenly prioritised the worse alignment rather than the better one. We also noticed that one particular implementation of the heuristic prioritised cutting off as many pixels as possible, which was not very helpful.

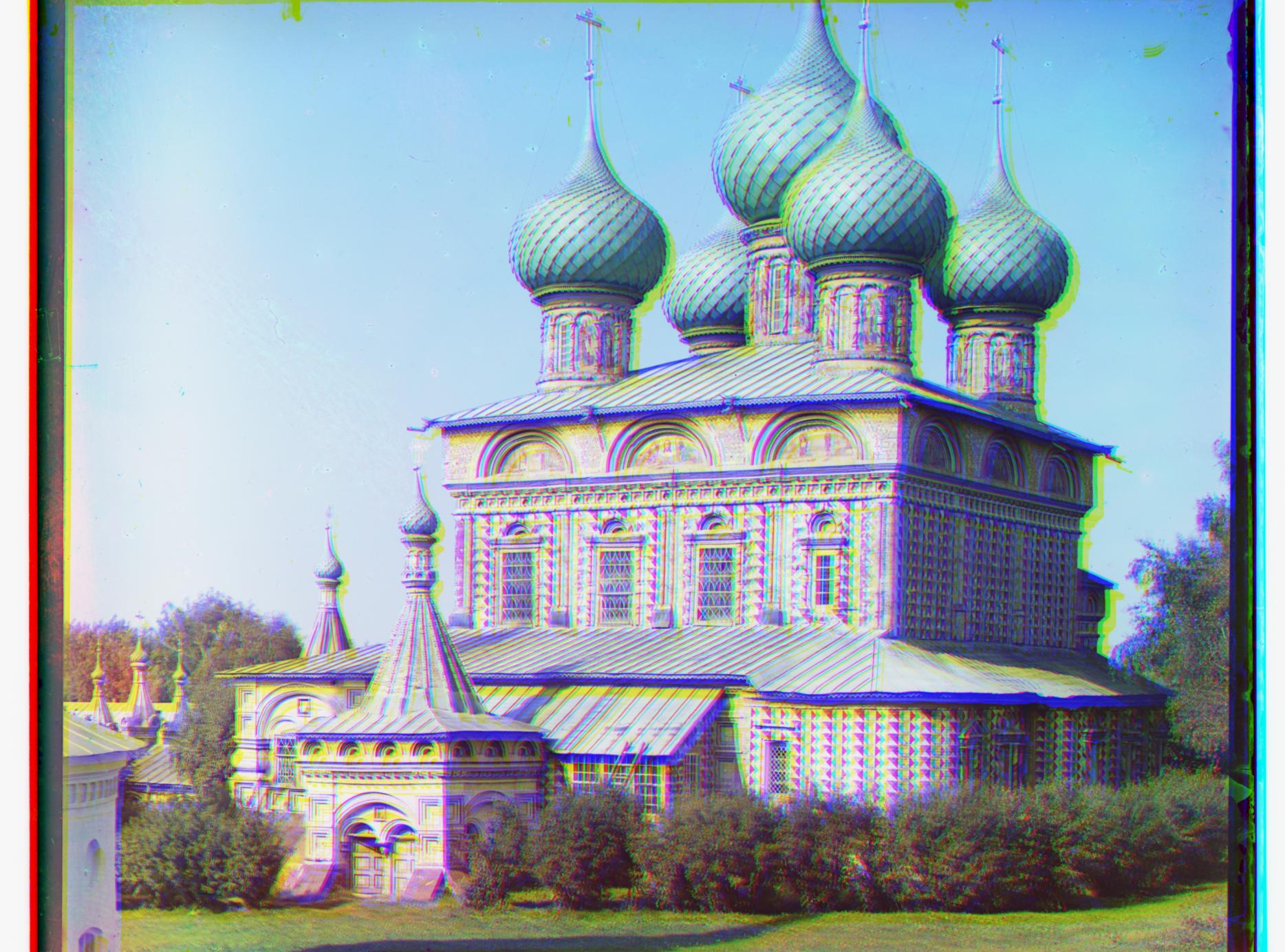

Another head-scratching problem with a stupidly easy fix was a colour issue: all our images came out in a stunning shade of blue. I, knowing nothing about Russia, made the visual colour correction in my head, along with the assumption that since Russia is cold, everything in Russia must be a sappy shade of blue, before noticing that perhaps this was not the correct rgb alignment. After toying around a bit (and a perhaps-unrelated trip to the optometrist), I realised that I had completely forgotten to stack the red channel with the other two, so that was an easy fix.

g_shift: [5 2] | r_shift: [12 3]

time elapsed: 0.443

g_shift: [-3 2] | r_shift: [3 2]

time elapsed: 0.449

g_shift: [3 3] | r_shift: [6 3]

time elapsed: 0.473

Implementing the image pyramid was fairly straightforward, in that I simply reused my original code repeatedly, while also keeping track of the displacement found at the coarser levels and scaling it up appropriately to use as the starting position at the finer levels. The idea behind it was fairly simple to understand, and also pretty straightforward to implement. It's the application and usage of it that really troubled me.
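A minimal sketch of the coarse-to-fine idea is below. It is not my actual implementation: the names `downsample`, `search` and `align_pyramid`, the 2x2 block averaging, the `np.roll` shifting, and the parameter values (`min_size`, `coarse_radius`, `fine_radius`) are all assumptions chosen to keep the example short.

```python
import numpy as np

def downsample(img):
    """Halve the resolution by averaging non-overlapping 2x2 blocks."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    img = img[:h, :w]
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def search(moving, reference, center, radius):
    """Exhaustive search in a window of +-radius around `center`."""
    best_score, best_shift = np.inf, center
    for dy in range(center[0] - radius, center[0] + radius + 1):
        for dx in range(center[1] - radius, center[1] + radius + 1):
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))
            score = np.mean((shifted - reference) ** 2)
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift

def align_pyramid(moving, reference, min_size=32,
                  coarse_radius=15, fine_radius=2):
    """Align at the coarsest level first, then refine at each finer level."""
    if min(moving.shape) <= min_size:
        # Coarsest level: wide search starting from no displacement.
        return search(moving, reference, (0, 0), coarse_radius)
    coarse = align_pyramid(downsample(moving), downsample(reference),
                           min_size, coarse_radius, fine_radius)
    # One coarse pixel is two pixels at this level, so double the estimate
    # and only search a small window around it.
    center = (2 * coarse[0], 2 * coarse[1])
    return search(moving, reference, center, fine_radius)

# Synthetic check with a displacement too large for a 15 pixel search.
rng = np.random.default_rng(1)
reference = rng.random((128, 128))
moving = np.roll(reference, (-12, 20), axis=(0, 1))
shift = align_pyramid(moving, reference)
print(shift)
```

The appeal of this design is that the expensive wide search only ever runs on the smallest image, while each finer level checks just a handful of candidates around the scaled-up estimate.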

The first and largest issue I faced in my implementation of the pyramid alignment strategy was a memory issue. It was a rather peculiar one: when running the program in Python, it kept saying that I didn't have sufficient RAM to store the whole 3k x 3k ndarray representation of the image. This drastically slowed down testing and implementation, and overcomplicated my code to fairly extreme extents, forcing me to convert the float arrays to ubyte, restructure the entire project, and so on. Testing one of my friends' code (which ran fine for them) yielded the same errors, so I think it may be computer-specific, but alas, there's little that anyone can do about it, so I suppose I just had to weather it out. Nevertheless, optimization added no less than 10 hours to my overall project, leaving fairly little time to perfect the actual strategy and resulting in some poorly aligned images (melons, emir and lady in particular). However, for some other images it was fine, so I considered it "acceptable".
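To give a sense of why the ubyte conversion helps, here is a small sketch comparing the memory footprint of a float array with its 8-bit equivalent. The helper `to_ubyte` is a hypothetical stand-in written in plain NumPy, assuming float pixel values in the range 0 to 1. It is not the conversion routine my code actually calls.

```python
import numpy as np

def to_ubyte(img):
    """Convert a float image with values in [0, 1] to uint8 in [0, 255]."""
    return (np.clip(img, 0.0, 1.0) * 255).round().astype(np.uint8)

# A small stand-in for one channel; the real plates are roughly 3k x 3k.
channel = np.random.default_rng(0).random((300, 300))  # float64 by default
small = to_ubyte(channel)

# float64 uses 8 bytes per pixel, uint8 uses 1 byte per pixel.
print(channel.nbytes, small.nbytes)
```

The trade-off is precision: 8-bit values only have 256 levels, so any arithmetic such as squared differences should be done after converting back to a wider type to avoid overflow.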

Something that's less of a "problem" and more of a "whoops, I forgot" was the need to report g_shift and r_shift. My function shifts in a rather strange manner, aligning g to r and modifying b in the same way r is altered, and then repeating the process by aligning b to r and altering g. This means that instead of an overall g_shift and r_shift, I have a compounded deviation value for both the g and b channels relative to r. These numbers may mean nothing, but I will list them here regardless.

The debugging and optimization process yielded some rather interesting artifacts, most of which were fixed by just random tinkering, such as this image, which I have labelled "cursed" on my end. Thinking it funny, I decided to use a slightly less efficient version of my alignment algorithm to generate a slightly more cursed image. So what, I have a broken sense of humour. Sue me.

best_dx: [34, 41], best_dy: [0, 0]

time elapsed: 14.542

best_dx: [77, 77], best_dy: [4, 9]

time elapsed: 14.399

best_dx: [87, 89], best_dy: [8, 54]

time elapsed: 14.326

best_dx: [51, 63], best_dy: [3, 11]

time elapsed: 15.088

best_dx: [54, 7], best_dy: [89, 0]

time elapsed: 22.269

best_dx: [37, 10], best_dy: [70, 20]

time elapsed: 21.936

best_dx: [67, 79], best_dy: [11, 11]

time elapsed: 14.575

best_dx: [37, 41], best_dy: [82, 8]

time elapsed: 14.378

best_dx: [66, 77], best_dy: [0, 2]

time elapsed: 14.462

best_dx: [87, 97], best_dy: [2, 0]

time elapsed: 14.411

best_dx: [56, 67], best_dy: [49, 0]

time elapsed: 14.606