Kaitlyn Lee Project 1 -- CS 194-26 UC Berkeley


Project Description

Sergei Mikhailovich Prokudin-Gorskii envisioned taking color photographs of the Russian Empire by recording three exposures of every scene using red, green, and blue filters.

His RGB glass plate negatives were recently digitized by the Library of Congress, and made available online.

This project aims to reconstruct the color photos by realigning the images digitally.

We separate the images of the negatives into the red, green and blue channels, and then realign them on top of each other to recreate a color photo.
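As a rough illustration of the splitting step, here is a minimal sketch. It assumes (this is not stated above) that each digitized plate is a single grayscale array with the blue, green, and red exposures stacked top to bottom in equal thirds; `split_plate` and `stack_rgb` are hypothetical helper names.

```python
import numpy as np

def split_plate(plate):
    """Split a stacked plate into (r, g, b) channels.

    Assumption: the plate holds the blue, green, and red exposures
    from top to bottom, each taking one third of the height.
    """
    h = plate.shape[0] // 3  # any leftover rows at the bottom are dropped
    b = plate[:h]
    g = plate[h:2 * h]
    r = plate[2 * h:3 * h]
    return r, g, b

def stack_rgb(r, g, b):
    """Combine three equally sized channels into an H x W x 3 color image."""
    return np.dstack([r, g, b])
```

Stacking the channels without any alignment gives the "unaligned" images; the parts below are about finding the shifts to apply before stacking.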

Part 1: Exhaustive Search (Low-res images)

For low-resolution images, since they're relatively small and the possible displacement is also relatively small, we can exhaustively search an area of displacement for the best possible alignment based on the sum of squared differences (SSD) of pixel values between layers.

Here, I aligned the red and green channels onto the blue channel. I searched displacements in the range [-15, 15] pixels in both the x and y directions. When calculating the SSD of two channels, I only considered the middle 80% of the image (that is, the middle 80% of the height and the middle 80% of the width). This reduces the error from the borders (which are not part of the image) as well as the erroneous values that wrap around when a channel is rolled to test an offset.
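The search described above can be sketched roughly as follows. This is a minimal sketch rather than the exact project code: the function names are hypothetical, shifting is done with `np.roll`, and the returned offset is assumed to be ordered (x, y) to match the offsets listed on this page.

```python
import numpy as np

def crop_center(img, frac=0.8):
    """Return the central `frac` of the image in both height and width."""
    h, w = img.shape
    dh = int(h * (1 - frac) / 2)
    dw = int(w * (1 - frac) / 2)
    return img[dh:h - dh, dw:w - dw]

def ssd(a, b):
    """Sum of squared differences between two equally sized arrays."""
    diff = a.astype(np.float64) - b.astype(np.float64)
    return np.sum(diff ** 2)

def align_exhaustive(channel, base, radius=15):
    """Try every shift in [-radius, radius]^2 and keep the lowest-SSD one.

    Returns (dx, dy): the shift to apply to `channel` so it lines up with `base`.
    """
    base_crop = crop_center(base)
    best_score, best_shift = np.inf, (0, 0)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # np.roll wraps pixels around the edges; the center crop
            # keeps those wrapped values out of the score.
            shifted = np.roll(channel, shift=(dy, dx), axis=(0, 1))
            score = ssd(crop_center(shifted), base_crop)
            if score < best_score:
                best_score, best_shift = score, (dx, dy)
    return best_shift
```

With a radius of 15, this scores 31 x 31 = 961 candidate shifts per channel, which is cheap for the small images but motivates the pyramid used for the large ones.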

Unaligned images are on the left, aligned images are on the right.

Cathedral: Green Offset [2, 5] Red Offset [3, 12]

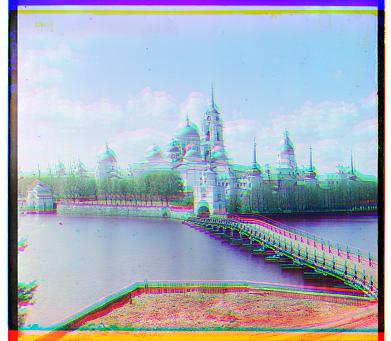

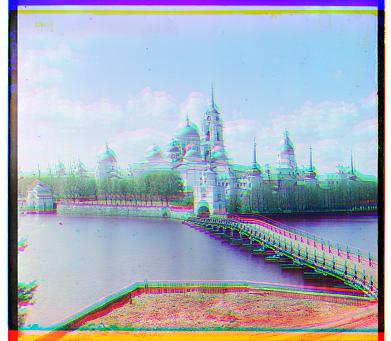

Monastery: Green Offset [2, -3] Red Offset [2, 3]

Tobolsk: Green Offset [3, 3] Red Offset [3, 6]

Part 2: Pyramid Search (High-res images)

For larger images, exhaustively searching every candidate displacement is extremely costly: the displacement can be up to 200 pixels away from the original alignment, and computing the SSD on such large images is very expensive. To reduce the computation time, I used an image pyramid to form an estimate of the displacement on a downscaled image, and then refined that estimate at each higher-resolution layer.

If an image or pyramid level was smaller than 200x200, I simply did an exhaustive search with a range of [-10, +10] from the original alignment. If not, I reran the recursive alignment method on a resized version of the image in which each side is 2x smaller, to get an estimated displacement.

Given this estimate, we then multiply the estimate coordinates by 2 and do an exhaustive search with a range of [-10, +10] centered around the displacement estimate.
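The recursion described above can be sketched as below. This is a sketch under assumptions: `halve` uses 2x2 block averaging as a stand-in for whatever resize routine was actually used, the base case triggers when either side is below `min_size`, and all function names are hypothetical.

```python
import numpy as np

def ssd_center(a, b, frac=0.8):
    """SSD over the central `frac` of two equally sized images."""
    h, w = a.shape
    dh, dw = int(h * (1 - frac) / 2), int(w * (1 - frac) / 2)
    diff = (a[dh:h - dh, dw:w - dw].astype(np.float64)
            - b[dh:h - dh, dw:w - dw].astype(np.float64))
    return np.sum(diff ** 2)

def search(channel, base, center, radius=10):
    """Exhaustive search over shifts within `radius` of `center` = (dx, dy)."""
    cx, cy = center
    candidates = [(dx, dy)
                  for dy in range(cy - radius, cy + radius + 1)
                  for dx in range(cx - radius, cx + radius + 1)]
    return min(candidates,
               key=lambda s: ssd_center(
                   np.roll(channel, shift=(s[1], s[0]), axis=(0, 1)), base))

def halve(img):
    """Downscale by 2 per side using 2x2 block averaging (an assumption)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    return img[:h, :w].reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def align_pyramid(channel, base, min_size=200, radius=10):
    """Coarse-to-fine alignment; returns the (dx, dy) shift for `channel`."""
    h, w = base.shape
    if min(h, w) < min_size:
        # Coarsest level: search around the original alignment.
        return search(channel, base, (0, 0), radius)
    # Estimate on the half-size images, then double it and refine here.
    ex, ey = align_pyramid(halve(channel), halve(base), min_size, radius)
    return search(channel, base, (2 * ex, 2 * ey), radius)
```

Each level only scores 21 x 21 = 441 shifts, yet doubling the estimate at every level lets the final displacement grow far beyond 10 pixels, which is how offsets above 100 pixels are reached.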

Since most images were around 3200px by 3700px, most image pyramids were 5 layers high (that is, the images were scaled down by half 4 times). Most images finished processing in 40-50 seconds. The images below all used the blue channel as the base channel.

Church: Green Offset [4, 25] Red Offset [-4, 58]

Train: Green Offset [6, 43] Red Offset [32, 87]

Harvesters: Green Offset [16, 59] Red Offset [13, 124]

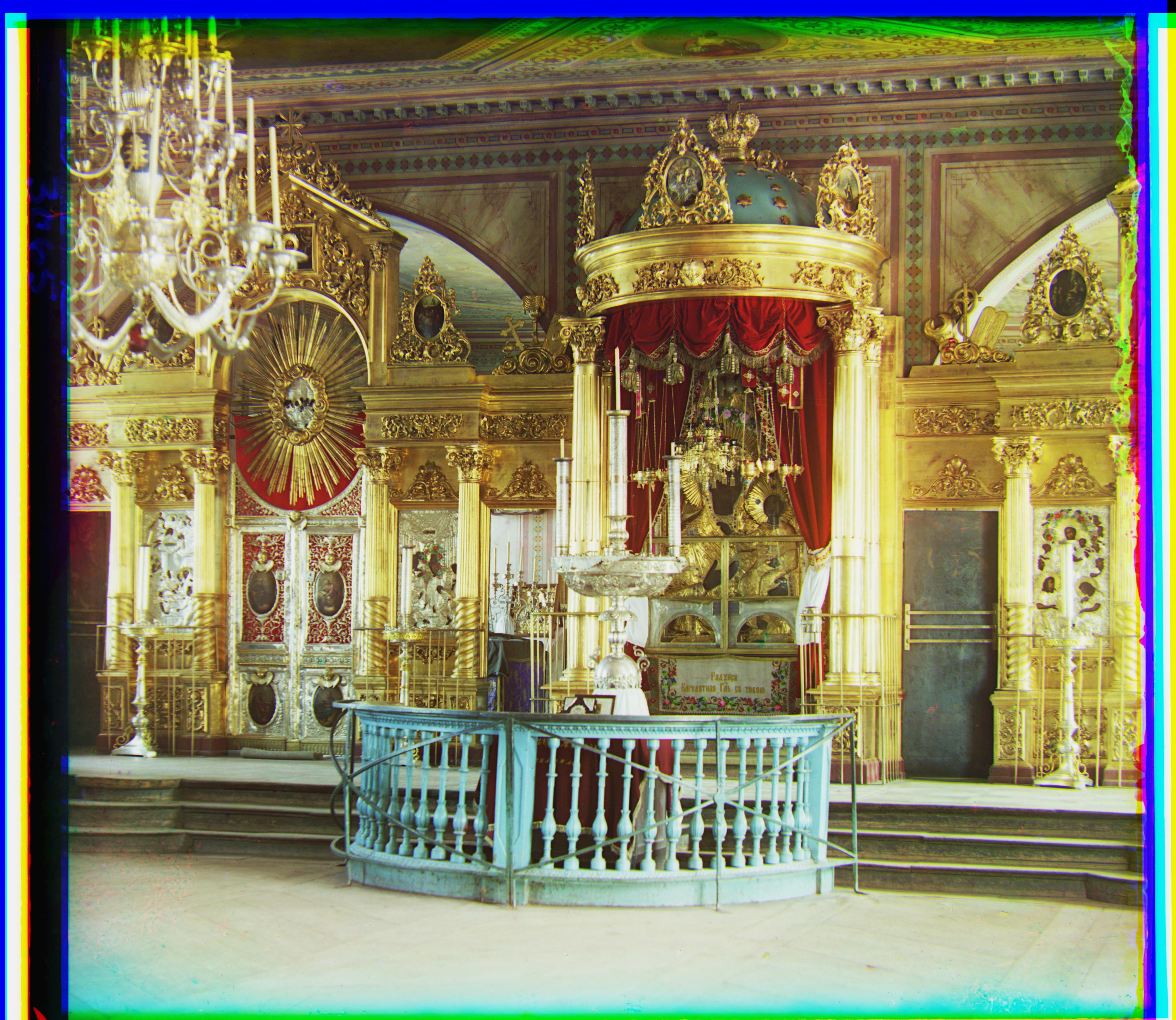

Icon: Green Offset [17, 41] Red Offset [23, 90]

Lady: Green Offset [8, 56] Red Offset [11, 116]

Melons: Green Offset [11, 82] Red Offset [13, 178]

Onion Church: Green Offset [27, 51] Red Offset [36, 108]

Self-portrait: Green Offset [29, 79] Red Offset [37, 176]

Three Generations: Green Offset [14, 53] Red Offset [11, 112]

Workshop: Green Offset [0, 53] Red Offset [-12, 105]

Emir: Green Offset [24, 49] Red Offset [57, 103]

Emir didn't align perfectly, because the different channels had such varying brightness values that it was hard to minimize the SSD to calculate an optimal match. I modify this photo in the modifications section.

Modifications

I found that Emir didn't line up perfectly, since the different channels had different brightness values, which made aligning based on the sum of squared differences (SSD) inaccurate. To fix this, I experimented with switching the base channel; that is, aligning to the green or red channel instead of to blue. I found that aligning Emir to the green channel gave the best result, and was much clearer than the initial image.

The first image is the original image from the method used above, and the second is the improved image aligned to the green channel.

First image (aligned on blue): Green Offset [24, 49] Red Offset [57, 103]

Second image (aligned on green): Red Offset [17, 57] Blue Offset [-24, -49]

Images of My Own Choosing

I also chose some other images from the Library of Congress and ran my algorithm on them.

Vokhnovo: Green Offset [8, -8] Red Offset [-4, 3]

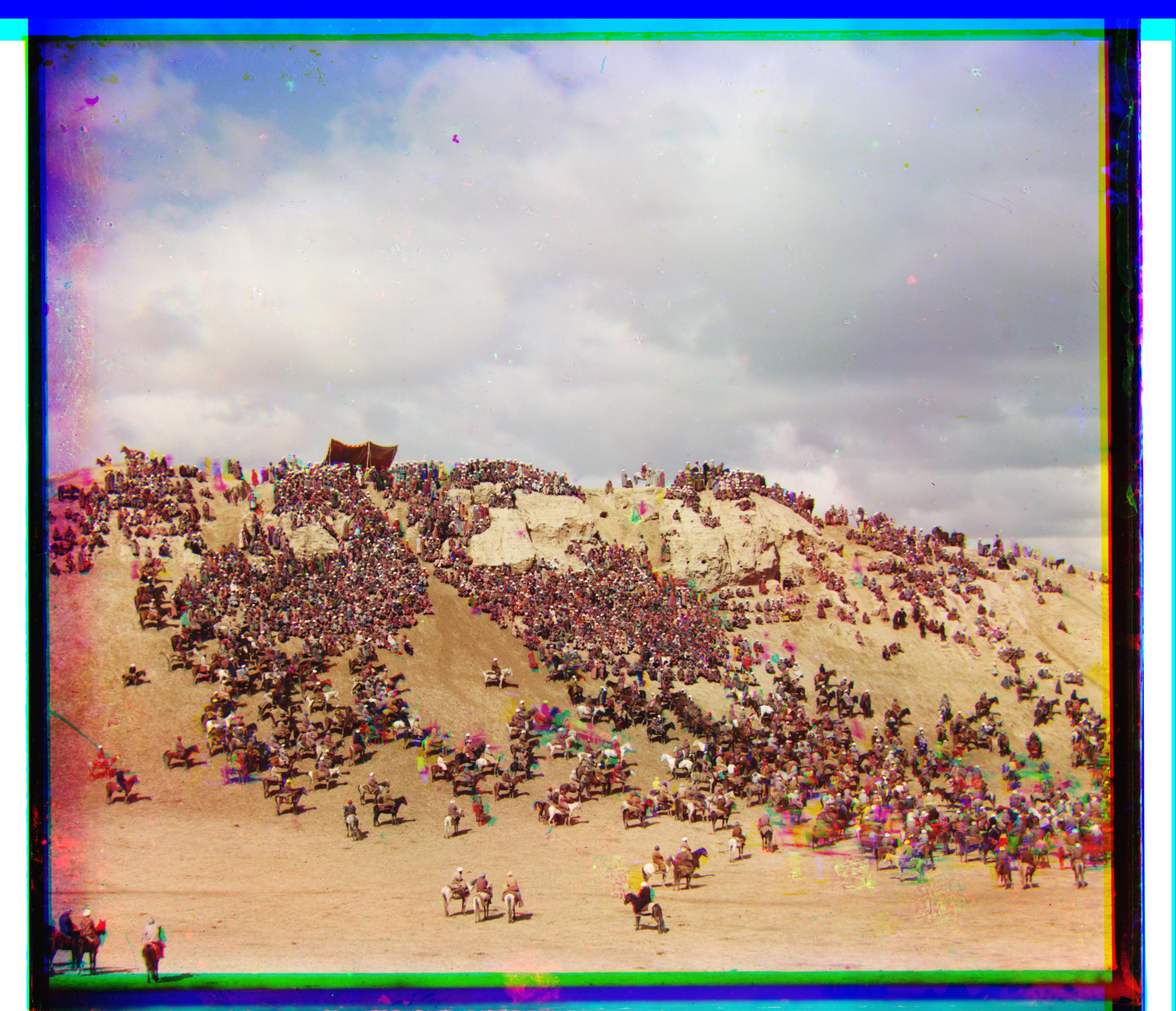

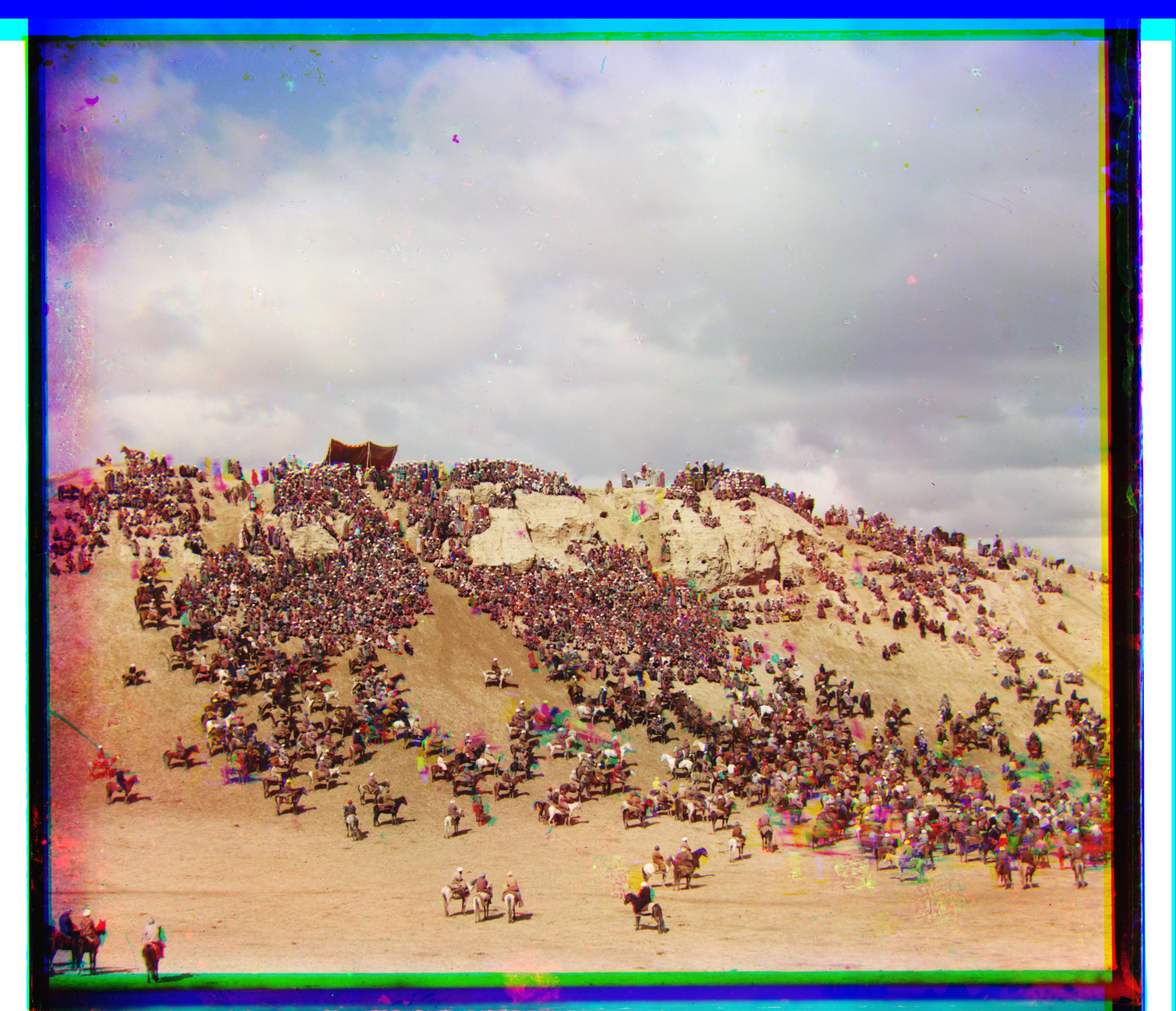

Bayga Samarkand: Green Offset [-1, 59] Red Offset [-14, 129]

The colored artifacts are likely a result of people moving between exposures, leaving gaps where a person is present in one exposure but not in another. Since this is an error in the photos themselves, it cannot be fixed by aligning the channels.

Chusovo: Green Offset [-5, 40] Red Offset [-32, 108]

Bells & Whistles

Although I was not able to completely trim the borders, I was able to trim off some of the colored borders by using the displacements generated by the alignment methods. Using the displacements, I trimmed the areas where not all 3 channels were present because one or two of them had been shifted.

This helped eliminate some of the bright stripes at the top and bottom of images.
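One way to sketch this trimming is shown below. It assumes the channels were shifted with `np.roll` by (dx, dy) offsets like those listed on this page, so that a positive shift leaves wrapped-around pixels at the top or left edge and a negative shift leaves them at the bottom or right edge; `trim_to_overlap` is a hypothetical name.

```python
import numpy as np

def trim_to_overlap(rgb, shifts):
    """Crop an aligned H x W x 3 image to the area covered by all channels.

    `shifts` lists the (dx, dy) displacement applied to each moved channel;
    the base channel is accounted for with an implicit (0, 0).
    """
    dxs = [dx for dx, _ in shifts] + [0]
    dys = [dy for _, dy in shifts] + [0]
    h, w = rgb.shape[:2]
    top, bottom = max(dys), h + min(dys)   # min(dys) <= 0, so this trims the bottom
    left, right = max(dxs), w + min(dxs)   # min(dxs) <= 0, so this trims the right
    return rgb[top:bottom, left:right]
```

For example, with the Workshop offsets (green [0, 53], red [-12, 105]) this would drop 105 rows from the top and 12 columns from the right. It only removes the strips introduced by shifting, not the plate borders themselves.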

Some examples of images where this helped were:

Train

Harvesters

Melons

Lady