Overview

This project aims to colorize the Prokudin-Gorskii photo collection, which consists of photos of the same objects and scenes taken through red, green, and blue filters. Once properly aligned and stacked, these three filtered images recreate a color representation of the original object or scene. I implemented two approaches to this problem.

The first is an exhaustive search, which scores every x and y translation within a fixed window for the red and green filter images. The score is the sum of squared differences between their pixel values and those of the blue filter image, and the translation with the minimum score is used to align each filtered image.

The second approach, an image pyramid, builds on the first to handle larger images. It repeatedly downscales the original images by a factor of 2 and runs the exhaustive search recursively. As the optimal translation for each scaled-down image is returned, it is doubled and applied to the image one level up (twice as large), and the optimal translation is recomputed for that larger image. When the recursion returns to the original image size, the compounded 'r' and 'g' shifts are used to align the filtered images. The sections below describe the algorithm implementations in more detail and show example results.

Algorithm

Part 1: Exhaustive Search

The exhaustive search algorithm uses a brute-force approach to find the offset with the lowest error within a given range. For smaller images, such as the first three (cathedral, monastery, and tobolsk), this approach runs relatively quickly. The exhaustive implementation searches the range [-15, 15] pixels on BOTH axes of translation. The loss function used to score each possible shift is the sum of squared differences (SSD):

$$\text{SSD} = \sum_{x}\sum_{y} \big(\text{image1}(x, y) - \text{image2}(x, y)\big)^2$$

This summation is computed in the getError function, which uses vectorized NumPy operations instead of per-pixel loops: it subtracts the two arrays element-wise, squares the differences with np.square, and adds them up with np.sum.
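A minimal sketch of an SSD function in the spirit of getError is below. It is not the project's own code; the name get_error and the cast to float64 are assumptions added for illustration. The cast matters because subtracting unsigned 8-bit arrays in NumPy wraps around instead of going negative.

```python
import numpy as np

def get_error(img1: np.ndarray, img2: np.ndarray) -> float:
    """Sum of squared differences (SSD) between two same-shaped images."""
    # Cast to float64 so subtracting unsigned-integer images cannot wrap around.
    diff = img1.astype(np.float64) - img2.astype(np.float64)
    # Square element-wise, then sum over every pixel on both axes.
    return float(np.sum(np.square(diff)))

a = np.array([[0.0, 1.0], [2.0, 3.0]])
b = np.array([[1.0, 1.0], [0.0, 3.0]])
print(get_error(a, b))  # 5.0
```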

To align the images, the align function uses nested for loops to iterate over every combination of x and y translations within the [-15, 15] range. For each combination, it shifts the first image (img1) with np.roll and calls getError to score it against an anchor image. A running minimum of the error determines which shift is ultimately returned. In this implementation, the anchor image was 'b', so align(r, b) and align(g, b) were run to find the best-fitting shifts for the 'r' and 'g' images. The shifted 'r' and 'g' images are then stacked with 'b' using np.dstack to produce a color image.
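The exhaustive search described above can be sketched as follows, assuming single-channel float arrays of equal shape. The names get_error and align and the (dy, dx) return convention are illustrative assumptions, not the project's actual implementation. The usage example builds synthetic misaligned channels from random noise so the expected shifts are known in advance.

```python
import numpy as np

def get_error(img1, img2):
    # SSD between two same-shaped images (float cast avoids integer wrap-around).
    diff = img1.astype(np.float64) - img2.astype(np.float64)
    return float(np.sum(np.square(diff)))

def align(img1, anchor, window=15):
    """Try every shift in [-window, window] on both axes.

    Returns the (dy, dx) shift of img1 that minimizes SSD against anchor.
    """
    best_shift, best_err = (0, 0), np.inf
    for dy in range(-window, window + 1):
        for dx in range(-window, window + 1):
            # np.roll shifts rows (axis 0) by dy and columns (axis 1) by dx, wrapping at edges.
            shifted = np.roll(img1, (dy, dx), axis=(0, 1))
            err = get_error(shifted, anchor)
            if err < best_err:  # keep a running minimum
                best_err, best_shift = err, (dy, dx)
    return best_shift

# Synthetic example: 'g' and 'r' are copies of 'b' rolled by known offsets.
rng = np.random.default_rng(0)
b = rng.random((60, 60))
g = np.roll(b, (3, -2), axis=(0, 1))
r = np.roll(b, (-5, 4), axis=(0, 1))
g_shift = align(g, b)
r_shift = align(r, b)
print(g_shift, r_shift)  # (-3, 2) (5, -4)

# Apply the shifts and stack as an RGB image.
color = np.dstack([np.roll(r, r_shift, axis=(0, 1)),
                   np.roll(g, g_shift, axis=(0, 1)),
                   b])
```

Because np.roll wraps pixels around the edges, the rows and columns that wrap still contribute to the SSD; cropping borders before scoring is one way to reduce their influence.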

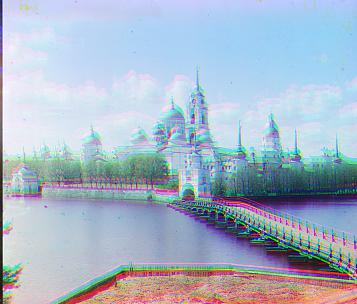

Below are the results for the first part of the project. The images on the left were produced by splitting each Prokudin-Gorskii photo vertically into three equal parts and stacking them with np.dstack, without any alignment. The images on the right show the result after the 'r' and 'g' images are shifted according to the exhaustive search. The exact shifts of the 'r' and 'g' images are displayed below each aligned image.
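Producing the unaligned images on the left amounts to slicing the photo into thirds and stacking them. The sketch below assumes the channels are stacked top to bottom as blue, green, red (the usual layout of these plates, but an assumption here); split_plate is a hypothetical helper name.

```python
import numpy as np

def split_plate(plate):
    """Split a vertically stacked plate into three equal-height channels.

    Assumes the order from top to bottom is blue, green, red.
    """
    height = plate.shape[0] // 3  # height of one channel; leftover rows are dropped
    b = plate[:height]
    g = plate[height:2 * height]
    r = plate[2 * height:3 * height]
    return b, g, r

plate = np.arange(9 * 4, dtype=np.float64).reshape(9, 4)  # toy 9x4 "plate"
b, g, r = split_plate(plate)
unaligned = np.dstack([r, g, b])  # naive RGB stack, no alignment
print(unaligned.shape)  # (3, 4, 3)
```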


From the images above, it is evident that the exhaustive search effectively aligns and colorizes the Prokudin-Gorskii images. One key observation is the presence of alignment artifacts along the image edges (most prominent in the cathedral image). These artifacts occur because a fixed percentage of each image was cropped, rather than detecting and cropping the 'r', 'g', and 'b' borders automatically. After shifting, the leftover borders remain visible along the edges of the aligned image.
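The fixed-percentage crop described above could look like the sketch below. The helper name crop_border and the 10% default are hypothetical, since the exact percentage used in this project is not stated. Because the same fraction is trimmed no matter where each channel's real border lies, any border wider than the trimmed margin survives into the aligned result.

```python
import numpy as np

def crop_border(img, frac=0.1):
    """Trim a fixed fraction of the height and width from every side."""
    h, w = img.shape[:2]
    dh, dw = int(h * frac), int(w * frac)  # margin in pixels on each side
    return img[dh:h - dh, dw:w - dw]

cropped = crop_border(np.zeros((100, 50)), frac=0.1)
print(cropped.shape)  # (80, 40)
```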

Part 2: Image Pyramid

The image pyramid approach builds on top of the exhaustive implementation so that it runs efficiently on much larger images. The examples used to assess the algorithm's efficiency are .tiff images of around 70MB each; for reference, the images above were around 150KB each. This approach uses recursion to downscale the images by a factor of 2 for a specified number of "layers."
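To see why a few layers are enough, assume (as in Part 1) that a [-15, 15] window is searched at every layer and that each coarse shift is doubled when moving up one layer. Let $d_k$ be the refinement found at layer $k$, where $k = 0$ is full resolution and $k = L$ is the coarsest layer. The full-resolution shift is then

$$s_0 = \sum_{k=0}^{L} 2^k d_k, \qquad |d_k| \le 15 \;\Rightarrow\; |s_0| \le 15\,\big(2^{L+1} - 1\big).$$

For example, with $L = 4$ the reachable range is $\pm 465$ pixels. A single full-resolution search over that range would need $931^2 = 866{,}761$ candidate shifts, while the pyramid evaluates $31^2 = 961$ shifts per layer, and each coarser layer has only a quarter of the pixels of the one above it.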

The basic algorithm is as follows. pyramid_align recursively downscales both input images by a factor of 2, decrementing layers at each level, until layers reaches 0. The base case calls align on the lowest-resolution images and returns a shift. One level up the recursion stack, this shift is scaled up by a factor of 2 and applied to the images at that level (which are twice as large as those below). align is then called on the shifted image to refine the estimate, and the combined shift is returned. This continues until the top level, at the original image size, returns the optimal alignment to the caller.
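The recursion described above can be sketched as follows. This is an illustrative reconstruction, not the project's code: the downscale2 helper (which halves resolution by averaging 2x2 blocks) is an assumption, since the rescaling method used in the project is not stated, and the ±15 window is reused at every layer. get_error and align are repeated so the block runs on its own.

```python
import numpy as np

def get_error(img1, img2):
    # Sum of squared differences; float cast avoids integer wrap-around.
    diff = img1.astype(np.float64) - img2.astype(np.float64)
    return float(np.sum(np.square(diff)))

def align(img1, anchor, window=15):
    # Exhaustive search over [-window, window] on both axes, minimizing SSD.
    best_shift, best_err = (0, 0), np.inf
    for dy in range(-window, window + 1):
        for dx in range(-window, window + 1):
            err = get_error(np.roll(img1, (dy, dx), axis=(0, 1)), anchor)
            if err < best_err:
                best_err, best_shift = err, (dy, dx)
    return best_shift

def downscale2(img):
    # Hypothetical helper: halve resolution by averaging 2x2 blocks
    # (an odd trailing row/column is dropped).
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    return img[:h, :w].reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def pyramid_align(img1, anchor, layers, window=15):
    """Coarse-to-fine alignment: returns the (dy, dx) shift of img1 onto anchor."""
    if layers == 0:
        return align(img1, anchor, window)  # base case: smallest images
    # Recurse on half-resolution copies, then double the coarse shift.
    dy, dx = pyramid_align(downscale2(img1), downscale2(anchor), layers - 1, window)
    dy, dx = 2 * dy, 2 * dx
    # Apply the scaled-up shift, then refine at this resolution.
    shifted = np.roll(img1, (dy, dx), axis=(0, 1))
    ry, rx = align(shifted, anchor, window)
    return dy + ry, dx + rx

rng = np.random.default_rng(0)
b = rng.random((128, 128))
g = np.roll(b, (40, -36), axis=(0, 1))  # offset larger than the ±15 window
print(pyramid_align(g, b, layers=2))  # (-40, 36)
```

The 40- and 36-pixel offsets lie outside the ±15 window, so a single call to align could not find them, while two layers of the pyramid recover them exactly.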

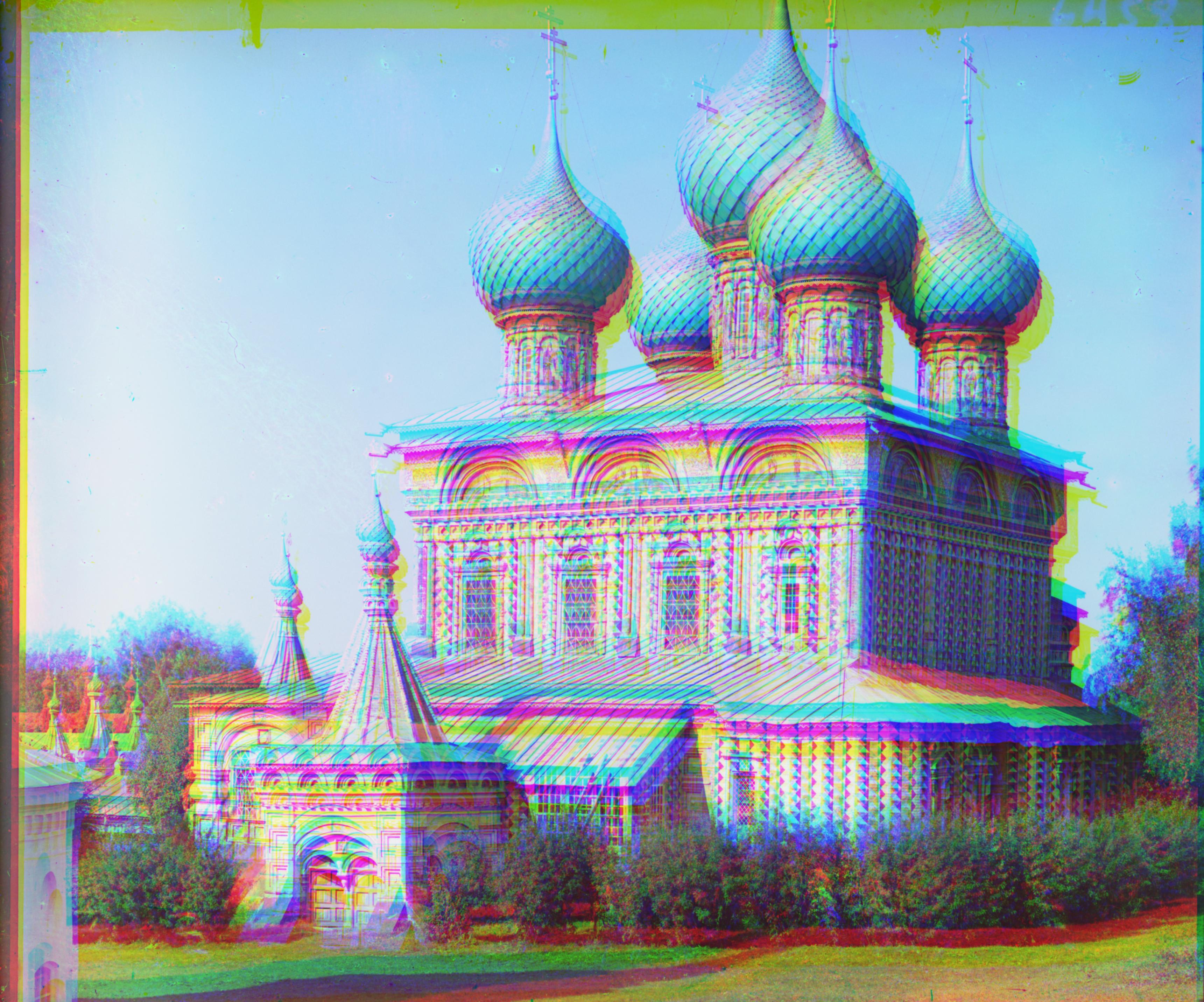

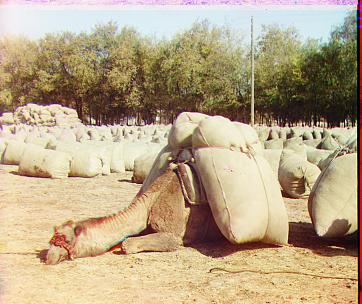

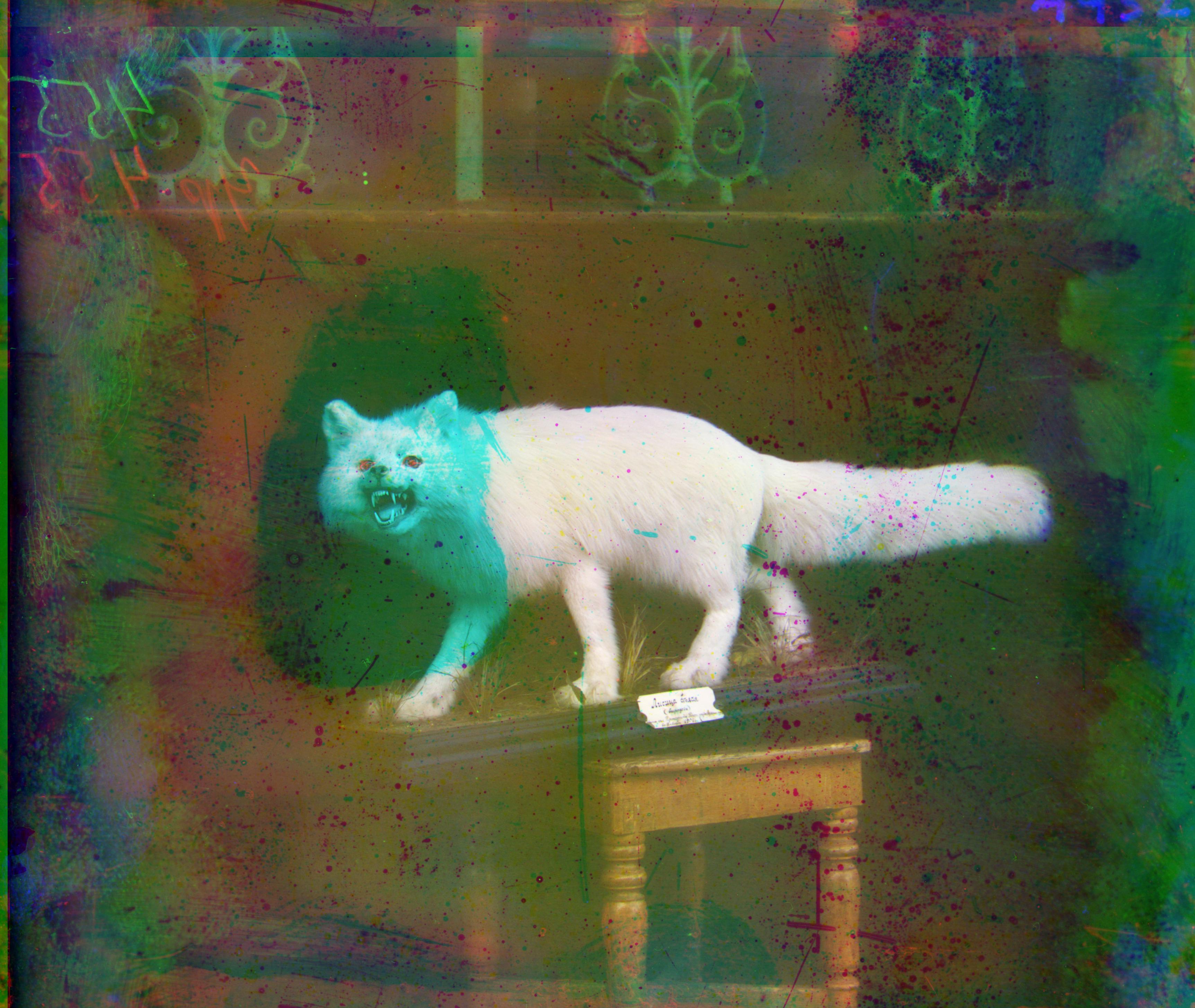

The results of calling pyramid_align on the significantly larger .tiff files are below. Note that the images have been converted to .jpg and downscaled to make the changes easier to compare. Again, the images on the left are the stacked channels before running pyramid_align, and the images on the right are the results after.


As we can see, the images are noticeably aligned and colorized for the most part. One case stands out as a clearly suboptimal alignment: emir.tiff. As noted in the project spec, this failure is attributed to the channels having dissimilar brightness values. To align this case effectively, a metric other than the sum of squared differences should be used to score each candidate alignment.
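One commonly used alternative to SSD is normalized cross-correlation (NCC), which subtracts each image's mean and divides by its norm, so a channel that is uniformly brighter or has higher contrast still scores as a perfect match. It is swapped in here for illustration only: it was not used in this project, and this sketch does not show whether NCC alone fixes emir.tiff. ncc_score is a hypothetical name.

```python
import numpy as np

def ncc_score(img1, img2):
    """Normalized cross-correlation of two same-shaped images (higher is better)."""
    a = img1.astype(np.float64).ravel()
    b = img2.astype(np.float64).ravel()
    # Remove each image's mean brightness.
    a = a - a.mean()
    b = b - b.mean()
    # Divide by the norms so overall contrast does not affect the score.
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0

rng = np.random.default_rng(1)
x = rng.random((20, 20))
dimmer = 0.5 * x + 0.2  # same structure, different brightness and contrast
print(round(ncc_score(x, dimmer), 6))  # 1.0
```

To use NCC in the exhaustive search, the running minimum would become a running maximum (or the search would minimize -ncc_score).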

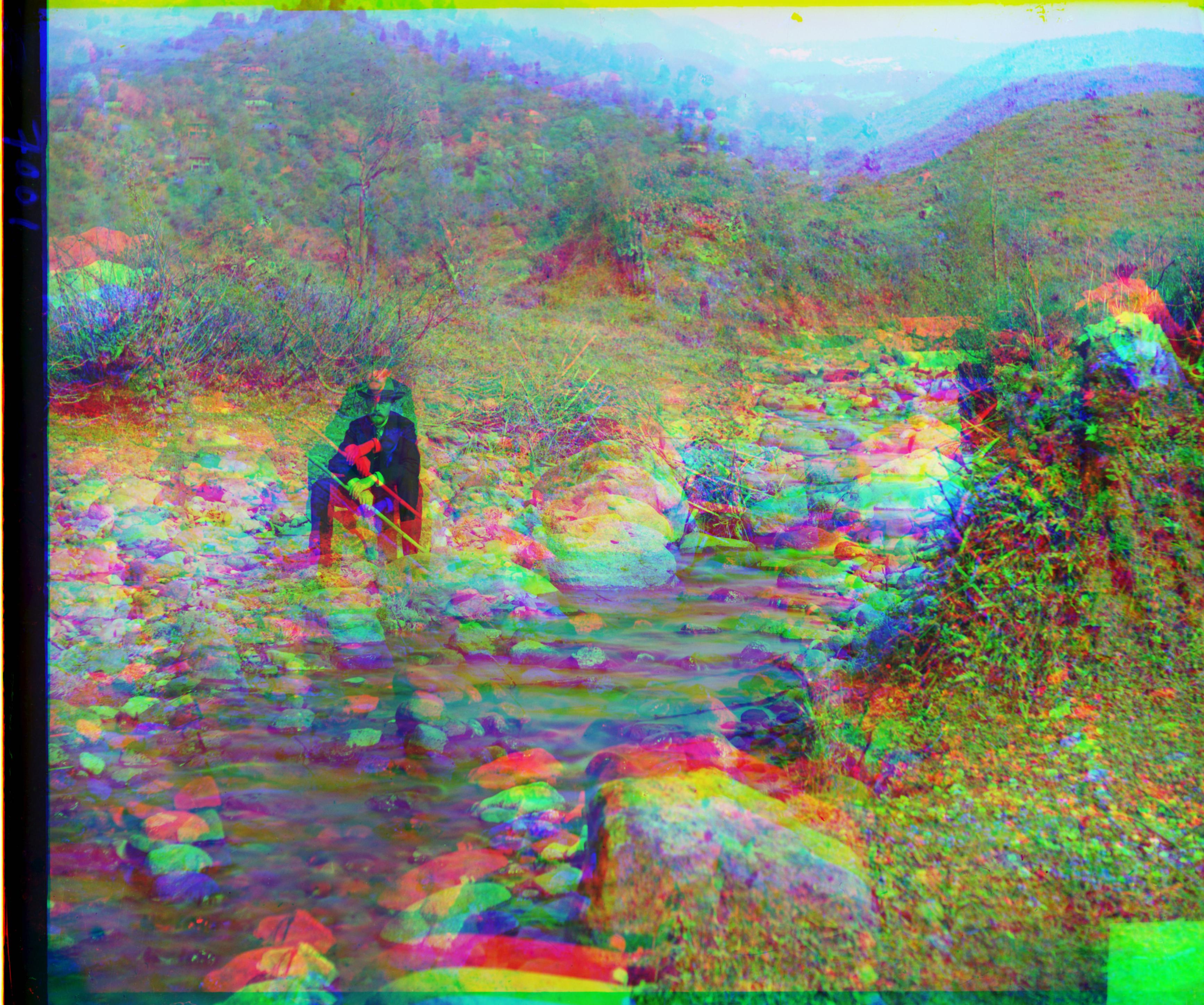

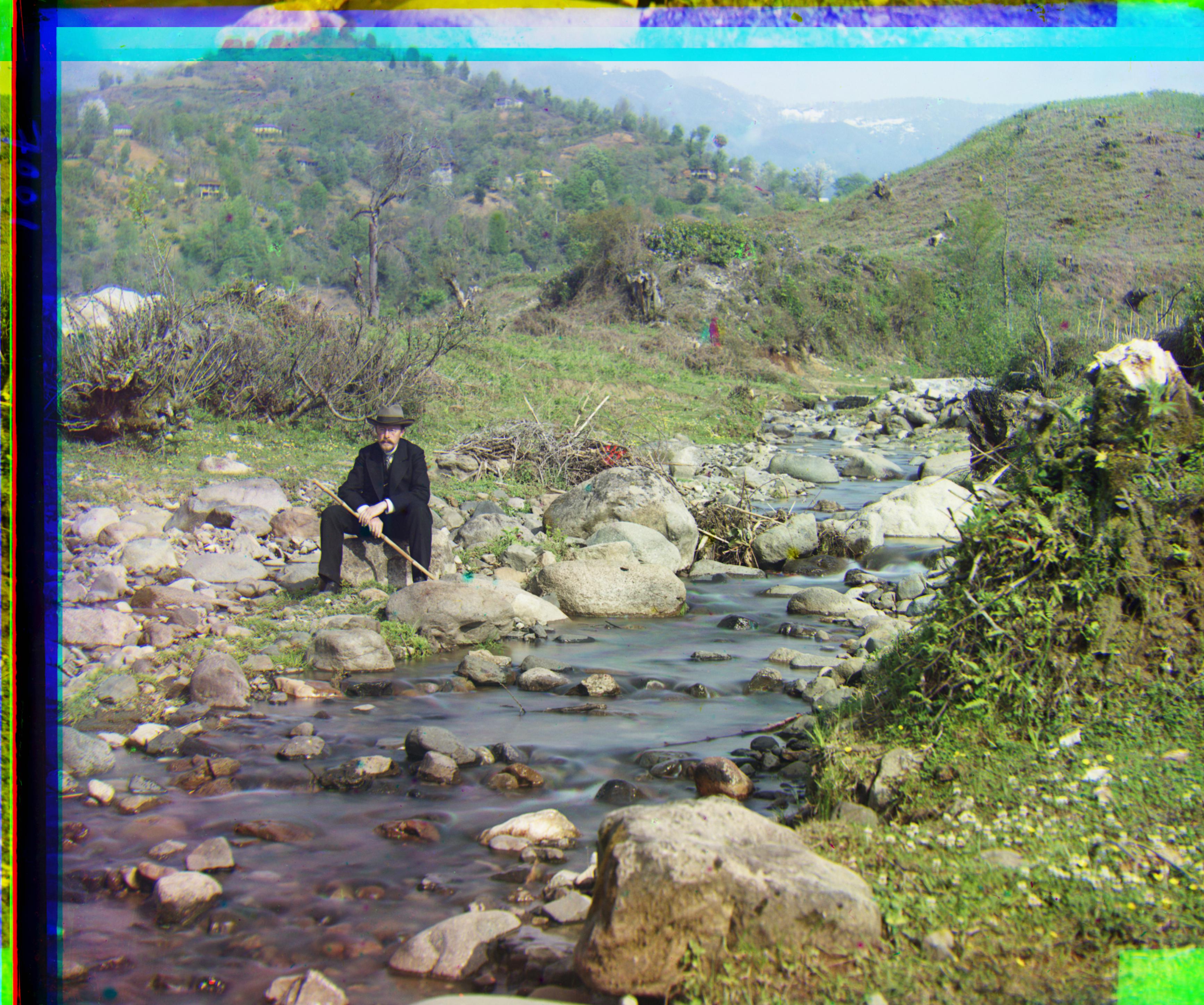

Extra Cases

Here are some other images from the Prokudin-Gorskii collection:
