CS 194-26: Images of the Russian Empire: Colorizing the Prokudin-Gorskii Photo Collection

Amol Pant

Background

Sergei Mikhailovich Prokudin-Gorskii (1863-1944) [Сергей Михайлович Прокудин-Горский, to his Russian friends] was a man well ahead of his time.

Convinced, as early as 1907, that color photography was the wave of the future, he won the Tsar's special permission to travel across the vast Russian Empire and take color photographs of everything he saw, including the only color portrait of Leo Tolstoy.

And he really photographed everything: people, buildings, landscapes, railroads, bridges... thousands of color pictures! His idea was simple: record three exposures of every scene onto a glass plate using a red, a green, and a blue filter.

Never mind that there was no way to print color photographs until much later -- he envisioned special projectors to be installed in "multimedia" classrooms all across Russia where the children would be able to learn about their vast country.

Alas, his plans never materialized: he left Russia in 1918, right after the revolution, never to return again. Luckily, his RGB glass plate negatives, capturing the last years of the Russian Empire, survived and were purchased in 1948 by the Library of Congress.

The LoC has recently digitized the negatives and made them available on-line.

Objective

The objective of this project is to take the RGB channels of Prokudin-Gorskii's images and create a program for aligning them. We begin with a naive exhaustive search for aligning lower-resolution images. Once that works, we implement a more efficient pyramid search to speed up the process for higher-resolution images.

Naive Exhaustive Search

We start off by dividing the input black-and-white image into three channels, one each for R, G, and B. Now, we begin our alignment process.
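As a minimal sketch of this splitting step: the code below assumes the scan stacks the three exposures vertically in the order B, G, R from top to bottom, which is not stated above. The name `split_channels` is hypothetical.

```python
import numpy as np

def split_channels(plate):
    """Split a vertically stacked plate scan into three equal-height channels.

    Assumption: the exposures are ordered B, G, R from top to bottom.
    """
    height = plate.shape[0] // 3           # leftover rows (if any) are dropped
    b = plate[:height]
    g = plate[height:2 * height]
    r = plate[2 * height:3 * height]
    return r, g, b

# Tiny synthetic "plate": 7 rows, so one leftover row is discarded.
plate = np.arange(7 * 4, dtype=float).reshape(7, 4)
r, g, b = split_channels(plate)
```

All three channels come back with the same shape, which the later alignment steps rely on.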

To align any two channels, we first crop the images by about 10% on each side to get rid of the black borders, which are essentially just noise and throw off our matching algorithm. This also reduces the image to 64% of its original area (80% of the width times 80% of the height).
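The cropping step might look roughly like the sketch below. The helper name `crop_border` and its `fraction` parameter are illustrative, not taken from the project code. Trimming 10% from each of the four sides keeps 80% of each dimension, so 0.8 × 0.8 = 64% of the pixels remain.

```python
import numpy as np

def crop_border(channel, fraction=0.10):
    """Trim `fraction` of the height and width from every side of a 2-D channel."""
    h, w = channel.shape
    dy, dx = int(h * fraction), int(w * fraction)
    return channel[dy:h - dy, dx:w - dx]

channel = np.zeros((100, 200))
cropped = crop_border(channel)
kept = cropped.size / channel.size   # fraction of pixels that survive the crop
```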

Next, we set up a window for our search algorithm to iterate over. It essentially just defines the limits of how much of an offset we want to check.

For my project, I set this window by intuition to about [-20, 20] pixels, since the channels aren't offset by much when stacked on one another.

Now, we iterate through the offsets, take the corresponding subset of pixels at each one, and compare them to see how well aligned the images are.

The metric I use is the sum of squared differences (SSD), since it is relatively simple and does the job well enough in our case.
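For reference, SSD adds up the squared per-pixel differences between two equally sized images, so a lower score means a better match and identical images score 0. A small sketch, with a hypothetical function name `ssd`:

```python
import numpy as np

def ssd(a, b):
    """Sum of squared differences between two equally shaped images."""
    # Cast to float first so that uint8 inputs cannot overflow or wrap around.
    diff = np.asarray(a, dtype=np.float64) - np.asarray(b, dtype=np.float64)
    return float(np.sum(diff ** 2))

same = ssd([[1, 2], [3, 4]], [[1, 2], [3, 4]])
different = ssd([[1, 2], [3, 4]], [[1, 2], [3, 6]])
```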

As we iterate, we keep track of the best alignment found so far, then use np.roll to shift the first image so that it stacks on top of the other.
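The search loop described above could be sketched as follows. This is an illustration under a few assumptions rather than the project's actual code: the function name `align_exhaustive` is made up, the score is computed over the whole (already cropped) image, and shifts are returned as (rows, columns), whereas the results below are reported as [Width, Height]. A [-20, 20] window means 41 × 41 = 1681 candidate offsets.

```python
import numpy as np

def align_exhaustive(moving, reference, window=20):
    """Return the (dy, dx) shift of `moving` that minimises SSD against `reference`."""
    moving = np.asarray(moving, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    best_score, best_shift = np.inf, (0, 0)
    for dy in range(-window, window + 1):
        for dx in range(-window, window + 1):
            # np.roll shifts circularly: pixels pushed off one edge wrap to the other.
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))
            score = np.sum((shifted - reference) ** 2)
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift

# Synthetic check: displace a random image, then recover the displacement.
rng = np.random.default_rng(0)
reference = rng.random((40, 40))
moving = np.roll(reference, (-3, 2), axis=(0, 1))
shift = align_exhaustive(moving, reference, window=5)
aligned = np.roll(moving, shift, axis=(0, 1))
```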

I then use this method of aligning 2 images for aligning the RGB channels. I begin by taking the G image and aligning it to the B.

Then I use the same method to align R to B.

Finally we can just stack the shifted R and G images with the B image to get our final color image.
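Once the two shifts are known, composing the color image is a matter of rolling R and G and stacking the result with B. A minimal sketch, where `compose_rgb` is a hypothetical name and the shifts are assumed to be (rows, columns) tuples:

```python
import numpy as np

def compose_rgb(r, g, b, g_shift, r_shift):
    """Shift G and R onto B and stack the three channels into an H x W x 3 image."""
    g_aligned = np.roll(g, g_shift, axis=(0, 1))
    r_aligned = np.roll(r, r_shift, axis=(0, 1))
    return np.dstack([r_aligned, g_aligned, b])   # channel order R, G, B

# Synthetic channels: G and R are displaced copies of B.
b = np.arange(20, dtype=float).reshape(4, 5)
g = np.roll(b, (-1, -2), axis=(0, 1))
r = np.roll(b, (2, 1), axis=(0, 1))
rgb = compose_rgb(r, g, b, g_shift=(1, 2), r_shift=(-2, -1))
```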

Pyramid Speedup

Our naive exhaustive search, albeit simple and fast enough for small JPEG images, struggles performance-wise on larger TIFF images, taking several hours to iterate through the window. The problem is compounded because these large images are also offset by more pixels due to their size, so we would need a window larger than [-20, 20].

To speed up this process, we can keep our window size at [-20, 20] so that the exhaustive search doesn't have to iterate too much. However, to ensure that pictures offset by more than 20 pixels are still aligned, we must repeat the search several times, starting from low-resolution versions of the image and updating our estimates as we get closer and closer to the original resolution.

To do this, I begin by building a pyramid of images, scaling the image down by a factor of 2 at every level. We then align the coarsest images (which is a quick process), get a rough idea of how to align the next larger image, and update our estimates as we go down the pyramid.

I personally created 6 levels of images, scaling down by a factor of 2 at each level, with a window size of [-20, 20] and a crop of 10% from each edge, which cuts each image down to 64% of its original area.

This reduces the runtime from several hours to under a minute for most images.
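The coarse-to-fine idea can be sketched recursively as below. Several details are assumptions made for illustration: the helper names (`downsample2`, `search`, `align_pyramid`) are hypothetical, downscaling is done by averaging 2×2 blocks, and each finer level searches the same window centred on twice the coarser estimate. The project code may differ on all of these.

```python
import numpy as np

def downsample2(img):
    """Halve each dimension by averaging 2x2 blocks (assumed resampling method)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    return img[:h, :w].reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def search(moving, reference, center, window):
    """Exhaustive SSD search over shifts within `window` of `center`."""
    best_score, best = np.inf, center
    for dy in range(center[0] - window, center[0] + window + 1):
        for dx in range(center[1] - window, center[1] + window + 1):
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))
            score = np.sum((shifted - reference) ** 2)
            if score < best_score:
                best_score, best = score, (dy, dx)
    return best

def align_pyramid(moving, reference, levels=6, window=20):
    """Estimate the (dy, dx) shift coarse-to-fine over `levels` pyramid levels."""
    moving = np.asarray(moving, dtype=np.float64)
    reference = np.asarray(reference, dtype=np.float64)
    if levels == 1:
        return search(moving, reference, (0, 0), window)
    coarse = align_pyramid(downsample2(moving), downsample2(reference),
                           levels - 1, window)
    # One pixel at the coarser level corresponds to two pixels at this level.
    return search(moving, reference, (2 * coarse[0], 2 * coarse[1]), window)

# A shift of 8 rows lies outside a +/-2 window, but the pyramid still reaches it.
rng = np.random.default_rng(0)
reference = rng.random((64, 64))
moving = np.roll(reference, (-8, 4), axis=(0, 1))
shift = align_pyramid(moving, reference, levels=3, window=2)
```

The toy example uses a deliberately small window to show that the reachable offset grows with the number of levels, since every level doubles the estimate from the level above it.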

Results on the Example Set of Images

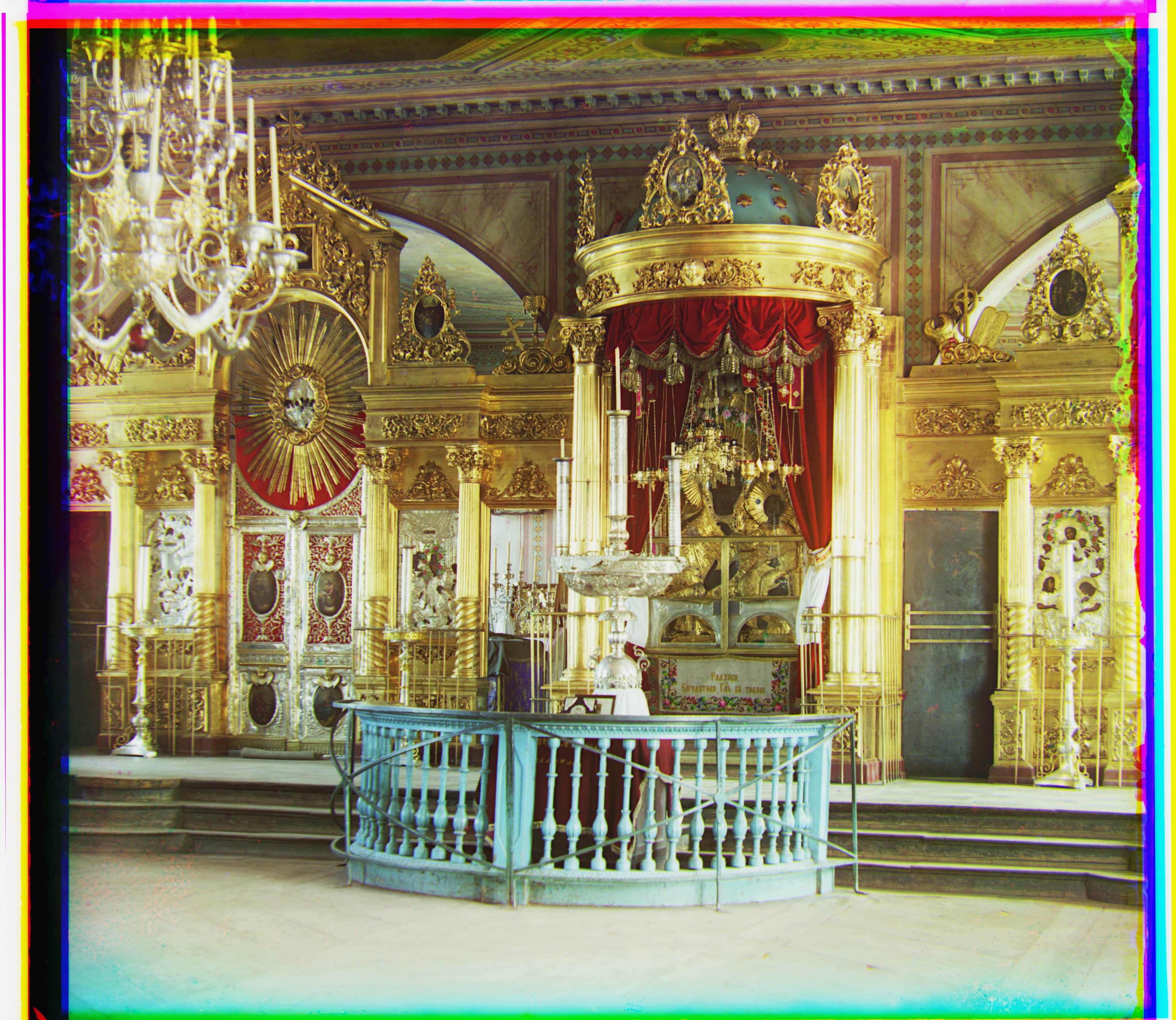

Here are the aligned images of the original set provided to us, along with the offset values for the R and G channels in [Width, Height] format. Also included are some more examples from the Prokudin-Gorskii Collection which I found to be beautiful in color.

Green: [2, 5] Red: [3, 12]

Green: [-3, 2] Red: [3, 2]

Green: [3, 3] Red: [3, 6]

Green: [4, 25] Red: [-4, 58]

Blue: [-24, -48] Red: [17, 57]

Interestingly, for the above image, the standard alignment to the B channel produced an incorrect result, presumably because aligning to B never compares the green channel directly against the red.

To fix this, instead of aligning R and G to B, we can use G as the base channel and align R and B to it. The above image is the result of that fix, which is why its offsets are reported for Blue and Red.
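One way to make the base channel selectable is sketched below. It is only an illustration: `best_shift` and `colorize` are hypothetical names, the `base` argument is an assumed interface, and shifts are (rows, columns) tuples applied with np.roll.

```python
import numpy as np

def best_shift(moving, reference, window=20):
    """Exhaustive SSD search for the (dy, dx) shift that best matches `reference`."""
    best_score, best = np.inf, (0, 0)
    for dy in range(-window, window + 1):
        for dx in range(-window, window + 1):
            score = np.sum((np.roll(moving, (dy, dx), axis=(0, 1)) - reference) ** 2)
            if score < best_score:
                best_score, best = score, (dy, dx)
    return best

def colorize(r, g, b, base="b", window=20):
    """Align the two non-base channels to `base` and stack them as R, G, B."""
    channels = {"r": np.asarray(r, dtype=np.float64),
                "g": np.asarray(g, dtype=np.float64),
                "b": np.asarray(b, dtype=np.float64)}
    reference = channels[base]
    shifts, aligned = {}, {}
    for name, chan in channels.items():
        # The base channel stays put; the others are shifted onto it.
        shifts[name] = (0, 0) if name == base else best_shift(chan, reference, window)
        aligned[name] = np.roll(chan, shifts[name], axis=(0, 1))
    return np.dstack([aligned["r"], aligned["g"], aligned["b"]]), shifts

# Synthetic example with G as the base: R and B are displaced copies of G.
rng = np.random.default_rng(1)
g = rng.random((30, 30))
r = np.roll(g, (-2, 1), axis=(0, 1))
b = np.roll(g, (3, -1), axis=(0, 1))
rgb, shifts = colorize(r, g, b, base="g", window=4)
```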

Green: [17, 59] Red: [15, 123]

Green: [18, 41] Red: [23, 90]

Green: [8, 54] Red: [12, 112]

Green: [10, 82] Red: [12, 180]

Green: [27, 50] Red: [37, 108]

Green: [29, 78] Red: [37, 175]

Green: [14, 50] Red: [12, 110]

Green: [6, 42] Red: [32, 86]

Green: [0, 53] Red: [-12, 106]

Green: [-11, 44] Red: [-26, 105]

Green: [5, 16] Red: [14, 49]

Green: [2, 40] Red: [9, 88]
