The goal of Project 2 is to build and test intuition around the applications of image filtering and frequency modification.

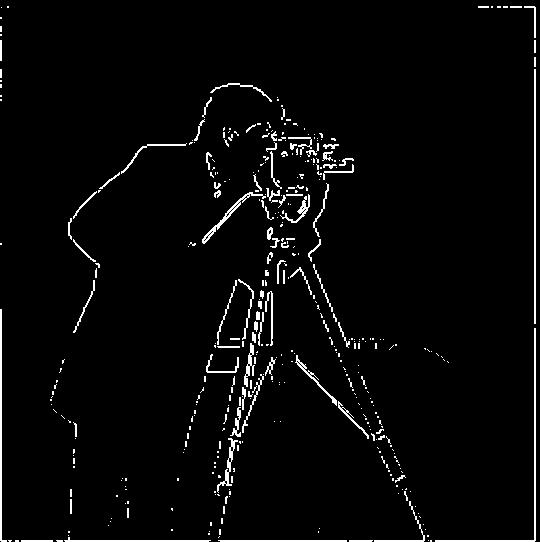

In this section, we were tasked with applying some simple techniques to the "Cameraman" image:

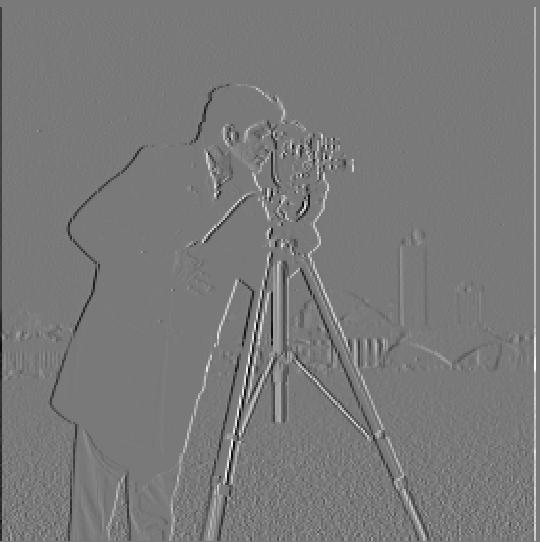

I explored using finite difference operators as image filters by computing the partial derivatives of this famous image with respect to x and y.

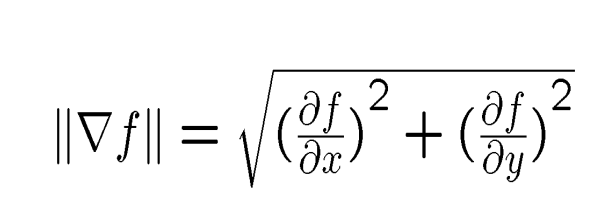

Next, the assignment called for computing and displaying the gradient magnitude. This required convolving the original image with the D_x and D_y finite difference operators and then combining the two partial derivatives by taking the square root of the sum of their squares. Finally for this section, we were asked to binarize the gradient magnitude image to create an edge image while suppressing as much noise as possible. This required choosing a threshold of 0.39, mapping pixels above it to 1 and all others to 0, so that the image array values stay between 0 and 1:

```python
threshold, upper, lower = 0.39, 1, 0
```
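A minimal sketch of the finite difference, gradient magnitude and thresholding steps, assuming NumPy, SciPy's `scipy.signal.convolve2d`, a grayscale float image scaled to [0, 1], and the simple forward-difference operators `D_x = [[1, -1]]` and `D_y` as its transpose. The function name `gradient_edges` and the synthetic step image are illustrative only; the 0.39 threshold is the value quoted above.

```python
import numpy as np
from scipy.signal import convolve2d

# Finite difference operators (assumed simple forward differences)
D_x = np.array([[1, -1]])    # row vector: horizontal difference
D_y = np.array([[1], [-1]])  # column vector: vertical difference


def gradient_edges(im, threshold=0.39, upper=1, lower=0):
    """Partial derivatives, gradient magnitude and binarized edge map of `im`.

    `im` is assumed to be a 2D grayscale float array scaled to [0, 1].
    """
    # mode="same" keeps the input size; boundary="symm" mirrors the border
    # so flat regions do not produce spurious edges along the frame
    dx = convolve2d(im, D_x, mode="same", boundary="symm")
    dy = convolve2d(im, D_y, mode="same", boundary="symm")
    grad_mag = np.sqrt(dx ** 2 + dy ** 2)
    # Pixels above the threshold become `upper` (edge), all others `lower`
    edges = np.where(grad_mag > threshold, upper, lower)
    return dx, dy, grad_mag, edges


# Usage on a synthetic step image: dark left half, bright right half
step = np.zeros((8, 8))
step[:, 4:] = 1.0
dx, dy, grad_mag, edges = gradient_edges(step)
```

On a real photograph, noise also produces small gradients, which is why the threshold has to be tuned by eye.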

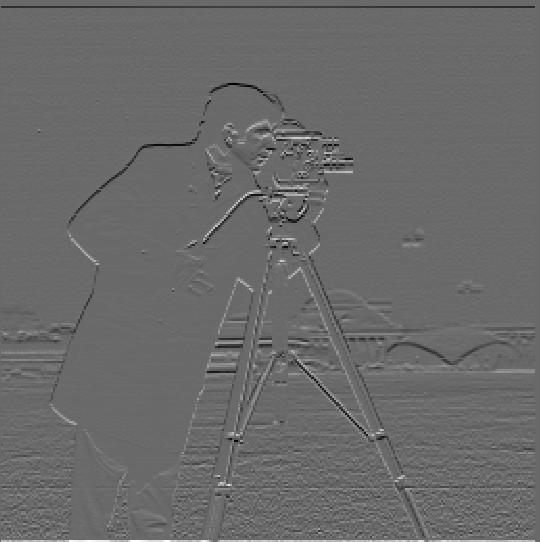

Because the binarized edge image was still quite noisy, the next step was to apply a smoothing filter: the Gaussian. We were tasked with creating a blurred version of the original image by convolving it with a Gaussian, then repeating the previous steps and noting the differences. In Python, this meant first creating a 1D Gaussian and then taking its outer product with its transpose to get a 2D Gaussian kernel.
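The outer-product construction can be sketched as follows, assuming NumPy and SciPy. The kernel size and sigma (`9`, `1.5`) are placeholders rather than the values used for the images here, and `blur` is a hypothetical helper name. If OpenCV is available, `cv2.getGaussianKernel(ksize, sigma)` returns an equivalent normalized column vector that can replace `gaussian_kernel_1d`.

```python
import numpy as np
from scipy.signal import convolve2d


def gaussian_kernel_1d(ksize, sigma):
    """Sampled 1D Gaussian of odd length `ksize`, normalized to sum to 1, as a column vector."""
    x = np.arange(ksize) - (ksize - 1) / 2  # offsets centered on 0
    g = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return (g / g.sum()).reshape(-1, 1)


def gaussian_kernel_2d(ksize, sigma):
    """2D Gaussian as the outer product of the 1D kernel with its transpose."""
    g = gaussian_kernel_1d(ksize, sigma)
    return g @ g.T  # (ksize, 1) @ (1, ksize) -> (ksize, ksize)


def blur(im, ksize=9, sigma=1.5):
    """Convolve a grayscale image with the 2D Gaussian (placeholder parameters)."""
    return convolve2d(im, gaussian_kernel_2d(ksize, sigma), mode="same", boundary="symm")


G = gaussian_kernel_2d(9, 1.5)
```

Because each 1D kernel sums to 1, the 2D kernel does too, so blurring preserves overall image brightness.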

After repeating the gradient magnitude and binarization steps on the blurred image, I noticed the following:

The final part of this section asked us to verify that we could compute and display the same result using derivative of Gaussian (DoG) filters. This requires convolving the Gaussian created above with the finite difference operators D_x and D_y, and then applying the following formula:

Below is the result:

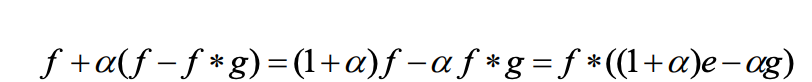
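Why the DoG route gives the same result can be checked numerically: convolution is associative, so blurring and then differentiating equals convolving once with a Gaussian that already has the derivative folded in. This sketch assumes NumPy and SciPy, uses full-mode convolution (where associativity holds exactly, borders included), and uses a random test image; the function names are hypothetical.

```python
import numpy as np
from scipy.signal import convolve2d


def gaussian_kernel_2d(ksize, sigma):
    x = np.arange(ksize) - (ksize - 1) / 2
    g = np.exp(-(x ** 2) / (2 * sigma ** 2))
    g = (g / g.sum()).reshape(-1, 1)
    return g @ g.T


D_x = np.array([[1, -1]])
D_y = np.array([[1], [-1]])


def blur_then_differentiate(im, G):
    """Two passes: blur the image, then apply the finite difference operators."""
    blurred = convolve2d(im, G)  # default mode="full" with zero fill
    return convolve2d(blurred, D_x), convolve2d(blurred, D_y)


def derivative_of_gaussian(im, G):
    """Fold D_x and D_y into the Gaussian first, then convolve the image once per direction."""
    dog_x = convolve2d(G, D_x)
    dog_y = convolve2d(G, D_y)
    return convolve2d(im, dog_x), convolve2d(im, dog_y)


rng = np.random.default_rng(0)
im = rng.random((32, 32))
G = gaussian_kernel_2d(7, 1.0)
two_pass = blur_then_differentiate(im, G)
one_pass = derivative_of_gaussian(im, G)
```

The gradient magnitude then follows from the x and y components exactly as before. The DoG filters are small, so the one-pass version does less work on large images.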

In this section we were tasked with deriving the unsharp masking technique. Subtracting a blurred version of an image from the original leaves only its high frequencies; adding a scaled copy of those high frequencies back to the original produces a "sharpened" image.

The more detailed technical procedure, executed in Python, consisted of:

Because convolution is linear, this can be done in a single convolution with the unsharp mask filter, defined as:

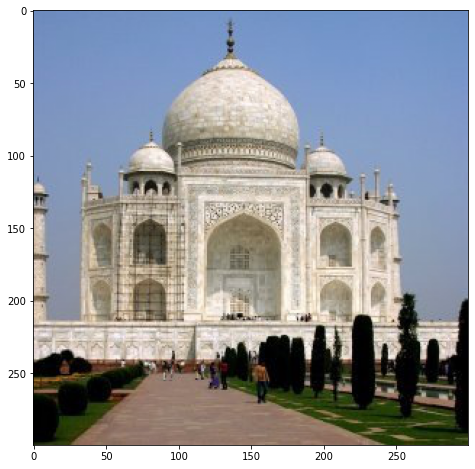
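Assuming the standard formulation, with $f$ the image, $g$ the Gaussian, $\alpha$ the sharpening strength and $e$ the unit impulse (the identity kernel, so $f * e = f$), the single-filter form follows from linearity of convolution:

$$
\begin{aligned}
f_{\text{sharp}} &= f + \alpha\,(f - f * g) \\
&= (1 + \alpha)\,(f * e) - \alpha\,(f * g) \\
&= f * \big((1 + \alpha)\,e - \alpha\,g\big)
\end{aligned}
$$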

For the example image, the steps are shown below:

Left: original; right: "sharpened" image

Next, we were asked to apply the methodology to our own images. I chose an owl image from the lecture slides. Below is the result of the image pipeline:

Some observations from both images include:

- The sharpening definitely brings out additional detail in each processed image, but at a cost: there is clear distortion and loss of image signal in the "sharpened" images.
- Choosing the alpha parameter for the single-convolution step is non-trivial and was difficult to automate.
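A minimal sketch of unsharp masking both ways, assuming NumPy, SciPy, an odd kernel size and a grayscale image in [0, 1]. The parameter defaults (`ksize=9`, `sigma=1.5`, `alpha=1.0`) are placeholders, not the values used above, and the function names are hypothetical. `sharpen` builds the single kernel $(1 + \alpha)e - \alpha g$; `sharpen_two_step` isolates the high frequencies explicitly and adds them back.

```python
import numpy as np
from scipy.signal import convolve2d


def gaussian_kernel_2d(ksize, sigma):
    x = np.arange(ksize) - (ksize - 1) / 2
    g = np.exp(-(x ** 2) / (2 * sigma ** 2))
    g = (g / g.sum()).reshape(-1, 1)
    return g @ g.T


def unsharp_mask_filter(ksize, sigma, alpha):
    """Single kernel (1 + alpha) * e - alpha * g, with e the unit impulse."""
    g = gaussian_kernel_2d(ksize, sigma)
    e = np.zeros_like(g)
    e[ksize // 2, ksize // 2] = 1.0  # identity kernel (odd ksize assumed)
    return (1 + alpha) * e - alpha * g


def sharpen(im, ksize=9, sigma=1.5, alpha=1.0):
    """One convolution with the unsharp mask filter."""
    out = convolve2d(im, unsharp_mask_filter(ksize, sigma, alpha), mode="same", boundary="symm")
    return np.clip(out, 0.0, 1.0)  # sharpening can overshoot [0, 1]


def sharpen_two_step(im, ksize=9, sigma=1.5, alpha=1.0):
    """Same result in two steps: isolate the high frequencies, then add them back."""
    blurred = convolve2d(im, gaussian_kernel_2d(ksize, sigma), mode="same", boundary="symm")
    high = im - blurred
    return np.clip(im + alpha * high, 0.0, 1.0)


rng = np.random.default_rng(1)
im = rng.random((24, 24))
```

The clipping step is one place where large alpha values visibly lose signal, since any overshoot past 0 or 1 is discarded.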

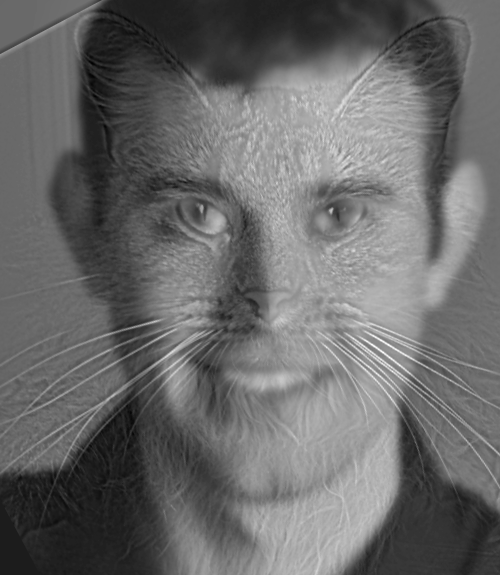

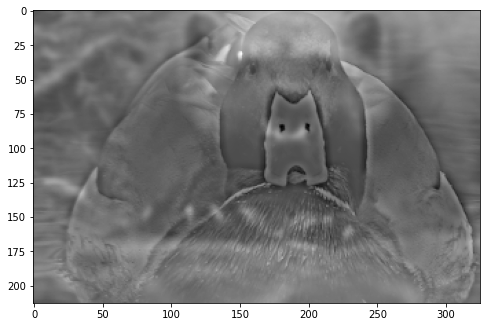

This section focused on creating hybrid images, which exploit the way human vision perceives frequencies: high frequencies dominate when they are visible (when the viewer is close to the image), while only the low frequencies remain perceptible from farther away. Blending the high-frequency portion of one image with the low-frequency portion of another produces an image that looks different at different viewing distances: a "hybrid".
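One way to sketch the hybrid construction, assuming NumPy, SciPy, and two already-aligned grayscale images of the same shape in [0, 1]. The cutoff sigmas are free parameters that would need tuning per image pair, the roughly 3-sigma kernel radius is an assumed rule of thumb, and the function names are hypothetical; this is not necessarily the exact pipeline used for the results below.

```python
import numpy as np
from scipy.signal import convolve2d


def gaussian_blur(im, sigma):
    """Gaussian low-pass; a kernel radius of about 3 sigma is an assumed rule of thumb."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    g = np.exp(-(x ** 2) / (2 * sigma ** 2))
    g = (g / g.sum()).reshape(-1, 1)
    return convolve2d(im, g @ g.T, mode="same", boundary="symm")


def hybrid_image(im_far, im_near, sigma_low, sigma_high):
    """Low frequencies of `im_far` plus high frequencies of `im_near`.

    Both inputs are assumed to be aligned, same-shape grayscale arrays in [0, 1].
    """
    low = gaussian_blur(im_far, sigma_low)                # what survives at a distance
    high = im_near - gaussian_blur(im_near, sigma_high)  # fine detail seen up close
    return np.clip(low + high, 0.0, 1.0)


rng = np.random.default_rng(2)
far, near = rng.random((40, 40)), rng.random((40, 40))
hybrid = hybrid_image(far, near, sigma_low=4.0, sigma_high=2.0)
```

A larger `sigma_low` keeps less detail from the far image, and a smaller `sigma_high` keeps only the finest detail from the near image.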

The steps to achieve such an image in my solution are as follows:

The input images are:

The results of the process on the given images, plus a couple of my own choosing, are below:

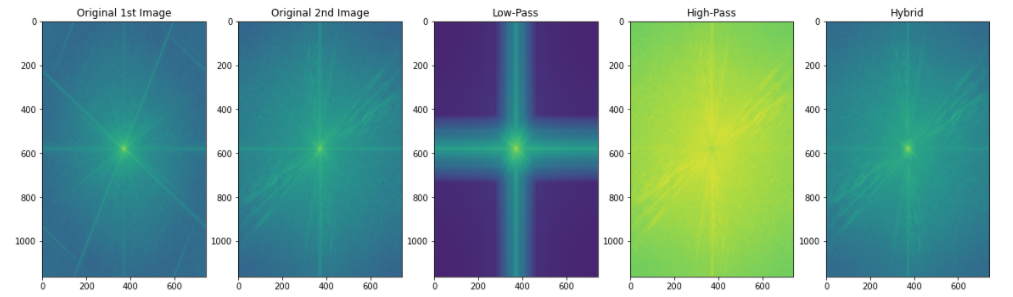

The log magnitudes of the Fourier transforms of the first original image, the second original image, the low-pass and high-pass filtered images, and the hybrid image are below:
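A log-magnitude spectrum like these can be computed with NumPy alone, assuming a 2D grayscale array as input. The small `eps` offset is an assumption added here so that zero-valued coefficients do not produce `log(0)`; the function name is hypothetical.

```python
import numpy as np


def log_magnitude_spectrum(gray, eps=1e-8):
    """Centered log-magnitude of the 2D DFT of a grayscale image."""
    F = np.fft.fftshift(np.fft.fft2(gray))  # move the zero-frequency (DC) term to the center
    return np.log(np.abs(F) + eps)


spectrum = log_magnitude_spectrum(np.ones((8, 8)))
```

After `fftshift`, low frequencies sit near the center and high frequencies near the edges, so a low-pass result should look dark away from the center while a high-pass result keeps energy toward the borders.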

This section consisted of two high-level steps:

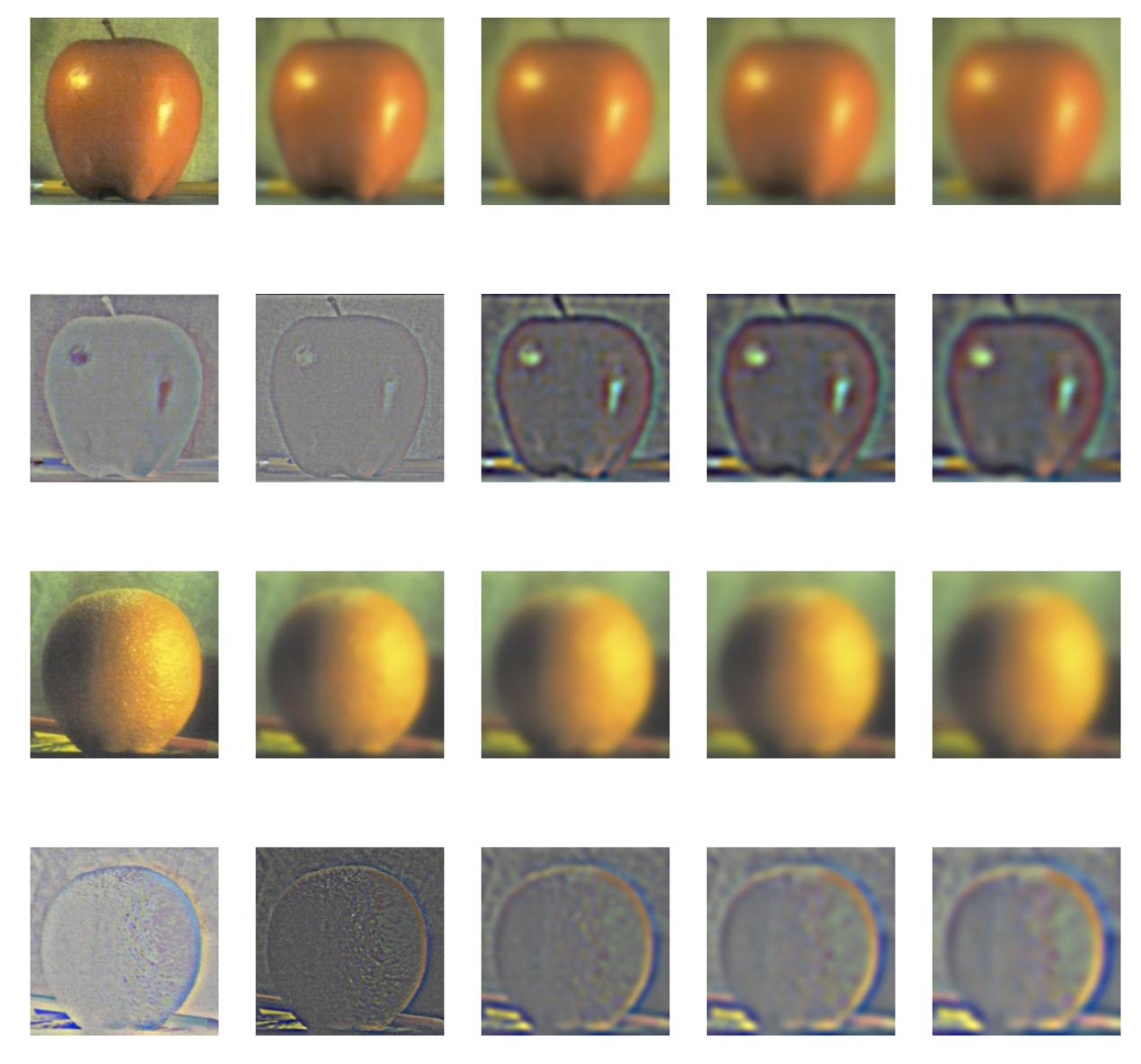

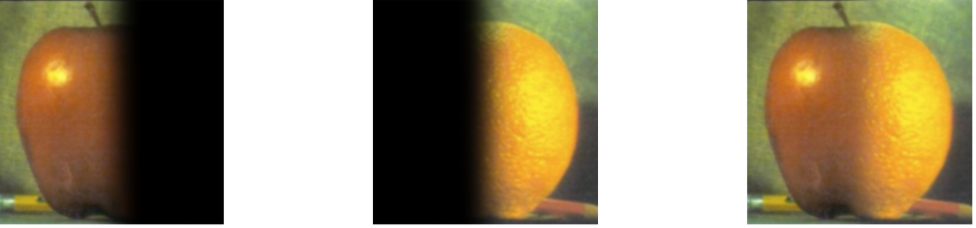

I first tackled this problem set using the given apple and orange images.

I then created the Gaussian and Laplacian stacks of both images, as you can see here:
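A minimal sketch of Gaussian and Laplacian stacks, assuming NumPy, SciPy and a grayscale image. Unlike a pyramid, no level is downsampled, so every level keeps the original resolution. Re-blurring each level with the same sigma is an assumption (blurring the original with increasing sigmas is a common alternative), and the names and parameters are hypothetical.

```python
import numpy as np
from scipy.signal import convolve2d


def gaussian_blur(im, sigma):
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    g = np.exp(-(x ** 2) / (2 * sigma ** 2))
    g = (g / g.sum()).reshape(-1, 1)
    return convolve2d(im, g @ g.T, mode="same", boundary="symm")


def gaussian_stack(im, levels, sigma):
    """Each level is the previous one blurred again; no downsampling."""
    stack = [im]
    for _ in range(levels - 1):
        stack.append(gaussian_blur(stack[-1], sigma))
    return stack


def laplacian_stack(im, levels, sigma):
    """Band-pass levels G[i] - G[i + 1]; the last level keeps the low-pass residual G[-1]."""
    g = gaussian_stack(im, levels, sigma)
    lap = [g[i] - g[i + 1] for i in range(levels - 1)]
    lap.append(g[-1])
    return lap


rng = np.random.default_rng(3)
im = rng.random((32, 32))
lap = laplacian_stack(im, levels=5, sigma=2.0)
```

Keeping the residual as the last level means the Laplacian stack sums back to the original image, which is what makes blending level by level possible.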

Using input from the next section, we can re-create the figure from the textbook:

Using the same two source images from above, we proceed to blend them together. However, because we are using stacks rather than pyramids, we need to implement the following process:

This is executed by:

1. Define a mask and apply it to both images (in this case, simply one half of the image).
2. Build Laplacian stacks for both images.
3. Build a Gaussian stack for the masked region.
4. Create a combined stack, using the Gaussian stack of the mask as per-level weights.
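The blending steps can be sketched as follows, assuming NumPy, SciPy, aligned same-shape grayscale images in [0, 1], and a mask that is 1 where the first image should show. The final collapse (summing the combined levels) is the standard way to reconstruct from a Laplacian stack. `half_mask`, `blend` and the parameter values are hypothetical, not the exact code behind the Oraple.

```python
import numpy as np
from scipy.signal import convolve2d


def gaussian_blur(im, sigma):
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    g = np.exp(-(x ** 2) / (2 * sigma ** 2))
    g = (g / g.sum()).reshape(-1, 1)
    return convolve2d(im, g @ g.T, mode="same", boundary="symm")


def gaussian_stack(im, levels, sigma):
    stack = [im]
    for _ in range(levels - 1):
        stack.append(gaussian_blur(stack[-1], sigma))
    return stack


def laplacian_stack(im, levels, sigma):
    g = gaussian_stack(im, levels, sigma)
    return [g[i] - g[i + 1] for i in range(levels - 1)] + [g[-1]]


def half_mask(shape):
    """Hypothetical helper: 1 on the left half, 0 on the right half."""
    mask = np.zeros(shape)
    mask[:, : shape[1] // 2] = 1.0
    return mask


def blend(im1, im2, mask, levels=5, sigma=2.0):
    """Per level: mask weights im1, (1 - mask) weights im2."""
    l1 = laplacian_stack(im1, levels, sigma)
    l2 = laplacian_stack(im2, levels, sigma)
    gm = gaussian_stack(mask, levels, sigma)  # progressively softer seam
    combined = [m * a + (1 - m) * b for a, b, m in zip(l1, l2, gm)]
    # Collapsing a Laplacian stack is just summing its levels
    return np.clip(sum(combined), 0.0, 1.0)


dark, bright = np.zeros((40, 40)), np.ones((40, 40))
out = blend(dark, bright, half_mask((40, 40)))
```

Because the mask is blurred more at coarser levels, low frequencies mix over a wide band around the seam while fine detail switches over sharply, which is what hides the seam.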

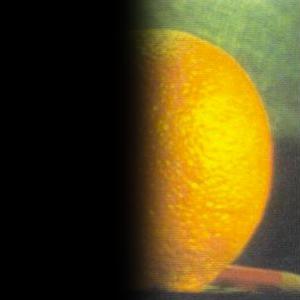

The result is a smooth blend of both fruits: the Oraple!