Author: Sunny Shen

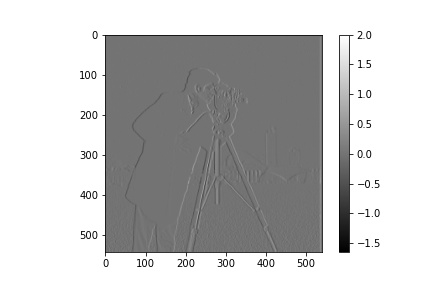

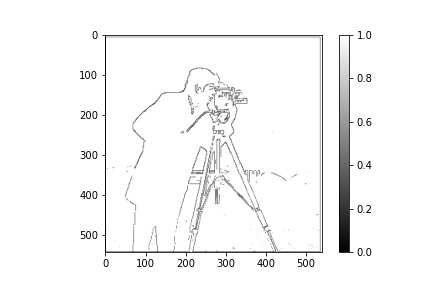

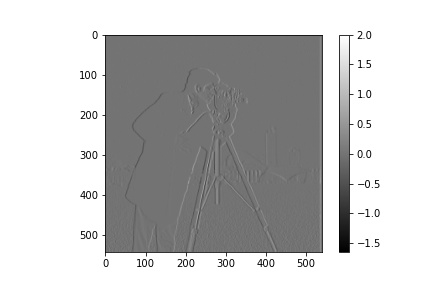

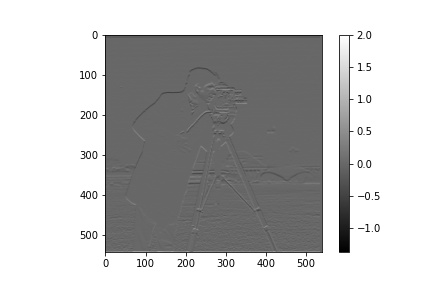

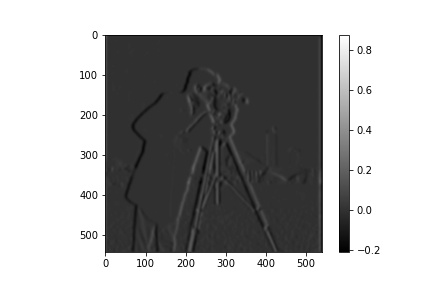

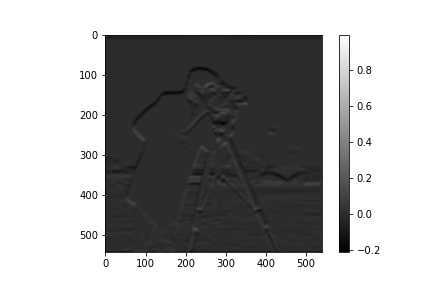

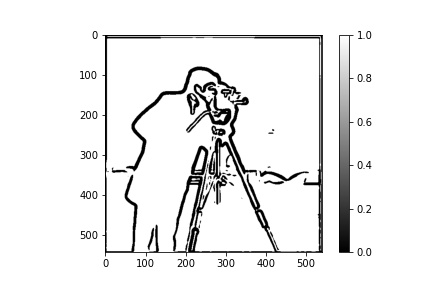

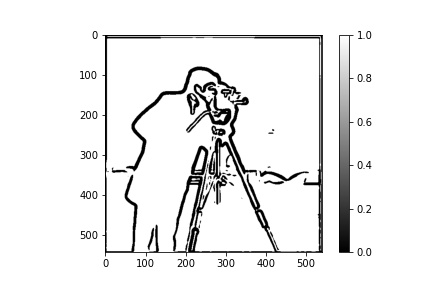

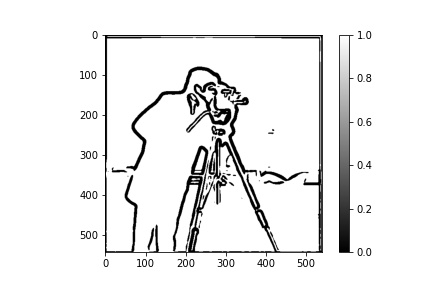

Edge detection is an important aspect of image processing. Edges can usually be found where color or brightness changes drastically. To get the partial derivatives of an image, I convolved it with the derivative (finite difference) filters D_x = [1, -1] and D_y = [[1], [-1]]. The resulting partial derivatives show how drastically the image changes horizontally and vertically. To get a sense of how fast it's changing overall, I computed the gradient magnitude by taking the square root of the sum of the squared partial derivatives.
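A minimal NumPy sketch of this step, assuming a grayscale float image. `conv2d_same` is a small hypothetical helper playing the role of `scipy.signal.convolve2d(..., mode="same")`. It mirrors the border instead of zero padding, so the image boundary does not show up as a fake edge.

```python
import numpy as np


def conv2d_same(img, kernel):
    """2D convolution whose output matches img's shape; borders are mirrored."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((ph, kh - 1 - ph), (pw, kw - 1 - pw)), mode="symmetric")
    flipped = kernel[::-1, ::-1]  # convolution = correlation with a flipped kernel
    out = np.zeros(img.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


# Finite difference operators from the text
D_x = np.array([[1.0, -1.0]])
D_y = np.array([[1.0], [-1.0]])


def gradient_magnitude(img):
    """Square root of the sum of the squared partial derivatives."""
    dx = conv2d_same(img, D_x)  # horizontal change
    dy = conv2d_same(img, D_y)  # vertical change
    return np.sqrt(dx**2 + dy**2)


# Usage: a vertical step edge between columns 2 and 3
img = np.zeros((5, 6))
img[:, 3:] = 1.0
mag = gradient_magnitude(img)  # 1.0 along column 3, 0.0 everywhere else
```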

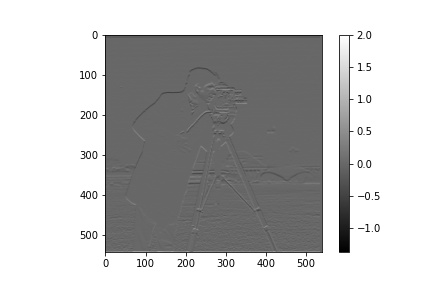

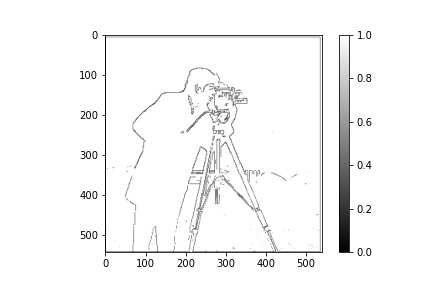

The result in 1.1 is pretty noisy, and the edges aren't as clear as we would like them to be. Therefore, we can first blur the image with a Gaussian filter and then calculate the gradient magnitude to find edges. The edges are a lot more distinct now: less noisy, with thicker boundaries because of the blur.
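A sketch of "blur first, then take the gradient magnitude" on a synthetic noisy step edge. The kernel size (9) and sigma (1.5) are illustrative assumptions, not the values behind the results above, and the NumPy-only helpers stand in for a library convolution.

```python
import numpy as np


def conv2d_same(img, kernel):
    """2D convolution whose output matches img's shape; borders are mirrored."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((ph, kh - 1 - ph), (pw, kw - 1 - pw)), mode="symmetric")
    flipped = kernel[::-1, ::-1]  # convolution = correlation with a flipped kernel
    out = np.zeros(img.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


def gaussian_kernel(ksize, sigma):
    """ksize x ksize Gaussian (outer product of a 1D Gaussian), summing to 1."""
    ax = np.arange(ksize) - (ksize - 1) / 2
    g = np.exp(-ax**2 / (2 * sigma**2))
    g /= g.sum()
    return np.outer(g, g)


D_x = np.array([[1.0, -1.0]])
D_y = np.array([[1.0], [-1.0]])


def gradient_magnitude(img):
    return np.sqrt(conv2d_same(img, D_x) ** 2 + conv2d_same(img, D_y) ** 2)


def blurred_gradient_magnitude(img, ksize=9, sigma=1.5):
    """Blur with a Gaussian first, then take the gradient magnitude."""
    return gradient_magnitude(conv2d_same(img, gaussian_kernel(ksize, sigma)))


# Usage: a noisy vertical step edge at column 16
rng = np.random.default_rng(0)
img = np.zeros((32, 32))
img[:, 16:] = 1.0
noisy = img + 0.1 * rng.standard_normal(img.shape)

raw = gradient_magnitude(noisy)
smooth = blurred_gradient_magnitude(noisy)
flat = (slice(4, 28), slice(2, 10))  # a region away from the edge: ideally all zero
print(raw[flat].mean(), smooth[flat].mean())  # the blurred version is far less noisy
```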

Convolution has a really nice property: it's associative! Therefore, we can first take the derivative of the Gaussian filter (convolve the Gaussian with D_x and D_y) and then convolve the images with these derivative of Gaussian filters, so that we only convolve each image once instead of twice. We get the same result.

Note: There are some tiny differences in details because of rounding errors when we calculate the derivatives in two different ways.
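A sketch that checks the associativity argument numerically on a random image, assuming a 9x9 Gaussian with sigma 1.5 (illustrative values). The derivative of Gaussian filters are built once with a full (zero-padded) convolution; the helpers are hypothetical NumPy-only stand-ins for a library convolution. In this sketch the two pipelines agree to floating-point precision everywhere except the first column (for x) and first row (for y), where they handle the image border differently.

```python
import numpy as np


def conv2d_same(img, kernel):
    """2D convolution whose output matches img's shape; borders are mirrored."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((ph, kh - 1 - ph), (pw, kw - 1 - pw)), mode="symmetric")
    flipped = kernel[::-1, ::-1]  # convolution = correlation with a flipped kernel
    out = np.zeros(img.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


def conv2d_full(a, kernel):
    """Full 2D convolution with zero padding; used here to combine two filters."""
    kh, kw = kernel.shape
    H, W = a.shape
    padded = np.pad(a, ((kh - 1, kh - 1), (kw - 1, kw - 1)))
    flipped = kernel[::-1, ::-1]
    out = np.zeros((H + kh - 1, W + kw - 1))
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + H + kh - 1, j:j + W + kw - 1]
    return out


def gaussian_kernel(ksize, sigma):
    """ksize x ksize Gaussian (outer product of a 1D Gaussian), summing to 1."""
    ax = np.arange(ksize) - (ksize - 1) / 2
    g = np.exp(-ax**2 / (2 * sigma**2))
    g /= g.sum()
    return np.outer(g, g)


D_x = np.array([[1.0, -1.0]])
D_y = np.array([[1.0], [-1.0]])

G = gaussian_kernel(9, 1.5)
DoG_x = conv2d_full(G, D_x)  # derivative of Gaussian filters, built once
DoG_y = conv2d_full(G, D_y)

rng = np.random.default_rng(0)
img = rng.random((40, 40))

# Two convolutions per direction: blur first, then differentiate
blurred = conv2d_same(img, G)
dx_two, dy_two = conv2d_same(blurred, D_x), conv2d_same(blurred, D_y)

# One convolution per direction with the DoG filters
dx_one, dy_one = conv2d_same(img, DoG_x), conv2d_same(img, DoG_y)

print(np.abs(dx_two[:, 1:] - dx_one[:, 1:]).max())  # on the order of 1e-16
```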

In the last section, we saw how a Gaussian filter can blur images. Gaussian filters are low-pass filters that only retain low frequencies. If we subtract the low frequencies from the original image, we are left with the high frequencies, i.e. the "sharp" part of the image. Therefore, we can add the high frequencies back to the original image to "sharpen" it. There are two ways to implement this:

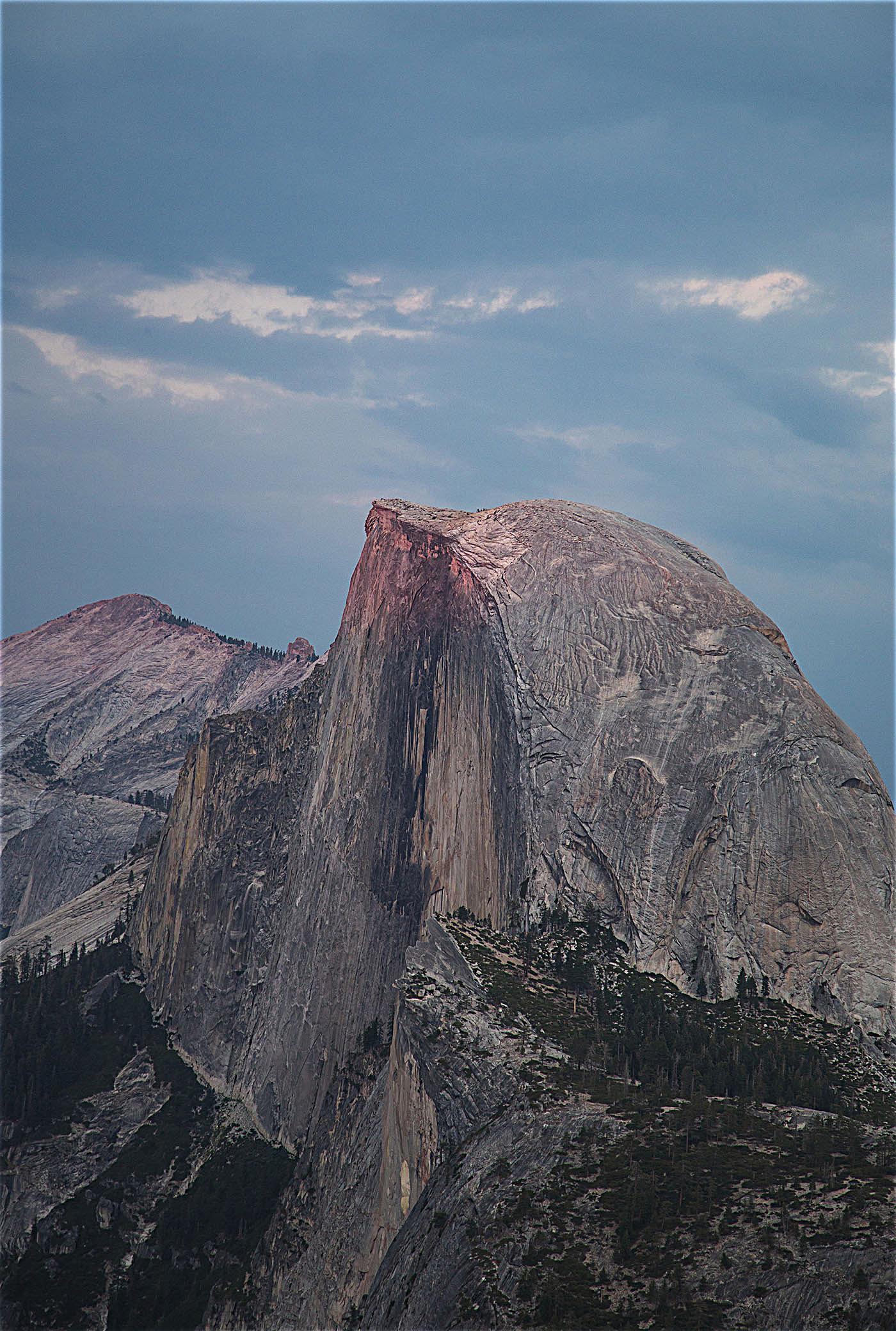

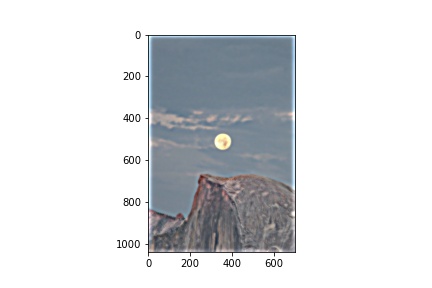
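A sketch of one common way to write both versions of this unsharp mask idea, assuming a grayscale float image in [0, 1] and an illustrative 9x9 Gaussian with sigma 1.5: (1) blur, subtract, and add `alpha` times the high frequencies back; (2) fold everything into a single filter `(1 + alpha) * e - alpha * g`, where `e` is the unit impulse. The function names and parameters are hypothetical.

```python
import numpy as np


def conv2d_same(img, kernel):
    """2D convolution whose output matches img's shape; borders are mirrored."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((ph, kh - 1 - ph), (pw, kw - 1 - pw)), mode="symmetric")
    flipped = kernel[::-1, ::-1]  # convolution = correlation with a flipped kernel
    out = np.zeros(img.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


def gaussian_kernel(ksize, sigma):
    """ksize x ksize Gaussian (outer product of a 1D Gaussian), summing to 1."""
    ax = np.arange(ksize) - (ksize - 1) / 2
    g = np.exp(-ax**2 / (2 * sigma**2))
    g /= g.sum()
    return np.outer(g, g)


def sharpen_two_step(img, alpha, ksize=9, sigma=1.5):
    """img + alpha * (img - blur(img)): add the high frequencies back."""
    high = img - conv2d_same(img, gaussian_kernel(ksize, sigma))
    return img + alpha * high


def unsharp_mask_kernel(alpha, ksize=9, sigma=1.5):
    """Single filter (1 + alpha) * e - alpha * g, with e the unit impulse."""
    g = gaussian_kernel(ksize, sigma)
    e = np.zeros_like(g)
    e[ksize // 2, ksize // 2] = 1.0
    return (1 + alpha) * e - alpha * g


def sharpen_one_step(img, alpha, ksize=9, sigma=1.5):
    return conv2d_same(img, unsharp_mask_kernel(alpha, ksize, sigma))


rng = np.random.default_rng(0)
img = rng.random((32, 32))
a = sharpen_two_step(img, alpha=1.0)
b = sharpen_one_step(img, alpha=1.0)
display = np.clip(b, 0.0, 1.0)  # sharpening can overshoot [0, 1], so clip for display
```

Because convolution is linear, both versions give the same result up to floating point; the single-filter version just does one convolution per image.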

Some of my fav photos I took over the summer!

Half Dome

Mt Tam

Lassen Volcanic Park

Blur an Image and Sharpen It Again

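Observations: After blurring an image and re-sharpening it, the re-sharpened image, while sharper than the blurred one, looks more pixelated than the original and has lost some details. This makes sense: blurring removes high frequencies, and sharpening can only amplify the high frequencies that are left, not bring back the ones that were removed.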

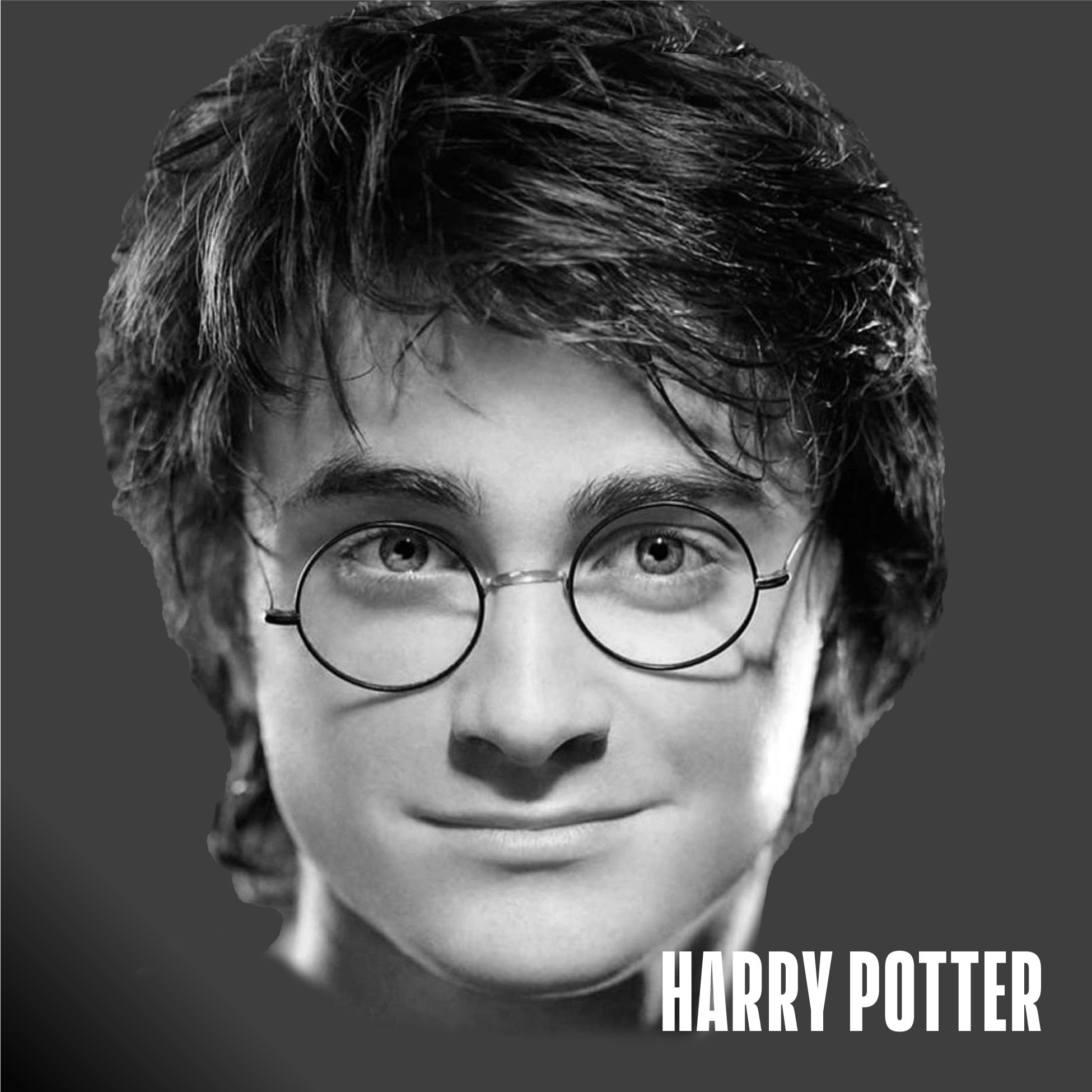

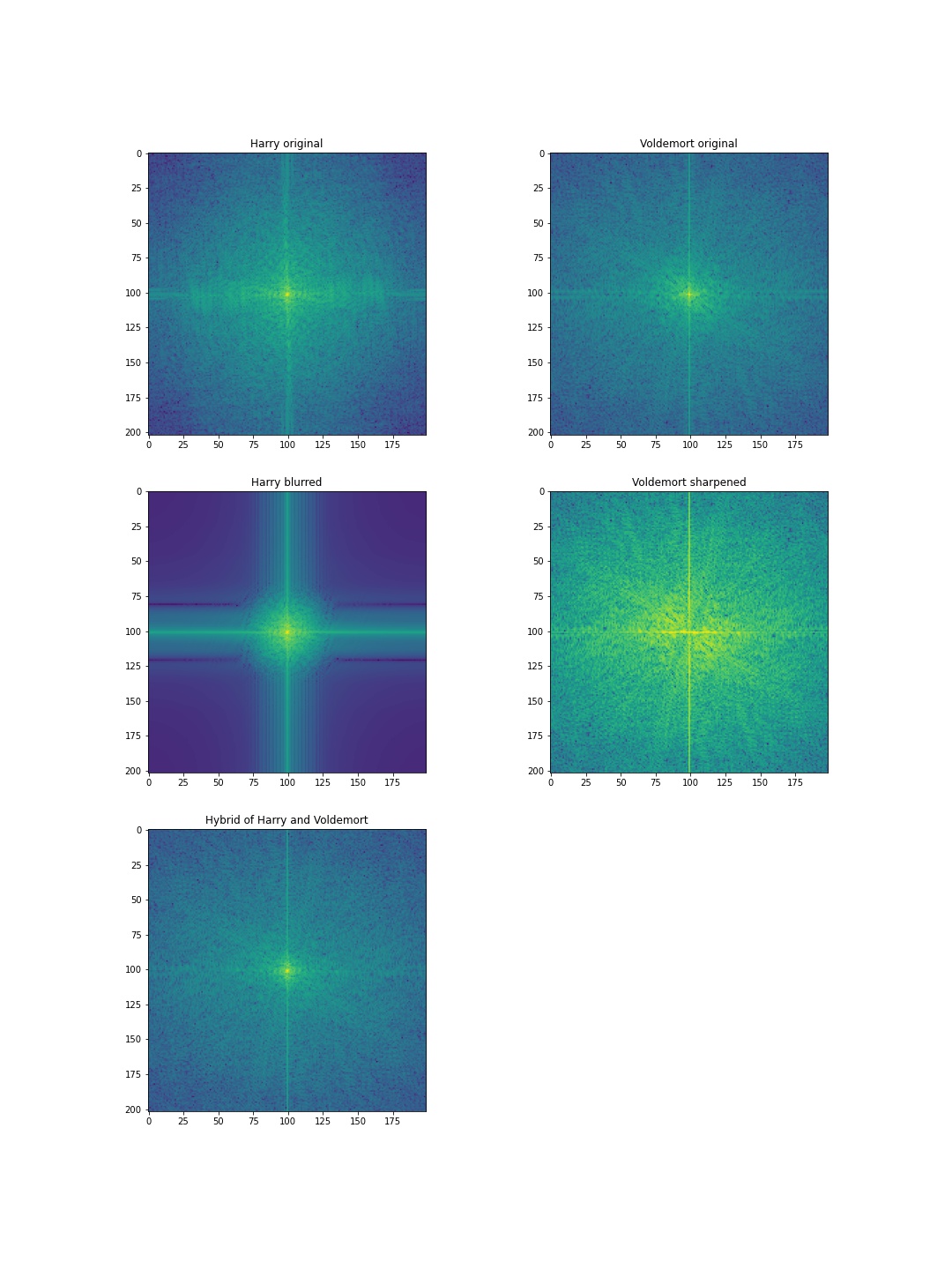

In their SIGGRAPH 2006 paper, Oliva, Torralba, and Schyns presented hybrid images that look different when viewed at different distances. High frequencies tend to dominate our perception at a closer distance, while lower frequencies dominate when the image is further away. The idea is to align two images, keep the high frequencies of one and the low frequencies of the other, and add them together to create this cool visual effect.
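A minimal sketch of the hybrid construction, assuming the two grayscale images are already aligned and the same size. The cutoff sigmas (6 for the low-pass, 3 for the high-pass) are hypothetical defaults; in practice they are tuned per image pair. The high-pass is taken as the image minus its Gaussian blur.

```python
import numpy as np


def conv2d_same(img, kernel):
    """2D convolution whose output matches img's shape; borders are mirrored."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((ph, kh - 1 - ph), (pw, kw - 1 - pw)), mode="symmetric")
    flipped = kernel[::-1, ::-1]  # convolution = correlation with a flipped kernel
    out = np.zeros(img.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


def gaussian_kernel(ksize, sigma):
    """ksize x ksize Gaussian (outer product of a 1D Gaussian), summing to 1."""
    ax = np.arange(ksize) - (ksize - 1) / 2
    g = np.exp(-ax**2 / (2 * sigma**2))
    g /= g.sum()
    return np.outer(g, g)


def low_pass(img, sigma):
    ksize = int(6 * sigma) | 1  # odd kernel size covering roughly +/- 3 sigma
    return conv2d_same(img, gaussian_kernel(ksize, sigma))


def high_pass(img, sigma):
    return img - low_pass(img, sigma)


def hybrid_image(im_far, im_near, sigma_low=6.0, sigma_high=3.0):
    """Low frequencies of im_far (seen from afar) + high frequencies of im_near."""
    return low_pass(im_far, sigma_low) + high_pass(im_near, sigma_high)


# Usage: a flat image has no high frequencies, so only the far image's level survives
far = np.full((64, 64), 0.5)
near = np.full((64, 64), 0.3)
hybrid = hybrid_image(far, near)
```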

Nutmeg or Derek?

Here are a few creations of my own:

Harry Potter or Voldemort?

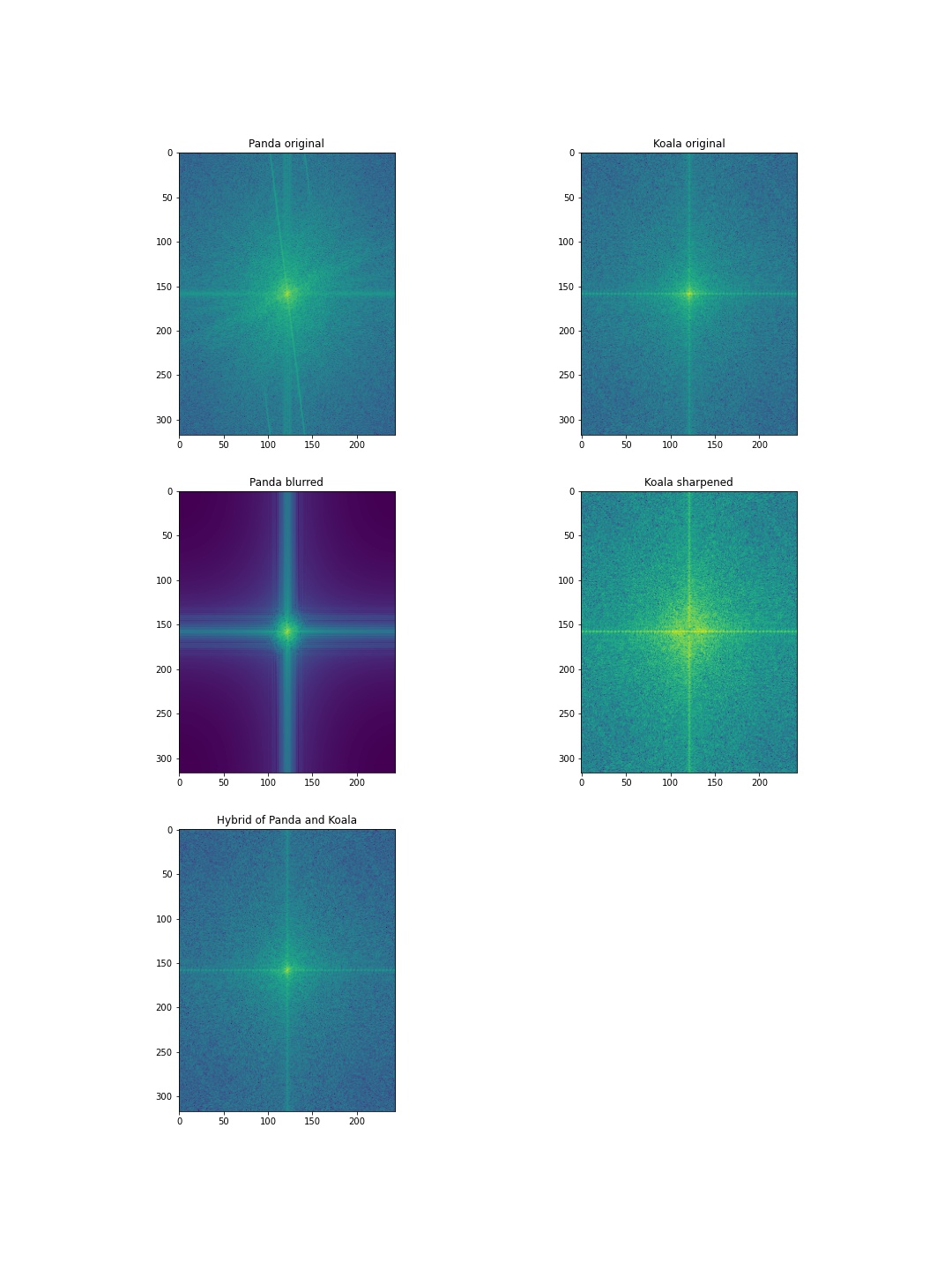

Panda or Koala?

Oski or Stanford tree? - failure case

In this case, Oski is the high-frequency image and the Stanford tree is the low-frequency one. However, because the Stanfurd tree has very bright teeth with very distinct edges, they are very obvious and dominate the perception of Oski's mouth even at a close distance.

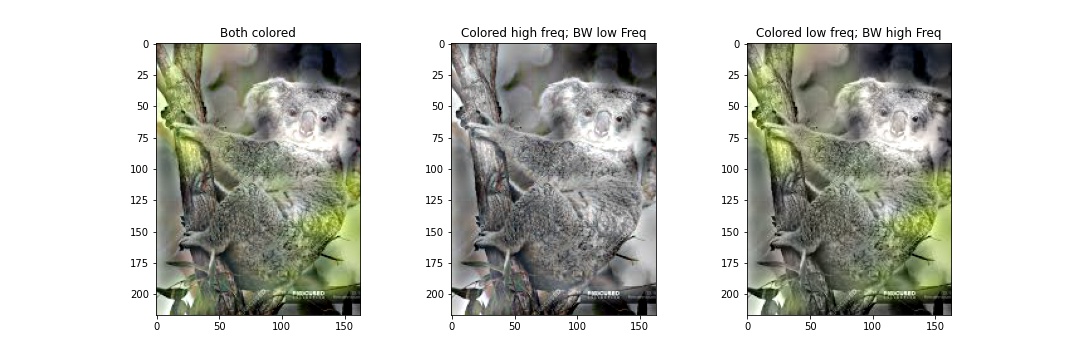

Bells & Whistles - colored hybrid images

For Panda vs. Koala, I tried using color for both the low- and high-frequency components, as well as for only the low- or only the high-frequency component, and compared the effects. I didn't notice any significant differences, probably because pandas are black and white and koalas are mostly grey.
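A sketch of how the color variants could be set up, assuming aligned H x W x 3 float images: each component is filtered channel by channel, and a component that should stay uncolored is converted to gray first. The gray conversion here is a plain channel average (an assumption, not luminance weighting), and `color_hybrid` and its flags are hypothetical names.

```python
import numpy as np


def conv2d_same(img, kernel):
    """2D convolution whose output matches img's shape; borders are mirrored."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((ph, kh - 1 - ph), (pw, kw - 1 - pw)), mode="symmetric")
    flipped = kernel[::-1, ::-1]  # convolution = correlation with a flipped kernel
    out = np.zeros(img.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


def gaussian_kernel(ksize, sigma):
    """ksize x ksize Gaussian (outer product of a 1D Gaussian), summing to 1."""
    ax = np.arange(ksize) - (ksize - 1) / 2
    g = np.exp(-ax**2 / (2 * sigma**2))
    g /= g.sum()
    return np.outer(g, g)


def low_pass(img, sigma):
    ksize = int(6 * sigma) | 1  # odd kernel size covering roughly +/- 3 sigma
    return conv2d_same(img, gaussian_kernel(ksize, sigma))


def to_gray3(img):
    """Average the channels and repeat, so a gray image still has 3 channels."""
    return np.repeat(img.mean(axis=2, keepdims=True), 3, axis=2)


def per_channel(img, fn):
    """Apply a 2D filter to R, G, B separately."""
    return np.stack([fn(img[..., c]) for c in range(img.shape[2])], axis=2)


def color_hybrid(im_far, im_near, color_low=True, color_high=True,
                 sigma_low=6.0, sigma_high=3.0):
    if not color_low:
        im_far = to_gray3(im_far)
    if not color_high:
        im_near = to_gray3(im_near)
    low = per_channel(im_far, lambda ch: low_pass(ch, sigma_low))
    high = per_channel(im_near, lambda ch: ch - low_pass(ch, sigma_high))
    return low + high


rng = np.random.default_rng(0)
far, near = rng.random((40, 40, 3)), rng.random((40, 40, 3))
both_gray = color_hybrid(far, near, color_low=False, color_high=False,
                         sigma_low=2.0, sigma_high=1.0)
both_color = color_hybrid(far, near, sigma_low=2.0, sigma_high=1.0)
```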

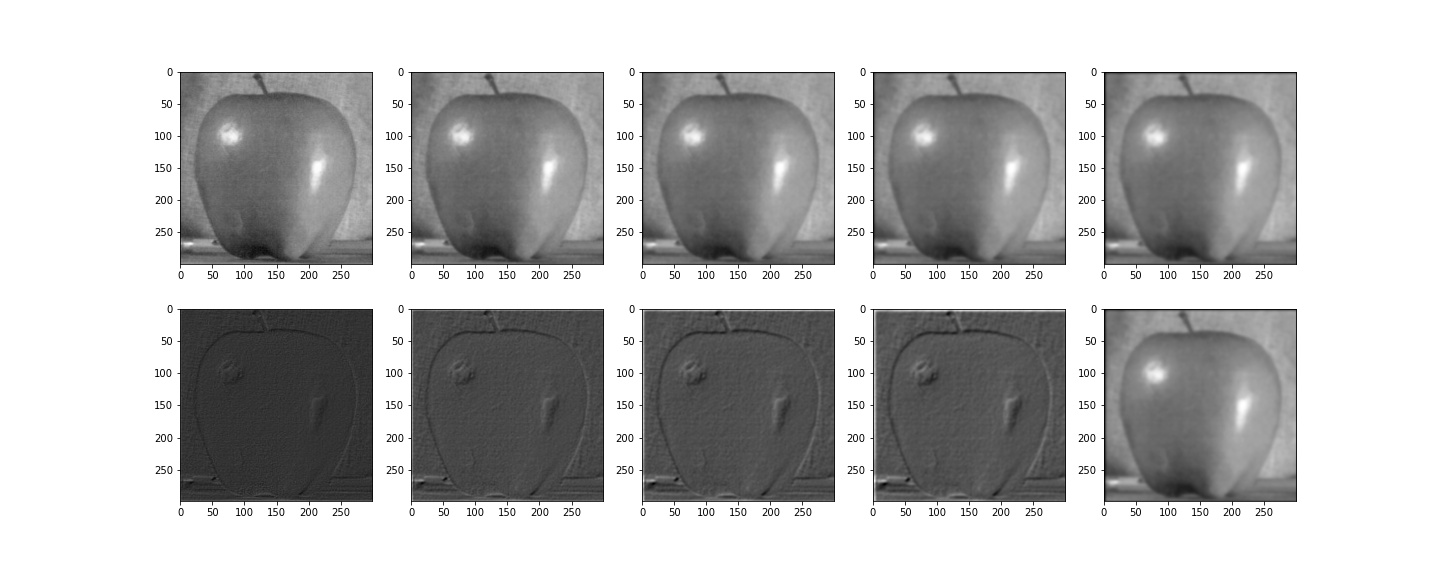

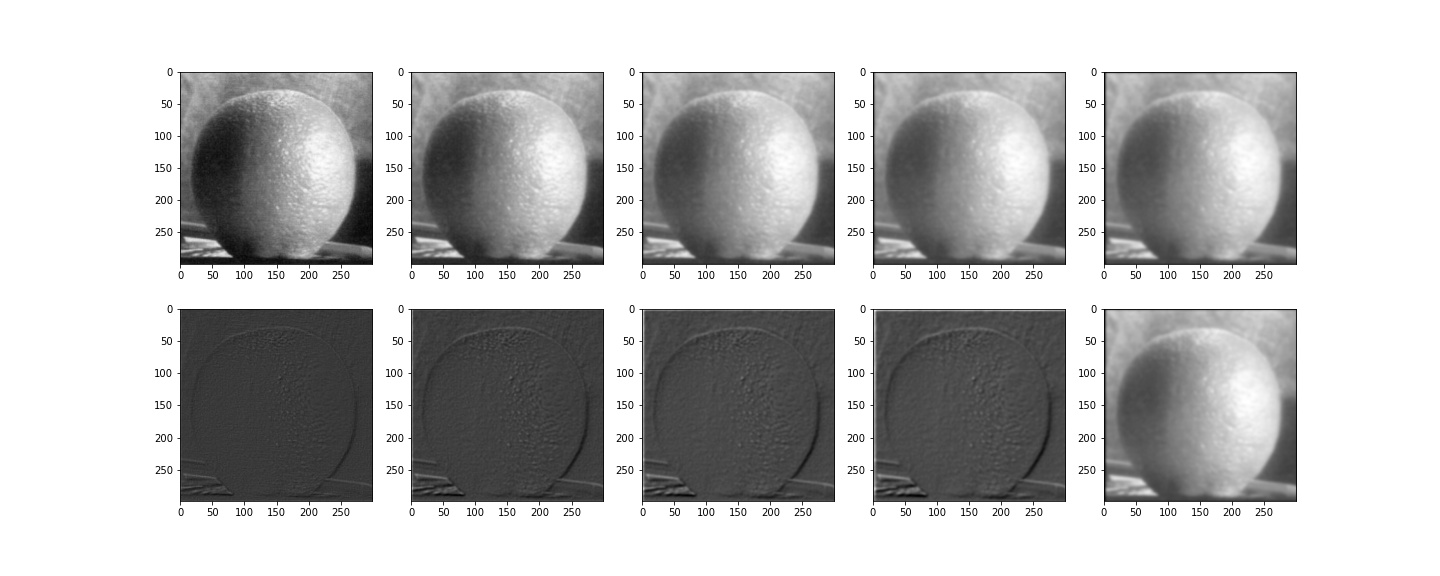

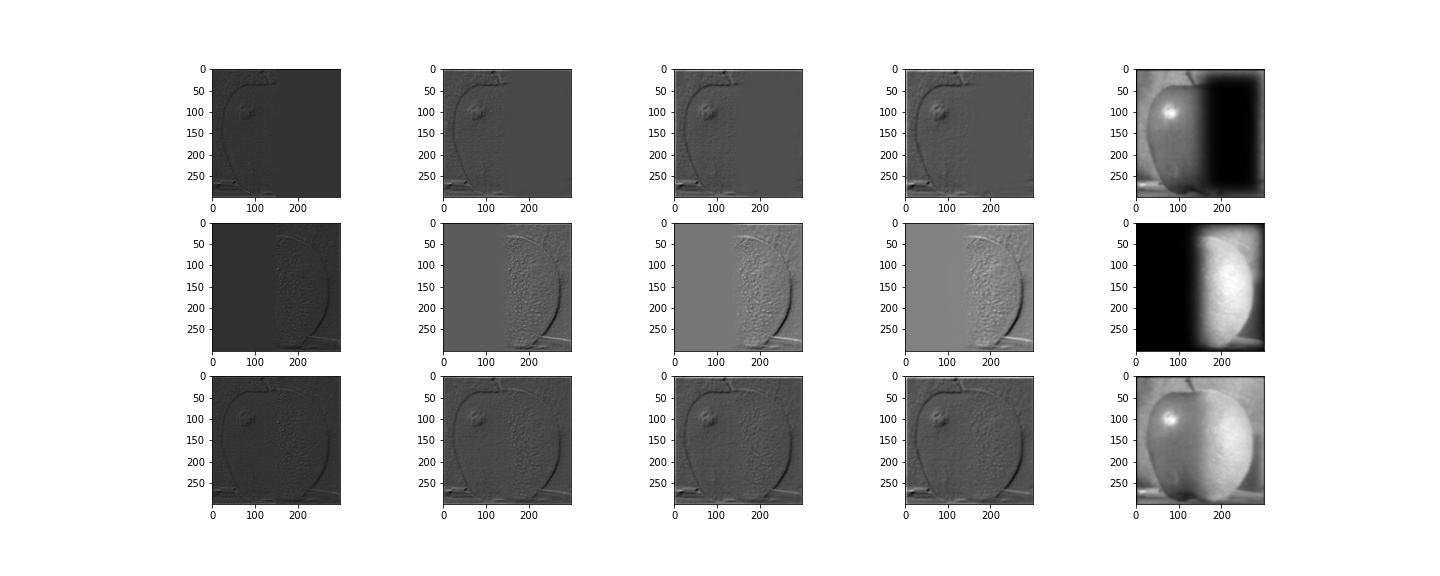

For Gaussian stacks, we apply (increasingly blurrier) Gaussian filters at each level of the stack, so we get a stack of lower and lower frequency images. For Laplacian stacks, we take the difference between each pair of consecutive Gaussian levels to get the Laplacian levels, and the last level is the same as the last level of the Gaussian stack. That way, when we sum up the Laplacian stack, the differences telescope and we get the original image back!
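A sketch of both stacks for a grayscale image. Here each Gaussian level blurs the previous one again (so levels get progressively blurrier) and nothing is downsampled; the number of levels, kernel size, and sigma are illustrative assumptions.

```python
import numpy as np


def conv2d_same(img, kernel):
    """2D convolution whose output matches img's shape; borders are mirrored."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((ph, kh - 1 - ph), (pw, kw - 1 - pw)), mode="symmetric")
    flipped = kernel[::-1, ::-1]  # convolution = correlation with a flipped kernel
    out = np.zeros(img.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


def gaussian_kernel(ksize, sigma):
    """ksize x ksize Gaussian (outer product of a 1D Gaussian), summing to 1."""
    ax = np.arange(ksize) - (ksize - 1) / 2
    g = np.exp(-ax**2 / (2 * sigma**2))
    g /= g.sum()
    return np.outer(g, g)


def gaussian_stack(img, levels, ksize=13, sigma=2.0):
    """Level 0 is the image; each next level blurs the previous one again."""
    g = gaussian_kernel(ksize, sigma)
    stack = [img]
    for _ in range(levels - 1):
        stack.append(conv2d_same(stack[-1], g))
    return stack


def laplacian_stack(img, levels, ksize=13, sigma=2.0):
    """Differences of consecutive Gaussian levels, plus the last Gaussian level."""
    gs = gaussian_stack(img, levels, ksize, sigma)
    return [gs[i] - gs[i + 1] for i in range(levels - 1)] + [gs[-1]]


rng = np.random.default_rng(0)
img = rng.random((48, 48))
lap = laplacian_stack(img, levels=5)
reconstructed = np.sum(lap, axis=0)  # the telescoping sum gives back the original
```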

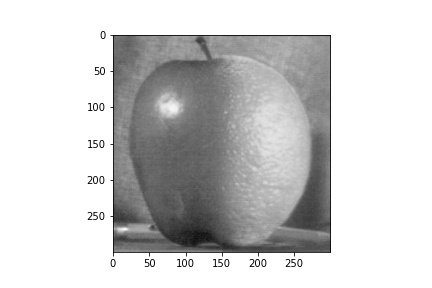

Gaussian & Laplacian stacks for apple:

Gaussian & Laplacian stacks for orange:

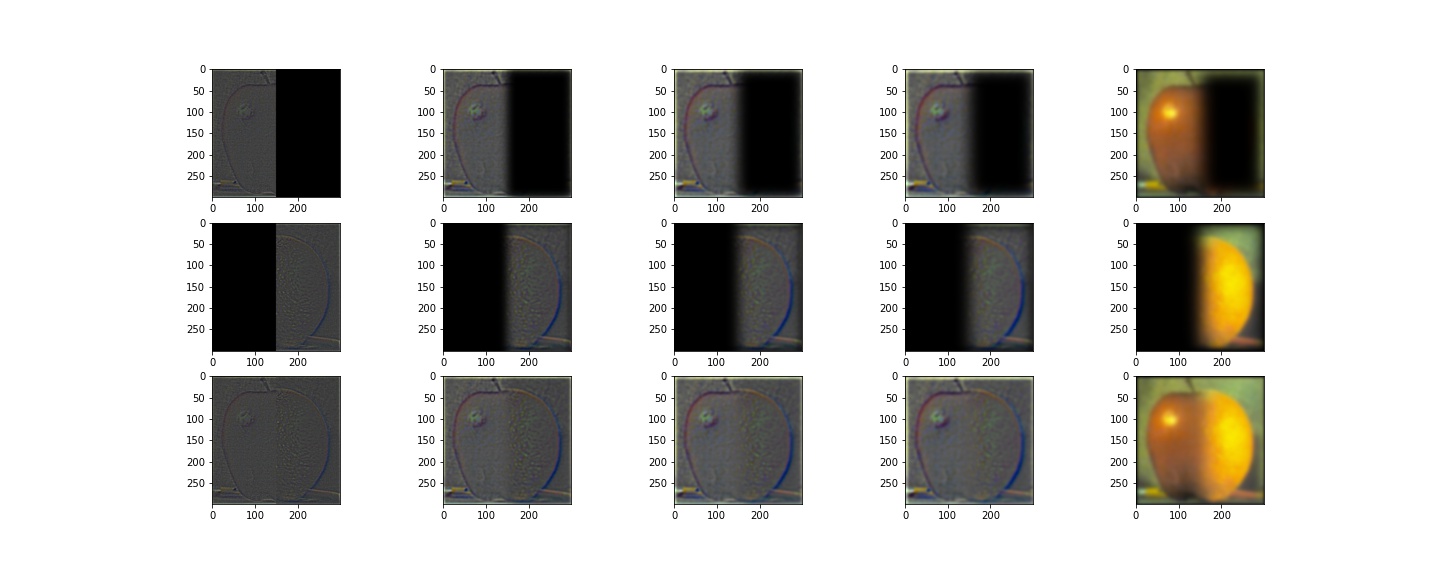

To blend the apple and the orange together, we can create a mask that's half black and half white, and create a Gaussian stack of the mask to smooth out the transition between the two images.

Below are the masked Laplacian stacks for the apple and the orange; adding them together gives us the oraple!

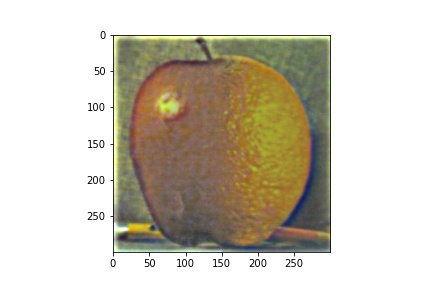

Summing up the layers of the oraple Laplacian stack (the last row) gives us the fully blended oraple!
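A sketch of the blending step for grayscale images, assuming both images have the same size and the mask is 1 where the first image (the apple) should show. At each level, the mask's Gaussian level weights the two Laplacian levels, and the weighted levels are summed. The stack depth, kernel size, and sigma are illustrative guesses, and the helpers are NumPy-only stand-ins, not the code behind the oraple.

```python
import numpy as np


def conv2d_same(img, kernel):
    """2D convolution whose output matches img's shape; borders are mirrored."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((ph, kh - 1 - ph), (pw, kw - 1 - pw)), mode="symmetric")
    flipped = kernel[::-1, ::-1]  # convolution = correlation with a flipped kernel
    out = np.zeros(img.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


def gaussian_kernel(ksize, sigma):
    """ksize x ksize Gaussian (outer product of a 1D Gaussian), summing to 1."""
    ax = np.arange(ksize) - (ksize - 1) / 2
    g = np.exp(-ax**2 / (2 * sigma**2))
    g /= g.sum()
    return np.outer(g, g)


def gaussian_stack(img, levels, ksize=13, sigma=2.0):
    g = gaussian_kernel(ksize, sigma)
    stack = [img]
    for _ in range(levels - 1):
        stack.append(conv2d_same(stack[-1], g))
    return stack


def laplacian_stack(img, levels, ksize=13, sigma=2.0):
    gs = gaussian_stack(img, levels, ksize, sigma)
    return [gs[i] - gs[i + 1] for i in range(levels - 1)] + [gs[-1]]


def blend(im_a, im_b, mask, levels=5, ksize=13, sigma=2.0):
    """Mask 1 keeps im_a, mask 0 keeps im_b; seams get softer at coarser levels."""
    la = laplacian_stack(im_a, levels, ksize, sigma)
    lb = laplacian_stack(im_b, levels, ksize, sigma)
    gm = gaussian_stack(mask, levels, ksize, sigma)  # progressively smoother mask
    return np.sum([m * a + (1 - m) * b for a, b, m in zip(la, lb, gm)], axis=0)


# Half white / half black mask: left half from im_a, right half from im_b
h, w = 64, 64
mask = np.zeros((h, w))
mask[:, : w // 2] = 1.0

# Usage with synthetic stand-ins: a white image on the left, a black one on the right
result = blend(np.ones((h, w)), np.zeros((h, w)), mask)  # smooth ramp across the seam
```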

Bells & Whistles - Colored Oraple

To get the colored blending, we just repeat the steps above for each of the R, G, B channels. I noticed that the high-frequency Laplacian layers were almost completely black when I stacked the R, G, B channels back together without adjusting their values, since those layers hold small values centered around zero. So I normalized the stacked high-frequency RGB layers so that we can see the stacks better.

Again, we sum up the oraple Laplacian stacks and normalize the results to get the final oraple!
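A sketch of the colored version, assuming H x W x 3 float images: the grayscale blend is simply repeated per channel and the channels are stacked back together. For display, `normalize` does a global min-max rescale to [0, 1]; that specific normalization is an assumption (a global rescale keeps the relative balance between R, G, and B). All names and parameters are hypothetical.

```python
import numpy as np


def conv2d_same(img, kernel):
    """2D convolution whose output matches img's shape; borders are mirrored."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((ph, kh - 1 - ph), (pw, kw - 1 - pw)), mode="symmetric")
    flipped = kernel[::-1, ::-1]  # convolution = correlation with a flipped kernel
    out = np.zeros(img.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


def gaussian_kernel(ksize, sigma):
    ax = np.arange(ksize) - (ksize - 1) / 2
    g = np.exp(-ax**2 / (2 * sigma**2))
    g /= g.sum()
    return np.outer(g, g)


def gaussian_stack(img, levels, ksize=13, sigma=2.0):
    g = gaussian_kernel(ksize, sigma)
    stack = [img]
    for _ in range(levels - 1):
        stack.append(conv2d_same(stack[-1], g))
    return stack


def laplacian_stack(img, levels, ksize=13, sigma=2.0):
    gs = gaussian_stack(img, levels, ksize, sigma)
    return [gs[i] - gs[i + 1] for i in range(levels - 1)] + [gs[-1]]


def blend(im_a, im_b, mask, levels=5, ksize=13, sigma=2.0):
    la = laplacian_stack(im_a, levels, ksize, sigma)
    lb = laplacian_stack(im_b, levels, ksize, sigma)
    gm = gaussian_stack(mask, levels, ksize, sigma)
    return np.sum([m * a + (1 - m) * b for a, b, m in zip(la, lb, gm)], axis=0)


def blend_color(im_a, im_b, mask, **kwargs):
    """Repeat the grayscale blend independently for the R, G, B channels."""
    return np.stack([blend(im_a[..., c], im_b[..., c], mask, **kwargs)
                     for c in range(3)], axis=2)


def normalize(x):
    """Min-max rescale to [0, 1] for display (e.g. a near-zero Laplacian layer)."""
    lo, hi = x.min(), x.max()
    return (x - lo) / (hi - lo) if hi > lo else np.zeros_like(x)


rng = np.random.default_rng(0)
im_a, im_b = rng.random((32, 32, 3)), rng.random((32, 32, 3))
mask = np.zeros((32, 32))
mask[:, :16] = 1.0

out = blend_color(im_a, im_b, mask)
shown = normalize(out)
# A high-frequency RGB layer is mostly tiny values around 0; rescale it to see it
layer = np.stack([laplacian_stack(im_a[..., c], 5)[0] for c in range(3)], axis=2)
layer_shown = normalize(layer)
```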

More Examples

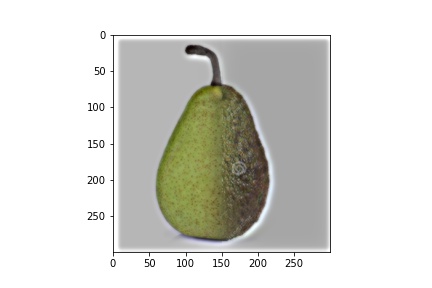

Pear & Avocados

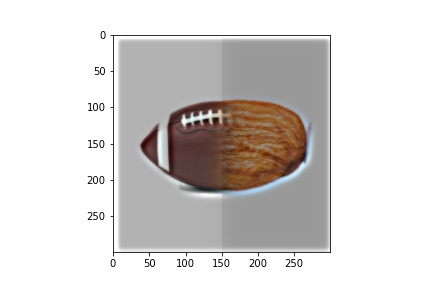

Football & Almond

This one actually didn't work that well, possibly because the white backgrounds of the football and the almond aren't exactly the same "white", so the blend ends up with some differences in brightness.

Half Dome @8pm & Moon @5am

Here I created a circular mask to put the moon, shot at around sunrise, into the photo of Half Dome taken at around sunset.
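A sketch of a circular mask that could replace the half-black/half-white mask in the Gaussian-stack blending: 1 inside the circle (where the moon goes) and 0 elsewhere. The image size, center, and radius below are hypothetical placeholders, not the values used for this photo.

```python
import numpy as np


def circular_mask(h, w, center, radius):
    """1.0 inside the circle, 0.0 outside; center is (row, col)."""
    yy, xx = np.mgrid[0:h, 0:w]  # row and column index grids
    cy, cx = center
    return (((yy - cy) ** 2 + (xx - cx) ** 2) <= radius ** 2).astype(float)


mask = circular_mask(100, 150, center=(30, 110), radius=20)
```

The Gaussian stack of this mask softens the circle's edge at coarser levels, just like the straight seam in the oraple.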

The most important thing I learned from this project!

It was very interesting to see the mathematical/computational fundamentals of image processing! As a photographer, I use Photoshop/Lightroom a lot, but I had no idea how the software actually edits photos. Now I kinda get a sense of basic blurring/sharpening and also blending images together. Writing code to edit photos instead of using existing software was a very rewarding process for me :)