by Christy Koh, October 2021

This project presents three tasks related to manipulation of the human face: (1) a two-face morph sequence, (2) a computation of the mean face of a population, and (3) a caricature of a face by extrapolating from the population mean.

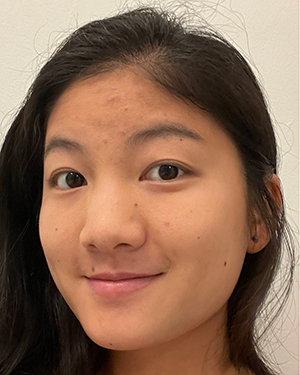

I chose to morph between my face and that of the Nobel Prize-winning author Kazuo Ishiguro. 'Tis a playful and not-at-all unsettling symbol of how one day, I hope to be half as eloquent and evocative a writer as he is. Unfortunately, he does wear glasses, so the morph sequence does contain some images that do not fall within the subspace of human faces. Despite knowing this, my heart was set on achieving this vision -- so let us forge ahead!

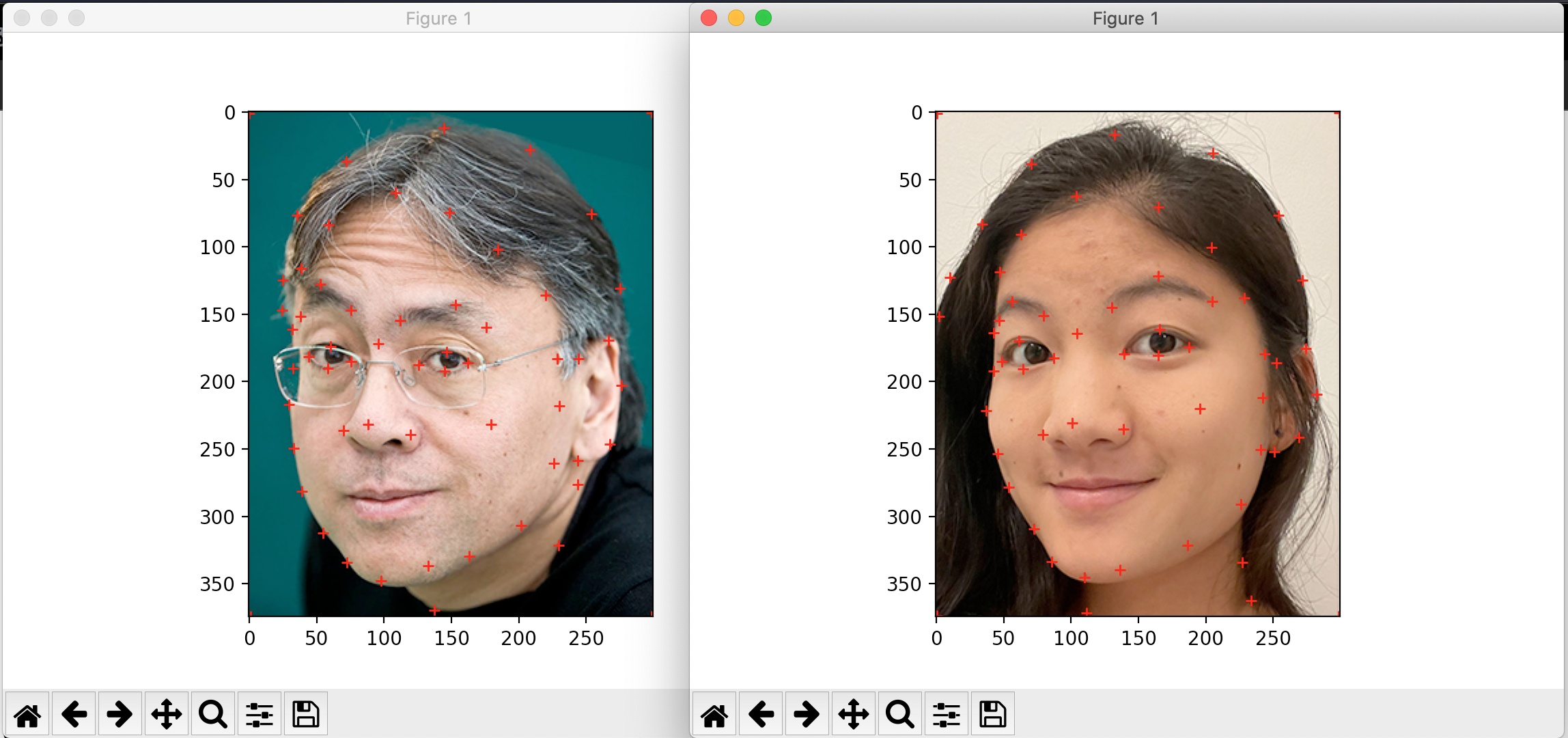

First, I implemented a simple tool (cpselect.py) that enables manual selection and saving of key correspondence points for a single image, using matplotlib's ginput function.
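A minimal sketch of what such a point-selection tool might look like, built on matplotlib's `ginput`. The function names and the plain-text file format are assumptions made for illustration, not the actual contents of cpselect.py.

```python
import numpy as np


def select_points(image_path, n_points):
    """Show an image and collect n_points mouse clicks as an (n_points, 2) array of (x, y)."""
    # Imported lazily so the save/load helpers below also work without a display.
    import matplotlib.pyplot as plt

    img = plt.imread(image_path)
    plt.imshow(img)
    plt.title(f"Click {n_points} points")
    # timeout=0 disables ginput's default 30-second timeout.
    clicks = plt.ginput(n_points, timeout=0)
    plt.close()
    return np.array(clicks)


def save_points(points, path):
    """Write one 'x y' pair per line (hypothetical file format)."""
    np.savetxt(path, points, fmt="%.2f")


def load_points(path):
    """Read points saved by save_points back into an (n, 2) array."""
    return np.loadtxt(path).reshape(-1, 2)
```

Clicking the landmarks in the same order on both images matters here, since row i of one point file is assumed to correspond to row i of the other.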

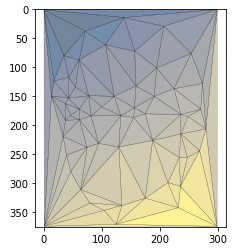

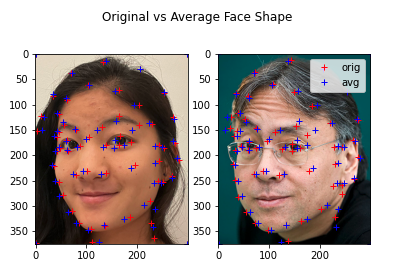

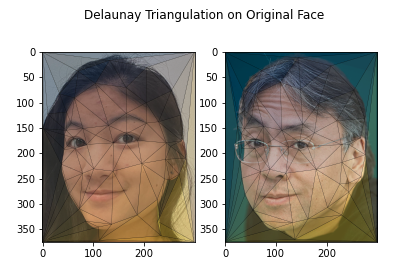

To determine the average of the two face shapes, the midpoint of each corresponding pair of points was calculated. This set of average points was used to derive the Delaunay triangulation (computed using the scipy.spatial package), which constructs a triangle mesh over the key points of the facial features such that no point lies inside the circumcircle of any triangle. This allows the transformation within each triangle to be computed separately and 'stitched' together.
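As a rough illustration of this step, the sketch below averages two small, made-up point sets and triangulates the result with `scipy.spatial.Delaunay`. The coordinates and variable names are hypothetical stand-ins for the real landmark files.

```python
import numpy as np
from scipy.spatial import Delaunay

# Hypothetical correspondence points, one (x, y) row per landmark.
pts_a = np.array([[0, 0], [100, 0], [100, 100], [0, 100], [40, 50]], dtype=float)
pts_b = np.array([[0, 0], [100, 0], [100, 100], [0, 100], [60, 45]], dtype=float)

# Average shape: midpoint of each corresponding pair.
pts_avg = (pts_a + pts_b) / 2

# Triangulate the average shape once; tri.simplices holds index triples into pts_avg.
tri = Delaunay(pts_avg)

# The same index triples pick out corresponding triangles in all three shapes.
tri_a = pts_a[tri.simplices]      # shape (n_triangles, 3, 2)
tri_b = pts_b[tri.simplices]
tri_avg = pts_avg[tri.simplices]
```

Triangulating the average shape, rather than either face, keeps the triangles reasonably well shaped in both source images.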

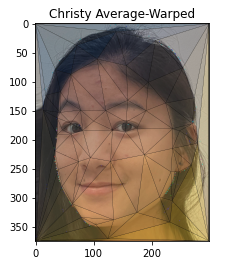

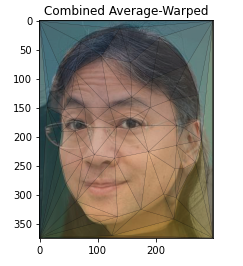

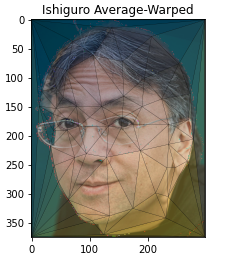

Next, we compute a "midway" face by warping both images to the average face shape.

How is this warping accomplished? For a single triangle we may apply an affine transformation, composed of a linear component (rotation, scaling, and shear) and a translational component. There are two ways to generate the affine transformation matrix: 1) algebraically solving for the 6 unknowns, or 2) geometrically finding the basis of each triangle with respect to the unit triangle.

Calculating algebraically: let A and B be the points from triangle 1 and 2 respectively. These triangles, expressed in homogeneous matrix form (one vertex per column), can be related to the desired transformation t according to the equation B = tA. Multiplying both sides on the right by the inverse of A, we determine that t = BA^(-1).
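The algebraic approach can be sketched in a few lines of NumPy. The helper names below are illustrative assumptions, and each triangle is assumed to be given as a (3, 2) array of vertices.

```python
import numpy as np


def to_homogeneous(tri):
    """Turn a (3, 2) array of vertices into a 3x3 matrix whose columns are (x, y, 1)."""
    return np.vstack([tri.T, np.ones(3)])


def compute_affine(tri1, tri2):
    """Return the 3x3 matrix t satisfying B = tA for the two triangles."""
    A = to_homogeneous(tri1)
    B = to_homogeneous(tri2)
    # A is invertible as long as the triangle is not degenerate (zero area).
    return B @ np.linalg.inv(A)


tri1 = np.array([[0, 0], [1, 0], [0, 1]], dtype=float)
tri2 = np.array([[2, 1], [4, 1], [2, 4]], dtype=float)
t = compute_affine(tri1, tri2)
```

For this pair of triangles, t scales x by 2 and y by 3, then translates by (2, 1); its last row is (0, 0, 1), as for any affine map in homogeneous form.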

Using the geometric approach, take two sides of the triangle as basis vectors, setting the point at which they intersect as the translated origin of the new basis. Thus, the transformation from triangle 1 to triangle 2 can be broken down into an inverse transformation from tri1 --> unit triangle, followed by a forward transformation from unit triangle --> tri2. Paying attention to the fact that the Delaunay function returns points ordered clockwise around the triangle, the transformation matrix t can be easily computed.
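The geometric construction might look like the following sketch, again assuming (3, 2) vertex arrays and hypothetical function names. The first vertex of each triangle is taken as the translated origin, and the two edges leaving it as the basis vectors.

```python
import numpy as np


def from_unit_triangle(tri):
    """Affine matrix mapping the unit triangle (0,0), (1,0), (0,1) onto tri."""
    p0, p1, p2 = tri
    M = np.eye(3)
    M[:2, 0] = p1 - p0   # image of the basis vector (1, 0)
    M[:2, 1] = p2 - p0   # image of the basis vector (0, 1)
    M[:2, 2] = p0        # translated origin
    return M


def compute_affine_geometric(tri1, tri2):
    """tri1 -> unit triangle (inverse), then unit triangle -> tri2 (forward)."""
    return from_unit_triangle(tri2) @ np.linalg.inv(from_unit_triangle(tri1))
```

Because both matrices are built from vertices in the same order, vertex i of triangle 1 lands on vertex i of triangle 2, which is why a consistent vertex ordering from the triangulation matters.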

With this affine computation function, I programmed a warp function to transform an initial triangular mesh into a new desired shape. This function is applied to both faces to warp them to the average face shape. The combined image can be constructed from the two warped images by weighting the pixel RGB values from each image equally (0.5) and summing them.

Finally, a morph function is programmed to generate a video sequence of intermediate images as we morph between the two faces. Two additional parameters, "warp_frac" and "dissolve_frac", scale the shape and the cross-dissolve of colors respectively between the two images, determining each face's proportional contribution to each incremental stage. To achieve the color mapping, we use the inverse transformation from the transformed coordinates to sample colors from the original image, interpolating with scipy's RectBivariateSpline.
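The pieces of the morph can be sketched as below. This is an illustration under assumptions rather than the project's actual code: the convention that a fraction of 0 means the first face and 1 the second, the helper names, and the choice of a degree-1 (bilinear) spline are all mine.

```python
import numpy as np
from scipy.interpolate import RectBivariateSpline


def interpolate_shape(pts_a, pts_b, warp_frac):
    """Intermediate shape: warp_frac = 0 gives shape A, warp_frac = 1 gives shape B."""
    return (1 - warp_frac) * pts_a + warp_frac * pts_b


def cross_dissolve(img_a, img_b, dissolve_frac):
    """Blend two images that have already been warped to the same shape."""
    return (1 - dissolve_frac) * img_a + dissolve_frac * img_b


def inverse_map(t, xs, ys):
    """Map target pixel coordinates back into the source image with the inverse of t."""
    tgt = np.vstack([xs, ys, np.ones_like(xs, dtype=float)])
    src = np.linalg.inv(t) @ tgt
    return src[0], src[1]


def sample_channel(channel, xs, ys):
    """Sample one 2-D color channel at fractional (x, y) positions."""
    h, w = channel.shape
    spline = RectBivariateSpline(np.arange(h), np.arange(w), channel, kx=1, ky=1)
    # ev expects (row, column) coordinates, i.e. (y, x), and evaluates point by point.
    return spline.ev(ys, xs)
```

Sampling through the inverse transformation means every pixel in the output triangle receives a color, which avoids the holes that forward-mapping source pixels would leave.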

The final result is pictured again below.

To improve on this result, one could select an image of an individual who does not wear glasses, or who has a more similar hairstyle. In addition, the colored background and slight mismatch in face and shoulder orientation (Ishiguro is hunched over...) leave more to be desired in the quest to achieve a subtle and seamless transition.

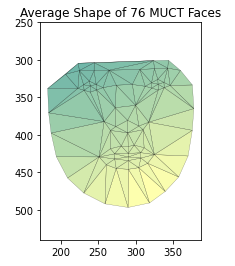

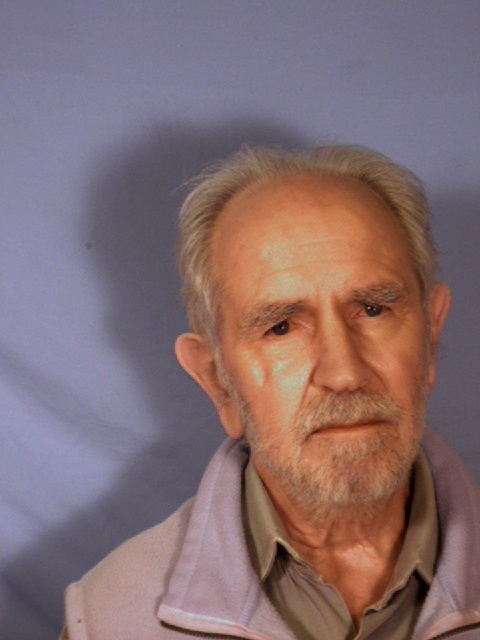

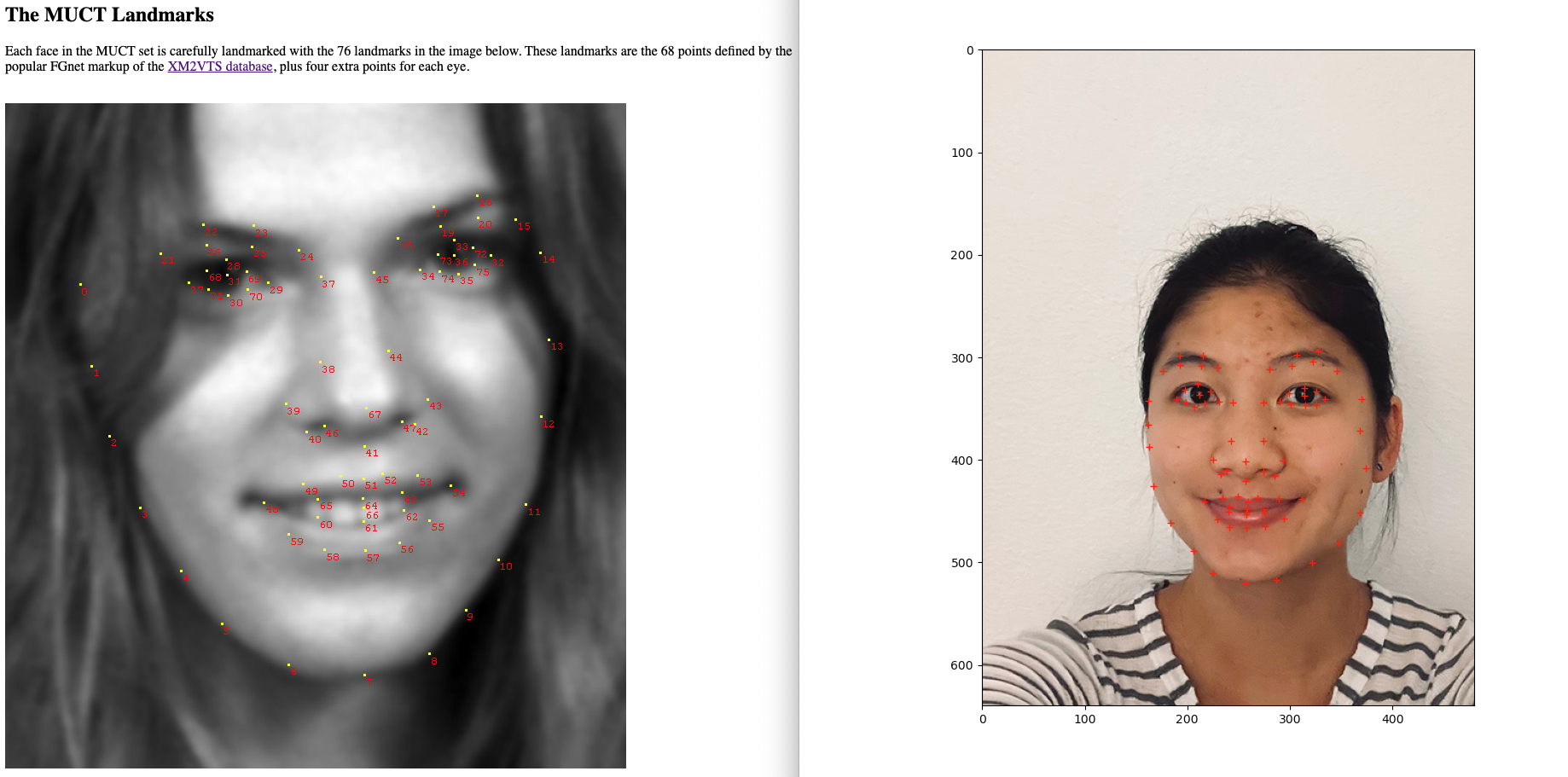

I used the MUCT Face Database, a diverse set of 3755 faces taken under different lighting conditions and annotated with 76 facial landmarks. In addition, images are labeled with gender, camera view, lighting, and presence of glasses. To filter out noisy data, I only selected images from a frontal camera view and in regular lighting. The remaining set consists of 76 images, 36 female and 40 male.

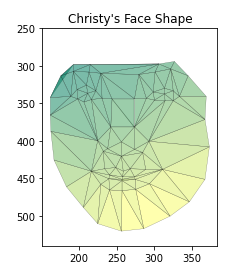

First, the average face shape of the whole population was calculated using the work from Part I.

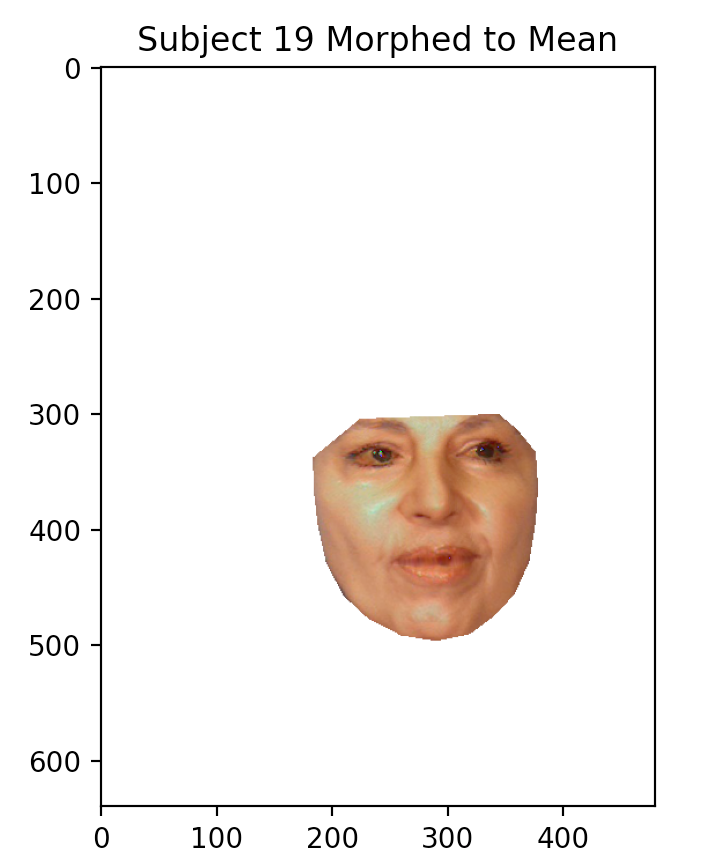

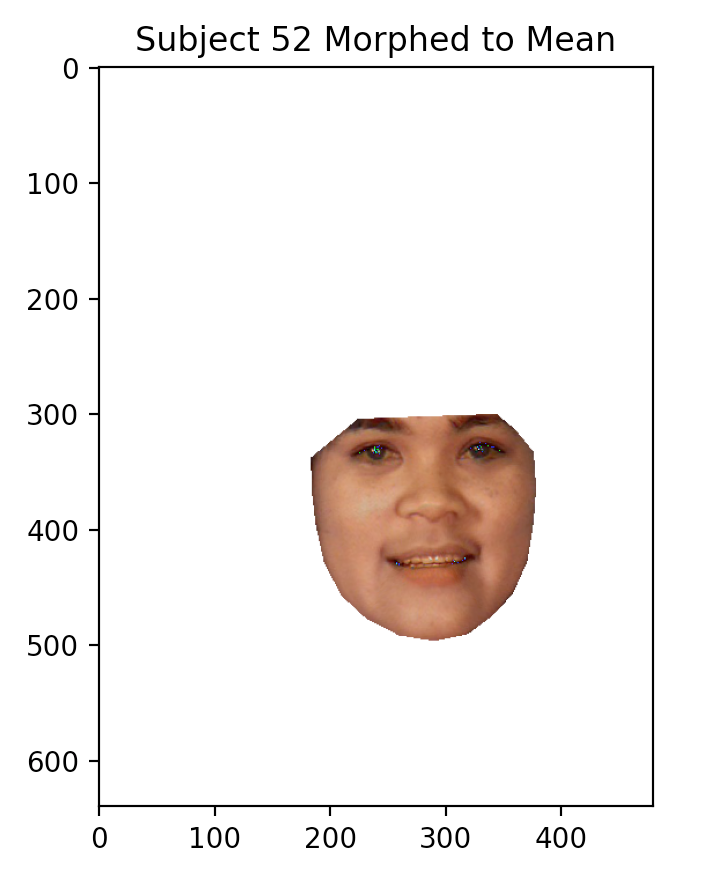

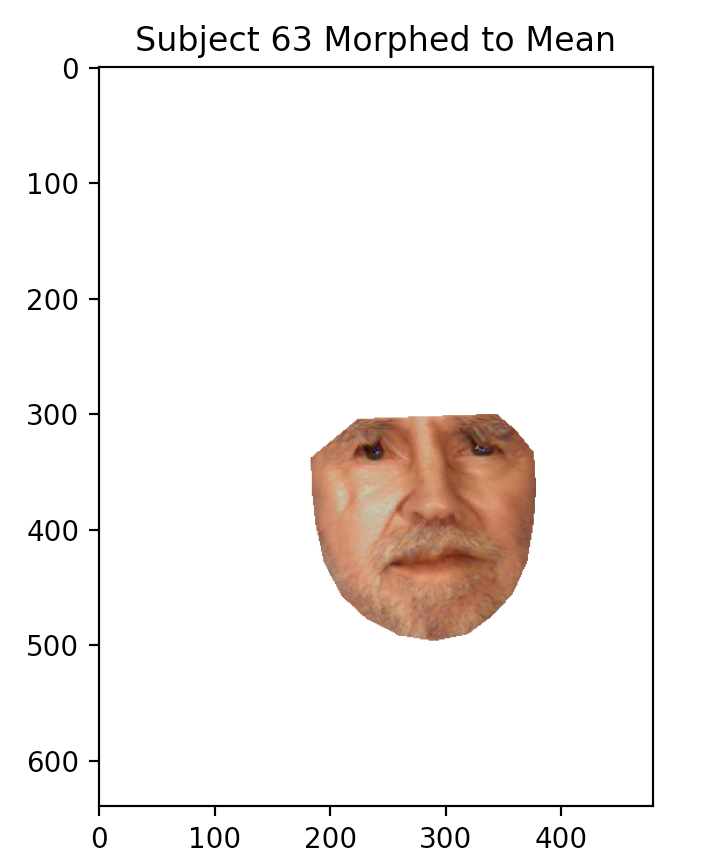

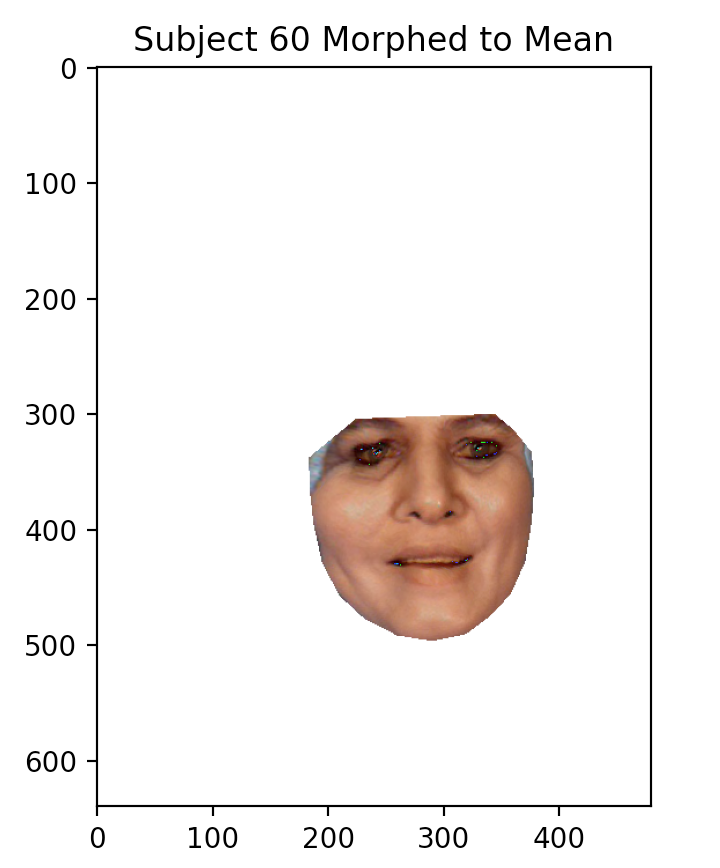

Next, we morphed each participant's face to the mean shape. Below are some examples.

There are some wonky looking ones!

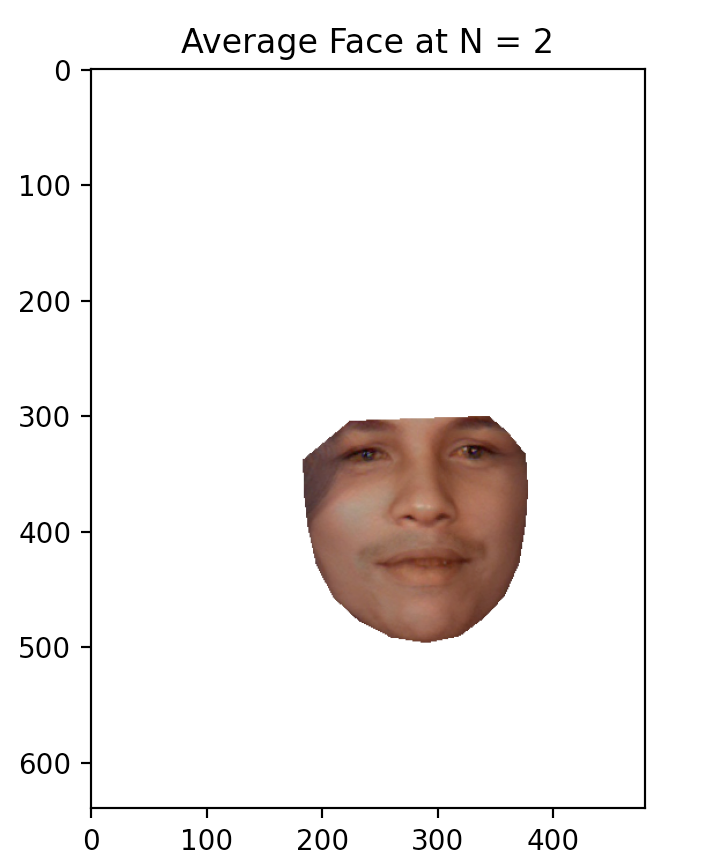

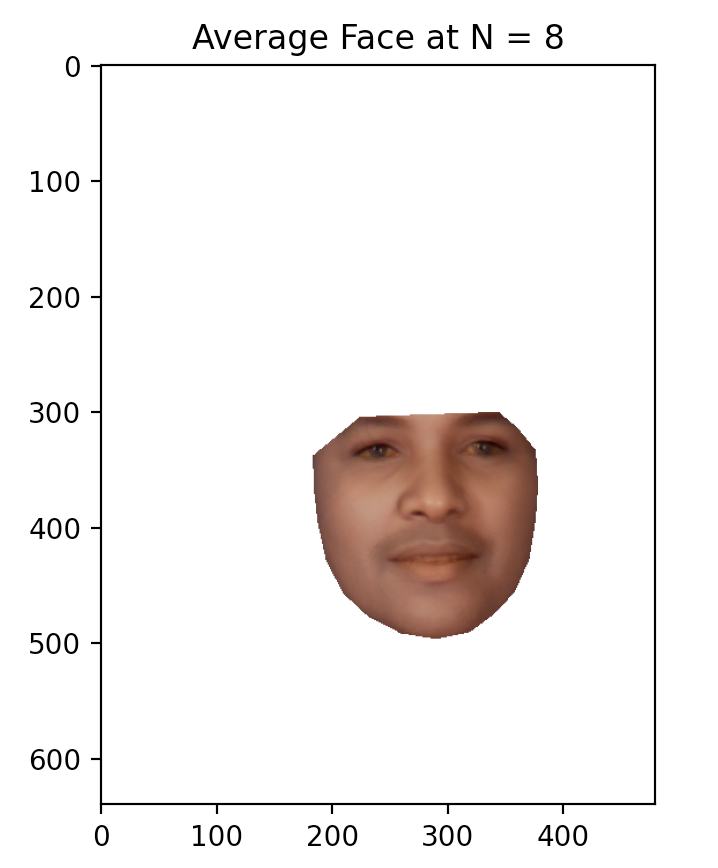

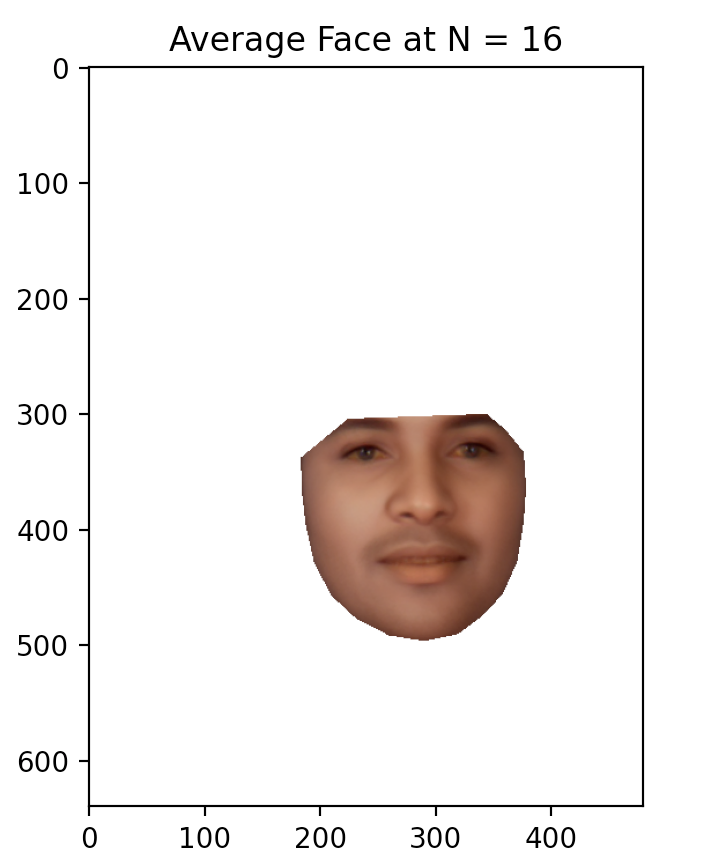

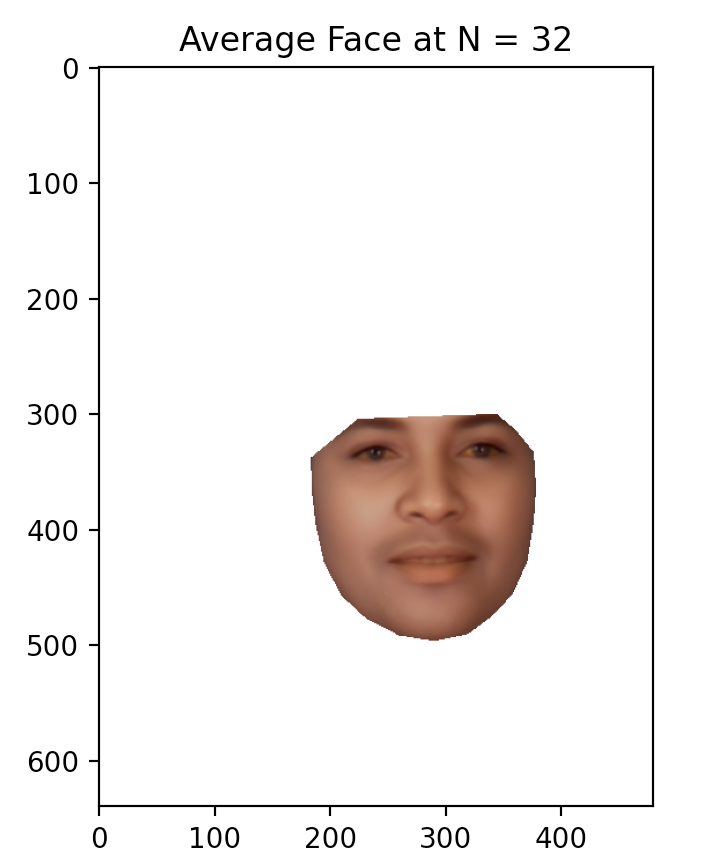

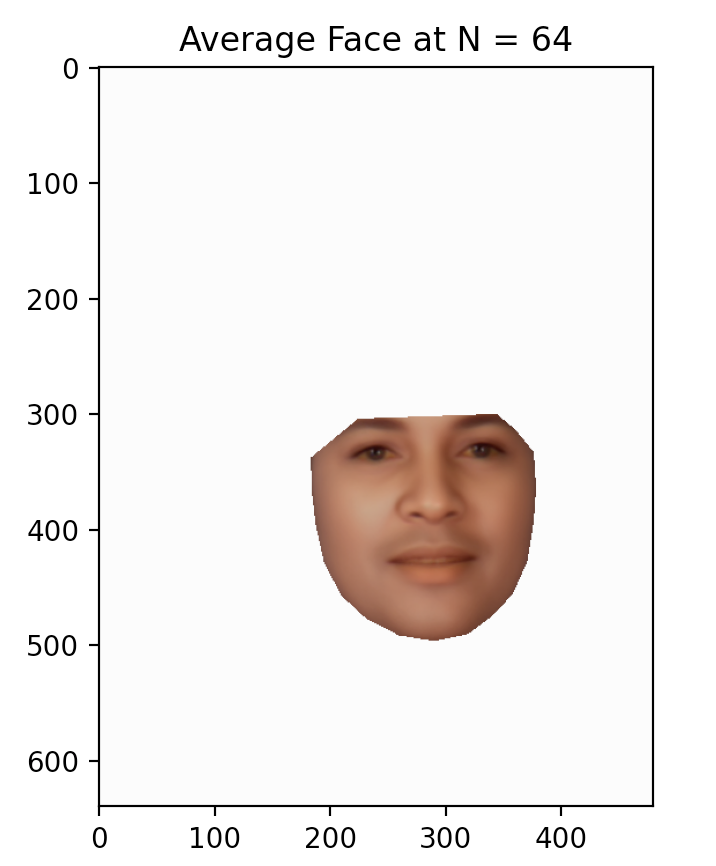

Finally, the RGB values of the morphed MUCT faces were summed and averaged. The figures below display the progression of the mean face as more images are averaged in.

And voila! We have computed the population mean face of the participants.
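The averaging step, including the intermediate means, could be written as a small generator like the one below. The function name is hypothetical, and the faces are assumed to be equally sized arrays already warped to the mean shape.

```python
import numpy as np


def running_mean_faces(warped_faces):
    """Yield the mean image after each additional face is averaged in."""
    total = None
    for n, face in enumerate(warped_faces, start=1):
        # Accumulate in floating point so integer images do not overflow.
        total = face.astype(float) if total is None else total + face
        yield total / n


# Tiny stand-in "faces": constant 2x2 RGB images.
faces = [np.full((2, 2, 3), v) for v in (0.0, 1.0, 2.0)]
means = list(running_mean_faces(faces))
```

The last element of `means` is the population mean face; the earlier elements correspond to the progression as more images are included.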

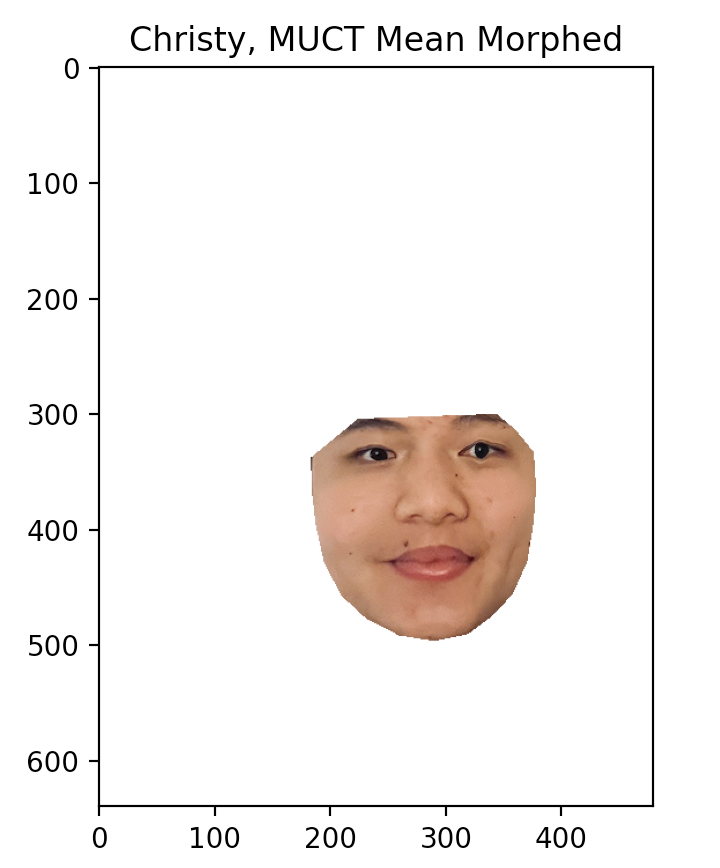

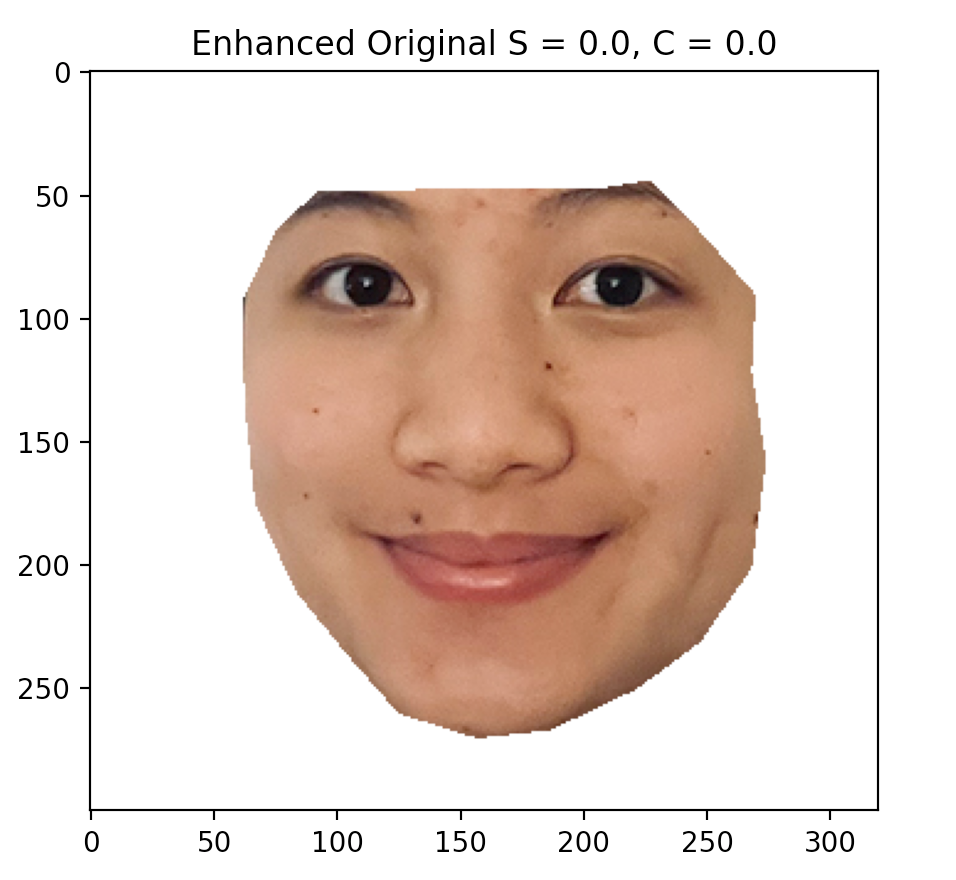

In order to relate my face to the set of MUCT faces, I took a picture with my face approximating the position and size of the average face shape. I labeled my face with the 76 corresponding landmarks, and morphed my face to the mean shape. The image was slightly less than ideal, since the lighting for my picture did not match that of the provided images.

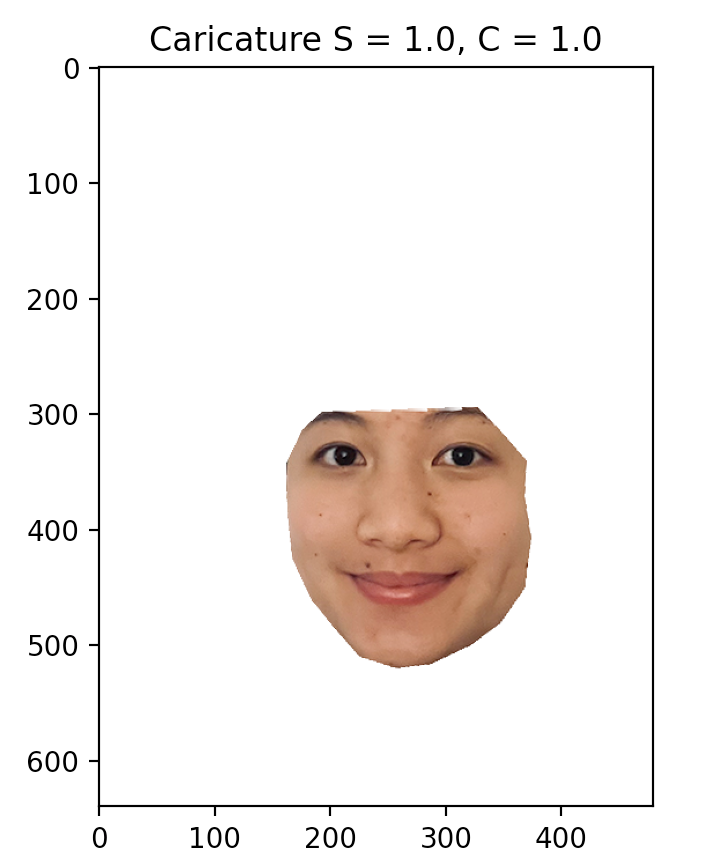

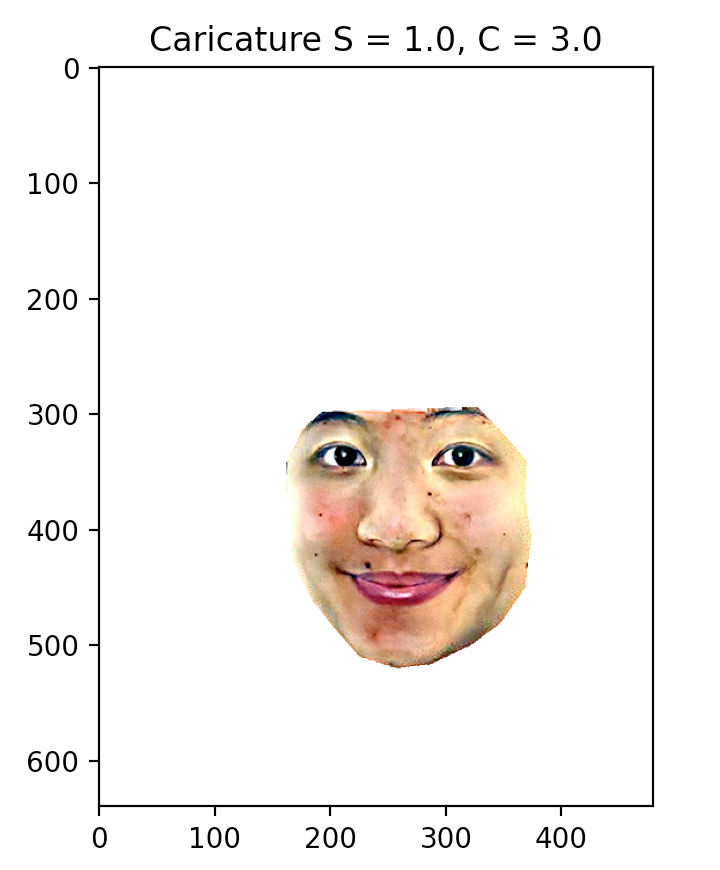

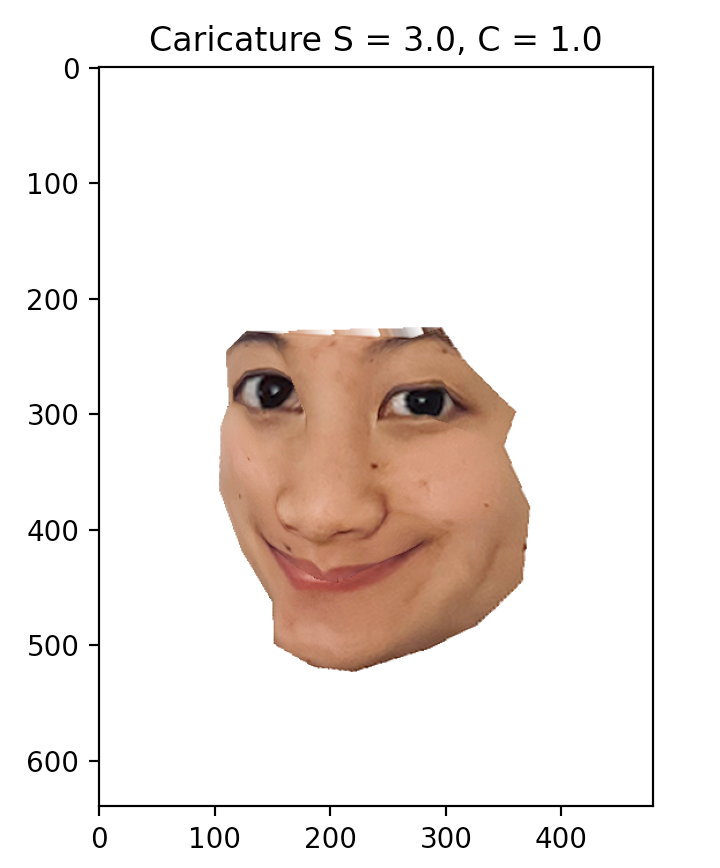

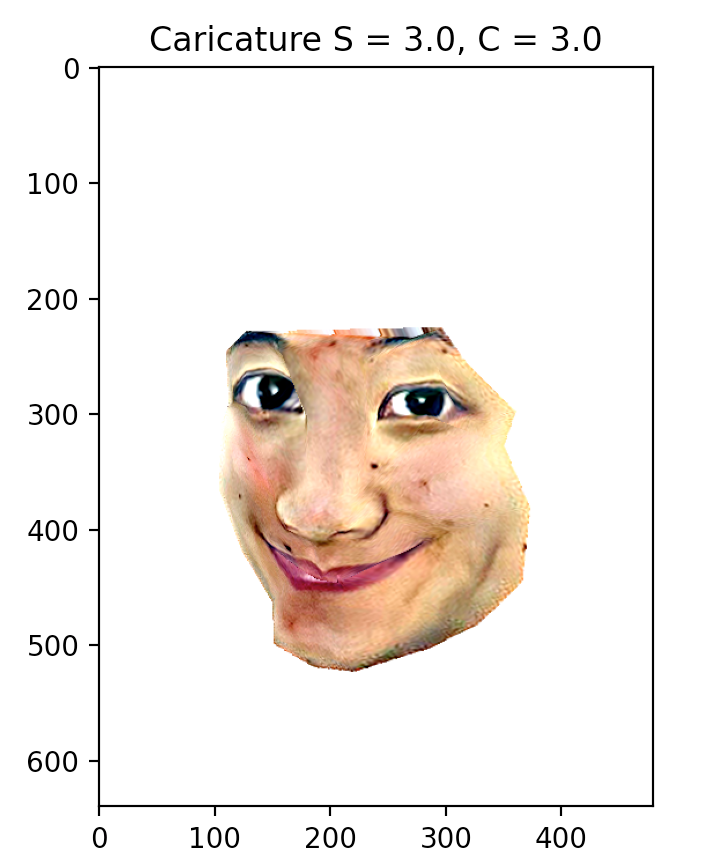

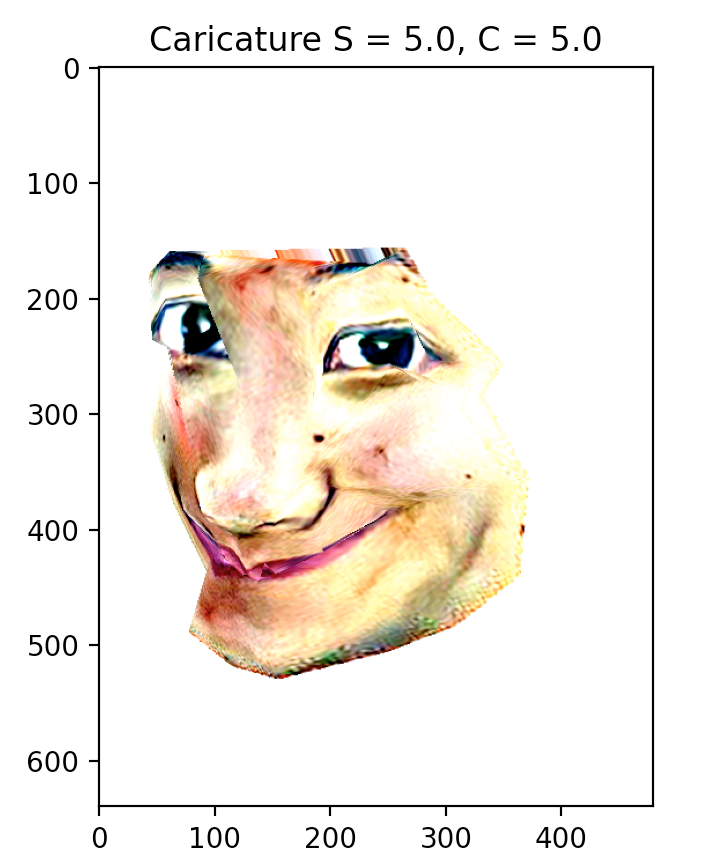

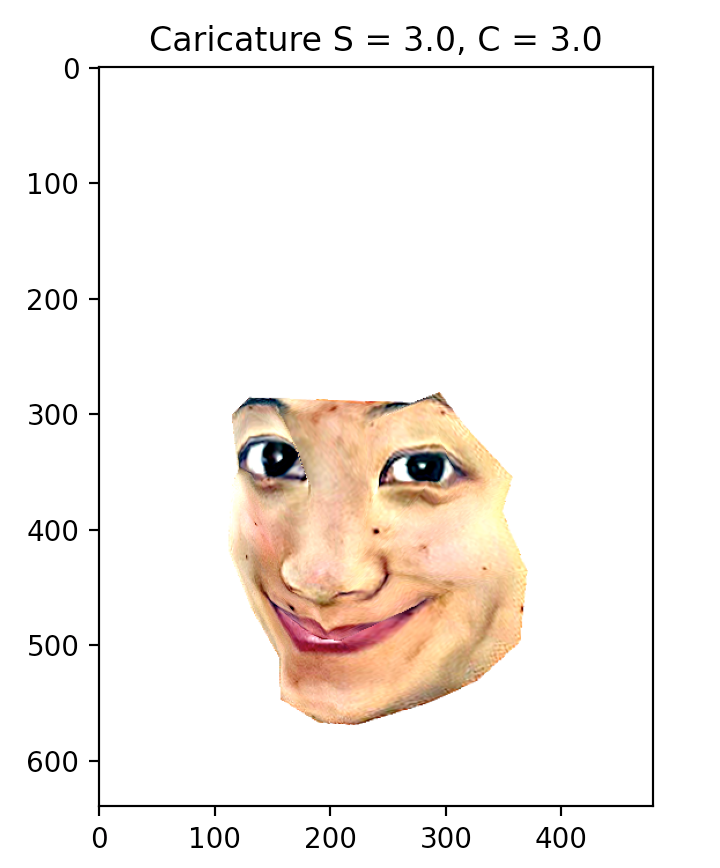

By comparing any face to the population mean, we can capture the unique characteristics of that face; put another way, I can perhaps discover the essence of "Christyness" with respect to my visage. Here, we generate a caricature by extrapolating the difference in shape and color from the population mean.

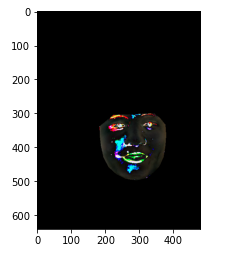

There are two "knobs" we can turn to adjust the caricature: shape, controlled by the 76 landmark points, and color, determined by the RGB values. Calculating the shape vector from the mean to my face is a straightforward subtraction of the average points from my face's landmark points. However, the image of my face must be morphed into the average shape before calculating the color vector (shown below).

To apply the scaling parameters to the mean face, first extrapolate by color while the two images share the same shape. Then, using the warp function programmed in Part I, the shape of the image can be morphed to the average shape extrapolated by the scaled difference in point positions.
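The extrapolation itself is a one-line formula applied twice, once to landmarks and once to pixels. The sketch below assumes float images in [0, 1] and a convention in which a scale of 1 reproduces my face and larger values exaggerate it; both the convention and the function names are assumptions.

```python
import numpy as np


def extrapolate(mean, mine, scale):
    """Start at the mean and move along the difference vector (mine - mean)."""
    return mean + scale * (mine - mean)


def caricature_shape(mean_pts, my_pts, S):
    """Landmarks of the caricature for shape scaling constant S."""
    return extrapolate(mean_pts, my_pts, S)


def caricature_color(mean_img, my_img_in_mean_shape, C):
    """Colors of the caricature; both images must already share the mean shape."""
    out = extrapolate(mean_img, my_img_in_mean_shape, C)
    # Extrapolation can leave the valid range, so clip back to [0, 1].
    return np.clip(out, 0.0, 1.0)
```

The color-extrapolated image would then be warped from the mean shape to the landmarks returned by `caricature_shape`.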

The overall process is as follows:

The grid above shows the caricature function applied with varying input parameters, S for the shape scaling constant and C for the color scaling constant. The results are cartoonish and possibly nightmare-inducing, as caricatures should be... but since this is a result derived from a single image captured under uncontrolled conditions, no offense need be taken :) Do I regret using my face? Now that these cursed images exist on the internet, when people see me in real life, hopefully they will be much relieved.

We calculate the mean female face from the dataset and apply the caricature process again.

The results of caricaturing from the subset of female faces seem to be less extreme, which makes sense if my facial features are more similar to the average female face.

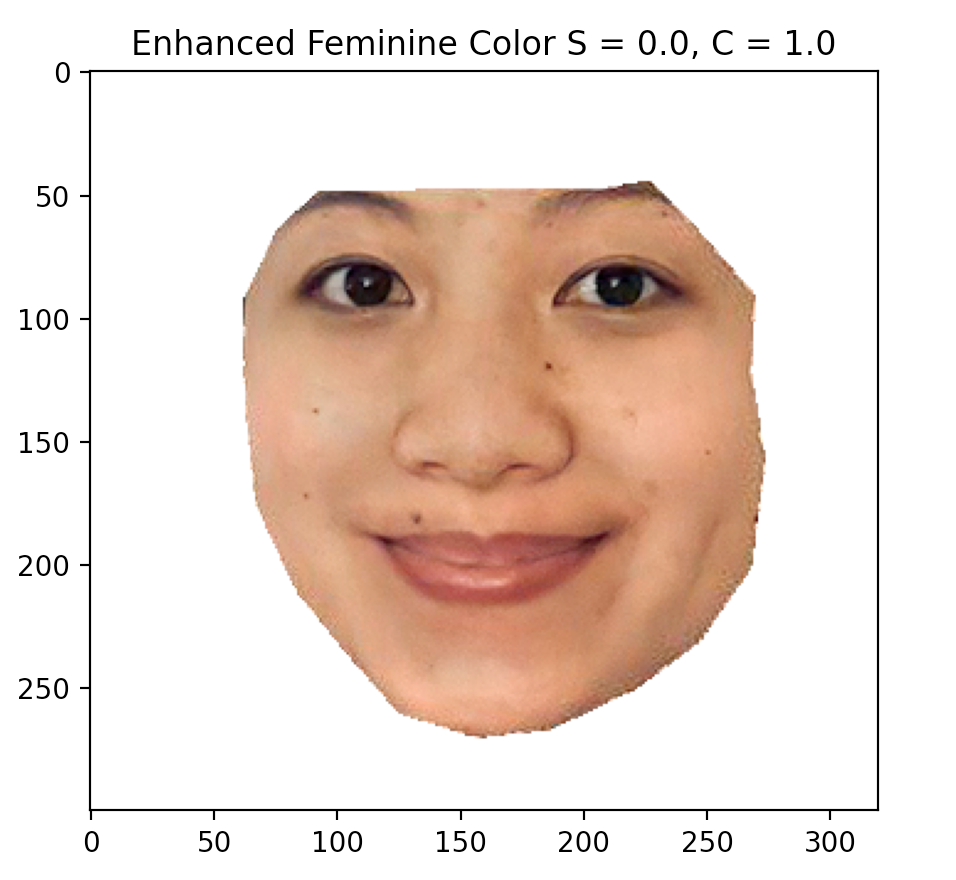

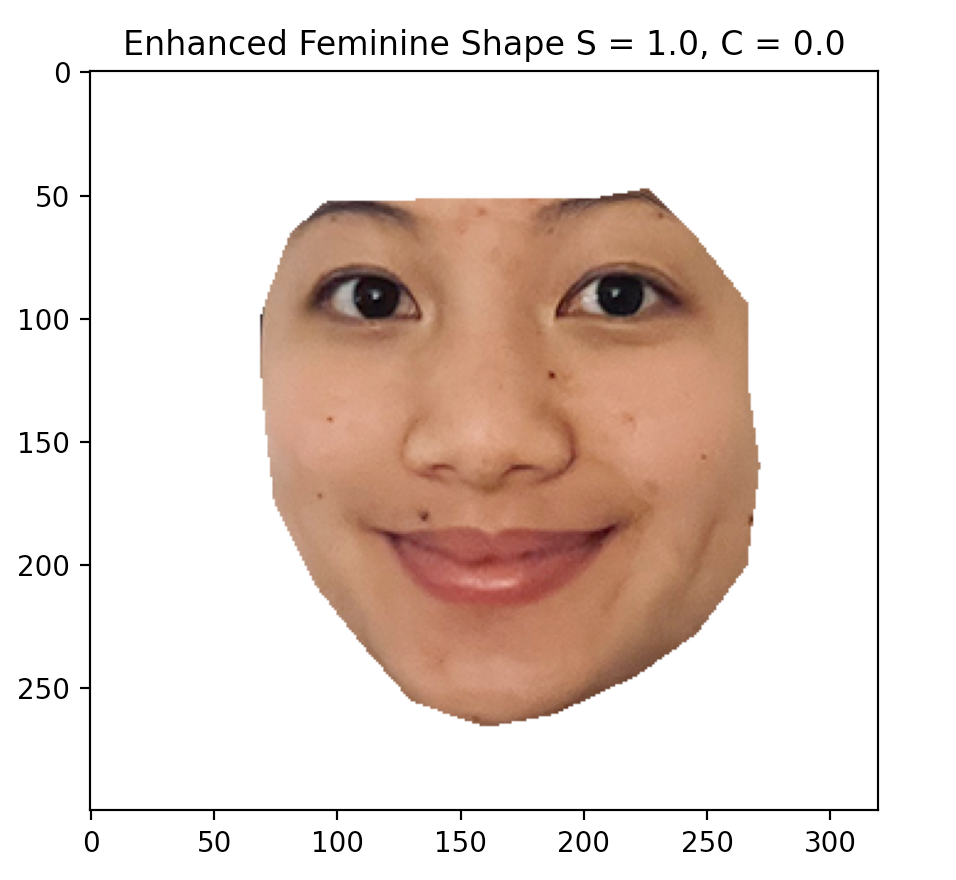

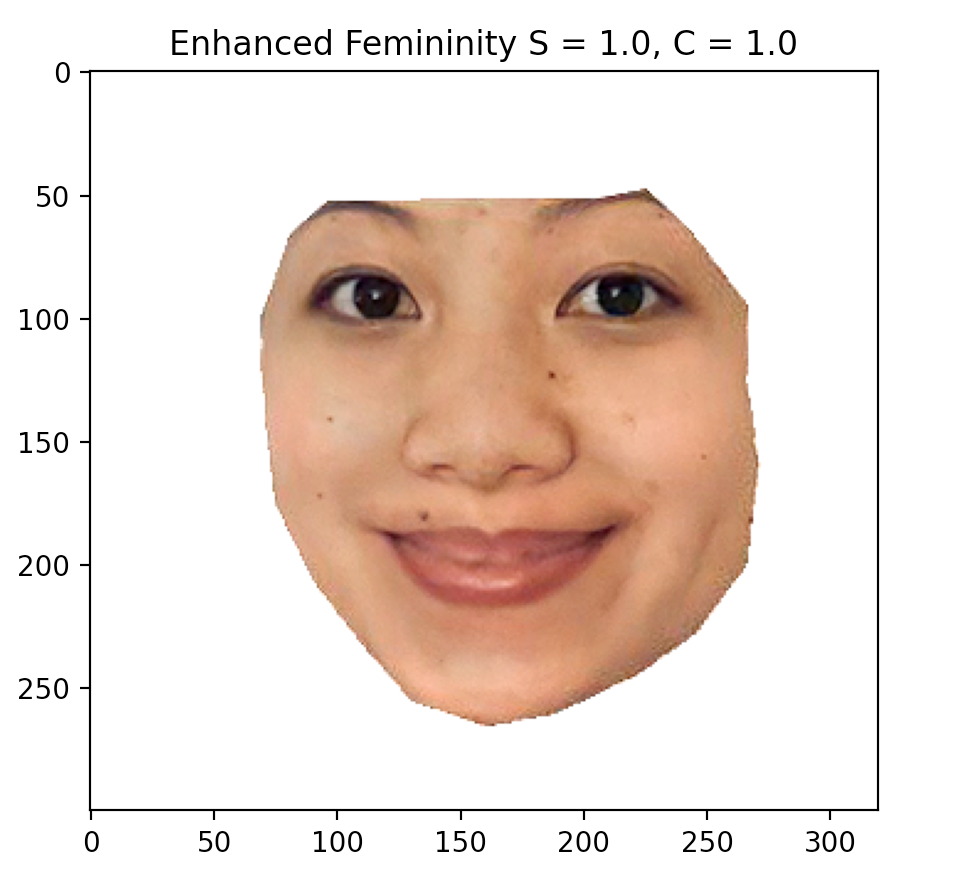

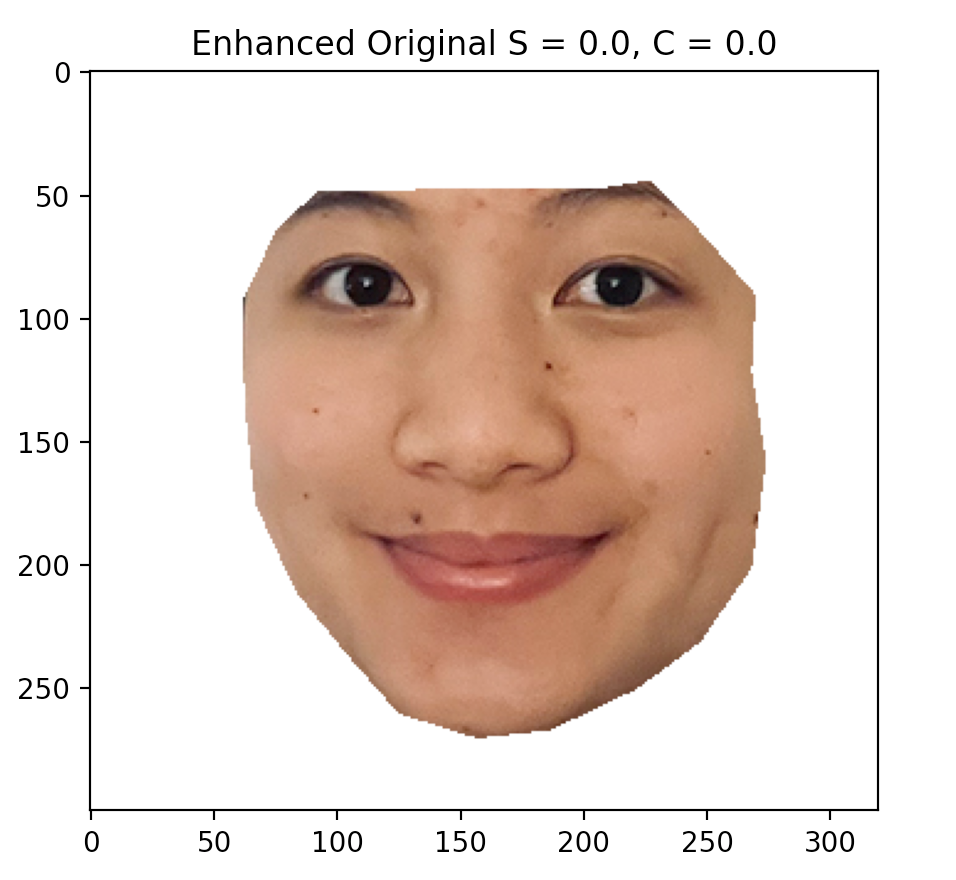

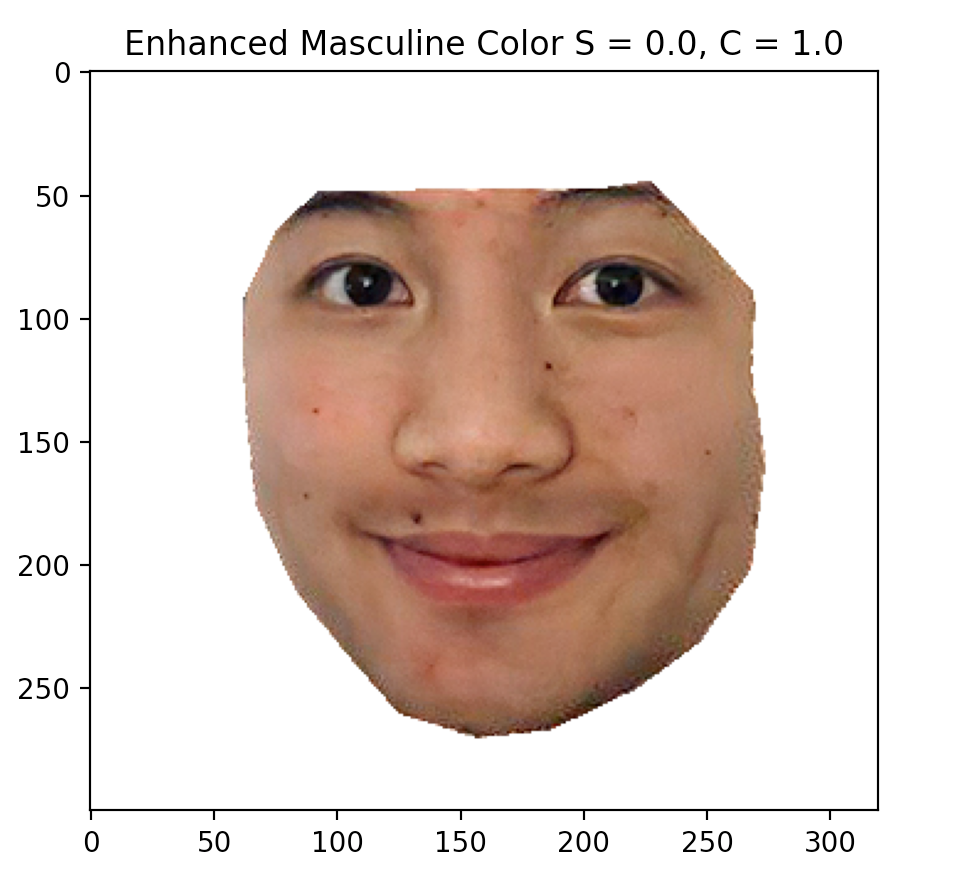

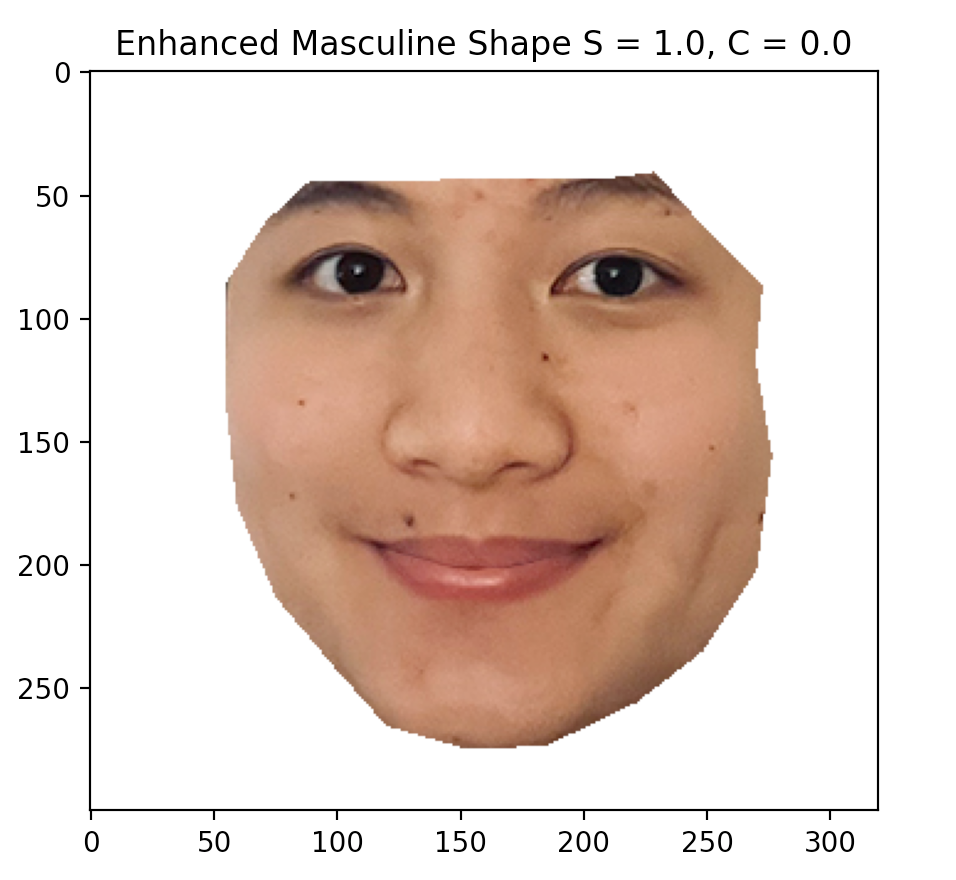

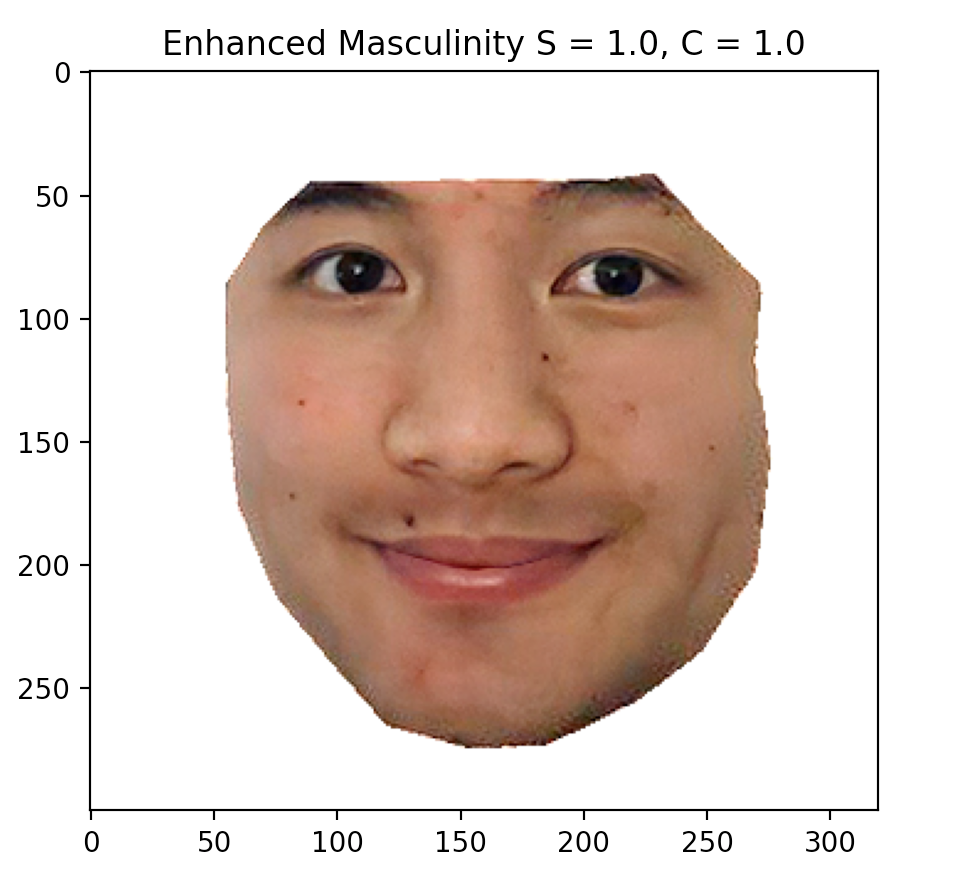

We can also manipulate the face to become more "feminine" or "masculine" based on the difference between the averages of the gendered subsets. Below are the mean faces computed from the subsets of female and male faces.

Within caricature.py, I programmed a new enhance function which scales an input image (my face) between two extreme images of a trait. A scaling factor of 1 scales the trait from low image to high image. This essentially accomplishes the same task as the caricature function, except the difference vectors are defined as the direction between the high and low images instead of being computed with respect to the input image to be transformed.
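For the shape component only, the idea might be sketched as follows. The function name and signature are hypothetical and are not taken from caricature.py; the color component is omitted here.

```python
import numpy as np


def enhance_shape(input_pts, low_pts, high_pts, scale):
    """Shift the input landmarks along the direction from the low to the high shape.

    scale = 1 adds the full (high - low) difference; negative values move
    toward the low end of the trait instead.
    """
    return input_pts + scale * (high_pts - low_pts)


# Made-up single-landmark example.
my_pts = np.array([[50.0, 60.0]])
low_pts = np.array([[48.0, 58.0]])
high_pts = np.array([[52.0, 61.0]])
shifted = enhance_shape(my_pts, low_pts, high_pts, 1.0)
```

Unlike the caricature, the difference vector here does not depend on the input face, so the same direction can be applied to any face labeled with the same landmarks.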

One implementation difference to note is that the enhance function needs to warp the color vector to the input image shape in order for the scaled difference to be summed correctly with the input image. The overall process is as follows:

Observations: face becomes lighter and narrower, with darkening around the eyes and more vivid and plump lips.

Observations: face becomes darker and wider, with thicker eyebrows, appearance of facial hair, and thinning of the lips.

Note: For both the masculine and feminine shape transformations, the face is translated vertically, but the image was cropped so that shape comparisons would be more apparent.