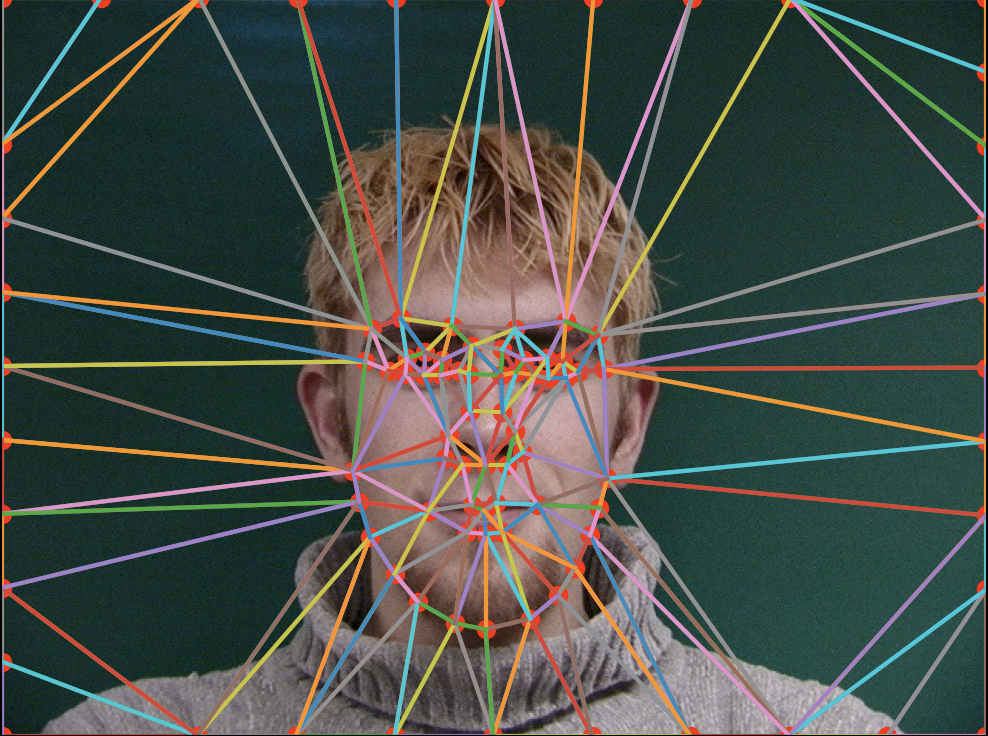

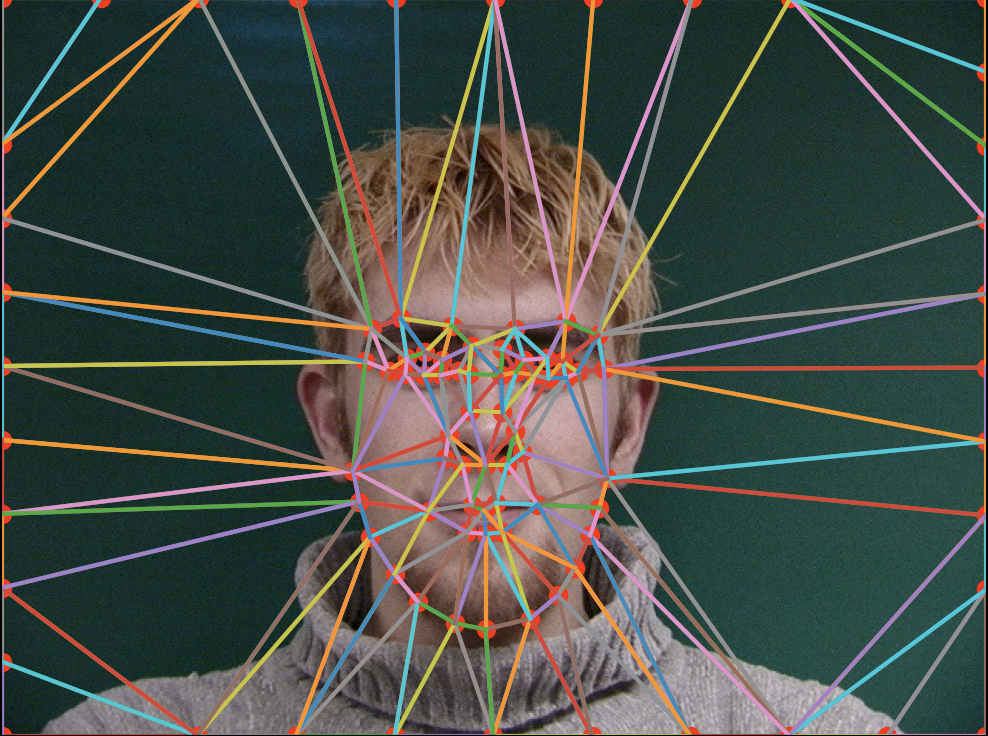

For this assignment, I used the Imm Dataset (https://web.archive.org/web/20210305094647/http://www2.imm.dtu.dk/~aam/datasets/datasets.html) and followed its annotation format. A sample image from the dataset and one of my own photos are shown below. Both are annotated and triangulated in the same way. I also added keypoints along the image border so that morphing the face does not greatly distort the rest of the image. The triangulation is computed with the Delaunay algorithm.
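The border keypoints and the triangulation can be sketched as follows. This is a minimal illustration, not the exact code used here: the helper names `add_border_points` and `triangulate` are hypothetical, the choice of four corners plus four edge midpoints is an assumption, and so is computing the triangulation on the average of the two point sets so that both images share one set of triangle indices. It relies on `scipy.spatial.Delaunay`.

```python
import numpy as np
from scipy.spatial import Delaunay


def add_border_points(pts, width, height):
    """Append 8 border keypoints (4 corners + 4 edge midpoints) to pts.

    pts is an (N, 2) array of (x, y) coordinates. Which border points to
    add is an assumption made for this sketch.
    """
    xs = [0.0, (width - 1) / 2.0, width - 1.0]
    ys = [0.0, (height - 1) / 2.0, height - 1.0]
    border = [(x, y) for x in xs for y in ys
              if not (x == xs[1] and y == ys[1])]  # skip the image center
    return np.vstack([np.asarray(pts, dtype=float), border])


def triangulate(pts1, pts2):
    """Delaunay-triangulate the mean of two point sets.

    Returns (mean_pts, tri) where tri is an (M, 3) array of vertex indices
    that can be applied to pts1, pts2, or any blend of the two.
    """
    mean_pts = (np.asarray(pts1, float) + np.asarray(pts2, float)) / 2.0
    return mean_pts, Delaunay(mean_pts).simplices
```

Because `tri` holds indices rather than coordinates, the same triangle list stays valid for every intermediate shape in a morph.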

First, the average shape is computed as the mean of the two point sets. Then I created a new canvas of the same size as the input images, onto which the midface is painted. To map pixels onto the canvas, I used an inverse affine transformation for each pair of corresponding triangles. Concretely, for each pixel inside a triangle of the average shape, its corresponding position in the original image is computed by the inverse affine transformation. If that position is not at integer coordinates, bilinear interpolation is used to compute the pixel value, which is then copied onto the canvas. The final midface is the average of the two input images after each has been mapped onto the canvas. The mid-way face of the same person smiling and not smiling in the Imm Dataset is shown below.
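The per-triangle inverse warp described above could look roughly like the sketch below. It is an illustration under assumptions rather than the code used for the results: the names `bilinear_sample` and `warp_triangle` are hypothetical, points are stored as (x, y), the canvas is a float array, and triangles are assumed non-degenerate. The affine map is expressed through barycentric coordinates: a canvas pixel's barycentric coordinates in the destination triangle, applied to the source triangle's vertices, give its position in the source image.

```python
import numpy as np


def bilinear_sample(img, x, y):
    """Sample img (H x W or H x W x C) at float coordinate arrays x, y."""
    h, w = img.shape[:2]
    x = np.clip(x, 0, w - 1)
    y = np.clip(y, 0, h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    if img.ndim == 3:  # broadcast the weights over color channels
        fx, fy = fx[:, None], fy[:, None]
    top = (1 - fx) * img[y0, x0] + fx * img[y0, x1]
    bottom = (1 - fx) * img[y1, x0] + fx * img[y1, x1]
    return (1 - fy) * top + fy * bottom


def warp_triangle(img, canvas, src_tri, dst_tri):
    """Fill the dst_tri region of canvas with pixels pulled from img.

    src_tri and dst_tri are (3, 2) arrays of (x, y) vertices; canvas is
    modified in place.
    """
    src_tri = np.asarray(src_tri, dtype=float)
    dst_tri = np.asarray(dst_tri, dtype=float)
    h, w = canvas.shape[:2]
    # Only visit pixels inside the destination triangle's bounding box.
    x_min = max(int(np.floor(dst_tri[:, 0].min())), 0)
    x_max = min(int(np.ceil(dst_tri[:, 0].max())), w - 1)
    y_min = max(int(np.floor(dst_tri[:, 1].min())), 0)
    y_max = min(int(np.ceil(dst_tri[:, 1].max())), h - 1)
    if x_min > x_max or y_min > y_max:
        return
    xs, ys = np.meshgrid(np.arange(x_min, x_max + 1),
                         np.arange(y_min, y_max + 1))
    pix = np.vstack([xs.ravel(), ys.ravel(), np.ones(xs.size)])  # 3 x N
    # Columns of dst_h / src_h are homogeneous triangle vertices.
    dst_h = np.vstack([dst_tri.T, np.ones(3)])
    src_h = np.vstack([src_tri.T, np.ones(3)])
    bary = np.linalg.solve(dst_h, pix)          # barycentric coords, 3 x N
    inside = np.all(bary >= -1e-9, axis=0)      # pixels inside dst_tri
    src_xy = (src_h @ bary[:, inside])[:2]      # inverse-mapped positions
    rows = pix[1, inside].astype(int)
    cols = pix[0, inside].astype(int)
    canvas[rows, cols] = bilinear_sample(img, src_xy[0], src_xy[1])
```

Calling `warp_triangle` once per triangle, with `dst_tri` taken from the average shape and `src_tri` from the original annotation, paints the whole canvas. Iterating over destination pixels rather than source pixels is what avoids holes in the result.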

Constructing a morph sequence is very similar to constructing the midface, except that the target shape is not the plain average of the two point sets but a weighted average whose weight changes over time. Let's define two weights: the warp fraction, which controls the shape, and the dissolve fraction, which controls how the colors of the two warped images are blended. Each frame can be generated by a function with an interface like

`morph_image_pairs(img1, img2, pts1, pts2, tri, warp_frac, dissolve_frac)`
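The two weights can be illustrated with a short sketch. The helper names `intermediate_shape`, `cross_dissolve`, and `frame_fractions` are hypothetical, and two conventions here are assumptions: a fraction of 0 corresponds to the first image and 1 to the second, and both fractions ramp linearly over the sequence. The triangle-by-triangle warp of each image into the intermediate shape is left out.

```python
import numpy as np


def intermediate_shape(pts1, pts2, warp_frac):
    """Weighted average of two point sets; warp_frac=0 gives pts1."""
    pts1 = np.asarray(pts1, dtype=float)
    pts2 = np.asarray(pts2, dtype=float)
    return (1.0 - warp_frac) * pts1 + warp_frac * pts2


def cross_dissolve(warped1, warped2, dissolve_frac):
    """Blend two images already warped into the same shape."""
    warped1 = np.asarray(warped1, dtype=float)
    warped2 = np.asarray(warped2, dtype=float)
    return (1.0 - dissolve_frac) * warped1 + dissolve_frac * warped2


def frame_fractions(num_frames):
    """Linear schedule of fractions from 0 to 1, one value per frame."""
    return np.linspace(0.0, 1.0, num_frames)
```

With this convention, the midface is the special case `warp_frac = dissolve_frac = 0.5`. A full sequence would loop over `frame_fractions(n)`, warp both images into `intermediate_shape(pts1, pts2, t)`, and blend them with `cross_dissolve(..., t)`.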