In this project, I used projective warping to rectify images and to blend multiple images into a mosaic/panorama.

I shot the photos with my smartphone camera and transferred them to my computer. When shooting the photos for the mosaic, I tried to rotate the camera while avoiding translation. I also used the AE/AF lock feature on iOS to keep the exposure consistent between shots.

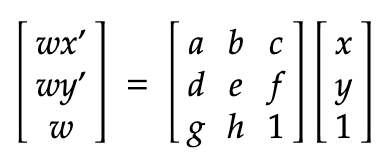

We first have to compute the homography matrix H that defines the warp.

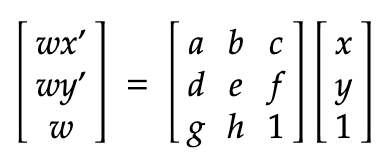

Next, we can solve for x' and y' by dividing wx' and wy' by w.

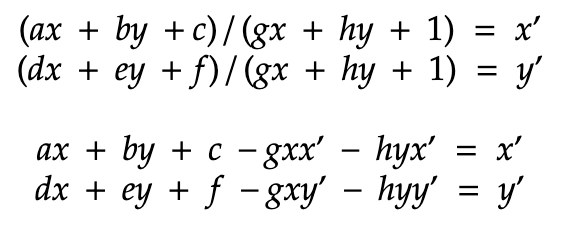

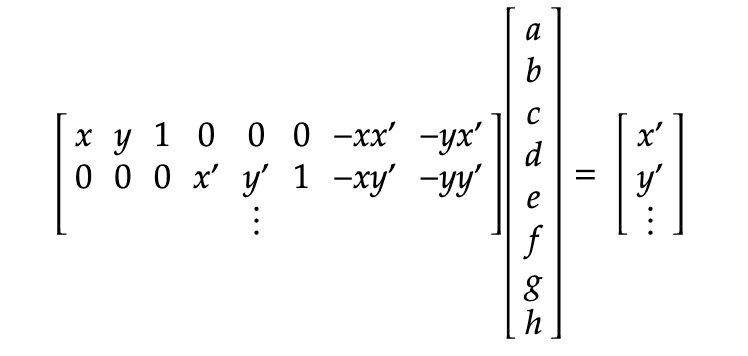

Finally, we can write the equations in matrix form and use least squares to solve for the optimal [a b c ...] vector.
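
For reference, here is one way to write that system out. It assumes the entries of H are named a through h with the bottom-right entry fixed at 1, which matches the [a b c ...] vector above but is otherwise my own choice of notation. Multiplying the expressions for x' and y' through by the denominator gives two linear equations per point correspondence:

$$
\begin{aligned}
x' = \frac{ax + by + c}{gx + hy + 1} \quad &\Longrightarrow \quad ax + by + c - g\,x x' - h\,y x' = x' \\
y' = \frac{dx + ey + f}{gx + hy + 1} \quad &\Longrightarrow \quad dx + ey + f - g\,x y' - h\,y y' = y'
\end{aligned}
$$

$$
\begin{bmatrix}
x & y & 1 & 0 & 0 & 0 & -x x' & -y x' \\
0 & 0 & 0 & x & y & 1 & -x y' & -y y'
\end{bmatrix}
\begin{bmatrix} a \\ b \\ c \\ d \\ e \\ f \\ g \\ h \end{bmatrix}
=
\begin{bmatrix} x' \\ y' \end{bmatrix}
$$

Stacking these two rows for every correspondence gives a 2n x 8 system, which is exactly determined for n = 4 and overdetermined for n > 4.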

I used np.linalg.lstsq to find h and then reshaped it into a 3x3 matrix. The minimum number of point correspondences is 4, since each one contributes two equations toward the 8 unknowns, but selecting more points gives a more stable solution.
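
A minimal sketch of this step is below. The function name compute_homography and the argument layout (n x 2 arrays of (x, y) points) are placeholders of mine, and the sketch assumes the bottom-right entry of H is fixed at 1, so it is not necessarily identical to the code I actually ran.

```python
import numpy as np

def compute_homography(src_pts, dst_pts):
    """Least-squares 3x3 homography mapping src_pts (n x 2) onto dst_pts (n x 2).

    Hypothetical helper: assumes H[2, 2] == 1 and at least 4 correspondences.
    """
    src = np.asarray(src_pts, dtype=float)
    dst = np.asarray(dst_pts, dtype=float)
    if src.shape != dst.shape or src.ndim != 2 or src.shape[1] != 2 or len(src) < 4:
        raise ValueError("need two matching (n, 2) arrays with n >= 4")

    x, y = src[:, 0], src[:, 1]
    xp, yp = dst[:, 0], dst[:, 1]
    zeros, ones = np.zeros_like(x), np.ones_like(x)

    # Two equations per correspondence: one for x', one for y'.
    rows_x = np.stack([x, y, ones, zeros, zeros, zeros, -x * xp, -y * xp], axis=1)
    rows_y = np.stack([zeros, zeros, zeros, x, y, ones, -x * yp, -y * yp], axis=1)
    A = np.concatenate([rows_x, rows_y])
    b = np.concatenate([xp, yp])

    # Solve for the 8 unknowns, then append the fixed 1 and reshape to 3x3.
    h, *_ = np.linalg.lstsq(A, b, rcond=None)
    return np.append(h, 1.0).reshape(3, 3)
```

With exactly 4 points the system is square and lstsq returns the exact solution; with more points it returns the fit that minimizes the squared residual of these linearized equations.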

I then wrote the function warpImage to warp an image with the matrix H. I use H to calculate the new bounding box of the image, and the inverse of H to sample the pixels with interpn. My implementation can either compute the warped image dimensions automatically or work with a fixed canvas. The image returned by the function also has an alpha channel, so it is easy to distinguish the image from the blank background.
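
Here is a rough sketch of the automatic-bounding-box case. It assumes a float image of shape (height, width, channels), uses scipy.interpolate.interpn with its default linear interpolation, and returns the canvas offset alongside the RGBA result; the name warp_image and the return format are illustrative rather than my exact code.

```python
import numpy as np
from scipy.interpolate import interpn

def warp_image(img, H):
    """Warp a (h, w, c) float image by homography H; returns (RGBA image, (x_min, y_min))."""
    h, w = img.shape[:2]

    # Forward-map the four source corners with H to find the output bounding box.
    corners = np.array([[0, 0, 1], [w - 1, 0, 1], [w - 1, h - 1, 1], [0, h - 1, 1]], dtype=float).T
    warped = H @ corners
    warped = warped[:2] / warped[2]
    x_min, y_min = np.floor(warped.min(axis=1)).astype(int)
    x_max, y_max = np.ceil(warped.max(axis=1)).astype(int)

    # Homogeneous coordinates of every pixel on the output canvas.
    xs, ys = np.meshgrid(np.arange(x_min, x_max + 1), np.arange(y_min, y_max + 1))
    dest = np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)])

    # Inverse-map each output pixel back into the source image.
    src = np.linalg.inv(H) @ dest
    src = src[:2] / src[2]

    # interpn indexes as (row, col) = (y, x); samples outside the source are filled with 0.
    sample_at = np.stack([src[1], src[0]], axis=1)
    grid = (np.arange(h), np.arange(w))
    rgb = interpn(grid, img, sample_at, bounds_error=False, fill_value=0.0)
    alpha = interpn(grid, np.ones((h, w)), sample_at, bounds_error=False, fill_value=0.0)

    out = np.dstack([rgb.reshape(xs.shape + (img.shape[2],)), alpha.reshape(xs.shape)])
    return out, (int(x_min), int(y_min))
```

Sampling with the inverse of H, rather than pushing source pixels forward, means every output pixel gets exactly one value and no holes appear in the result.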

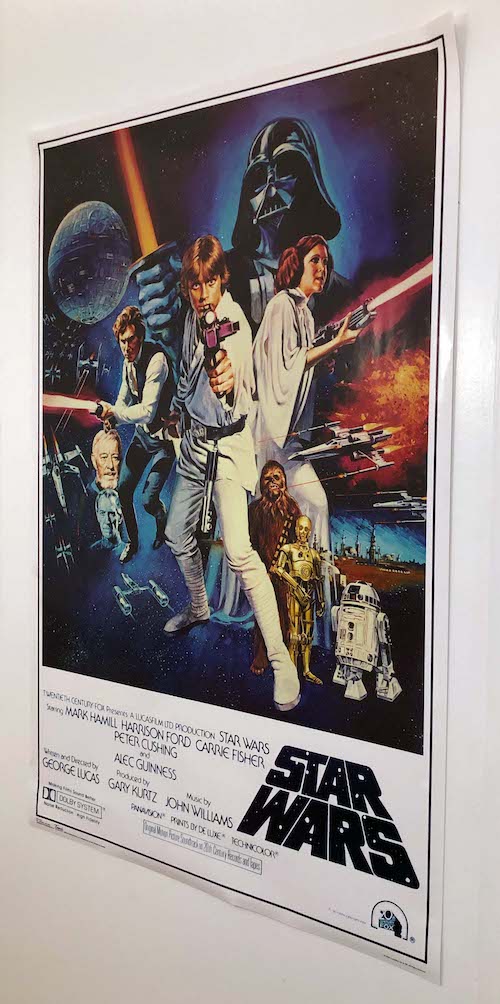

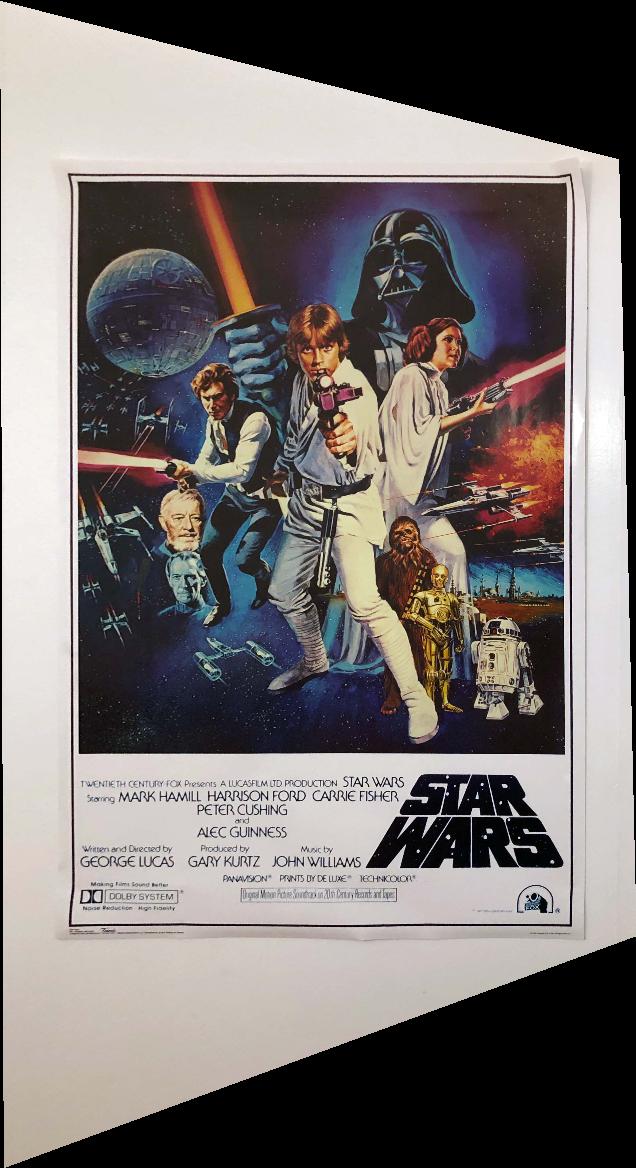

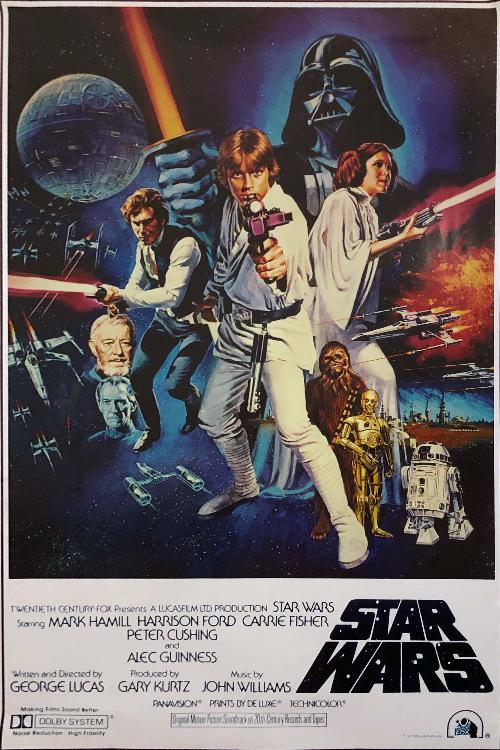

Image rectification means transforming a picture of a planar surface so that the plane squarely faces the camera. I defined points at the corners of the plane I wanted to rectify, and computed the transform that makes those corners form a rectangle.


To blend multiple images together, we first have to define correspondences between matching keypoints. In the image below, I hand-selected points at easy-to-identify corner locations that were present in both images.


I then warped Image B so that its points lined up with the points on Image A. I initially used alpha-weighting to blend the images: in areas where the images overlapped, I took the average of the two pixels at that location.
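
As a sketch, the averaging can be written as below. It assumes both warped images are float RGBA arrays already placed on the same canvas, with alpha equal to 1 inside the image and 0 outside; average_blend is a name I made up for this example.

```python
import numpy as np

def average_blend(img_a, img_b):
    """Alpha-weighted average of two RGBA float images that share one canvas."""
    alpha_a = img_a[..., 3:4]
    alpha_b = img_b[..., 3:4]
    total = alpha_a + alpha_b

    # Where only one image covers a pixel this keeps that image;
    # where both cover it, the result is the mean of the two.
    rgb = (img_a[..., :3] * alpha_a + img_b[..., :3] * alpha_b) / np.maximum(total, 1e-8)
    alpha = np.clip(total, 0.0, 1.0)
    return np.concatenate([rgb, alpha], axis=-1)
```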

Because of differences in exposure, you can see a pretty clear seam in the image, and the left side of the tree at the top-right of the image is faded. In addition, if the images are not perfectly aligned or were not taken from the same location, alpha-weighting produces ghosting/blurriness in the overlapping regions.

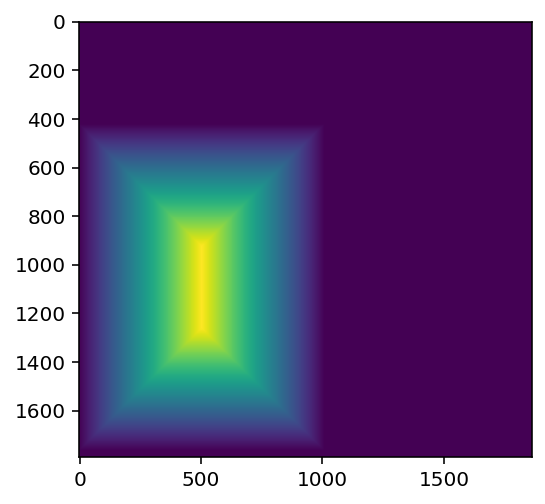

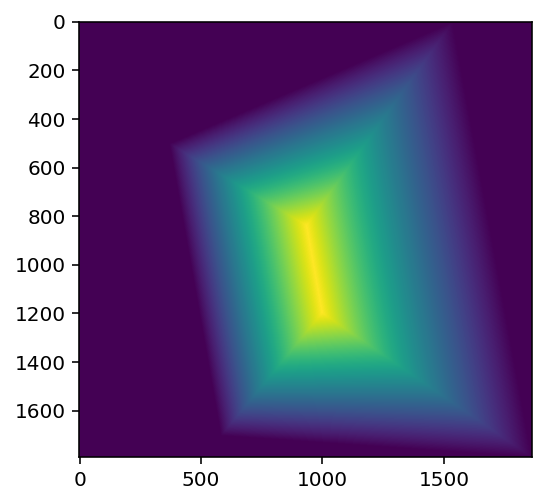

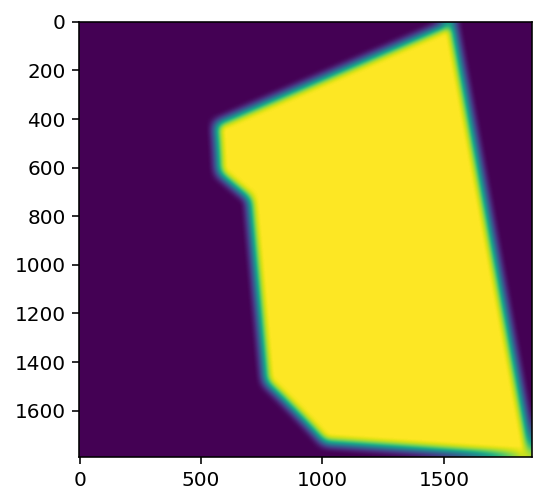

A more advanced strategy is to blend based on a distance function. I created a function bwdist (that emulates MATLAB's bwdist) that sets each pixel's value to its distance from the nearest edge of the image.
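
One way to get this kind of distance map in Python is scipy.ndimage.distance_transform_edt, which I am swapping in here for illustration in place of my own bwdist. The sketch assumes the input is the warped image's alpha channel, and pads the mask with one pixel of background so that the canvas border also counts as an edge.

```python
import numpy as np
from scipy.ndimage import distance_transform_edt

def edge_distance(alpha):
    """Distance from each covered pixel to the nearest uncovered pixel (0 outside the image)."""
    mask = alpha > 0
    # Pad with background so an image touching the canvas border still has an edge there.
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    return distance_transform_edt(padded)[1:-1, 1:-1]
```

Pixels deep inside the image get large values and pixels near its boundary get small ones, which is what makes the map useful for deciding which image to trust at each location.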


Using these distance functions, I created a mask for each image based on whether that image has the maximum dist value at each point. For image A, the mask is (distA > distB). I also added a Gaussian blur so the transition isn't as harsh.
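
A small sketch of the masking step follows. It assumes the two images and their distance maps are already on a shared canvas, takes the mask for image B to be the complement of the blurred mask for image A, and uses an arbitrary default blur width; the function name and the sigma parameter are illustrative.

```python
import numpy as np
from scipy.ndimage import gaussian_filter

def distance_mask_blend(rgb_a, rgb_b, dist_a, dist_b, sigma=5.0):
    """Blend two (h, w, 3) images using a blurred (dist_a > dist_b) mask."""
    # Hard mask: 1 where image A is farther from its own edge than image B is.
    mask_a = (dist_a > dist_b).astype(float)
    # Soften the seam; sigma is an assumed value, not one taken from the writeup.
    mask_a = gaussian_filter(mask_a, sigma=sigma)[..., None]
    return rgb_a * mask_a + rgb_b * (1.0 - mask_a)
```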


When I blend the images with these masks, there is a bit of ghosting at the edges because of the blur. Using the distance function (which we already have), I inset the image by a fixed amount to crop out the blurry parts. The cropped result has no ghosting, and the seam is nearly invisible.
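
The inset can be approximated by thresholding the distance maps, as in the sketch below. It assumes that keeping pixels where the larger of the two distances exceeds a fixed margin is a good enough stand-in for shrinking the mosaic outline; the function name and the default margin are made up for the example.

```python
import numpy as np

def inset_alpha(dist_a, dist_b, inset=20.0):
    """Alpha mask that drops pixels within `inset` of the outer edge of the mosaic."""
    # A pixel survives if at least one image covers it with room to spare.
    return (np.maximum(dist_a, dist_b) > inset).astype(float)
```

Pixels near the seam are kept because they lie deep inside at least one of the two images, while pixels near the outer boundary are removed.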


Here are a few more mosaics I made:


With a bit of cropping and lighting adjustments in Photoshop/Lightroom, I can get a pretty clean-looking result. The T-Rex's name is Osborn, btw.

I thought the way we set up the least squares problem for finding the homography matrix was pretty interesting. This was also my first time seeing the distance transform and I thought the application for masking was clever.