In the previous part of this project, I was able to stitch images together by manually choosing corresponding points between images. However, that task required a lot of time and precision. In this part of the project, I explore ways to automatically choose and match features between images so that they can be stitched automatically. This part of the project mostly follows the MOPS research paper, with some modifications/simplifications. Additionally, I also look into automatic mosaic detection for fun.

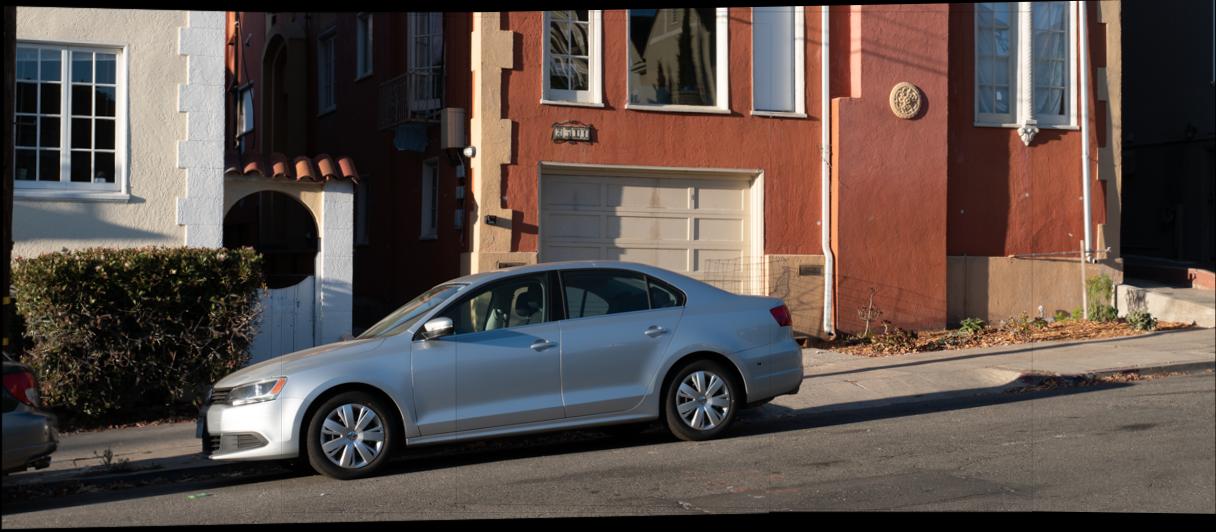

These are the same pictures used in the first part of this project.

Weren't we told as kids not to give our addresses out on the internet?

Well, at least no one told us anything about giving away our neighbors' addresses.

Hey, you can see my house from here!

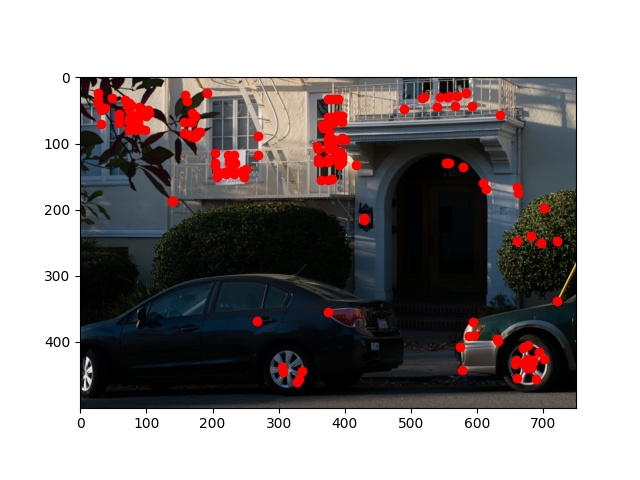

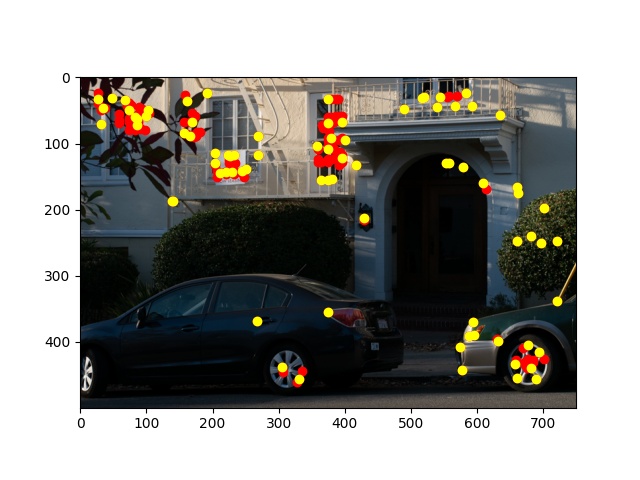

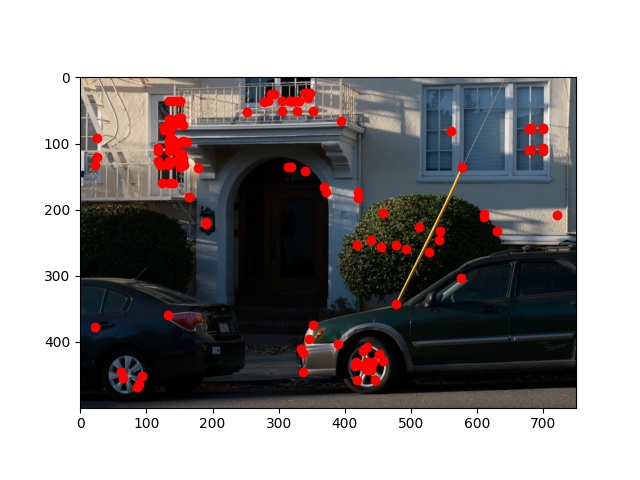

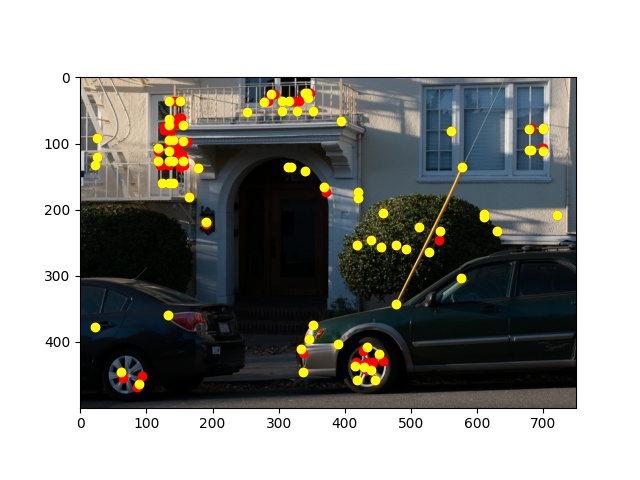

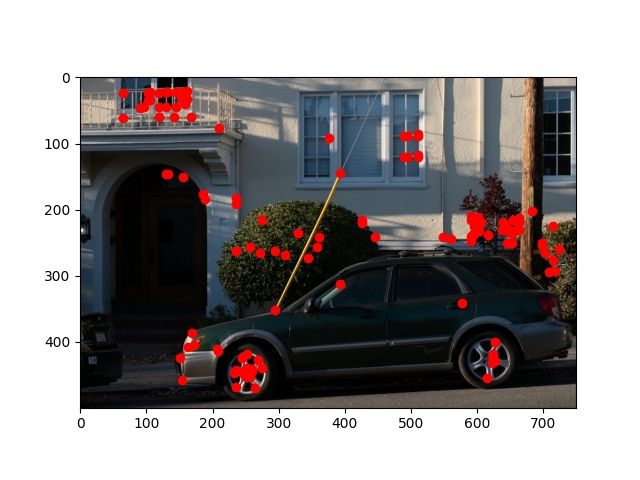

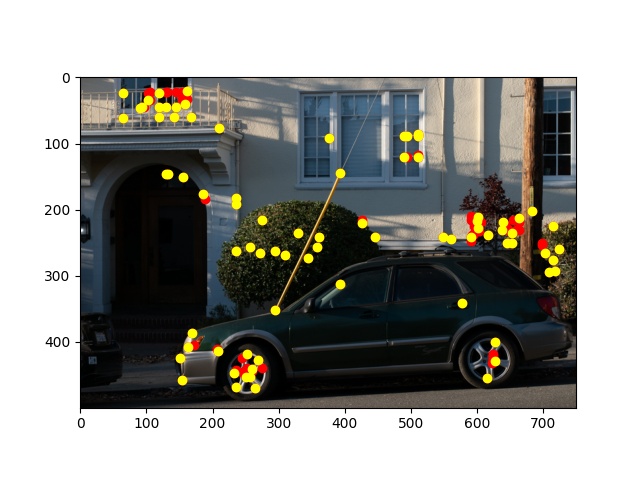

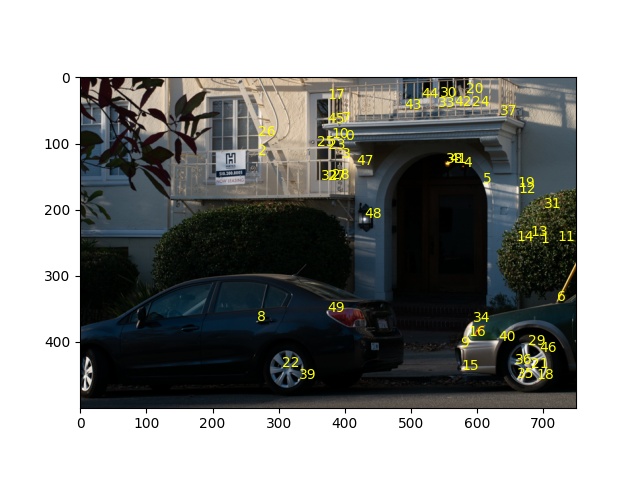

To detect an image's corner features, my code looks for Harris corners that have a corner strength at least as strong as a certain threshold. The code for Harris corner detection was given to us, and I set the threshold manually for each set of images. Once the Harris corners are detected, I run adaptive non-maximal suppression (ANMS) on them, in order to push the algorithm to choose corner points that are more spread out.

The Harris threshold was a bit funny. For some sets of images, I found that I needed a really low threshold (0.28 and 0.3) to get good corner features. For others, 0.7 worked just fine.

For ANMS, I found success with choosing the best 80-100 points, and used a robustness factor c_robust of 0.9.
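To make the ANMS step concrete, here is a minimal sketch, assuming the corners arrive as an array of coordinates plus an array of Harris strengths. The function name `anms` and the parameter `n_keep` are hypothetical; only `c_robust` and the 80-100 point range come from the text above. Each corner gets a suppression radius (the distance to the nearest corner that is sufficiently stronger), and the corners with the largest radii are kept.

```python
import numpy as np

def anms(coords, strengths, n_keep=100, c_robust=0.9):
    """Return indices of the n_keep corners with the largest suppression radii.

    coords: (N, 2) corner coordinates; strengths: (N,) Harris responses.
    Names and defaults are illustrative assumptions, not the original code.
    """
    coords = np.asarray(coords, dtype=float)
    strengths = np.asarray(strengths, dtype=float)
    # Squared distance between every pair of corners.
    diff = coords[:, None, :] - coords[None, :, :]
    dist2 = np.sum(diff ** 2, axis=-1)
    # Corner j suppresses corner i when strengths[i] < c_robust * strengths[j].
    suppresses = strengths[:, None] < c_robust * strengths[None, :]
    # Suppression radius: distance to the nearest suppressing corner
    # (infinite for corners that nothing suppresses).
    radii2 = np.where(suppresses, dist2, np.inf).min(axis=1)
    # Keep the corners with the largest radii.
    order = np.argsort(-radii2, kind="stable")
    return order[:n_keep]
```

The pairwise distance matrix is O(N^2) in memory, which should be fine for a few thousand Harris points but may need chunking for many more.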

For the following images, Harris points are plotted on the left, and ANMS points (overlaid on Harris) on the right.

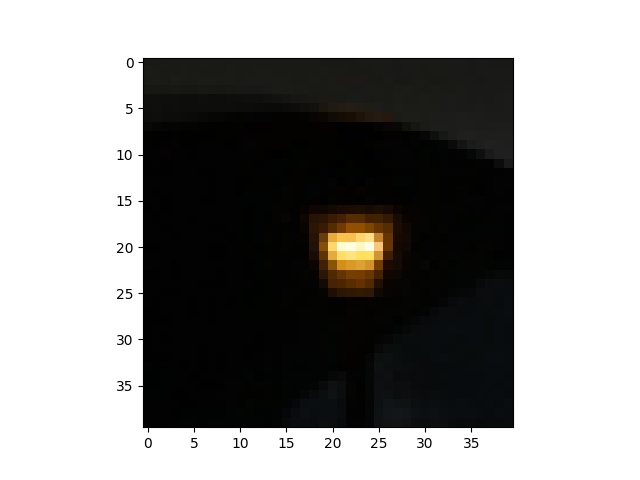

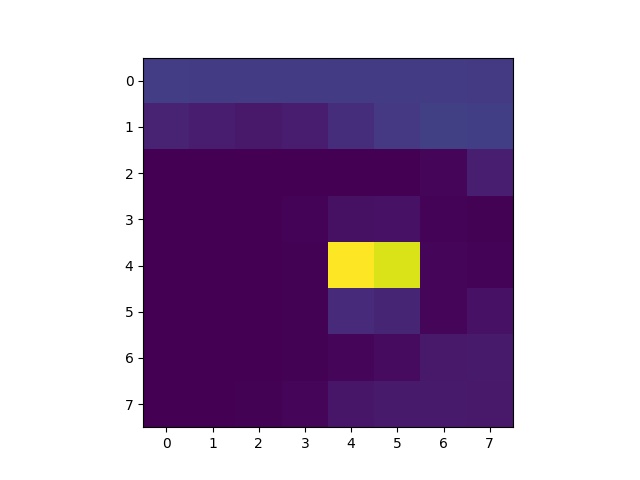

Feature descriptors are extracted as follows:

Choose the following parameters: spacing, sigma, and pyramid_level

Using the given sigma, create a Gaussian kernel and blur the image pyramid_level number of times (mimics sampling from the pyramid_level of a Gaussian pyramid).

For each corner:

a. Extract an (8 x spacing) by (8 x spacing) patch, centered at the corner.

b. Sample pixels at the given spacing rate from this patch to make a smaller 8 x 8 patch.

c. Save the patch

I found that a spacing of about 5 (as recommended in the referenced MOPS paper) works pretty well. I also used a sigma of 1, and let the pyramid level correspond to about floor(log2(spacing)).
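The extraction steps above can be sketched roughly as follows. This is a sketch under assumptions: the image is grayscale, corners are integer (row, col) pairs, corners too close to the border are skipped, and the helper names `gaussian_blur` and `extract_descriptors` are hypothetical. The blur is a plain separable Gaussian written with NumPy only.

```python
import numpy as np

def gaussian_blur(img, sigma=1.0):
    """Separable Gaussian blur with reflect padding (illustrative helper)."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="reflect")
    # Filter along rows, then along columns.
    rows = np.apply_along_axis(
        lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(
        lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)

def extract_descriptors(img, corners, spacing=5, sigma=1.0, pyramid_level=2):
    """Return (kept_corners, descriptors); each descriptor is a flat 8x8 patch."""
    blurred = np.asarray(img, dtype=float)
    # Blur pyramid_level times to mimic sampling from that pyramid level.
    for _ in range(pyramid_level):
        blurred = gaussian_blur(blurred, sigma)
    half = 4 * spacing  # half the side of the (8 * spacing) patch
    height, width = blurred.shape
    kept, descs = [], []
    for r, c in corners:
        # Assumption: skip corners whose patch would leave the image.
        if r - half < 0 or c - half < 0 or r + half > height or c + half > width:
            continue
        patch = blurred[r - half:r + half, c - half:c + half]
        small = patch[::spacing, ::spacing]  # subsample down to 8 x 8
        kept.append((r, c))
        descs.append(small.ravel())
    return np.array(kept), np.array(descs)
```

With the default spacing of 5, the pyramid level of 2 matches floor(log2(5)).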

Below: Original photo, Sample Patch, Featurized Patch

To match feature descriptors between a pair of images, we use Lowe's method. For each corner point in each image, Lowe's method looks for the two corner points in the other image that best match the corner point of interest (a match's error is computed as the SSD between the two descriptors). If the ratio of the first (best) error to the second error is below our desired threshold, then we consider the match. Based on graph 6b from the referenced MOPS paper, it looks like 0.667 might be a good threshold. We then keep only the corner pairs that were considered in both directions (from each image to the other), and return them as the list of matching pairs. The best performance is obtained when the threshold is adjusted for each set of images.
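As an illustration of the matching step, here is a minimal sketch, assuming descriptors are stored as rows of a 2D array and each image has at least two descriptors. The function names `ratio_matches` and `mutual_matches` are hypothetical. The ratio test is written as `best < threshold * second` to avoid dividing by zero.

```python
import numpy as np

def ratio_matches(desc_a, desc_b, threshold=0.667):
    """Map each accepted row index of desc_a to its best match in desc_b."""
    desc_a = np.asarray(desc_a, dtype=float)
    desc_b = np.asarray(desc_b, dtype=float)
    # SSD between every descriptor in A and every descriptor in B.
    ssd = ((desc_a[:, None, :] - desc_b[None, :, :]) ** 2).sum(axis=-1)
    order = np.argsort(ssd, axis=1)
    rows = np.arange(len(desc_a))
    best = ssd[rows, order[:, 0]]
    second = ssd[rows, order[:, 1]]
    # Ratio test: best error must be clearly smaller than the second best.
    accepted = best < threshold * second
    return {int(i): int(order[i, 0]) for i in np.flatnonzero(accepted)}

def mutual_matches(desc_a, desc_b, threshold=0.667):
    """Keep only pairs accepted in both directions (A to B and B to A)."""
    a_to_b = ratio_matches(desc_a, desc_b, threshold)
    b_to_a = ratio_matches(desc_b, desc_a, threshold)
    return [(i, j) for i, j in a_to_b.items() if b_to_a.get(j) == i]
```

The returned pairs are (index in image A, index in image B), which can then be used to look up the corner coordinates.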

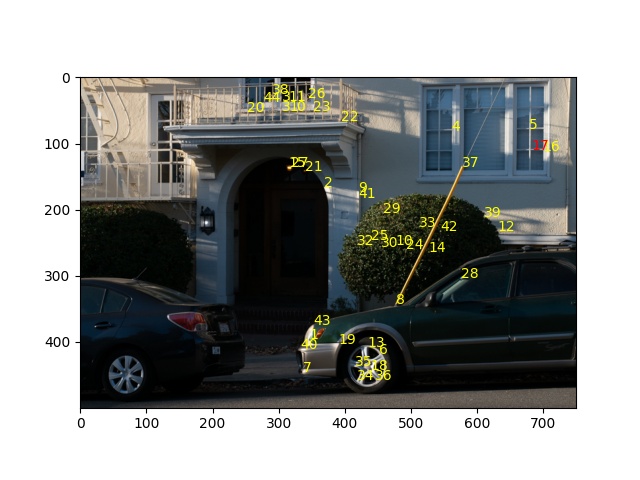

Let's look at a sample matching between two of my home images.

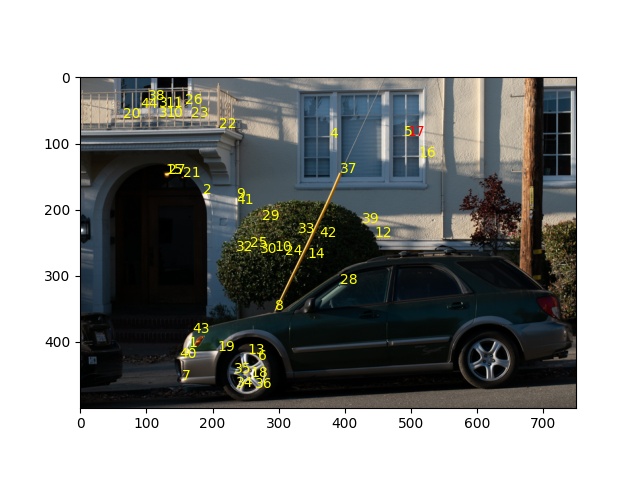

Once we have our pairs of matching corners/descriptors, we want to make sure that there are no bad pairs (pairs that are not true correspondences), since least-squares, our usual method for computing homographies, is very susceptible to outliers. To do so, we run RANSAC: at each iteration, we randomly choose a set of 4 pairs of corners, compute a homography from them, and count the inliers (points that the homography repositions accurately), keeping track of the set that results in the highest number of inliers. For determining inliers, I found that an epsilon (maximum distance) of about 10 works best. Then, using the inliers of the best set of 4 points, we compute a final homography for the transformation. For most images, I was able to get really good homographies from just 1000 iterations. Letting the program run for longer gives a better chance of finding a better homography, though, so I used between 5,000 and 20,000 iterations in my final results.
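The RANSAC loop described above might look roughly like this. It is a sketch, not the project's actual code: the helper names (`fit_homography`, `apply_homography`, `ransac_homography`) are hypothetical, points are assumed to be (x, y) rows, and the homography is fit by least squares with the bottom-right entry fixed to 1.

```python
import numpy as np

def fit_homography(src, dst):
    """Least-squares homography mapping src to dst (each (N, 2), N >= 4)."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
    A = np.array(rows, dtype=float)
    b = np.asarray(dst, dtype=float).ravel()
    h, *_ = np.linalg.lstsq(A, b, rcond=None)
    return np.append(h, 1.0).reshape(3, 3)

def apply_homography(H, pts):
    """Apply H to (N, 2) points and divide out the homogeneous coordinate."""
    pts_h = np.column_stack([pts, np.ones(len(pts))])
    out = pts_h @ H.T
    return out[:, :2] / out[:, 2:3]

def ransac_homography(src, dst, n_iters=1000, eps=10.0, seed=0):
    """Return (H, inlier_mask) using 4-point samples and an eps distance test."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(n_iters):
        sample = rng.choice(len(src), size=4, replace=False)
        H = fit_homography(src[sample], dst[sample])
        with np.errstate(divide="ignore", invalid="ignore"):
            err = np.linalg.norm(apply_homography(H, src) - dst, axis=1)
        inliers = err < eps  # NaN errors compare False and count as outliers
        if inliers.sum() > best.sum():
            best = inliers
    if best.sum() < 4:
        raise ValueError("RANSAC did not find at least 4 inliers")
    # Final homography from all inliers of the best 4-point sample.
    return fit_homography(src[best], dst[best]), best
```

The `seed` argument is only there to make runs repeatable; the defaults for `n_iters` and `eps` mirror the values mentioned in the text.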

In the following images, points selected by RANSAC are labeled in yellow, and points rejected are labeled in red. In some cases, we're lucky and end up with a perfect pairing right off the bat:

Other times, we get a few outliers:

Point 17 definitely looks pretty similar in both images.

As the saying goes, "snitches get stitches"

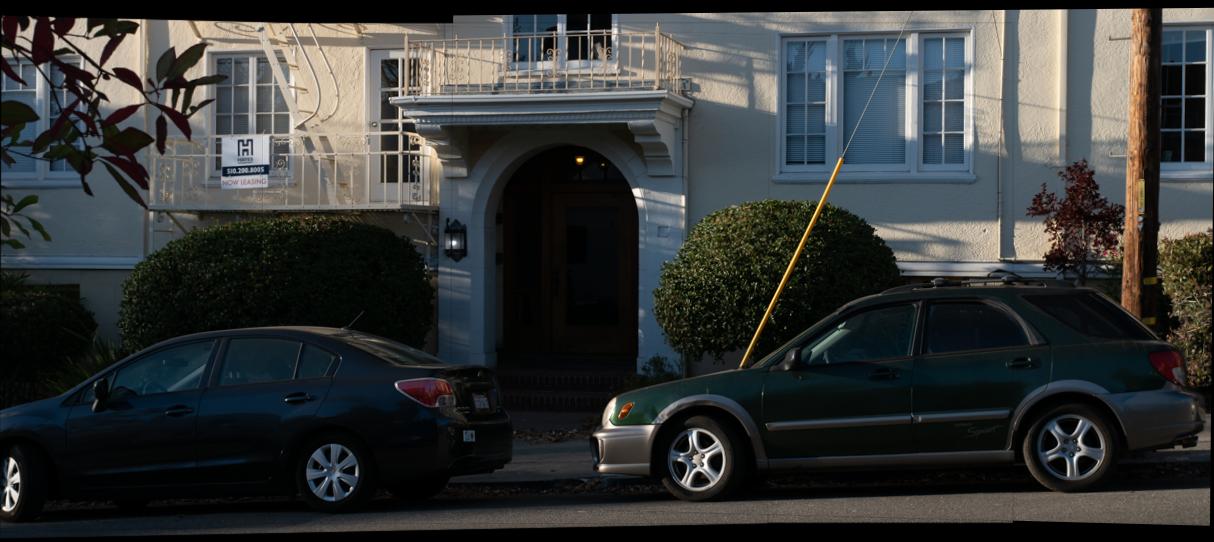

Once we've used RANSAC to compute the homographies between our images, the process for computing a mosaic is largely the same as in Part A. In my implementation, one image is chosen to be the center image, so all feature pairs/homographies are between peripheral images and the center image. Each image set has its own tuned set of hyperparameters for each of the steps mentioned above.

Auto-Stitched:

Manually-Stitched:

Auto-Stitched:

Manually-Stitched:

Auto-Stitched:

Manually-Stitched:

Snitches (that pair well together) get stitches?

To do automatic mosaic detection, I progressively form groups of images. An image is assigned to a group if the group contains at least one other image that looks "similar" enough to it. Similarity is measured by the number of matching feature descriptor pairs (found with the method described above) between the two images: the image is accepted into the group if that number is at least a certain threshold. If no existing group qualifies, a new group is made.

For each group, the center image is the image with the highest total number of matching pairs with the other images in the group.
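A minimal sketch of the grouping and center selection, assuming the number of matching descriptor pairs between every two images has already been computed into a symmetric table. The names `group_images`, `choose_center`, and the `min_matches` default are hypothetical; the actual threshold used is not stated here.

```python
def group_images(match_counts, min_matches=10):
    """Greedily assign each image to the first group with a similar image.

    match_counts[i][j] is the number of matching descriptor pairs between
    images i and j (assumed precomputed and symmetric).
    """
    groups = []
    for i in range(len(match_counts)):
        for group in groups:
            # Join the group if at least one member is similar enough.
            if any(match_counts[i][j] >= min_matches for j in group):
                group.append(i)
                break
        else:
            # No existing group qualifies, so start a new one.
            groups.append([i])
    return groups

def choose_center(group, match_counts):
    """Pick the image with the most total matches to the rest of its group."""
    return max(
        group,
        key=lambda i: sum(match_counts[i][j] for j in group if j != i),
    )
```

Because the assignment is greedy, the result can depend on the order in which images are visited.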

Though I'm not sure if this can be labeled as "Bells and Whistles", one of the things that I did to get better image blends was two-band multiresolution blending. The results were pretty interesting; since the images were already pretty well-aligned, I wasn't able to see much of a difference.
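For reference, one common way to do two-band blending is sketched below. This is an assumption about the general technique, not a record of the exact implementation used here: each image is split into a low-frequency band (a Gaussian blur) and a high-frequency band (the residual), the low bands are mixed with a smooth alpha mask, and the high band is taken from whichever image has the larger weight. The names `blur` and `two_band_blend` and the `sigma` default are hypothetical, and the images are assumed to be aligned grayscale float arrays.

```python
import numpy as np

def blur(img, sigma=5.0):
    """Separable Gaussian blur with edge padding (illustrative helper)."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(
        lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(
        lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)

def two_band_blend(img_a, img_b, alpha, sigma=5.0):
    """Blend two aligned images; alpha is the per-pixel weight of img_a."""
    low_a, low_b = blur(img_a, sigma), blur(img_b, sigma)
    high_a, high_b = img_a - low_a, img_b - low_b
    # Low frequencies: smooth weighted average.
    low = alpha * low_a + (1 - alpha) * low_b
    # High frequencies: winner-takes-all to avoid ghosting fine detail.
    high = np.where(alpha >= 0.5, high_a, high_b)
    return low + high
```

When the two images agree in the overlap, both bands agree as well, which is consistent with seeing little difference on well-aligned inputs.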

Multiresolution Blending:

0 - 0.5 - 1 Alpha Mask:

The coolest thing that I learned from this project is that we can actually detect corners by looking at the image's Taylor-approximated derivative at each point, and keeping the points that meet a certain threshold! I also thought that Lowe's method of looking at the second-nearest neighbor, in addition to the first, was pretty smart. Using RANSAC to take advantage of positional data (whereas Lowe's method only looks at feature data) was pretty cool too.

https://inst.eecs.berkeley.edu/~cs194-26/fa21/hw/proj4/partB.html