The primary goal of this project is to explore how we can exploit image transformations to correct and warp between different perspectives of the same object/scene. Starting with separate images of the same scene from different viewing angles, we can use a variety of feature detection, selection, and matching methods to auto-stitch a panorama.

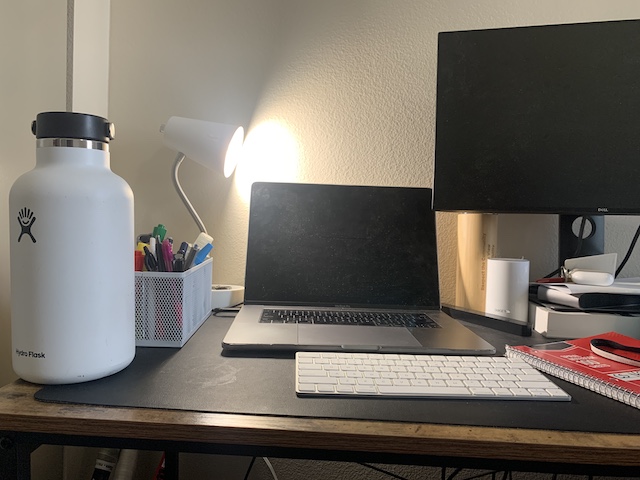

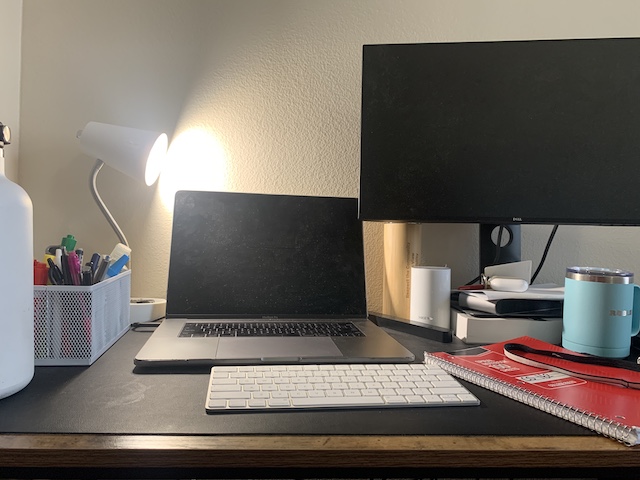

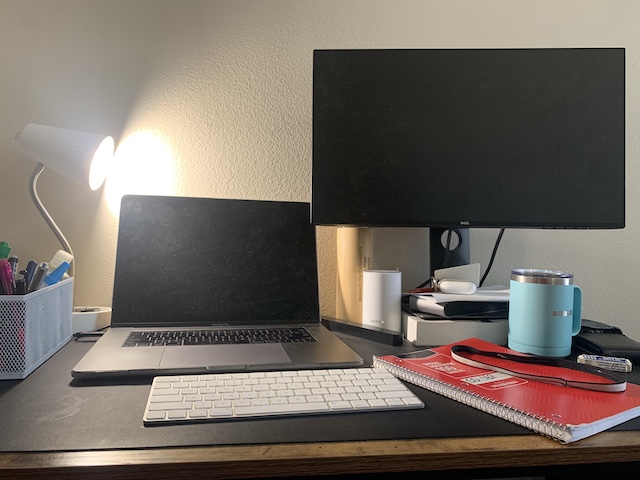

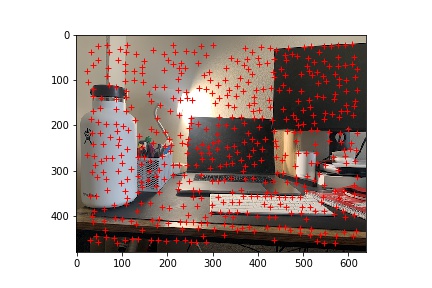

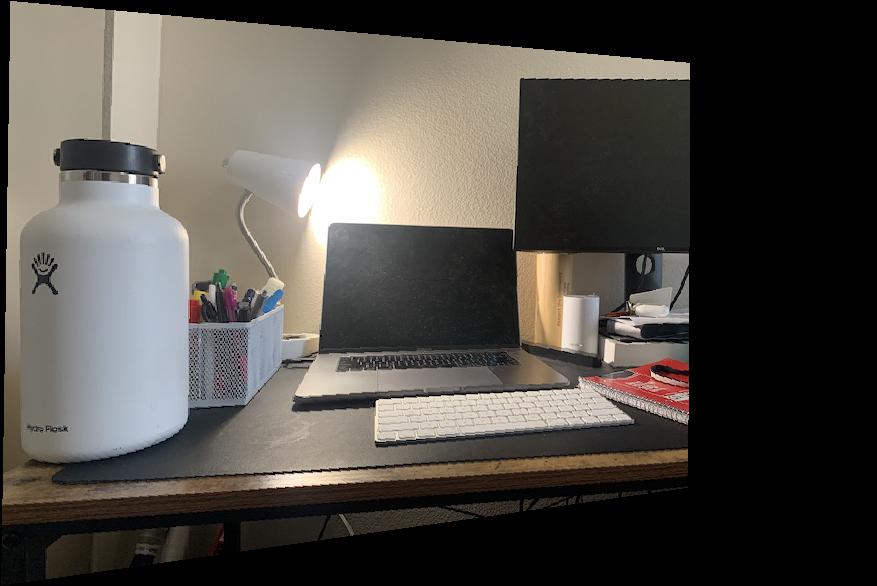

Before we can do anything, we need pictures that are relatively consistent in lighting, content, and camera position (although viewing directions vary). Here are the raw pictures I used for the panoramas.

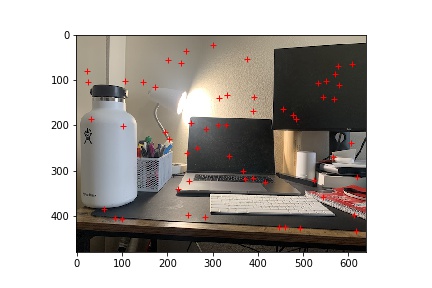

Desk

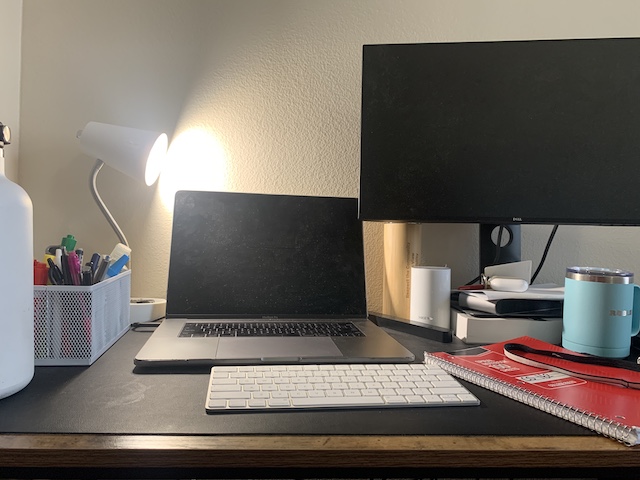

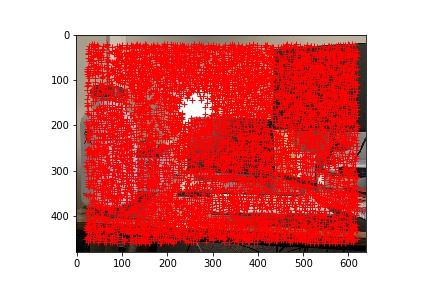

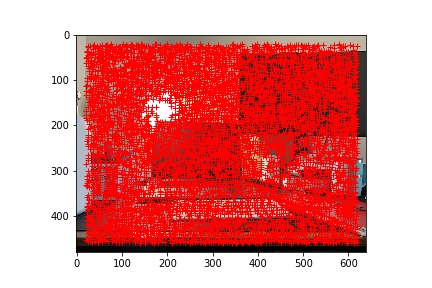

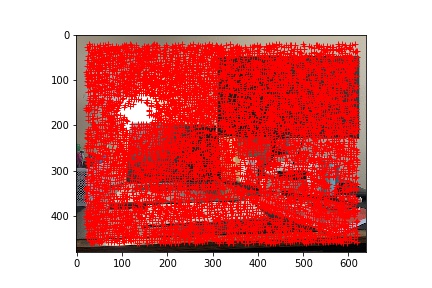

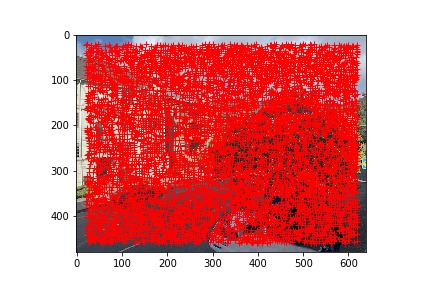

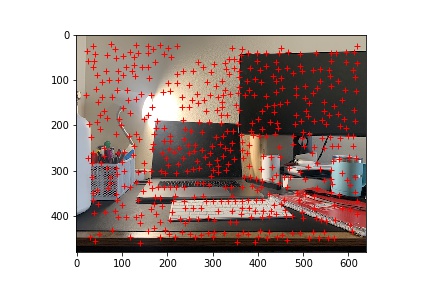

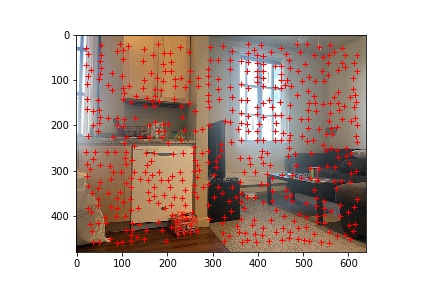

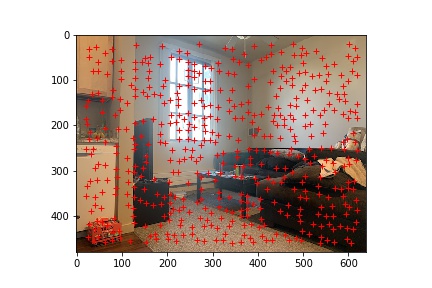

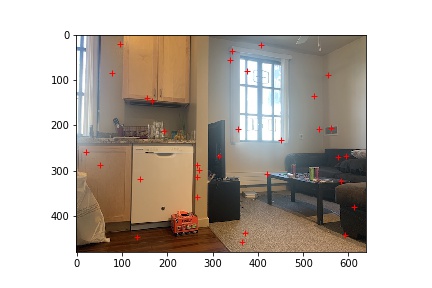

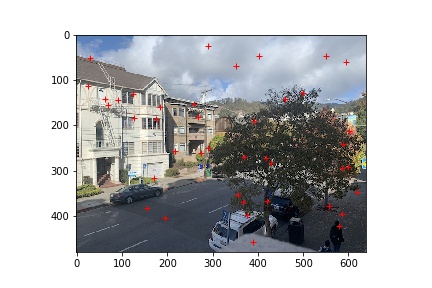

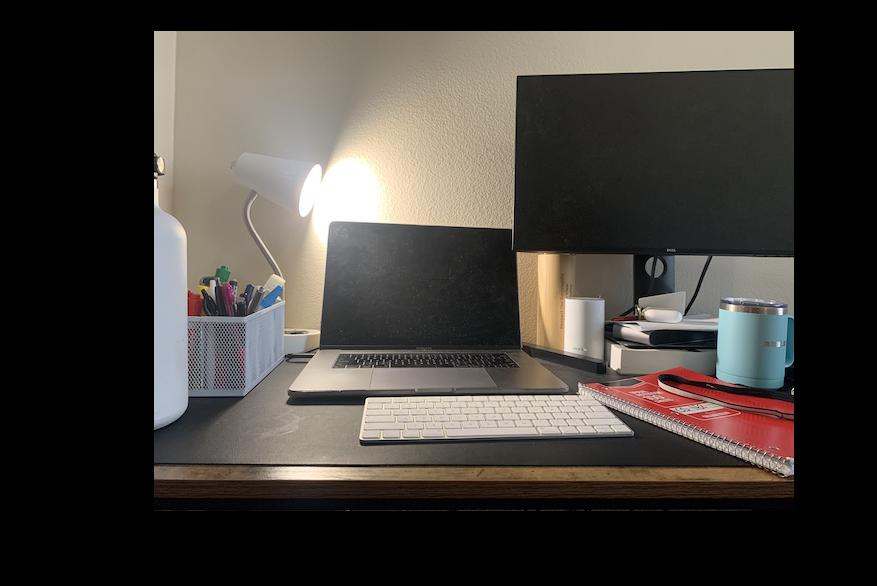

For this part, I just took the starter Harris Corners code and applied it to my input images to get potential corners/features to use for matching.
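For anyone curious what a Harris detector does under the hood, here is a minimal NumPy sketch. It is not the starter code: the function names, the box-filter smoothing, `k=0.05`, and the relative threshold are all assumptions chosen for illustration.

```python
import numpy as np

def box_blur(img, radius=1):
    """Average each pixel over a (2*radius+1)^2 window, using edge padding."""
    padded = np.pad(img, radius, mode="edge")
    out = np.zeros_like(img, dtype=float)
    size = 2 * radius + 1
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / size**2

def harris_response(gray, k=0.05, radius=1):
    """Harris corner strength det(M) - k * trace(M)^2 at every pixel."""
    gray = gray.astype(float)
    # np.gradient returns the derivative along rows (y) first, then columns (x).
    iy, ix = np.gradient(gray)
    # Entries of the smoothed structure tensor M.
    sxx = box_blur(ix * ix, radius)
    syy = box_blur(iy * iy, radius)
    sxy = box_blur(ix * iy, radius)
    det = sxx * syy - sxy**2
    trace = sxx + syy
    return det - k * trace**2

def harris_corners(gray, threshold_rel=0.1, k=0.05, radius=1):
    """Return (row, col) coordinates and strengths of 3x3 local maxima."""
    r = harris_response(gray, k, radius)
    padded = np.pad(r, 1, mode="constant", constant_values=-np.inf)
    is_max = np.ones_like(r, dtype=bool)
    for dy in range(3):
        for dx in range(3):
            if dy == 1 and dx == 1:
                continue  # skip the pixel itself
            is_max &= r >= padded[dy:dy + r.shape[0], dx:dx + r.shape[1]]
    # Keep only maxima that are a meaningful fraction of the strongest response.
    mask = is_max & (r > threshold_rel * r.max())
    ys, xs = np.nonzero(mask)
    return np.stack([ys, xs], axis=1), r[ys, xs]
```

Calling `harris_corners(gray)` on a 2D grayscale array gives candidate corners as (row, col) pairs along with their strengths, which is the kind of input the later steps work with.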

Desk

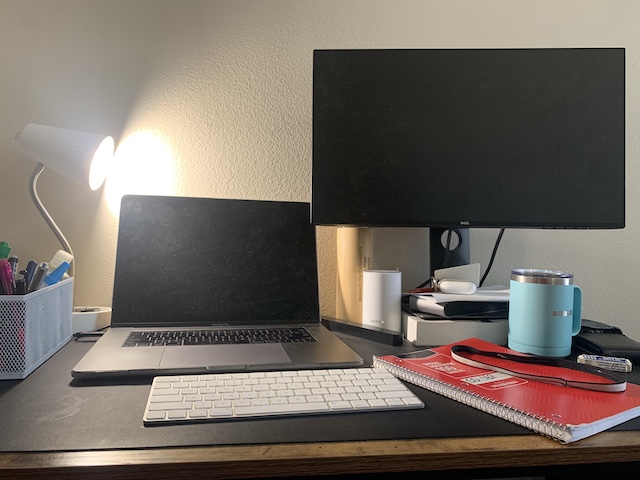

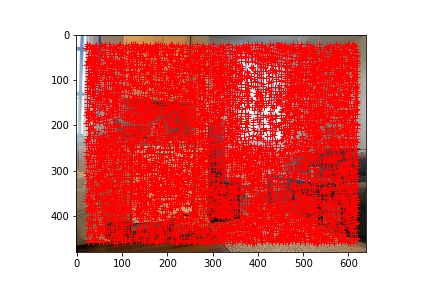

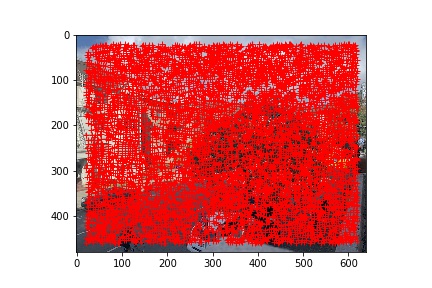

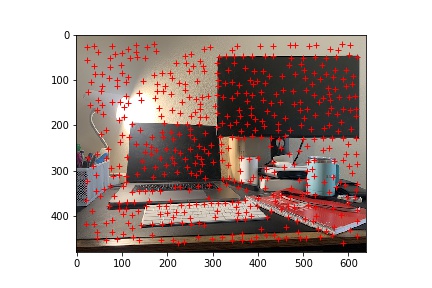

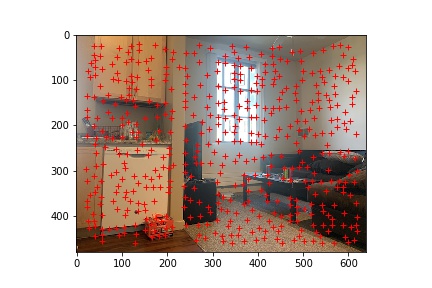

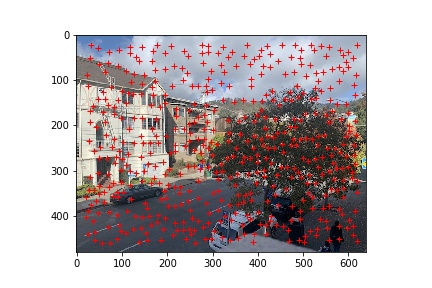

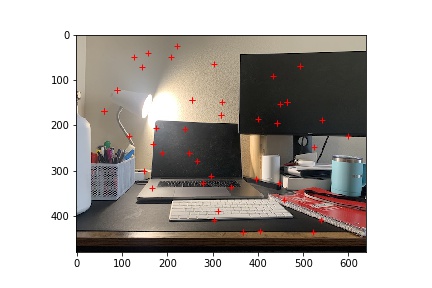

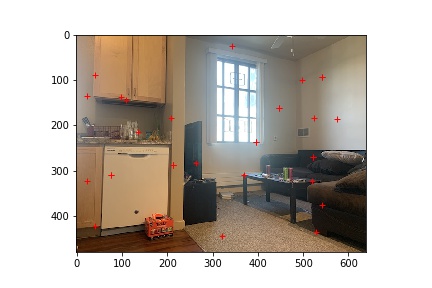

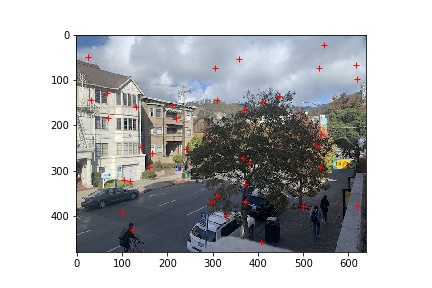

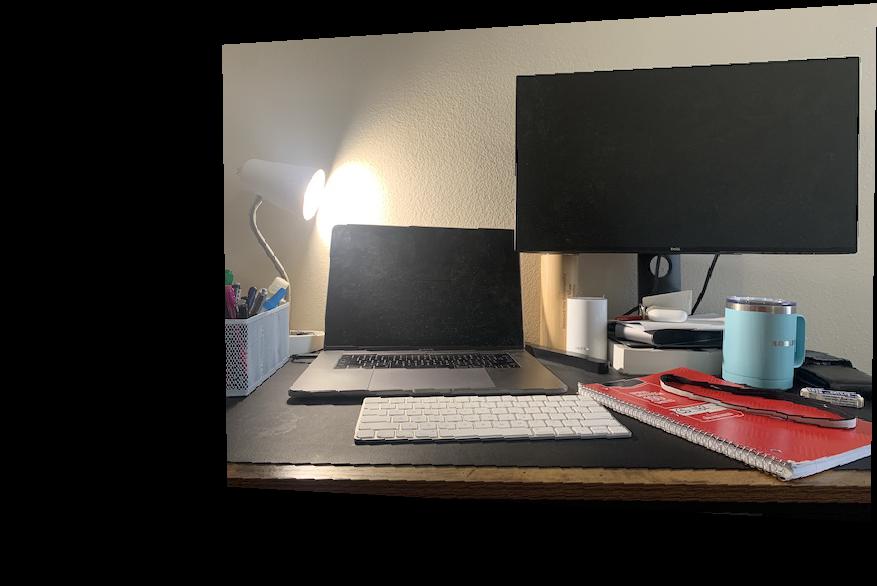

This step gets rid of many of the unnecessary and unhelpful corners/points for feature extraction and matching.
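The text above does not name the method used for this step. One common way to thin out corner candidates is adaptive non-maximal suppression (ANMS), which keeps points that are both strong and spatially spread out. The sketch below assumes that technique; `anms`, `num_keep`, and `c_robust` are hypothetical names and values, and it may differ from what was actually run here.

```python
import numpy as np

def anms(coords, strengths, num_keep=500, c_robust=0.9):
    """Return indices of up to num_keep points with the largest suppression radii.

    A point's suppression radius is its distance to the nearest point that is
    sufficiently stronger (strength_i < c_robust * strength_j).
    """
    coords = np.asarray(coords, dtype=float)
    strengths = np.asarray(strengths, dtype=float)
    # Pairwise squared distances between all candidate points (O(n^2) memory).
    diff = coords[:, None, :] - coords[None, :, :]
    dist2 = np.sum(diff**2, axis=-1)
    # suppresses[i, j] is True when point j is strong enough to suppress point i.
    suppresses = strengths[:, None] < c_robust * strengths[None, :]
    dist2 = np.where(suppresses, dist2, np.inf)
    # Squared radius: distance to the closest suppressing point (inf if none).
    radii2 = dist2.min(axis=1)
    # Largest radii first.
    return np.argsort(-radii2)[:num_keep]
```

With four candidates at `[[0, 0], [0, 1], [10, 10], [10, 11]]` and strengths `[10, 5, 8, 1]`, asking for two points keeps the strong point in each cluster rather than the two strongest overall neighbors.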

Desk

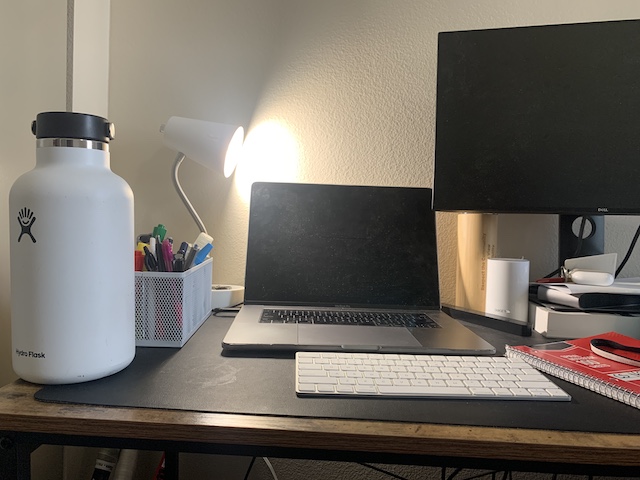

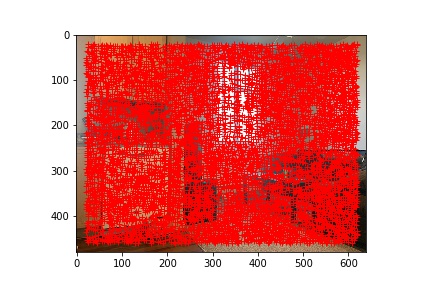

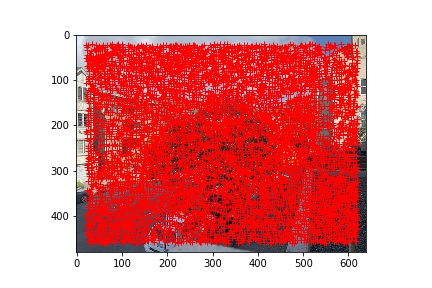

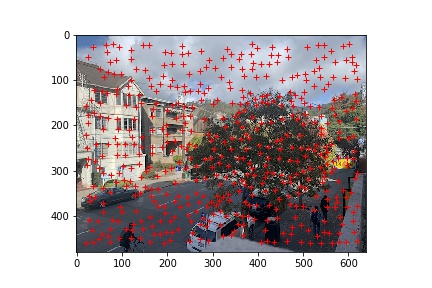

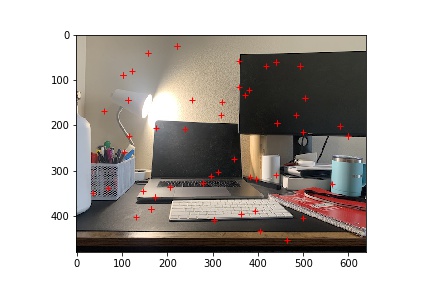

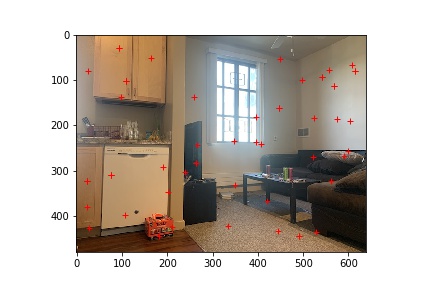

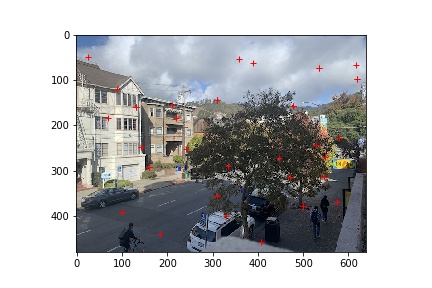

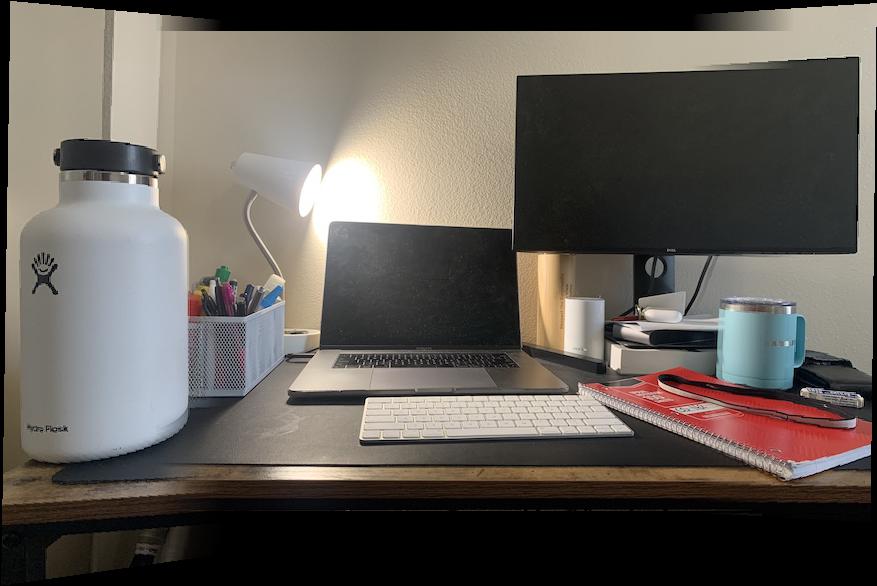

To extract features, we use normalized 8 x 8 downsampled patches as feature vectors. Since we want a 5 pixel spacing and 8 x 8 windows, we take 40 x 40 patches (8 samples at a 5 pixel spacing span 40 pixels) and downsample by a factor of 5. Then, we compute the Euclidean distance between every pair of feature vectors and compare each point's top 2 matches; if they pass a threshold test (determined by a parameter epsilon), we consider the points to be a match.
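Here is a rough sketch of descriptor extraction and matching along these lines. It samples a 40 x 40 window, since 8 samples at a 5 pixel spacing span 40 pixels. Several details are assumptions rather than the project's actual code: the function names, block averaging as the downsampling method, and reading the epsilon test as a ratio test (best distance divided by second-best distance must be below epsilon).

```python
import numpy as np

def extract_descriptor(gray, y, x, patch=40, step=5):
    """Return a normalized (patch/step)^2 vector around (y, x), or None."""
    half = patch // 2
    h, w = gray.shape
    if y - half < 0 or x - half < 0 or y + half > h or x + half > w:
        return None  # window would fall outside the image
    window = gray[y - half:y + half, x - half:x + half].astype(float)
    size = patch // step
    # Downsample by averaging each step x step block (assumed method).
    small = window.reshape(size, step, size, step).mean(axis=(1, 3))
    # Normalize to zero mean and unit variance for bias/gain invariance.
    small = small - small.mean()
    std = small.std()
    if std < 1e-8:
        return None  # flat patch, nothing to describe
    return (small / std).ravel()

def match_features(desc_a, desc_b, epsilon=0.6):
    """Return (index_a, index_b) pairs that pass the ratio test.

    Requires at least two descriptors in desc_b.
    """
    desc_a = np.asarray(desc_a, dtype=float)
    desc_b = np.asarray(desc_b, dtype=float)
    # Euclidean distance between every descriptor pair.
    diff = desc_a[:, None, :] - desc_b[None, :, :]
    dist = np.sqrt(np.sum(diff**2, axis=-1))
    order = np.argsort(dist, axis=1)
    best, second = order[:, 0], order[:, 1]
    rows = np.arange(len(desc_a))
    # Accept only when the best match is clearly better than the runner-up.
    ratio = dist[rows, best] / (dist[rows, second] + 1e-12)
    keep = ratio < epsilon
    return np.stack([rows[keep], best[keep]], axis=1)
```

A smaller `epsilon` keeps fewer but more reliable matches, which is the trade-off that makes this parameter fiddly to tune.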

Desk

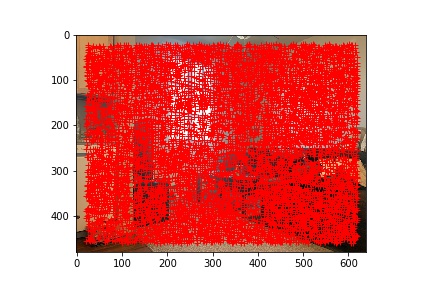

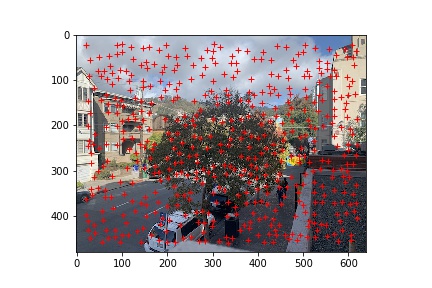

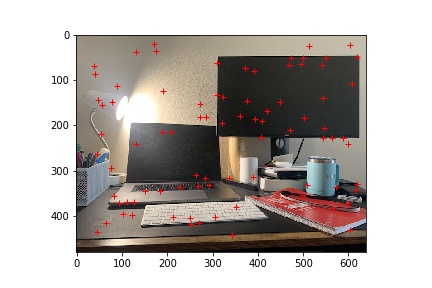

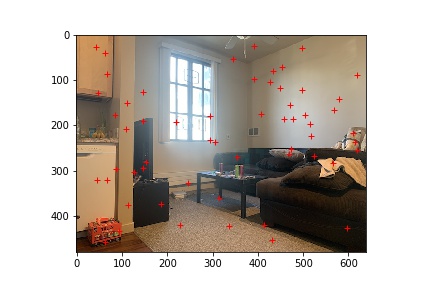

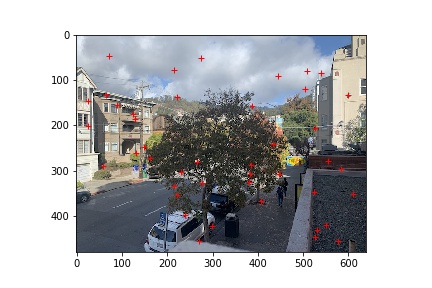

Now that we have the matching points for each pair of images, we can warp and blend in the same manner as in Proj4A to create the final panoramas.
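Before warping, the matched points have to be turned into a homography, and some of the matches will be wrong. The sketch below shows one way to handle that with RANSAC: fit a homography to 4 random matches, count how many other matches agree, and refit on the largest agreeing set. This is an illustration, not the project's code. The function names, the 2 pixel inlier threshold, the iteration count, and the (x, y) point convention are assumptions.

```python
import numpy as np

def fit_homography(src, dst):
    """Least-squares homography mapping src to dst; points are (x, y), N >= 4."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    h = vt[-1].reshape(3, 3)
    if abs(h[2, 2]) < 1e-12:
        return None  # degenerate fit, cannot normalize
    return h / h[2, 2]

def apply_homography(h, pts):
    """Map (x, y) points through h and divide out the homogeneous coordinate."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ h.T
    return homog[:, :2] / homog[:, 2:3]

def ransac_homography(src, dst, num_iters=1000, inlier_thresh=2.0, seed=0):
    """Return (homography, inlier_mask) estimated from noisy matches."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(src), dtype=bool)
    for _ in range(num_iters):
        sample = rng.choice(len(src), size=4, replace=False)
        h = fit_homography(src[sample], dst[sample])
        if h is None:
            continue
        with np.errstate(divide="ignore", invalid="ignore"):
            err = np.linalg.norm(apply_homography(h, src) - dst, axis=1)
        inliers = err < inlier_thresh
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    if best_inliers.sum() < 4:
        raise ValueError("not enough inliers to fit a homography")
    # Refit on every inlier of the best model for a more stable estimate.
    return fit_homography(src[best_inliers], dst[best_inliers]), best_inliers
```

Note that the Harris sketch style of (row, col) coordinates would need to be flipped to (x, y) before being passed in here.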

Desk

I think that this project was very worthwhile and taught me a lot about the interpretation and manipulation of features in the context of images. Through methods such as Harris Corner Detection and RANSAC, we were able to systematically detect, extract, and match features across images. One thing that I struggled with during this project was tuning the parameters, specifically the threshold values for feature matching and RANSAC. I discovered that the quality and consistency of the photos matter a lot for this process. For example, the pictures for my desk panorama were poorly taken and inconsistent, which made for a very painful guess-and-check tuning experience while trying to get the right matching points.