Author: Sunny Shen

In Part A of this project, I took photos of objects and scenes from different perspectives. I computed the homographies between them, applied projective transforms to warp them, and then stitched and blended the images together to create panoramas.

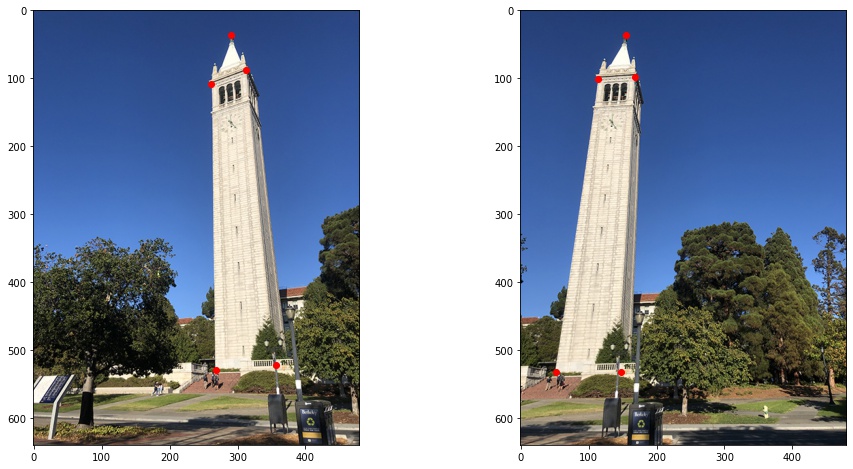

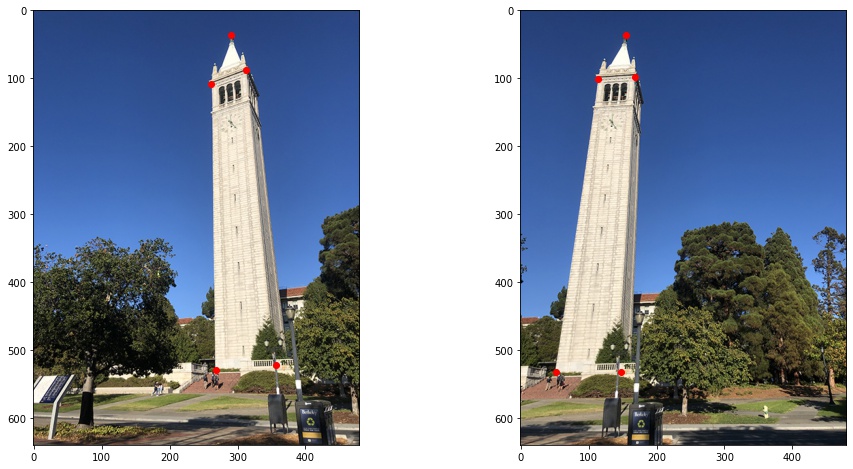

Before doing any of the cool warping and projective transformations, we need to find corresponding key points in the images and calculate the homography matrix that warps one image onto another.

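The homography matrix H is a 3x3 matrix with 8 degrees of freedom: it is only defined up to scale, so we can fix its bottom-right entry to 1. It maps a key point p = (x, y, 1) in the source image to the corresponding key point p' in the destination image via p' = Hp, where p' is only determined up to scale, so we divide by its third coordinate to get back pixel coordinates.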
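To make the "divide by the third coordinate" step concrete, here is a minimal NumPy sketch of applying a homography to a set of points. The function name `apply_homography` is hypothetical, not taken from the project code.

```python
import numpy as np

def apply_homography(H, points):
    """Map an (n, 2) array of (x, y) points through the 3x3 homography H."""
    points = np.asarray(points, dtype=float)
    # Lift to homogeneous coordinates: (x, y) -> (x, y, 1).
    homogeneous = np.hstack([points, np.ones((len(points), 1))])
    mapped = homogeneous @ H.T  # each row is H @ [x, y, 1]
    # Divide by the third coordinate w to get back to 2D pixel coordinates.
    return mapped[:, :2] / mapped[:, 2:3]

# A scaling plus translation is a special case of a homography.
H = np.array([[2.0, 0.0, 10.0],
              [0.0, 2.0, 5.0],
              [0.0, 0.0, 1.0]])
# Maps (0, 0) to (10, 5) and (1, 1) to (12, 7).
print(apply_homography(H, [[0, 0], [1, 1]]))
```

For a true projective H the bottom row is not (0, 0, 1), so w varies from point to point and the division is what produces the perspective effect.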

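To solve for H, I set up a linear system Ah = b, where h is a vector holding the 8 unknown entries of H. Each pair of corresponding points contributes two equations (one for x', one for y'), so n correspondences give 2n equations. At least 4 correspondences are needed, and with more than 4 the system is overdetermined and can be solved with least squares.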

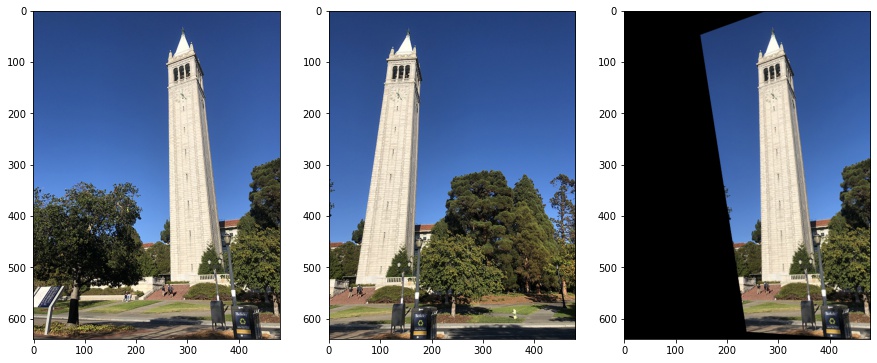
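Below is a minimal sketch of how this system can be built and solved with NumPy, assuming the point correspondences are already given as two (n, 2) arrays. The name `compute_homography` is hypothetical. Each row pair comes from multiplying out x' = (h1 x + h2 y + h3) / (h7 x + h8 y + 1) (and the analogous expression for y') so that it is linear in the unknowns.

```python
import numpy as np

def compute_homography(src_pts, dst_pts):
    """Estimate H (with H[2, 2] fixed to 1) so that dst ~ H @ src.

    src_pts, dst_pts: (n, 2) arrays of corresponding (x, y) points, n >= 4.
    """
    src_pts = np.asarray(src_pts, dtype=float)
    dst_pts = np.asarray(dst_pts, dtype=float)
    n = len(src_pts)
    A = np.zeros((2 * n, 8))
    b = np.zeros(2 * n)
    for i, ((x, y), (xp, yp)) in enumerate(zip(src_pts, dst_pts)):
        # x' * (h7 x + h8 y + 1) = h1 x + h2 y + h3, rearranged to be linear in h
        A[2 * i] = [x, y, 1, 0, 0, 0, -x * xp, -y * xp]
        b[2 * i] = xp
        # y' * (h7 x + h8 y + 1) = h4 x + h5 y + h6
        A[2 * i + 1] = [0, 0, 0, x, y, 1, -x * yp, -y * yp]
        b[2 * i + 1] = yp
    # Exactly determined for n == 4; a least-squares fit when n > 4.
    h, *_ = np.linalg.lstsq(A, b, rcond=None)
    # Re-insert the fixed entry H[2, 2] = 1 and reshape to 3x3.
    return np.append(h, 1.0).reshape(3, 3)
```

Using least squares for every n keeps one code path: with exactly 4 non-degenerate correspondences it returns the exact solution, and with more clicked points it averages out small clicking errors.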

Now with the homography, we can warp the image with a projective transformation! Note that if the output image has the same size as the original, we will "lose" part of the image, because the transformation changes not only the shape defined by the key points but also their location, so some warped pixels land outside the original frame. To retain as much of the image as possible, we need to enlarge (and shift) the output canvas so that it covers the whole warped image. Also note the many black pixels in the warped images: those output pixels map back to locations outside the source image, so there is no information available for them.

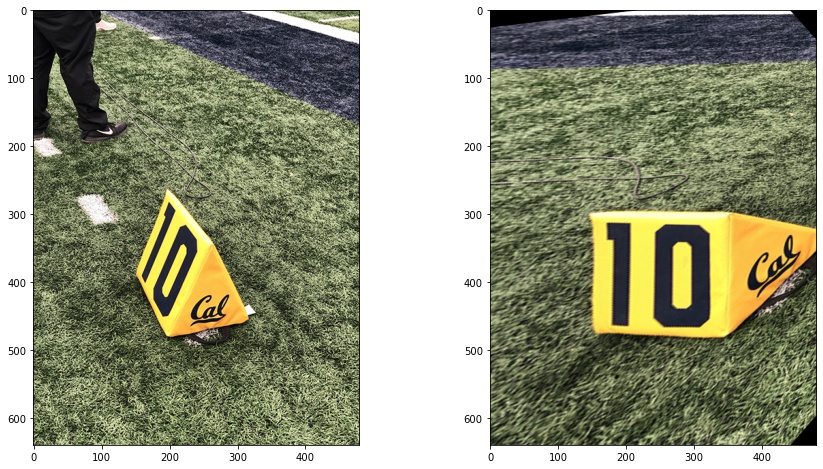

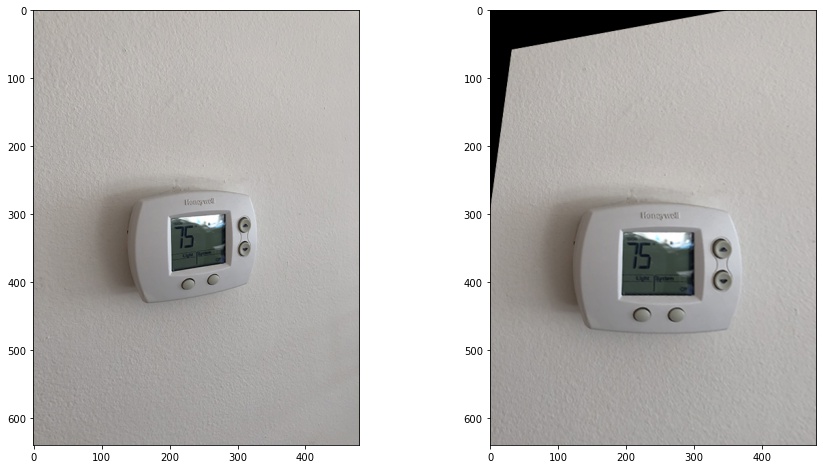
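One common way to implement this is inverse warping, sketched below under a few assumptions not stated above: H maps source to destination (p' = Hp), the canvas is sized from the forward-mapped corners, and pixels are sampled with nearest-neighbor lookup (bilinear interpolation would look smoother). The name `warp_image` is hypothetical.

```python
import numpy as np

def warp_image(img, H):
    """Inverse-warp img with homography H onto a canvas that fits the whole result.

    Returns (warped, offset) where offset = (x_min, y_min) is the canvas origin
    in destination coordinates. Pixels that map outside img stay 0 (black).
    """
    h, w = img.shape[:2]
    # Forward-map the four corners to find the bounding box of the output.
    corners = np.array([[0, 0, 1], [w - 1, 0, 1],
                        [0, h - 1, 1], [w - 1, h - 1, 1]], dtype=float).T
    mapped = H @ corners
    mapped = mapped[:2] / mapped[2]
    x_min, y_min = np.floor(mapped.min(axis=1)).astype(int)
    x_max, y_max = np.ceil(mapped.max(axis=1)).astype(int)
    out_w, out_h = x_max - x_min + 1, y_max - y_min + 1

    # Every output pixel, expressed in destination coordinates.
    xs, ys = np.meshgrid(np.arange(x_min, x_max + 1), np.arange(y_min, y_max + 1))
    dest = np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)])
    # Inverse mapping: where does each output pixel come from in the source?
    src = np.linalg.inv(H) @ dest
    src_x = np.round(src[0] / src[2]).astype(int)
    src_y = np.round(src[1] / src[2]).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)

    warped = np.zeros((out_h, out_w) + img.shape[2:], dtype=img.dtype)
    flat = warped.reshape(out_h * out_w, *img.shape[2:])  # view into warped
    flat[valid] = img[src_y[valid], src_x[valid]]
    return warped, (x_min, y_min)
```

Mapping backwards from the output grid guarantees every output pixel gets exactly one value (or stays black), whereas pushing source pixels forward would leave holes. The `valid` mask is exactly the set of non-black pixels described above.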

Let's rectify some images of objects whose true shape we know. For example, we know that certain objects should look rectangular when viewed head-on, so we use a rectangle as the 'target' shape and warp the original image so that the object maps onto it. Here are a few examples!

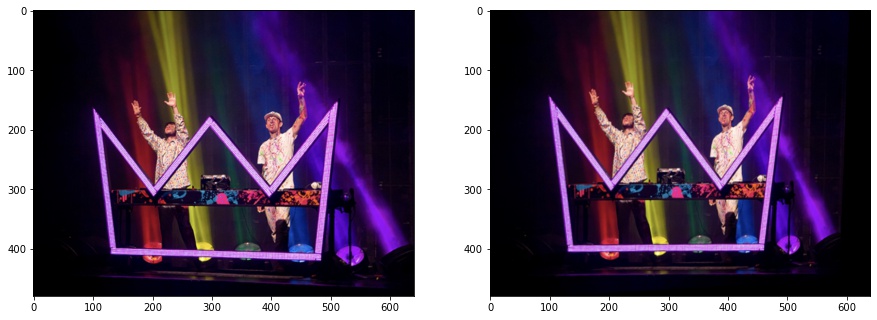

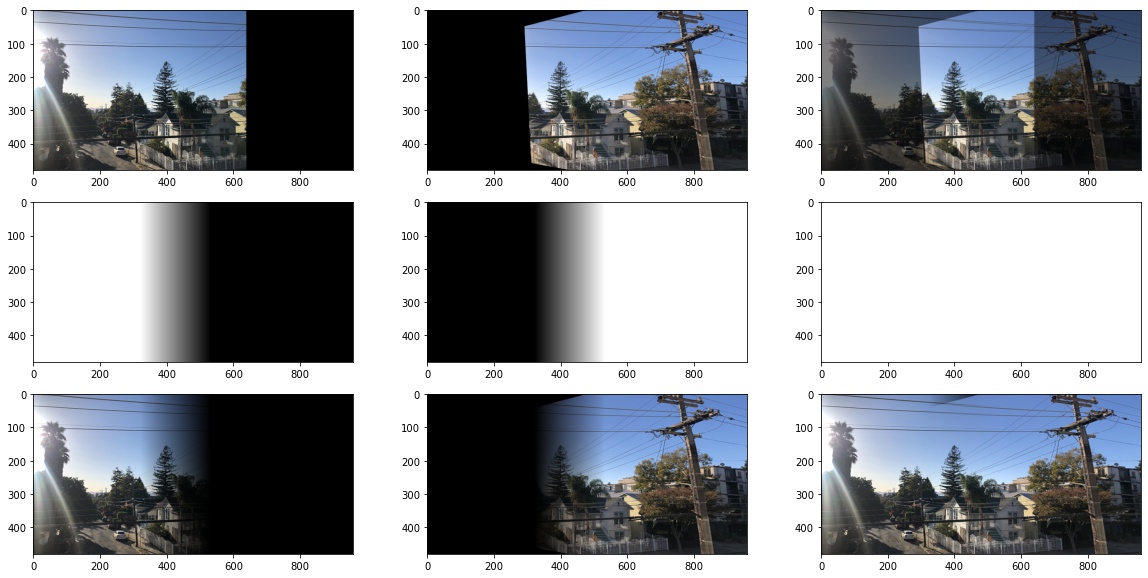
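Rectification only needs the four corners of the object, so H can be solved exactly from an 8x8 system. The sketch below assumes the corners are clicked in the order top-left, top-right, bottom-right, bottom-left, and that the target `width` and `height` are picked by hand (for instance from the object's known aspect ratio); `rectify_homography` is a hypothetical name.

```python
import numpy as np

def rectify_homography(corners, width, height):
    """Homography sending four clicked corners onto a width x height rectangle.

    corners: (x, y) points ordered top-left, top-right, bottom-right, bottom-left.
    """
    target = [(0, 0), (width - 1, 0), (width - 1, height - 1), (0, height - 1)]
    A, b = [], []
    for (x, y), (xp, yp) in zip(corners, target):
        # Same two linearized equations per correspondence as in the general case.
        A.append([x, y, 1, 0, 0, 0, -x * xp, -y * xp])
        b.append(xp)
        A.append([0, 0, 0, x, y, 1, -x * yp, -y * yp])
        b.append(yp)
    # Four correspondences give exactly 8 equations for the 8 unknowns.
    h = np.linalg.solve(np.array(A, dtype=float), np.array(b, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)
```

The resulting H can then be passed to the same warping routine used for any other projective transform; the rectified object occupies the region from (0, 0) to (width - 1, height - 1) of the output.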

Let's create some panoramic pics! After applying the projective transformations, we need a way to combine and blend all the images together. Simply averaging them (`img1 * 0.5 + img2 * 0.5`) creates weird edges where the images meet. Alpha blending helps the images blend well: create a gradient mask whose weights decrease linearly from 1 to 0 (or increase from 0 to 1) across the overlapping region, and use it to weight each image.

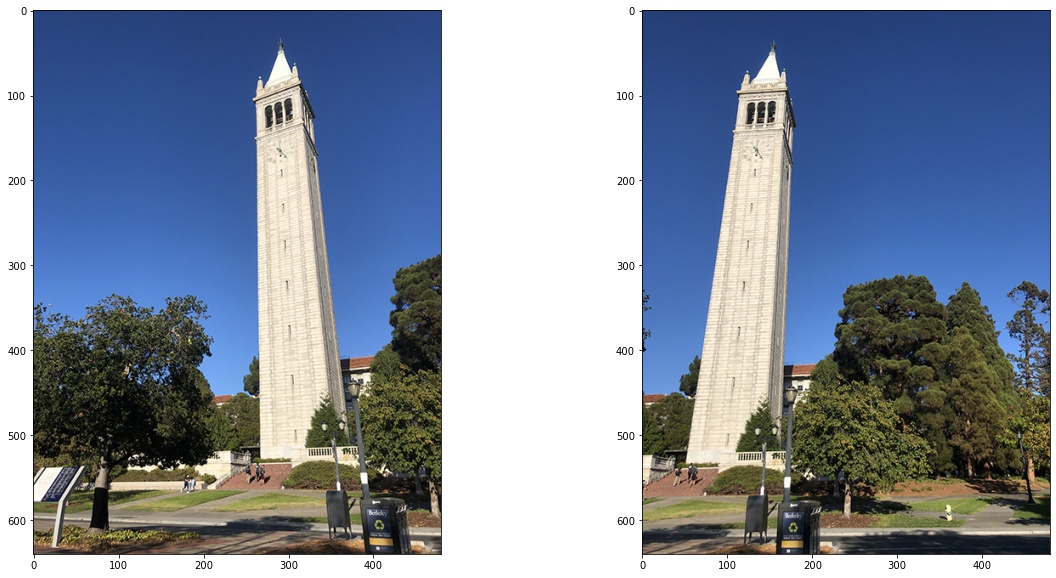

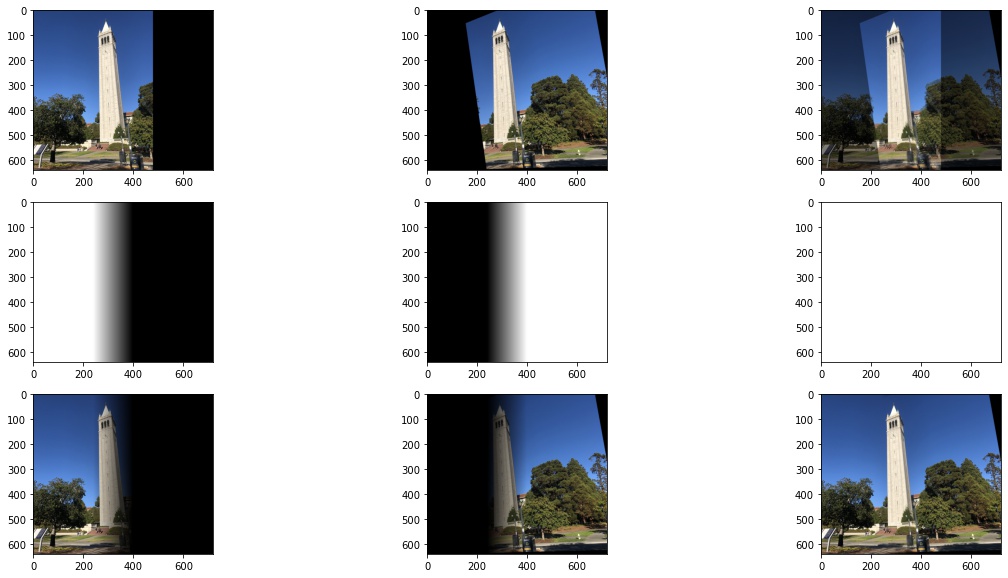

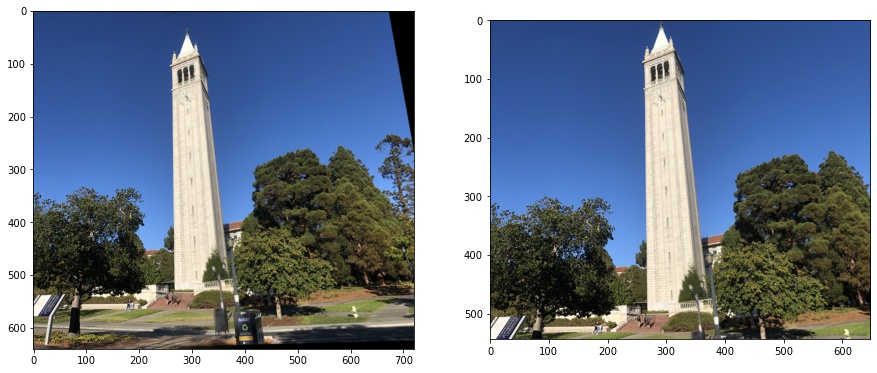
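Here is a minimal sketch of that linear gradient mask, assuming both images have already been warped onto canvases of the same shape and that they overlap in a known band of columns [overlap_start, overlap_end), with the first image on the left. The function name and the purely horizontal overlap are simplifying assumptions for illustration.

```python
import numpy as np

def linear_alpha_blend(left, right, overlap_start, overlap_end):
    """Blend two aligned, same-shape canvases that overlap in columns [overlap_start, overlap_end)."""
    width = left.shape[1]
    alpha = np.ones(width)  # weight of the left image in each column
    # Ramp the left image's weight linearly from 1 down to 0 across the overlap.
    alpha[overlap_start:overlap_end] = np.linspace(1.0, 0.0, overlap_end - overlap_start)
    alpha[overlap_end:] = 0.0  # right of the overlap, only the right image counts
    # Reshape so the per-column weights broadcast over rows (and color channels).
    alpha = alpha.reshape((1, width) + (1,) * (left.ndim - 2))
    return alpha * left + (1.0 - alpha) * right
```

Because the two weights always sum to 1, regions where both images agree keep their brightness, and any small mismatch in the overlap fades gradually instead of jumping at a hard seam.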

Example 1: Campanile

Originals:

Mosaicing in Progress:

Final output (before and after cropping out black parts):

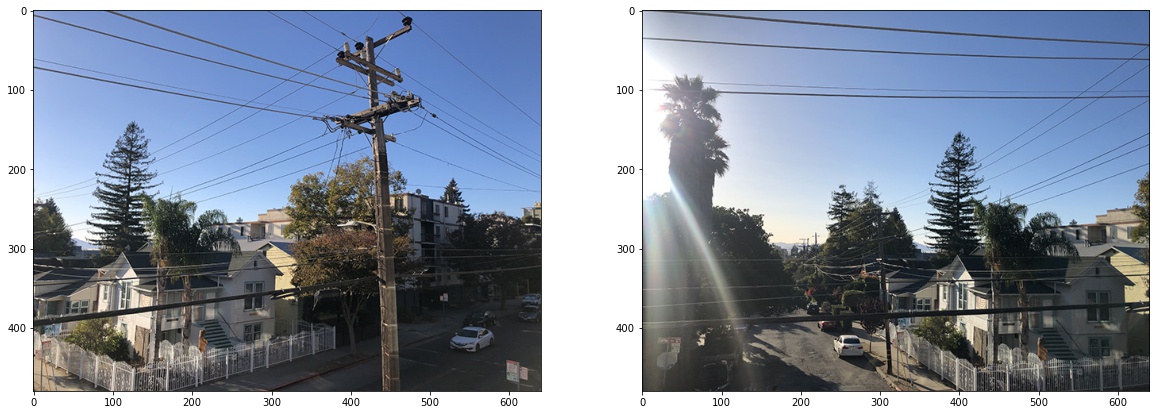

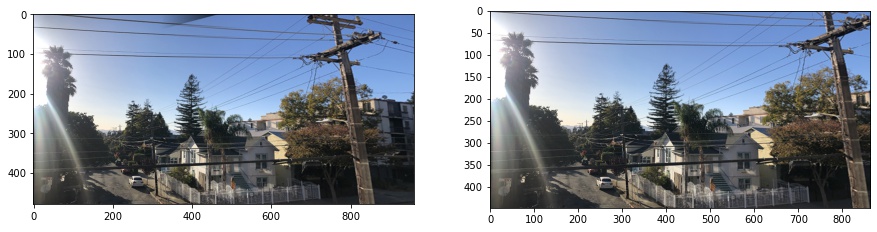
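For the "after cropping" versions, one simple automatic starting point is to trim every border row and column that is completely black. This is a hedged sketch (the name `crop_to_content` and the `threshold` parameter are hypothetical): it cannot remove black wedges that still sit inside the bounding box of the mosaic, so a tighter crop like the ones shown may still require choosing an inner rectangle by hand.

```python
import numpy as np

def crop_to_content(img, threshold=0):
    """Crop away border rows/columns that contain no pixel brighter than threshold."""
    content = img > threshold
    # For color images, a pixel counts as content if any channel is non-black.
    if content.ndim == 3:
        content = content.any(axis=2)
    rows = np.flatnonzero(content.any(axis=1))
    cols = np.flatnonzero(content.any(axis=0))
    if rows.size == 0:  # completely black image: nothing to keep
        return img[:0, :0]
    return img[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]
```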

Example 2: Street View

Originals:

Mosaicing in Progress:

Final output (before and after cropping out black parts):

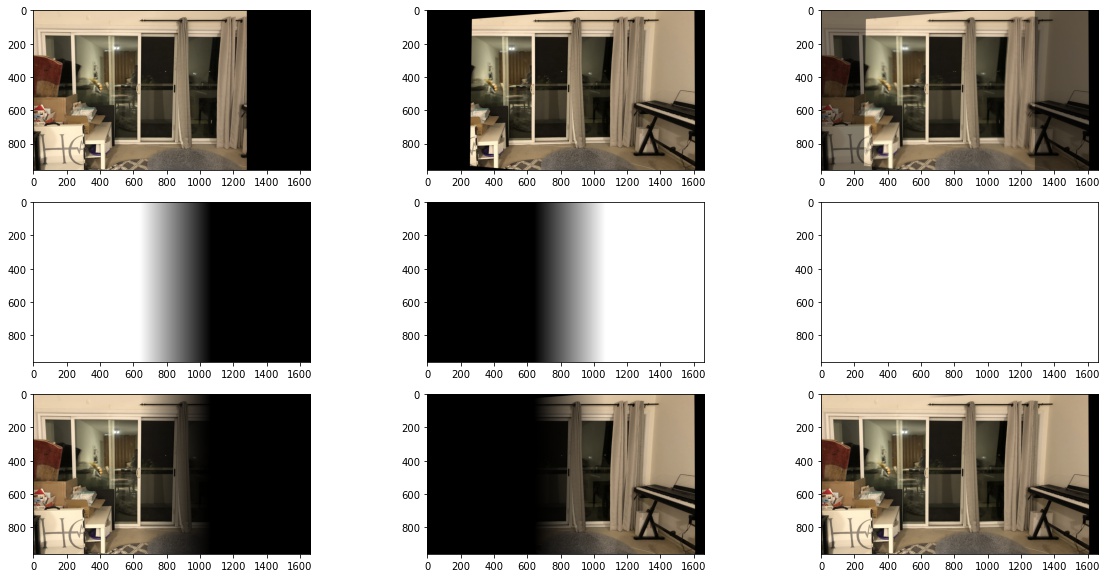

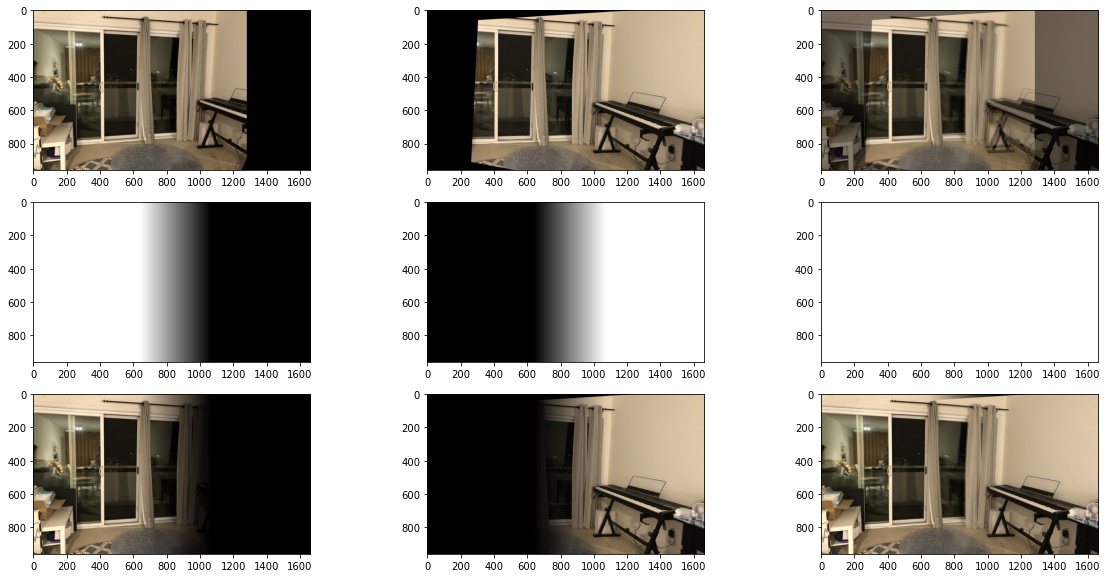

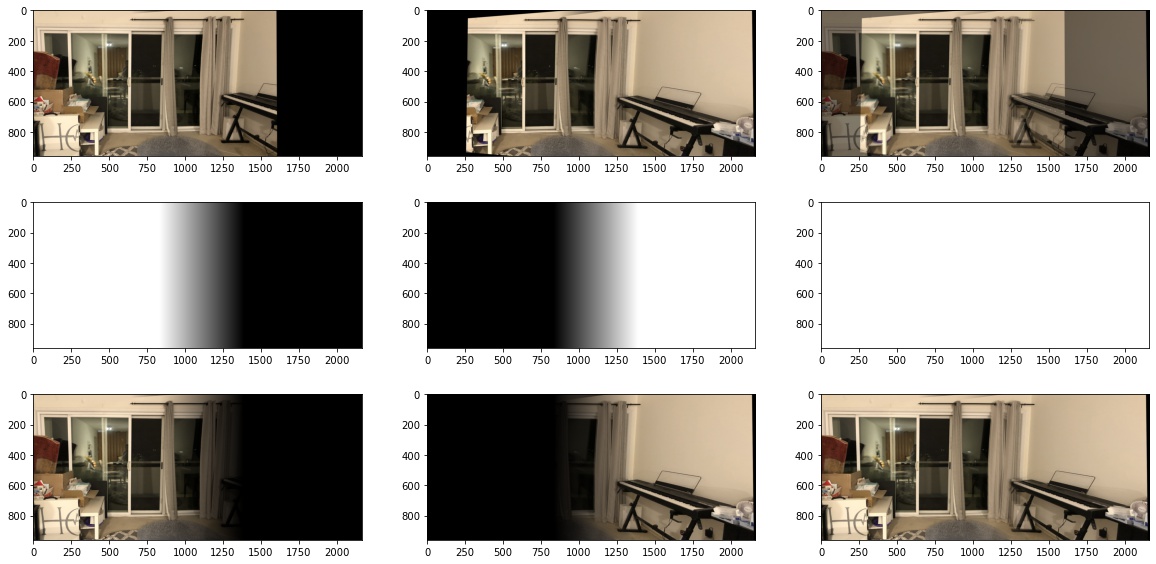

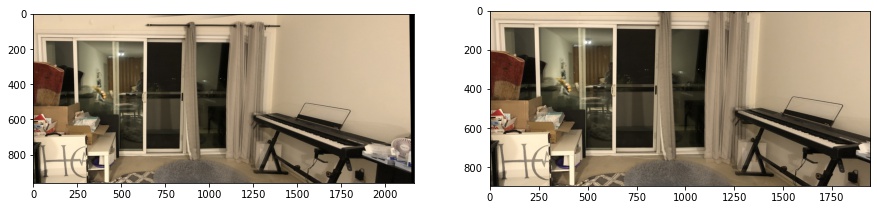

Example 3: Living Room

Originals:

Mosaicing in Progress:

Final output (before and after cropping out black parts):

I think it was super cool to apply projective transformations to images and think about how to blend them together. I took most of the photos with my phone, and my eyes couldn't tell that the color, lighting, and other camera settings changed slightly between a photo taken from one perspective and a photo taken from another. When I tried to warp and blend some sunset photos from my phone, I realized that the color of the sky didn't exactly match across the images. I didn't put those examples on the website, but I think it was cool to learn about that.