Nithin Chalapathi

nithinc@berkeley.edu

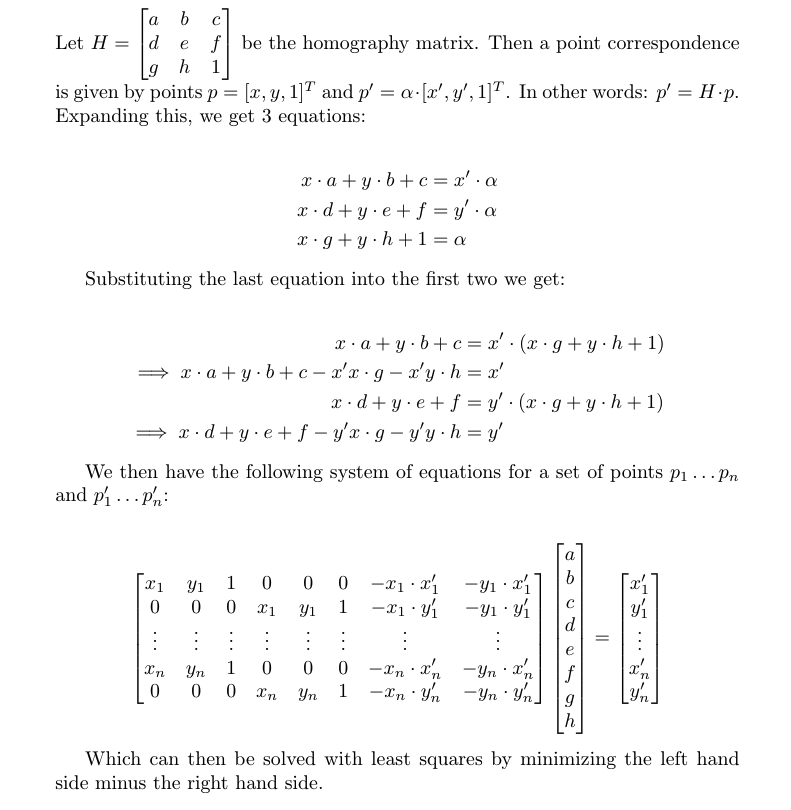

Let p be a point in the pre-image space and let p' be the corresponding point in the image space. Then for a homography H, p' = H p (up to a scale factor), so long as p and p' are expressed in homogeneous coordinates. I then set up a least squares solution as outlined in the slides. Here is a more detailed explanation written in LaTeX:

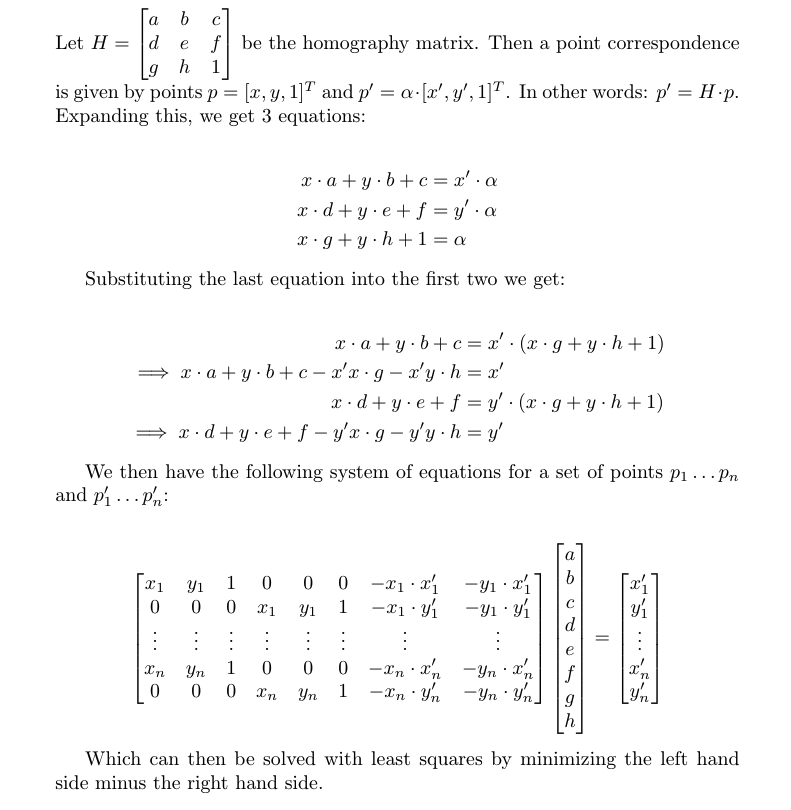
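The least squares setup can be sketched roughly as follows. This is a minimal illustration, not the exact code used for the results here: it assumes the common formulation that fixes the bottom-right entry of H to 1 and solves for the remaining eight unknowns, and the function names `compute_homography` and `apply_homography` are hypothetical.

```python
import numpy as np


def compute_homography(src, dst):
    """Least-squares homography H (3x3, with H[2, 2] fixed to 1) mapping src -> dst.

    src, dst: (N, 2) arrays of corresponding (x, y) points, N >= 4.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n = src.shape[0]
    A = np.zeros((2 * n, 8))
    b = np.zeros(2 * n)
    for i, ((x, y), (xp, yp)) in enumerate(zip(src, dst)):
        # x' = (h1 x + h2 y + h3) / (h7 x + h8 y + 1), rearranged to be linear in h
        A[2 * i] = [x, y, 1, 0, 0, 0, -x * xp, -y * xp]
        # y' = (h4 x + h5 y + h6) / (h7 x + h8 y + 1), rearranged the same way
        A[2 * i + 1] = [0, 0, 0, x, y, 1, -x * yp, -y * yp]
        b[2 * i] = xp
        b[2 * i + 1] = yp
    h, *_ = np.linalg.lstsq(A, b, rcond=None)
    return np.append(h, 1.0).reshape(3, 3)


def apply_homography(H, pts):
    """Map (N, 2) points through H and divide out the homogeneous coordinate."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ H.T
    return mapped[:, :2] / mapped[:, 2:3]
```

With exactly four correspondences the system is square and the fit is exact; with more than four, `np.linalg.lstsq` returns the solution that minimizes the squared residual of the linearized equations.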

I have two sample images. The first image is a coaster of "The Great Wave" painting. Here is the image with the corresponding points:

After image rectification, I get the following results:

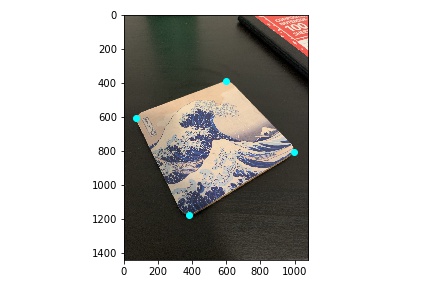

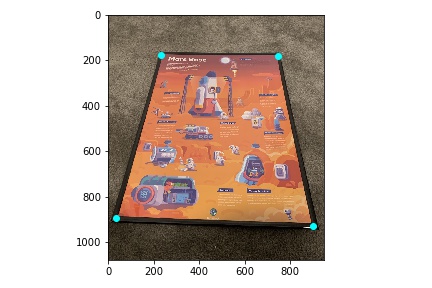

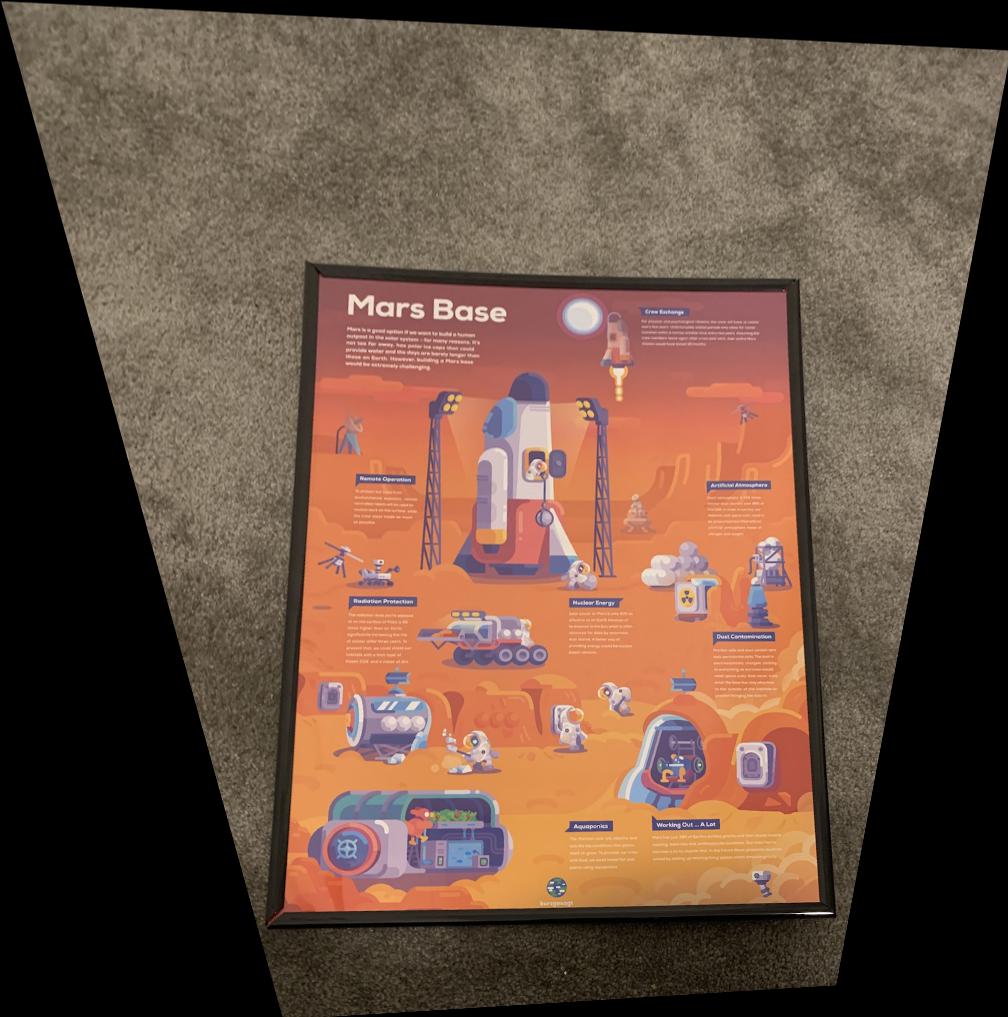

Next, I have a Mars base poster from the YouTube channel Kurzgesagt. Here are the correspondences:

The result is as follows:

There are 3 handmade mosaics. The first is from images I took outside one side of Soda Hall. Here are the correspondences:

And the final mosaic:

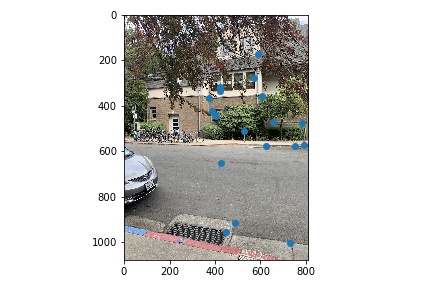

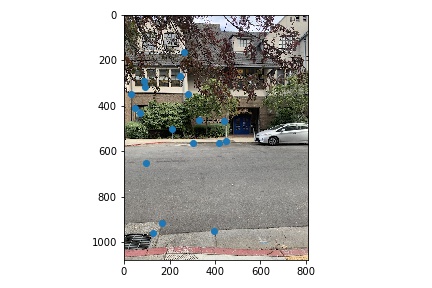

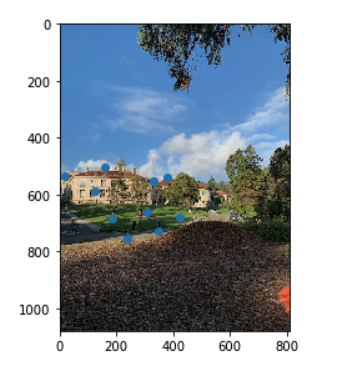

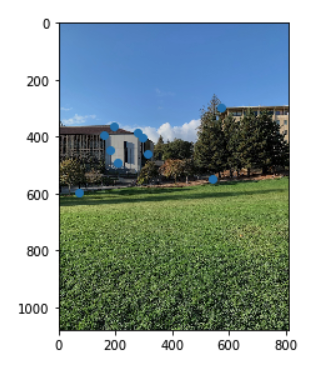

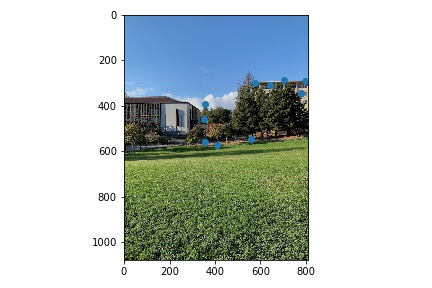

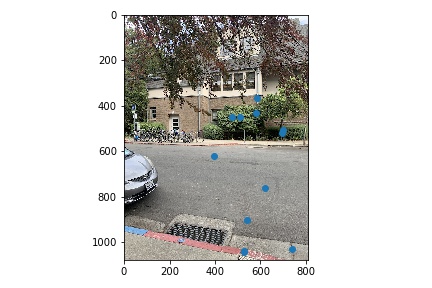

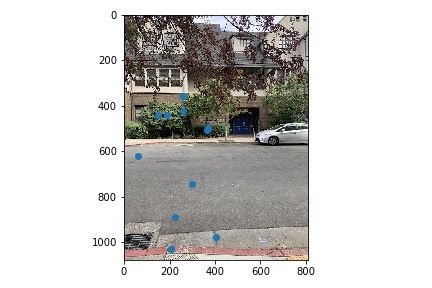

The second mosaic is a series of 3 images taken outside the Valley Life Science building. I projected the 1st and 3rd images onto the plane of the 2nd image. Here are the manually generated correspondences:

Images 1 and 2:

Images 2 and 3:

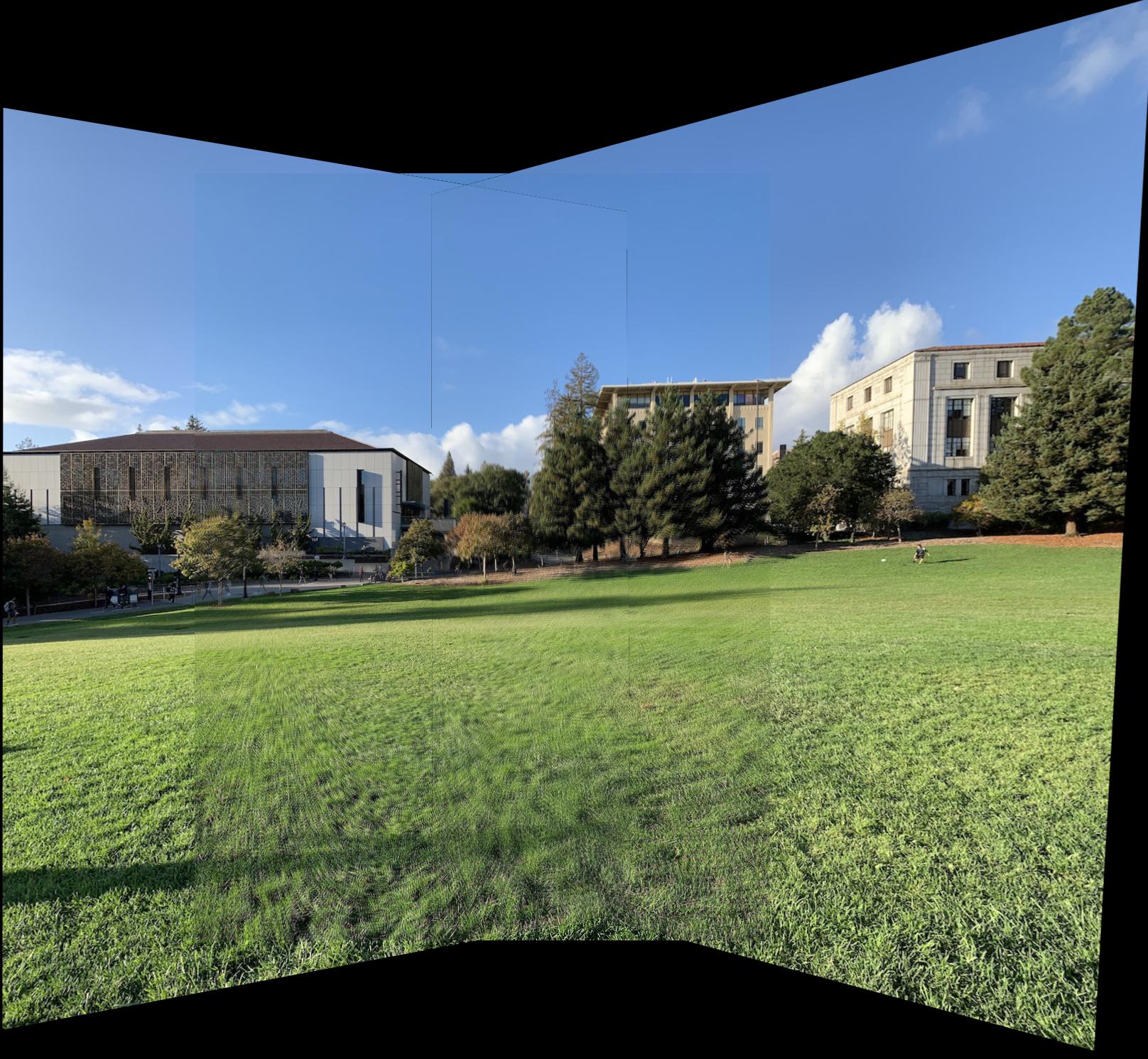

And here is the final mosaic:

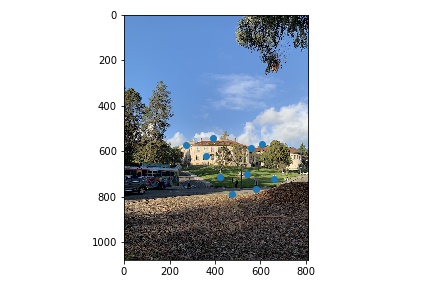

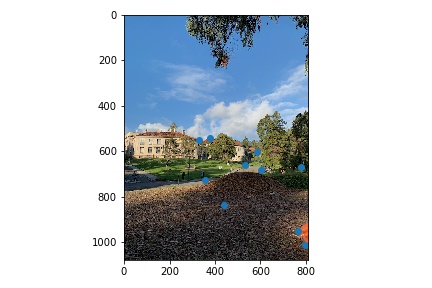

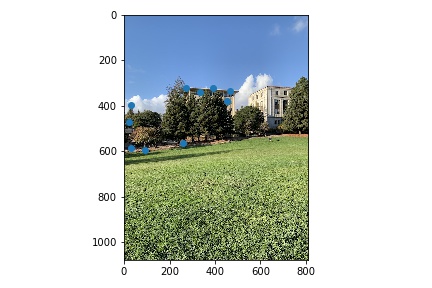

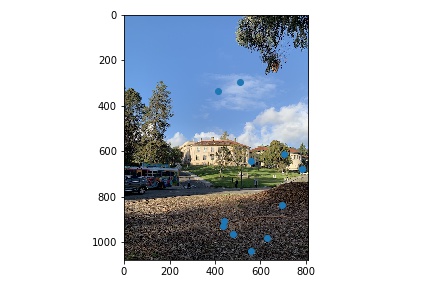

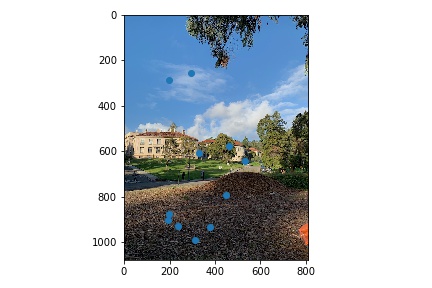

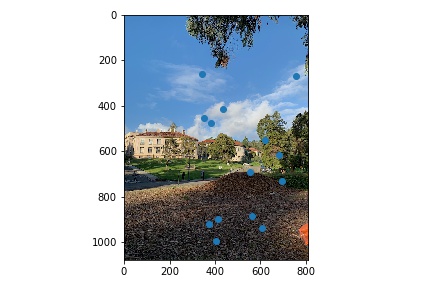

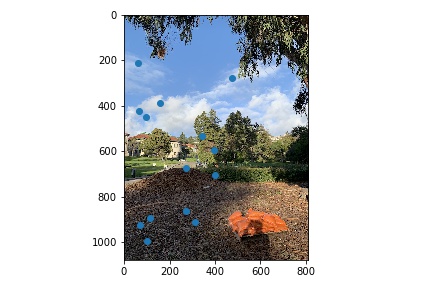

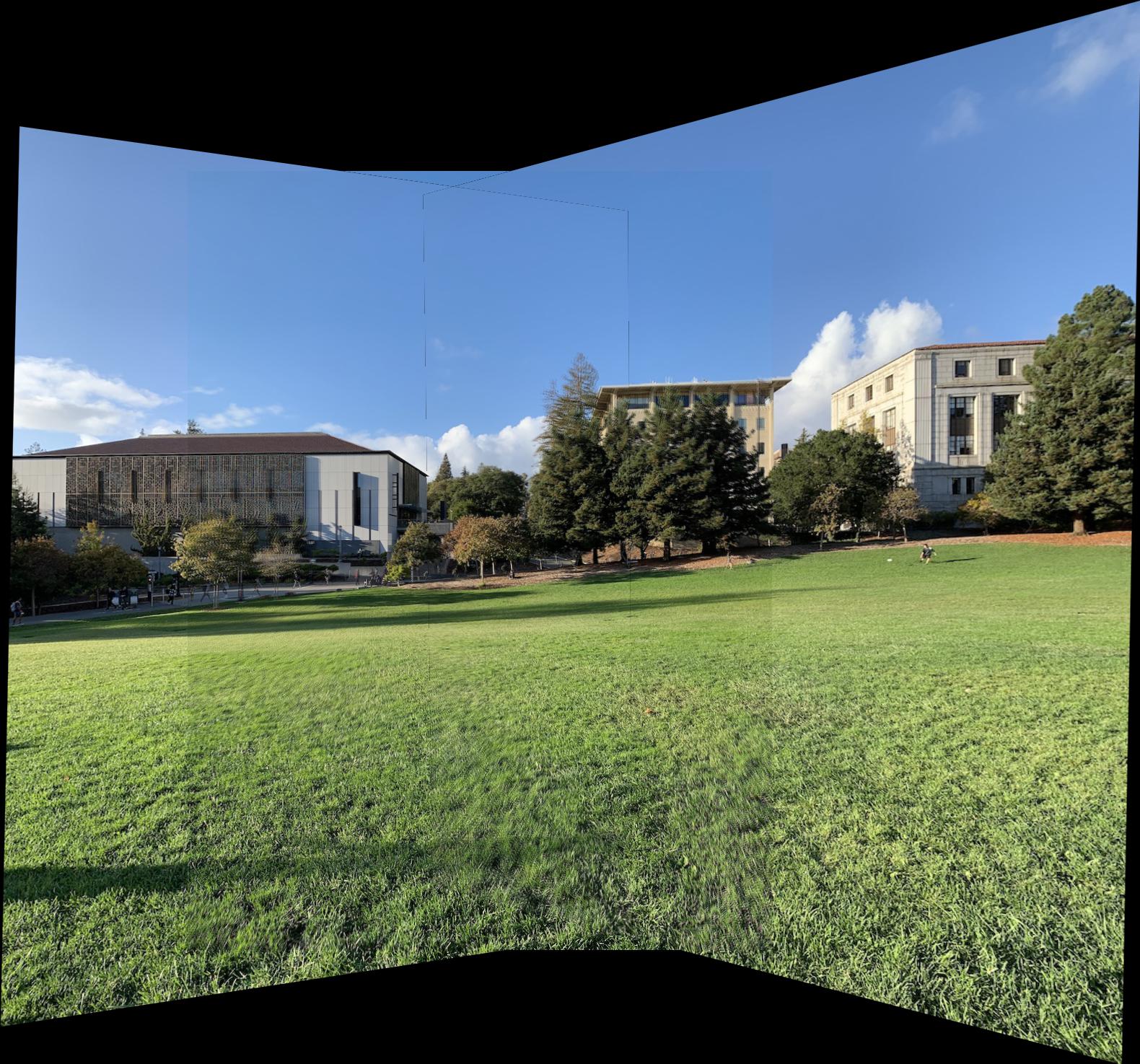

The final mosaic is from outside the Doe Library facing the East Asian Library. Once again, this is a set of 3 images where the 1st and 3rd images are aligned to the plane of the 2nd image. Here are the manually generated correspondences:

Images 1 and 2:

Images 2 and 3:

And the final mosaic:

Honestly, the coolest part was understanding the homographies and implementing the homography transformation. I find the transformation fascinating and it was super fun to implement it!

In this part of the project, I implemented a corner feature detector, extracted feature descriptors, matched feature descriptors, and implemented RANSAC. I then reran the pipeline with automatic homography computation to reproduce the mosaics from part 4a. Similar to Brown et al., I selected 500 feature points using adaptive non-maximal suppression with a robustness parameter of 0.9. For RANSAC, I used an epsilon value of 2 and 10,000 iterations. Again, for each mosaic I mapped all the images to the plane of image 2. When extracting the feature descriptors, I used a 40x40 patch and applied a Gaussian blur before downsampling to 8x8. Each feature descriptor is then bias and gain normalized. For feature matching, I used a threshold of 0.6 for Lowe's ratio test.
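To make the RANSAC step concrete, here is a minimal sketch of a 4-point RANSAC loop for homographies. It is an illustration under stated assumptions rather than the code that produced the results here: it assumes epsilon is a reprojection distance in pixels, that the final homography is refit on the largest inlier set, and that matched points arrive as two (N, 2) arrays. The names `fit_homography`, `project`, and `ransac_homography` are hypothetical.

```python
import numpy as np


def fit_homography(src, dst):
    """Least-squares homography with H[2, 2] = 1 (assumed formulation)."""
    rows, rhs = [], []
    for (x, y), (xp, yp) in zip(src, dst):
        rows.append([x, y, 1, 0, 0, 0, -x * xp, -y * xp])
        rows.append([0, 0, 0, x, y, 1, -x * yp, -y * yp])
        rhs.extend([xp, yp])
    h, *_ = np.linalg.lstsq(np.array(rows, float), np.array(rhs, float), rcond=None)
    return np.append(h, 1.0).reshape(3, 3)


def project(H, pts):
    """Apply H to (N, 2) points and normalize the homogeneous coordinate."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


def ransac_homography(src, dst, eps=2.0, n_iters=10_000, seed=0):
    """Return (H, inlier_mask) for matched points src -> dst.

    eps: inlier threshold on reprojection distance (assumed to be in pixels).
    n_iters: number of random 4-point samples to try.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(src), dtype=bool)
    for _ in range(n_iters):
        # Four correspondences are the minimum needed to determine a homography.
        idx = rng.choice(len(src), size=4, replace=False)
        H = fit_homography(src[idx], dst[idx])
        # Degenerate samples can yield inf/NaN errors; those never count as inliers.
        with np.errstate(divide="ignore", invalid="ignore"):
            err = np.linalg.norm(project(H, src) - dst, axis=1)
            inliers = err < eps
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    if best_inliers.sum() < 4:
        raise ValueError("RANSAC did not find a consensus set of at least 4 points")
    # Refit on every inlier of the best model for a more stable estimate.
    return fit_homography(src[best_inliers], dst[best_inliers]), best_inliers
```

The defaults mirror the values quoted above (epsilon of 2 and 10,000 iterations). Fewer iterations are often enough when most matches surviving the ratio test are already correct.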

Outside Soda Hall, auto-generated final correspondences:

And the final product:

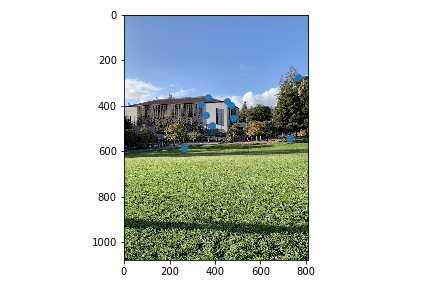

Lawn outside the Valley Life Science building:

Correspondences between image 1 and image 2:

Correspondences between image 2 and image 3:

And the final product:

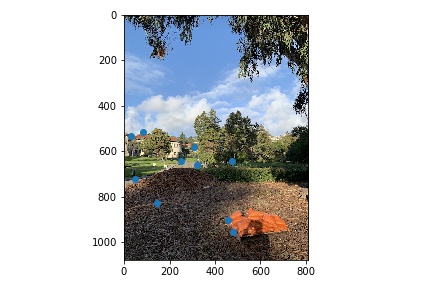

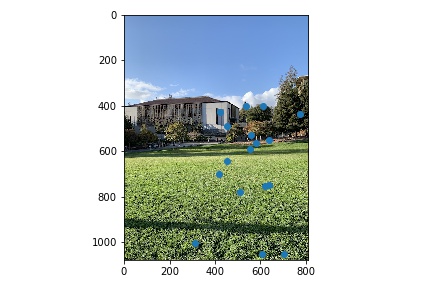

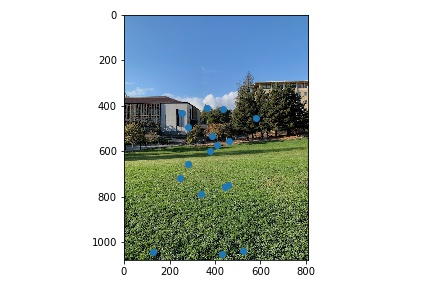

And finally, the lawn outside the Doe Library:

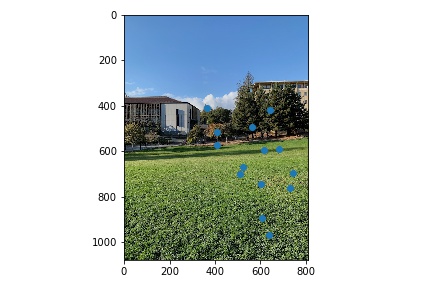

Correspondences between image 1 and image 2:

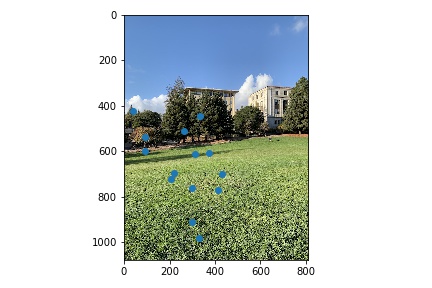

Correspondences between image 2 and image 3:

And the final product:

I thoroughly enjoyed the second portion of the assignment (4b). I found this section much more fun and, to be honest, much easier. The coolest thing I learned from this project is just how much of a difference small changes in correspondences can make. I had to be very careful to double-check alignments when projecting onto a common canvas. Even so, some of my results are partially blurry because of small pixel misalignments. I would be curious to see whether the point correspondences could be improved by tuning some of the algorithm parameters (RANSAC and ANMS).