Image Warping and Mosaicing - Part B

- Kumar Krishna Agrawal UC Berkeley

Statement

Cameras capture the three-dimensional world onto two-dimensional planes \(P\). Assuming the camera does not translate between shots, consider two different views \(P_1\) and \(P_2\) of a scene. With reasonable overlap between the two views, we can define correspondences between points on the two planes. We represent this mapping by $$x_i \in P_1 \to x'_i \in P_2$$ This is an example of a projective transformation: working in homogeneous coordinates, we can find a homography matrix \(H\) such that $$x'_i = Hx_i \qquad H = \begin{bmatrix} h_{11} & h_{12} & h_{13} \\ h_{21} & h_{22} & h_{23} \\ h_{31} & h_{32} & h_{33}\end{bmatrix}$$

As our homogeneous representation is scale invariant, we can fix \(h_{33} = 1\), so finding \(H\) reduces to estimating \(8\) parameters. In this work we use Direct Linear Transformation (DLT)-style algorithms. In particular, writing \(x_i = (x_i, y_i)\) and \(x'_i = (x'_i, y'_i)\) for the coordinates of each correspondence, we set up the following regression problem $$\begin{align} \begin{bmatrix} x_1 & y_1 & 1 & 0 & 0 & 0 & - x'_1 x_1 & -x'_1 y_1 \\ 0 & 0 & 0 & x_1 & y_1 & 1 & - y'_1 x_1 & -y'_1 y_1 \\ \vdots & \vdots & \vdots & \vdots & \vdots & \vdots & \vdots & \vdots \\ x_n & y_n & 1 & 0 & 0 & 0 & - x'_n x_n & -x'_n y_n\\ 0 & 0 & 0 & x_n & y_n & 1 & - y'_n x_n & -y'_n y_n \\ \end{bmatrix} \begin{bmatrix} h_{11} \\ h_{12} \\ h_{13} \\ h_{21} \\ h_{22} \\ h_{23} \\ h_{31} \\ h_{32} \end{bmatrix} = \begin{bmatrix} x'_1 \\ y'_1 \\ \vdots \\ x'_n \\ y'_n \end{bmatrix} \end{align} $$
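As a rough illustration of this regression, the sketch below builds the \(2n \times 8\) system and solves it in the least-squares sense with NumPy. The function names `compute_homography` and `apply_homography` are hypothetical helpers chosen for this example, not names from the project code, and the sketch assumes \(h_{33} = 1\) and that points are given as \((x, y)\) rows.

```python
import numpy as np

def compute_homography(src, dst):
    """Estimate H (3x3, h33 = 1) such that dst ~ H @ src in homogeneous coordinates.

    src, dst: arrays of shape (n, 2) holding (x, y) correspondences, n >= 4.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    if src.shape != dst.shape or src.ndim != 2 or src.shape[1] != 2:
        raise ValueError("src and dst must both have shape (n, 2)")
    n = src.shape[0]
    if n < 4:
        raise ValueError("at least 4 correspondences are required")

    x, y = src[:, 0], src[:, 1]
    xp, yp = dst[:, 0], dst[:, 1]

    # Two rows per correspondence: even rows constrain x', odd rows constrain y'.
    A = np.zeros((2 * n, 8))
    A[0::2, 0], A[0::2, 1], A[0::2, 2] = x, y, 1.0
    A[0::2, 6], A[0::2, 7] = -xp * x, -xp * y
    A[1::2, 3], A[1::2, 4], A[1::2, 5] = x, y, 1.0
    A[1::2, 6], A[1::2, 7] = -yp * x, -yp * y

    b = np.empty(2 * n)
    b[0::2], b[1::2] = xp, yp

    # Least-squares solve; exact when n == 4 and the points are in general position.
    h, *_ = np.linalg.lstsq(A, b, rcond=None)
    return np.append(h, 1.0).reshape(3, 3)

def apply_homography(H, pts):
    """Map (n, 2) points through H and divide out the homogeneous coordinate."""
    pts = np.asarray(pts, dtype=float)
    hom = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return hom[:, :2] / hom[:, 2:3]
```

With exactly four points in general position the system is square and the fit is exact; with more points, `np.linalg.lstsq` returns the minimizer of the squared residual of the linear system, which is an algebraic error rather than a geometric reprojection error.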

Solving the above system requires a minimum of \(4\) point correspondences, since each correspondence contributes two equations. However, correspondences are often inexact and the measurements noisy, so using more than \(4\) points and solving in the least-squares sense improves the estimate of the homography. In this project we create mosaics from images captured from a single point but projected onto different planes, by stitching these frames onto a single canvas.

Shooting Images

Several views were captured with an iPhone 11 camera at different times of the day. Some illustrative examples:

Finding Correspondence

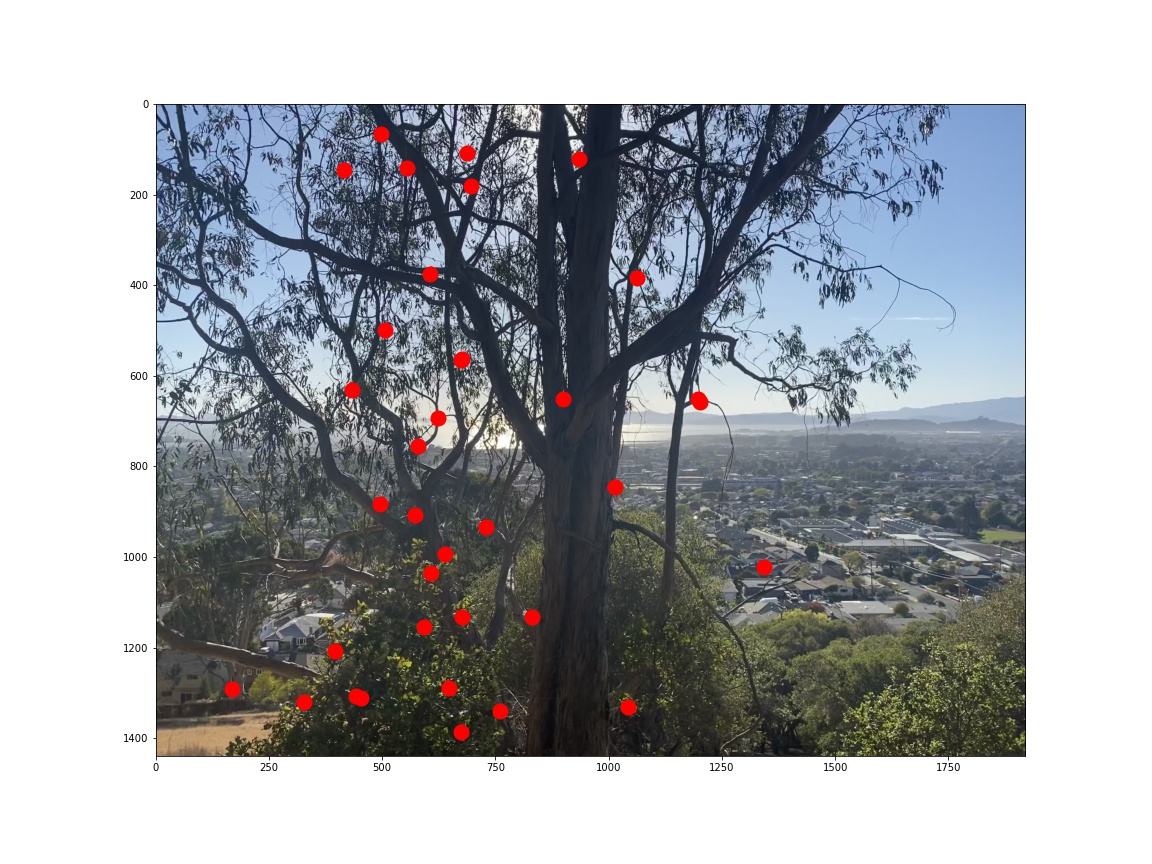

Computing the homography requires us to find correspondences between two views. In this part of the project we automate this procedure using Harris corner detectors with adaptive non-maximal suppression. With 500 potential feature points per image, we find correspondences between pairs of images, thresholding the matching error in the style of Lowe's ratio test.
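The selection and matching steps described above could look roughly like the sketch below. It assumes corner coordinates, positive corner strengths, and feature descriptors have already been computed elsewhere. The names `anms` and `match_ratio` and the values `c_robust=0.9` and `ratio=0.6` are illustrative assumptions, not values taken from the project. The matching rule shown is a ratio test between the best and second-best match error, which is one common reading of the thresholding step.

```python
import numpy as np

def anms(coords, strengths, n_keep=500, c_robust=0.9):
    """Adaptive non-maximal suppression (sketch).

    coords: (n, 2) corner positions; strengths: (n,) positive corner responses.
    Returns indices of the n_keep corners with the largest suppression radius.
    Uses an O(n^2) distance matrix, so it is only suitable for a few thousand corners.
    """
    coords = np.asarray(coords, dtype=float)
    strengths = np.asarray(strengths, dtype=float)
    d2 = ((coords[:, None, :] - coords[None, :, :]) ** 2).sum(-1)
    # Corner j suppresses corner i when i is sufficiently weaker than j.
    suppressed_by = strengths[:, None] < c_robust * strengths[None, :]
    d2 = np.where(suppressed_by, d2, np.inf)
    radii = d2.min(axis=1)  # squared radius to the nearest suppressing corner
    order = np.argsort(-radii, kind="stable")
    return order[:n_keep]

def match_ratio(desc1, desc2, ratio=0.6):
    """Match descriptors with a nearest / second-nearest ratio test (sketch).

    desc1: (n1, d), desc2: (n2, d) with n2 >= 2.
    Returns an (m, 2) integer array of (index in desc1, index in desc2) pairs.
    """
    desc1 = np.asarray(desc1, dtype=float)
    desc2 = np.asarray(desc2, dtype=float)
    ssd = ((desc1[:, None, :] - desc2[None, :, :]) ** 2).sum(-1)
    nearest = np.argsort(ssd, axis=1)[:, :2]
    rows = np.arange(len(desc1))
    best = ssd[rows, nearest[:, 0]]
    second = ssd[rows, nearest[:, 1]]
    # Keep a match only if it is clearly better than the runner-up.
    keep = best < ratio * second
    return np.column_stack([rows[keep], nearest[keep, 0]])
```

The ratio test discards ambiguous features whose two closest candidates are almost equally good, which removes many false matches before the homography is estimated.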

Generating a Mosaic

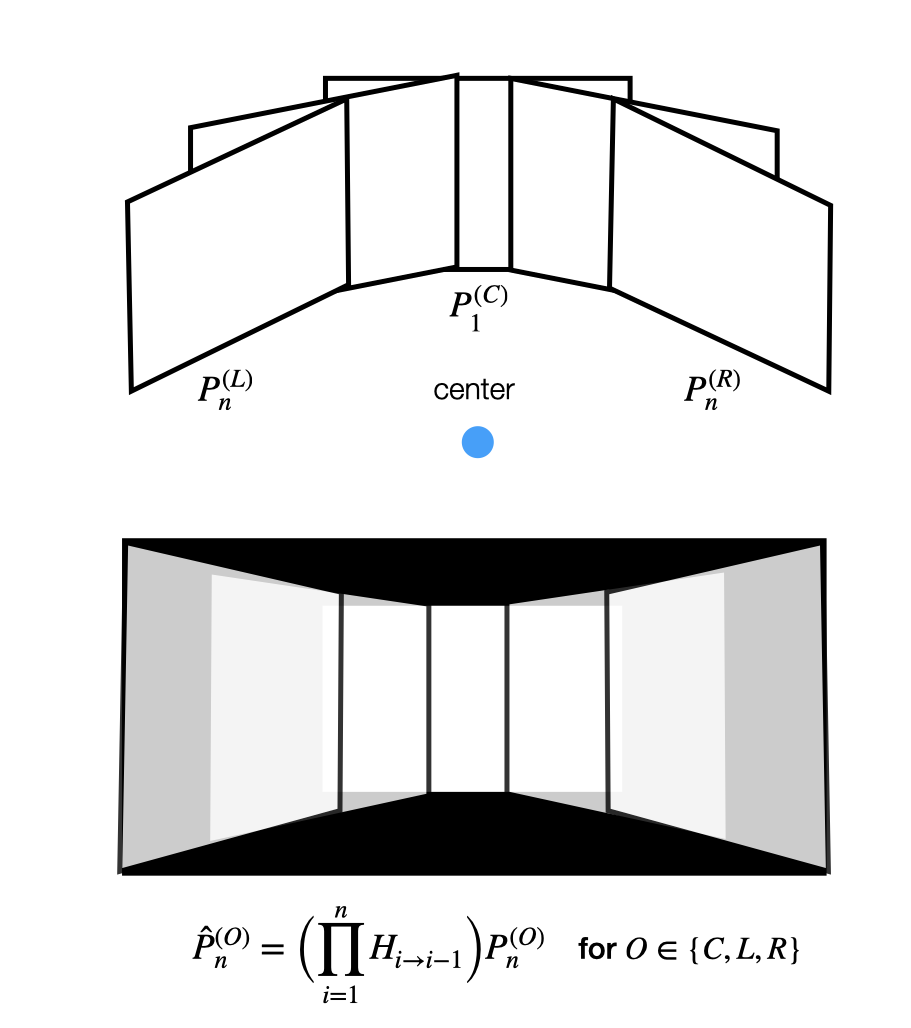

Warping images with a projective transform allows us to do something interesting: stitching images taken from the same point, but with different perspectives, onto a single canvas. The high-level idea is outlined below.
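One way to realize the warping step is inverse warping: for every pixel of the output canvas, map it back through \(H^{-1}\) and look up the source pixel. The sketch below is a minimal version under stated assumptions: `warp_image` is a hypothetical name, \(H\) maps source coordinates to canvas coordinates, pixel coordinates are \((x, y)\) with the origin at the top-left, and nearest-neighbor sampling stands in for whatever interpolation the project actually uses.

```python
import numpy as np

def warp_image(img, H, out_shape):
    """Inverse-warp img onto a canvas of shape out_shape = (height, width).

    H maps source (x, y, 1) to canvas coordinates. Returns the warped image and
    a boolean mask marking canvas pixels that received a source value.
    """
    h_out, w_out = out_shape
    h_in, w_in = img.shape[:2]

    # Homogeneous coordinates of every canvas pixel, as a 3 x N array.
    ys, xs = np.mgrid[0:h_out, 0:w_out]
    canvas = np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)]).astype(float)

    # Map canvas pixels back into the source image.
    src = np.linalg.inv(H) @ canvas
    w = src[2]
    ok = np.abs(w) > 1e-12           # skip points that map to infinity
    sx = np.full(w.shape, -1.0)
    sy = np.full(w.shape, -1.0)
    sx[ok] = src[0, ok] / w[ok]
    sy[ok] = src[1, ok] / w[ok]

    # Keep only locations that round to a pixel inside the source image.
    valid = (sx > -0.5) & (sx < w_in - 0.5) & (sy > -0.5) & (sy < h_in - 0.5)
    sxi = np.rint(sx[valid]).astype(int)
    syi = np.rint(sy[valid]).astype(int)

    out = np.zeros((h_out * w_out,) + img.shape[2:], dtype=img.dtype)
    out[valid] = img[syi, sxi]       # nearest-neighbor lookup
    return out.reshape((h_out, w_out) + img.shape[2:]), valid.reshape(h_out, w_out)
```

Iterating over destination pixels avoids the holes that forward warping leaves in the output. The returned mask is convenient later for deciding where frames overlap.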

Blending Images

Since multiple frames land on a single canvas with significant overlap, blending is required to create a single mosaic image. For this part, we first compute the overlap between two frames from their masks and use it to weight the blend. We apply Gaussian filters to the generated masks so that the transition across the overlap is seamless.
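A minimal sketch of mask-based blending is given below, assuming both frames have already been warped onto the same canvas and each comes with a boolean validity mask. The names `blur_mask` and `blend` and the default `sigma` are assumptions for illustration. The Gaussian filter is written with plain NumPy so the example is self-contained, and the per-pixel weighted average is one plausible way to use the smoothed masks, not necessarily the project's exact scheme.

```python
import numpy as np

def blur_mask(mask, sigma):
    """Separable Gaussian blur of a 2D mask, with edge padding."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    k /= k.sum()
    padded = np.pad(mask.astype(float), radius, mode="edge")
    # Blur along rows, then along columns; "valid" undoes the padding.
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)

def blend(img1, mask1, img2, mask2, sigma=5.0):
    """Blend two canvas-aligned frames using Gaussian-smoothed masks as weights."""
    # Multiply by the original mask so weights never leak outside a frame.
    w1 = blur_mask(mask1, sigma) * mask1
    w2 = blur_mask(mask2, sigma) * mask2
    total = w1 + w2
    total = np.where(total > 0, total, 1.0)  # avoid dividing by zero off-frame
    if img1.ndim == 3:                       # broadcast weights over color channels
        w1, w2, total = w1[..., None], w2[..., None], total[..., None]
    return (w1 * img1 + w2 * img2) / total
```

Outside the overlap only one weight is nonzero, so each frame is reproduced unchanged there. Inside the overlap the weights fall off smoothly toward each frame's boundary, which hides the seam.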

Putting it together

Using the tools sketched above, we're ready to generate some mosaics! Mouse over each mosaic to compare it with the manually created version.