Angela Xu

In this project, I used the IMM dataset to train convolutional neural networks to detect facial keypoints. I started with a single keypoint, the nose tip, and then progressed to the full set of facial keypoints.

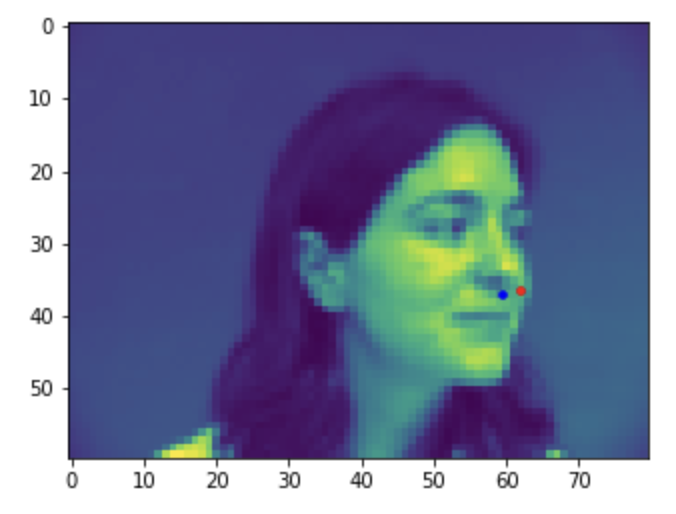

First, I wrote a custom PyTorch Dataset class so that a DataLoader could read the point and image files. Here are some example images with the ground-truth nose keypoints plotted:

To create the convolutional neural network, I followed PyTorch's tutorial and defined a Net object.

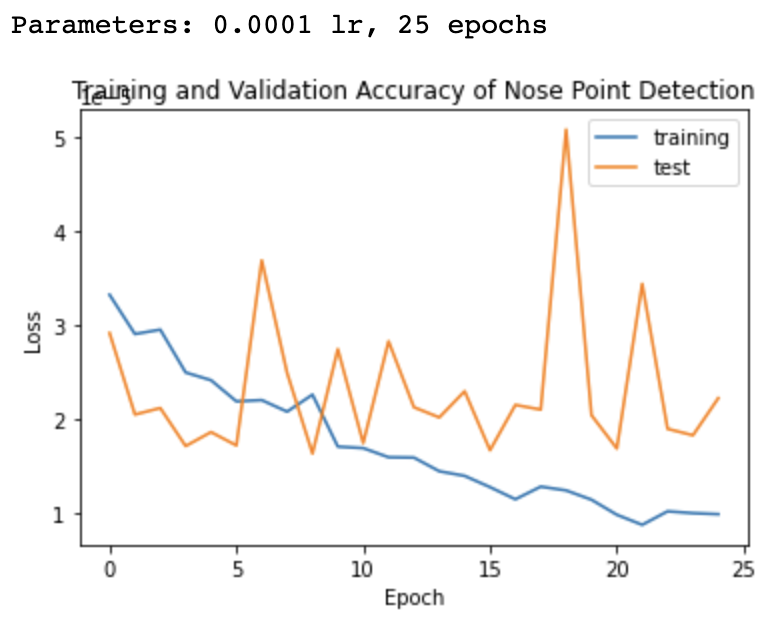

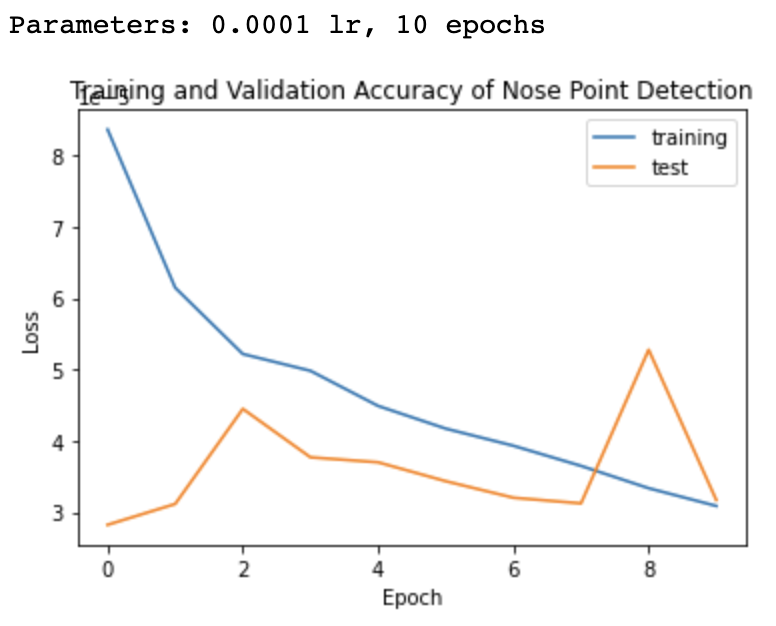

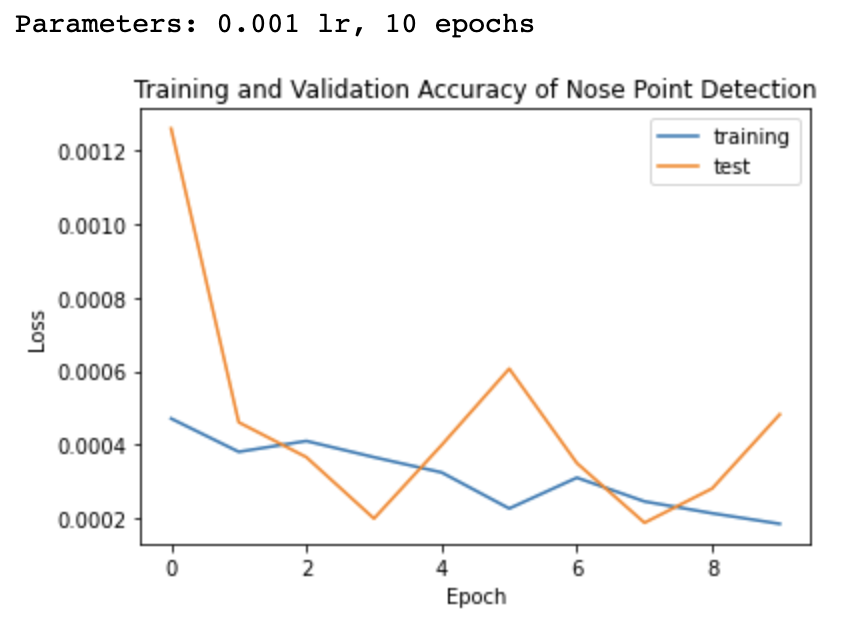

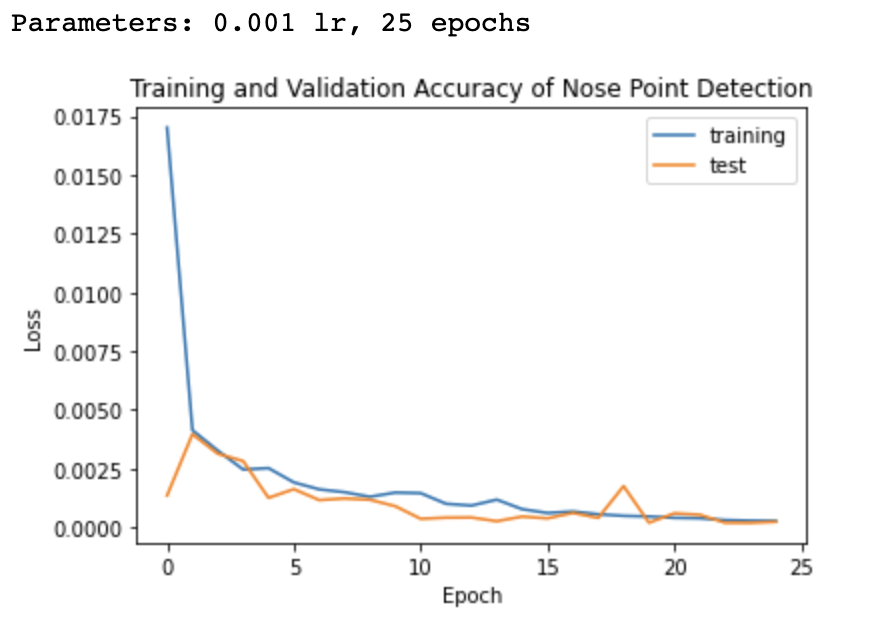
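The data-loading and network steps above can be sketched as follows. This is a minimal sketch, not the code used in this project: the class names `KeypointDataset` and `NoseNet`, the assumption that images are already loaded as grayscale arrays, the 60x80 input size, and the layer sizes are all hypothetical choices for illustration. Parsing the IMM point files is left to the caller.

```python
import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.utils.data import Dataset, DataLoader


class KeypointDataset(Dataset):
    """Pairs grayscale images with keypoints (hypothetical helper).

    Assumes each image is an (H, W) array and each keypoint set is an
    (N, 2) array of (x, y) coordinates, already read from disk.
    """

    def __init__(self, images, keypoints, point_index=None, transform=None):
        self.images = images
        self.keypoints = keypoints
        self.point_index = point_index  # e.g. the nose-tip index; None keeps all points
        self.transform = transform      # optional (image, points) -> (image, points)

    def __len__(self):
        return len(self.images)

    def __getitem__(self, idx):
        image, points = self.images[idx], self.keypoints[idx]
        if self.transform is not None:
            image, points = self.transform(image, points)
        if self.point_index is not None:
            points = points[self.point_index:self.point_index + 1]
        # image -> (1, H, W) float tensor; keypoints -> flat (2 * N,) tensor
        image = torch.tensor(np.asarray(image, dtype=np.float32)).unsqueeze(0)
        points = torch.tensor(np.asarray(points, dtype=np.float32)).reshape(-1)
        return image, points


class NoseNet(nn.Module):
    """Small CNN regressing (x, y) for num_points keypoints from a 1x60x80 image."""

    def __init__(self, num_points=1):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 12, 5)   # 60x80 -> 56x76, pool -> 28x38
        self.conv2 = nn.Conv2d(12, 24, 3)  # 28x38 -> 26x36, pool -> 13x18
        self.conv3 = nn.Conv2d(24, 32, 3)  # 13x18 -> 11x16, pool -> 5x8
        self.fc1 = nn.Linear(32 * 5 * 8, 128)
        self.fc2 = nn.Linear(128, 2 * num_points)

    def forward(self, x):
        x = F.max_pool2d(F.relu(self.conv1(x)), 2)
        x = F.max_pool2d(F.relu(self.conv2(x)), 2)
        x = F.max_pool2d(F.relu(self.conv3(x)), 2)
        x = torch.flatten(x, 1)
        return self.fc2(F.relu(self.fc1(x)))


# Usage with random stand-in data (8 images, 58 points each)
images = [np.random.rand(60, 80) for _ in range(8)]
kps = [np.random.rand(58, 2) for _ in range(8)]
loader = DataLoader(KeypointDataset(images, kps, point_index=0), batch_size=4, shuffle=True)
xb, yb = next(iter(loader))
print(NoseNet()(xb).shape)  # torch.Size([4, 2])
```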

I experimented with different numbers of epochs and learning rates. The following graphs show different combinations:

I chose the fourth option: a learning rate of 0.001 and 25 epochs.

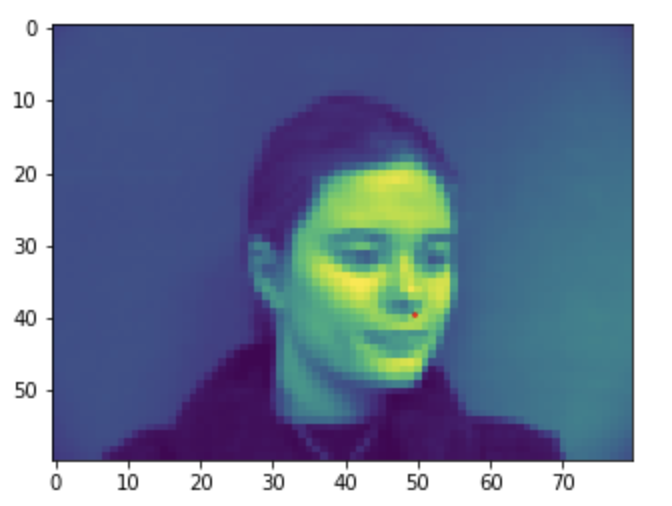

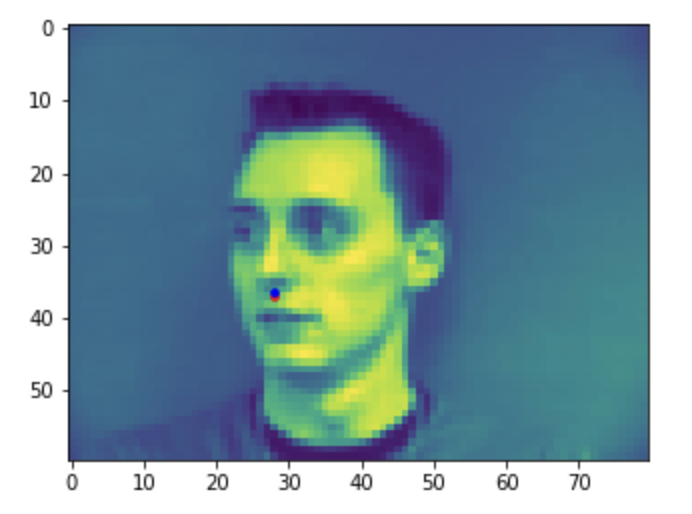

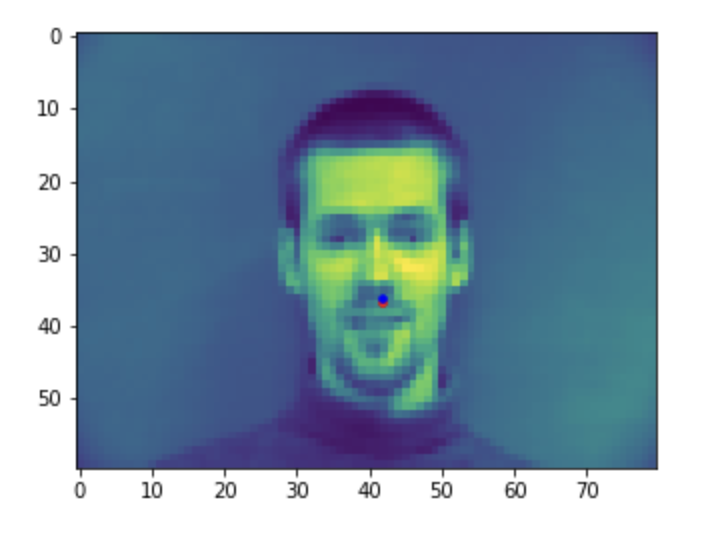

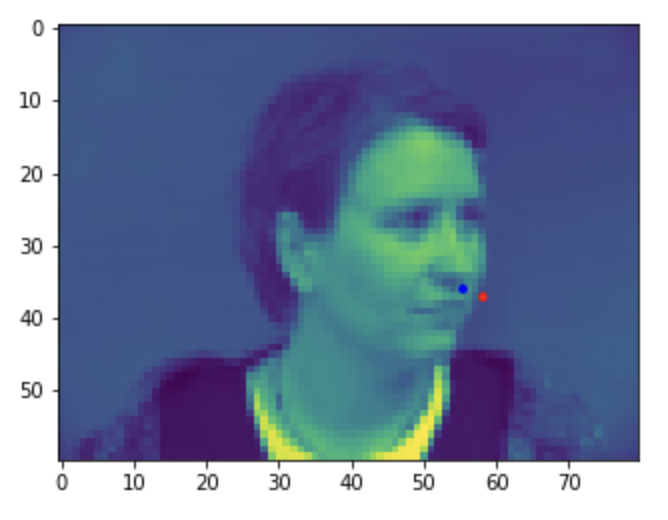
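A minimal sketch of a training loop that could produce loss curves like these, assuming MSE loss and the Adam optimizer (the write-up does not say which loss or optimizer was used). The helper name `train` is hypothetical.

```python
import torch
import torch.nn as nn


def train(model, train_loader, val_loader, epochs=25, lr=1e-3):
    """Train with MSE loss and Adam (assumed choices); return per-epoch losses."""
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    loss_fn = nn.MSELoss()
    train_losses, val_losses = [], []
    for _ in range(epochs):
        # Training pass: average loss over the batches seen during this epoch
        model.train()
        total, count = 0.0, 0
        for x, y in train_loader:
            optimizer.zero_grad()
            loss = loss_fn(model(x), y)
            loss.backward()
            optimizer.step()
            total += loss.item() * len(x)
            count += len(x)
        train_losses.append(total / count)

        # Validation pass: no gradient updates
        model.eval()
        total, count = 0.0, 0
        with torch.no_grad():
            for x, y in val_loader:
                total += loss_fn(model(x), y).item() * len(x)
                count += len(x)
        val_losses.append(total / count)
    return train_losses, val_losses
```

Calling `train(model, train_loader, val_loader, epochs=25, lr=1e-3)` corresponds to the setting chosen above; running it once per (learning rate, epochs) pair and plotting the two returned lists gives one graph per combination.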

Here are 2 successful nose-tip detections, followed by 2 failures. The failures may be because I used a fairly limited dataset with no augmentation.

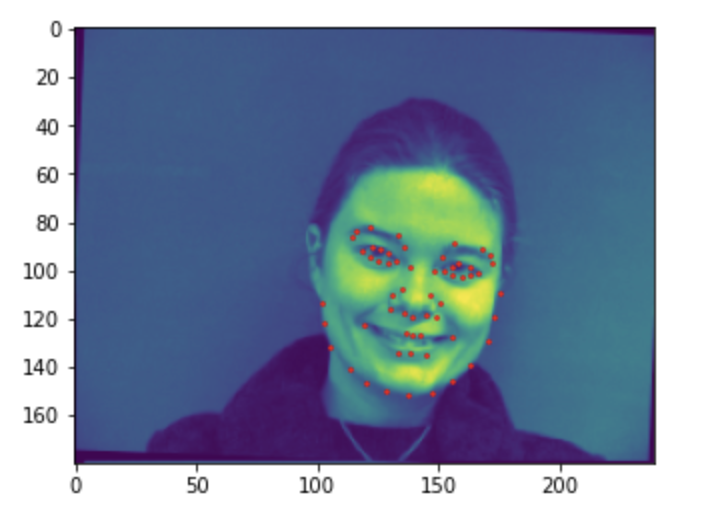

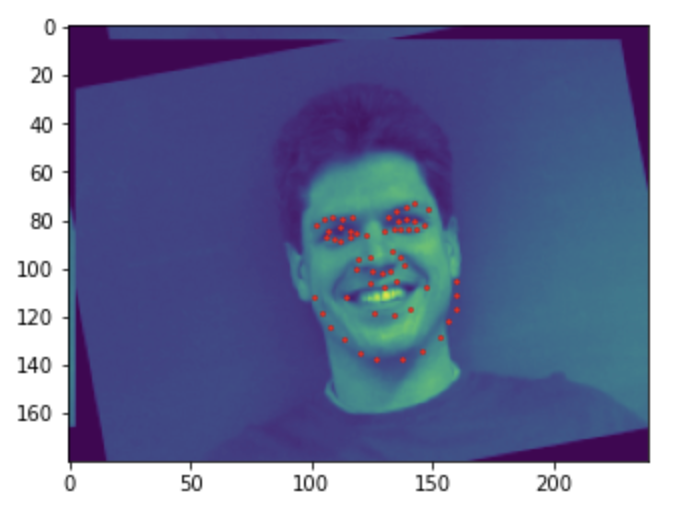

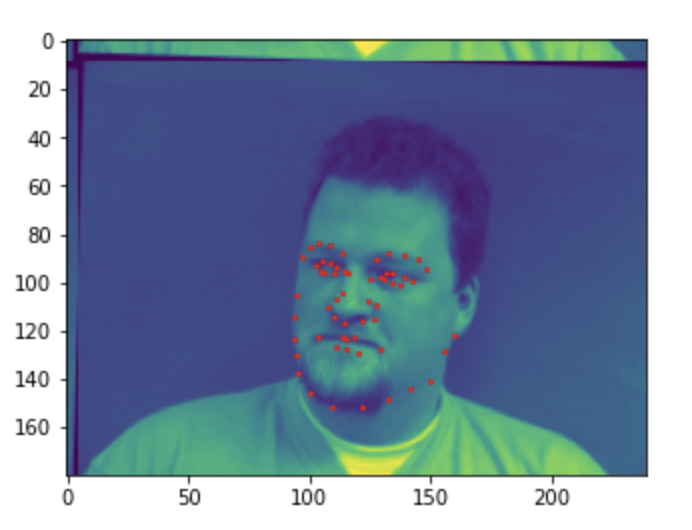

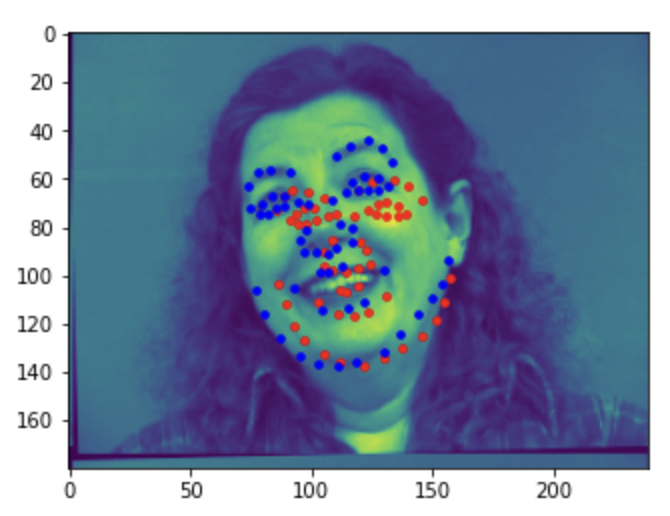

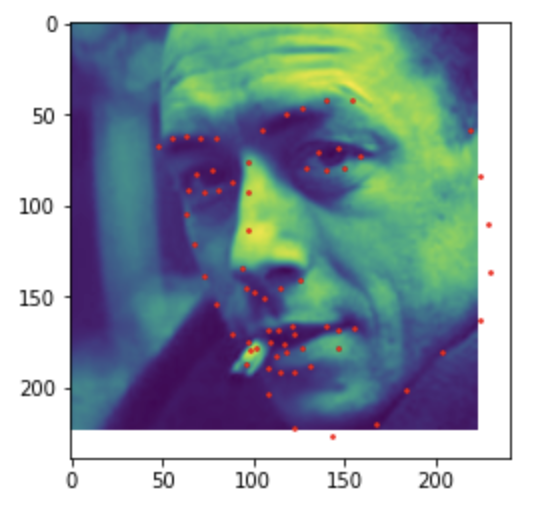

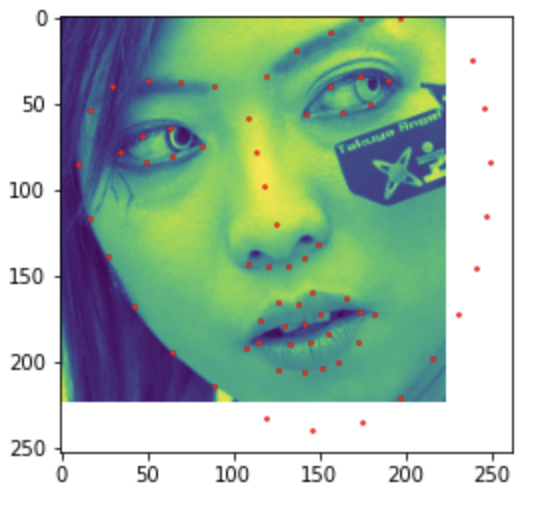

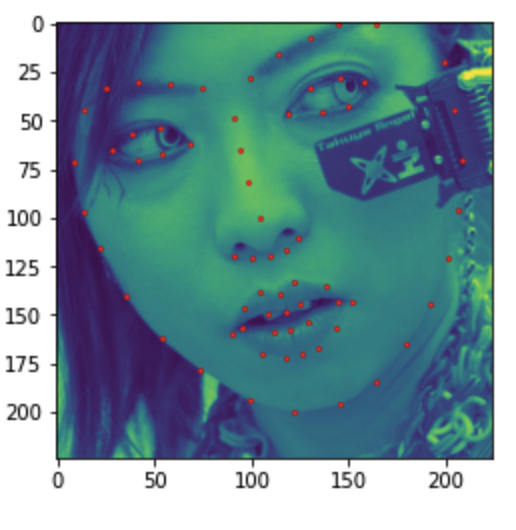

For the full-face DataLoader, I wrote a function that applies a random rotation between -15 and 15 degrees and random x and y shifts between -10 and 10 pixels. Here are some example images with the ground-truth full facial keypoints plotted after these augmentations:

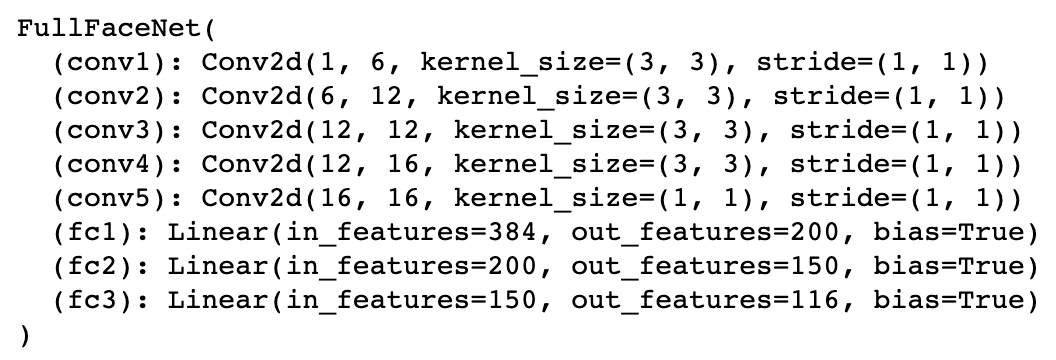

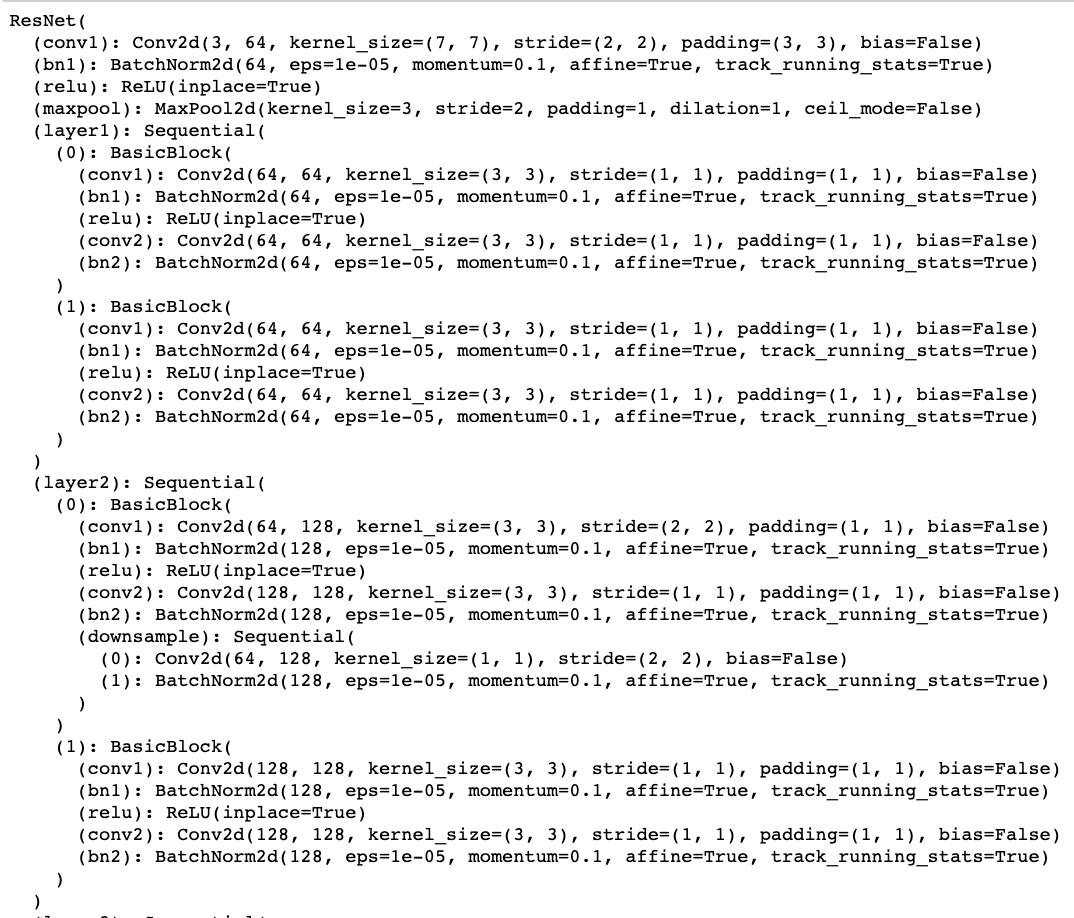

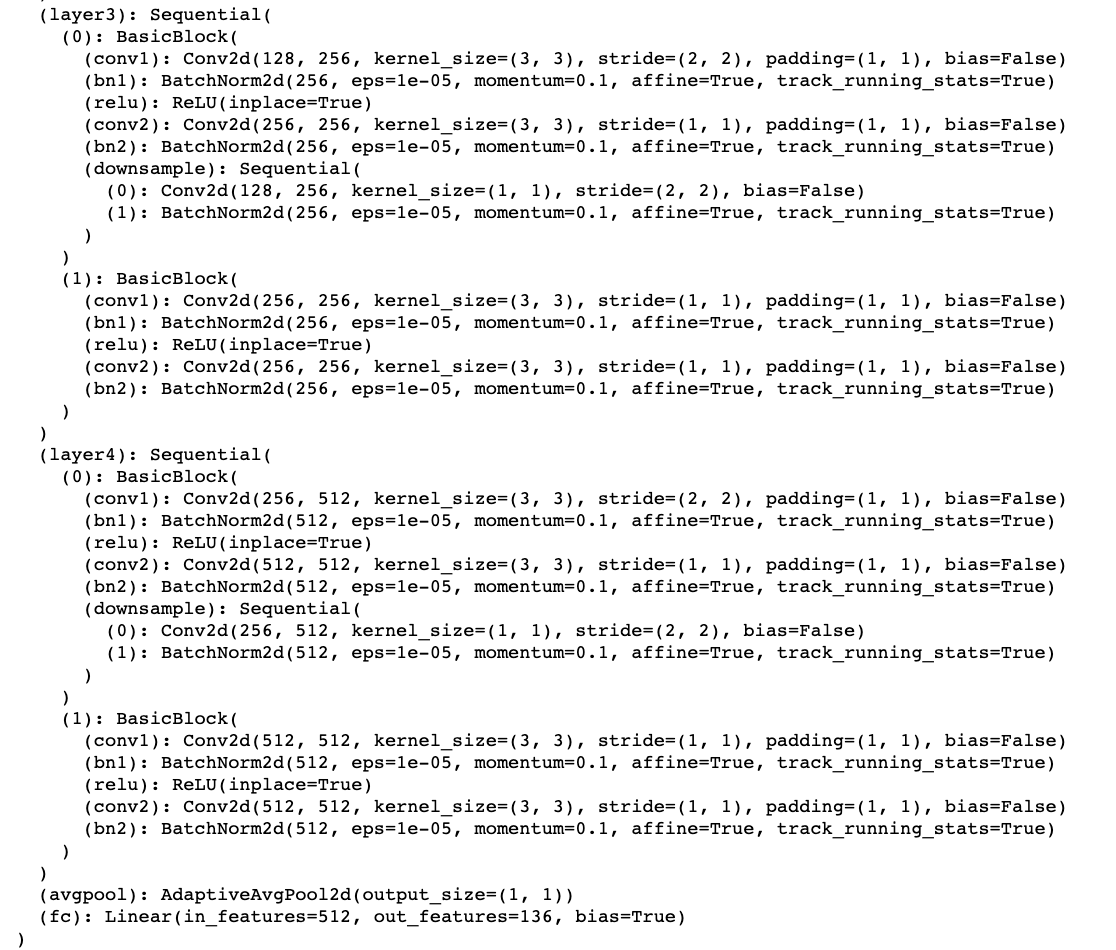
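The augmentation described above can be sketched with NumPy alone. This assumes the angle is in degrees, the shifts are in pixels, keypoints are (x, y) pixel coordinates, and the rotation is about the image center; the function names are hypothetical, and nearest-neighbour resampling stands in for whatever interpolation the original code used. The important detail is that the image and its keypoints go through the same transform.

```python
import numpy as np


def rotate_shift(image, keypoints, angle, tx, ty):
    """Rotate by `angle` radians about the image center, then shift by (tx, ty).

    image: (H, W) array; keypoints: (N, 2) array of (x, y) pixel coordinates.
    """
    h, w = image.shape
    center = np.array([(w - 1) / 2.0, (h - 1) / 2.0])
    shift = np.array([tx, ty])
    cos, sin = np.cos(angle), np.sin(angle)
    R = np.array([[cos, -sin], [sin, cos]])

    # Forward map for keypoints: p' = R (p - c) + c + t
    new_kps = (keypoints - center) @ R.T + center + shift

    # Inverse map for pixels: p = R^T (p' - c - t) + c, nearest-neighbour lookup
    ys, xs = np.mgrid[0:h, 0:w]
    out_pts = np.stack([xs.ravel(), ys.ravel()], axis=1).astype(float)
    src = (out_pts - center - shift) @ R + center
    sx = np.rint(src[:, 0]).astype(int)
    sy = np.rint(src[:, 1]).astype(int)
    valid = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    out = np.zeros(h * w, dtype=image.dtype)  # pixels from outside the image stay 0
    out[valid] = image[sy[valid], sx[valid]]
    return out.reshape(h, w), new_kps


def random_rotate_shift(image, keypoints, max_angle=15.0, max_shift=10.0, rng=None):
    """Random angle in [-max_angle, max_angle] degrees, shifts in [-max_shift, max_shift] px."""
    rng = np.random.default_rng() if rng is None else rng
    angle = np.deg2rad(rng.uniform(-max_angle, max_angle))
    tx, ty = rng.uniform(-max_shift, max_shift, size=2)
    return rotate_shift(image, keypoints, angle, tx, ty)
```

A function like `random_rotate_shift` can be passed as the Dataset's per-sample transform so that each epoch sees a different random variant of every face.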

The architecture of this CNN is shown below:

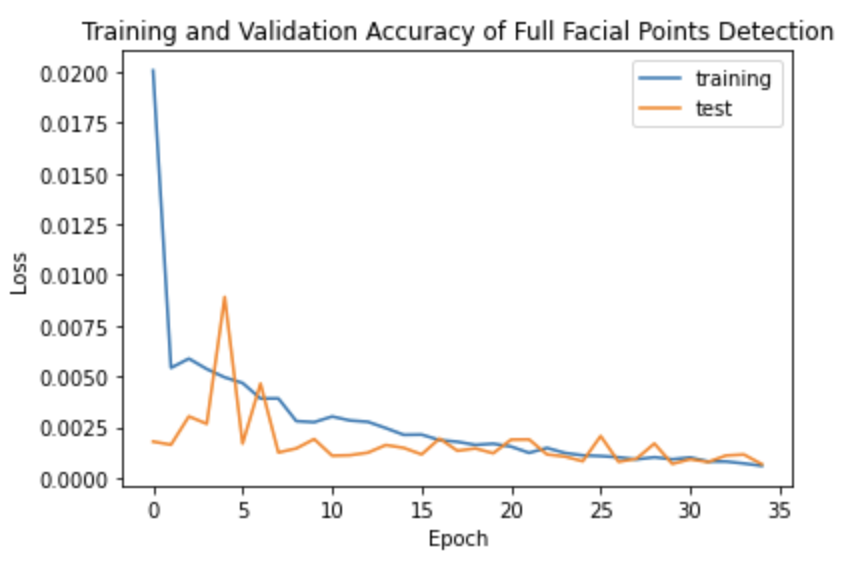

I again used a learning rate of 0.001 and trained for 25 epochs. Here is the loss graph:

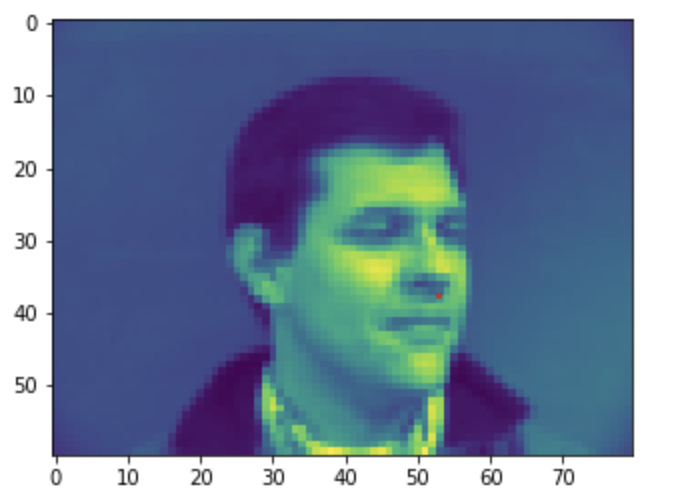

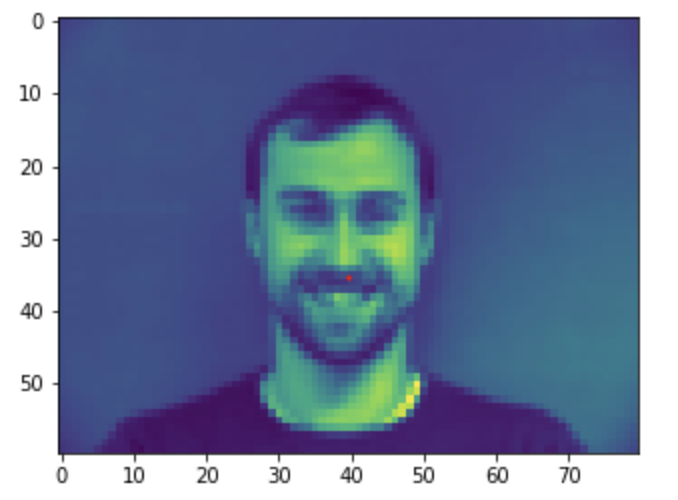

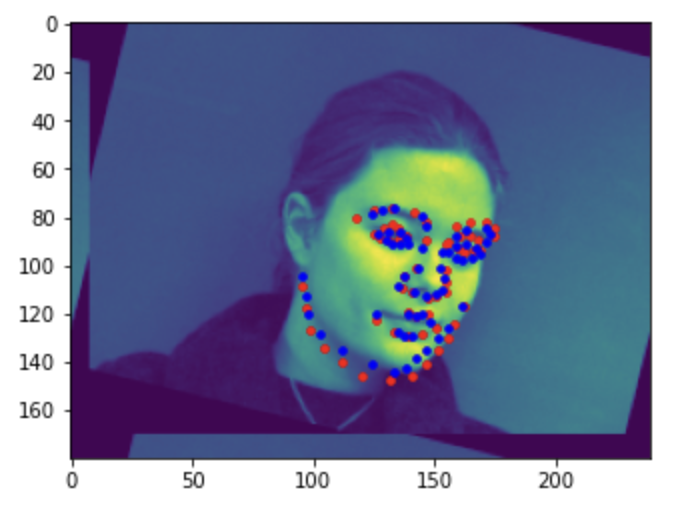

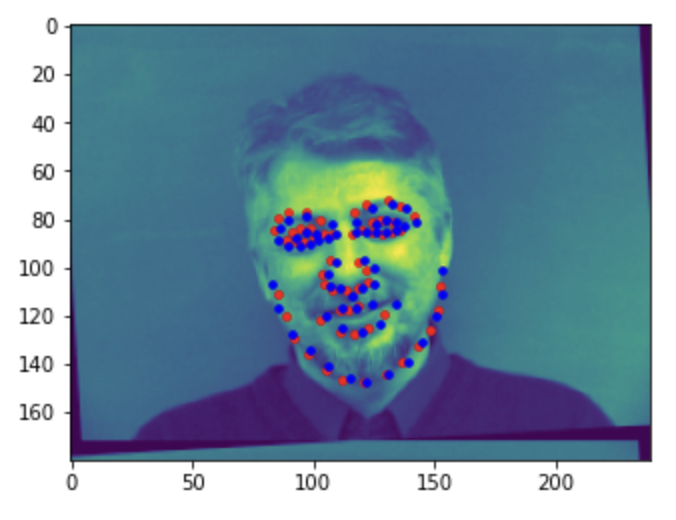

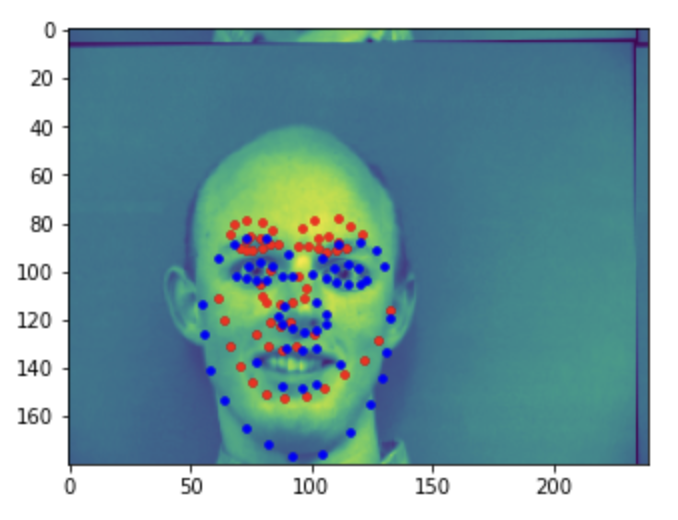

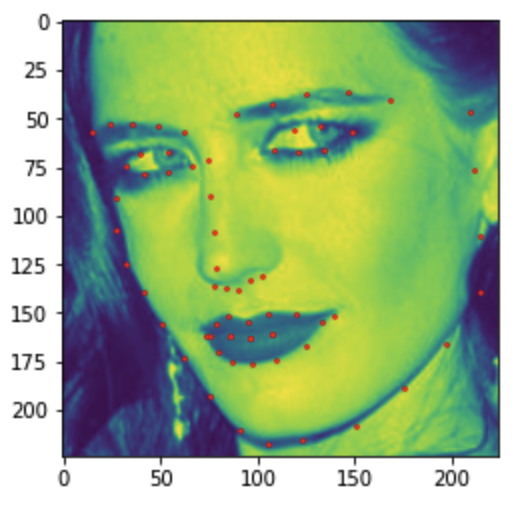

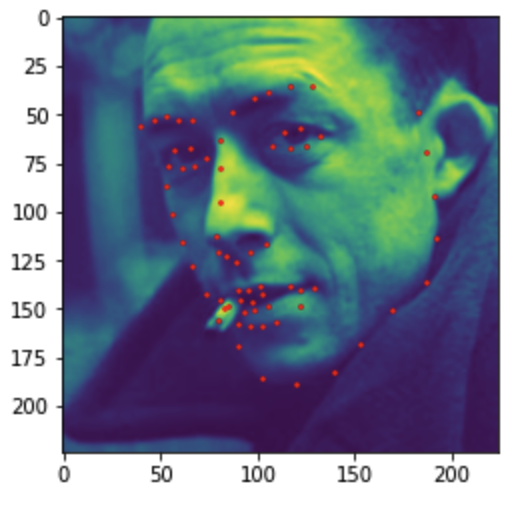

Here are 2 successful full-face detections, followed by 2 failures. Even with augmentation, varied expressions and head angles may have made these faces difficult to predict.

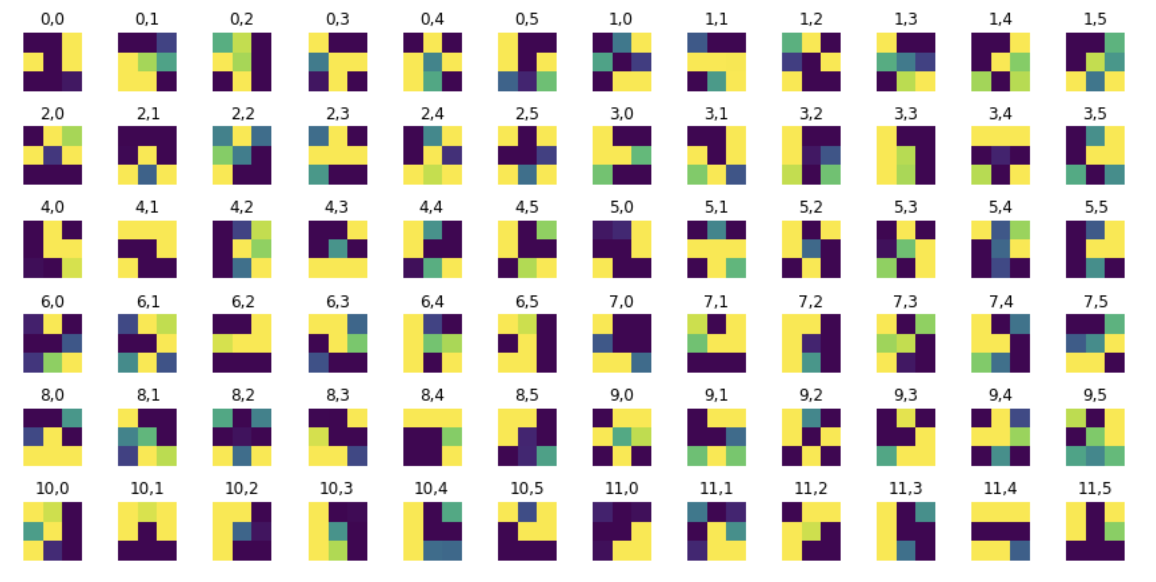

Here are the learned features:

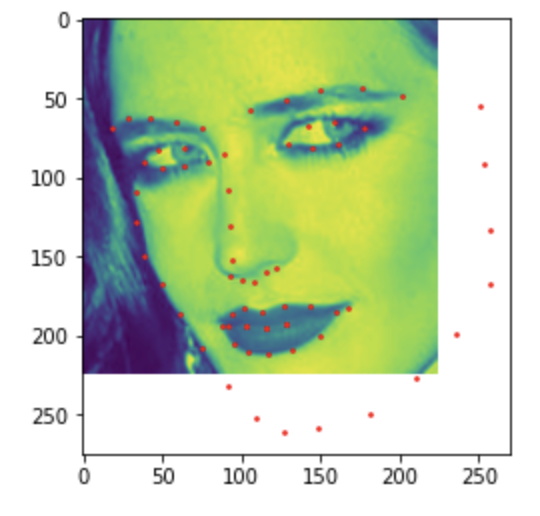
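One common way to display learned features is to plot the weights of the first convolutional layer as small images. A minimal sketch, assuming the weights are available as a NumPy array of shape (out_channels, in_channels, kh, kw), for example from a hypothetical `model.conv1.weight.detach().cpu().numpy()`; `tile_filters` is a hypothetical helper.

```python
import numpy as np


def tile_filters(weights, pad=1):
    """Arrange conv weights of shape (out_ch, in_ch, kh, kw) into one 2D grid.

    Only the first input channel of each filter is shown, and each filter is
    min-max normalized to [0, 1] on its own so its pattern is visible.
    Padding between filters is filled with 1.0 (white).
    """
    w = np.asarray(weights, dtype=np.float64)[:, 0]  # (out_ch, kh, kw)
    n, kh, kw = w.shape
    lo = w.min(axis=(1, 2), keepdims=True)
    hi = w.max(axis=(1, 2), keepdims=True)
    w = (w - lo) / np.maximum(hi - lo, 1e-12)
    cols = int(np.ceil(np.sqrt(n)))
    rows = int(np.ceil(n / cols))
    grid = np.ones((rows * (kh + pad) - pad, cols * (kw + pad) - pad))
    for i in range(n):
        r, c = divmod(i, cols)
        top, left = r * (kh + pad), c * (kw + pad)
        grid[top:top + kh, left:left + kw] = w[i]
    return grid
```

The result can be shown with `matplotlib.pyplot.imshow(grid, cmap="gray")`.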

Using the bounding boxes generated by the provided code, I cropped each image, resized the crop to 224x224, and applied the corresponding transformations to the keypoints:

However, some of the keypoints fall outside the bounding boxes. To fix this, I enlarged each bounding box to 1.2x its original size. While inspecting the data, I also found that some images had negative bounding-box values, so I filtered these out. Below are the new images:

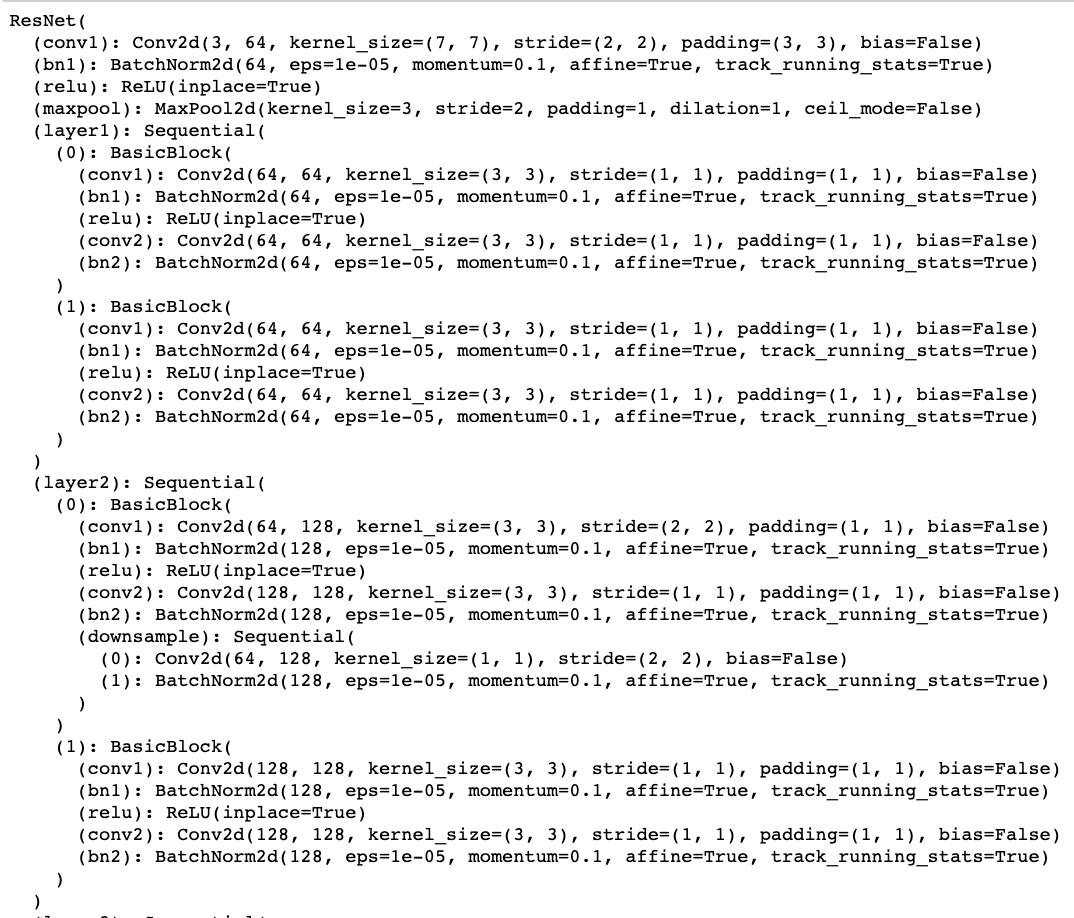

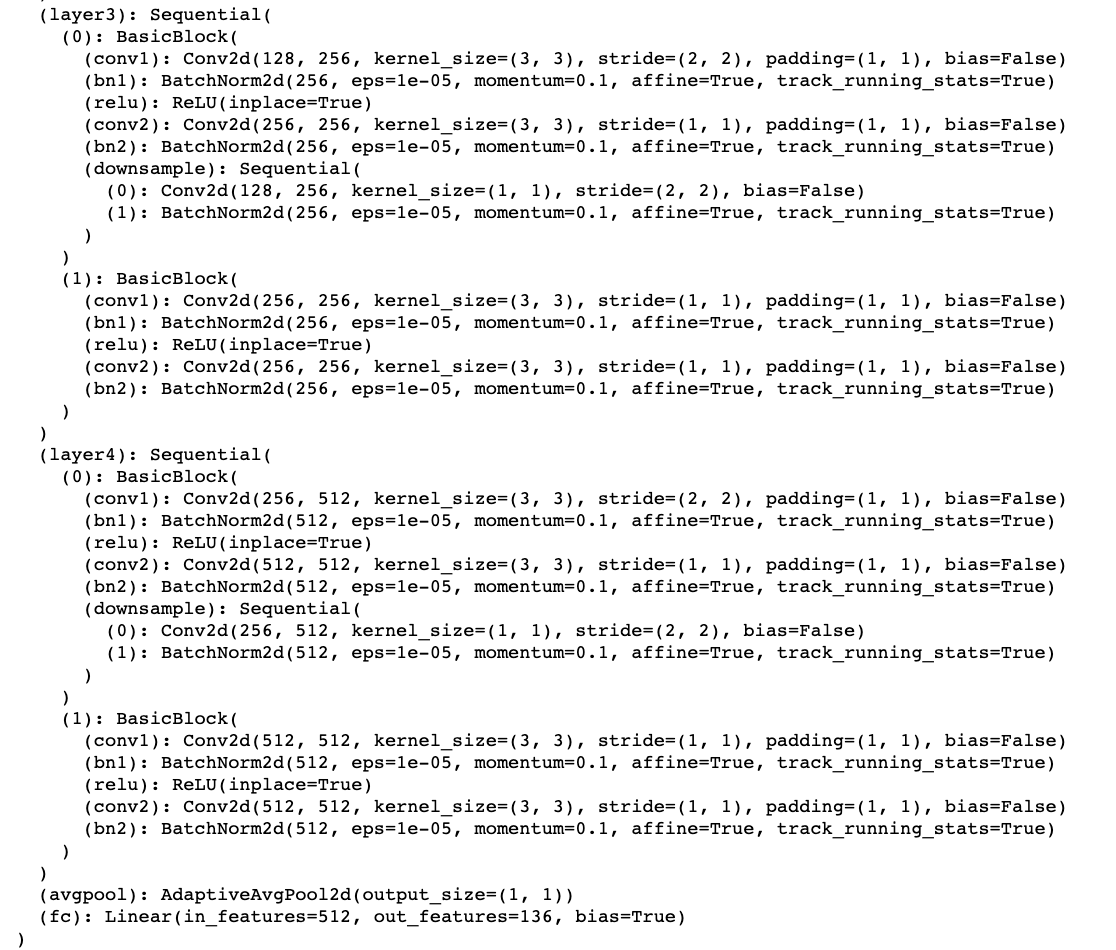
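The cropping pipeline above can be sketched as follows, assuming bounding boxes are given as (left, top, width, height) in pixels and keypoints as (x, y) pixel coordinates; `crop_and_resize` is a hypothetical helper, and nearest-neighbour resizing stands in for a library resize. It skips boxes with negative values, enlarges each box by 1.2x around its center, and maps the keypoints with the same shift and scale as the image.

```python
import numpy as np


def crop_and_resize(image, keypoints, bbox, scale=1.2, out_size=224):
    """Return (resized_crop, new_keypoints), or None if bbox has negative values.

    image: (H, W) array; keypoints: (N, 2) pixel (x, y);
    bbox: (left, top, width, height), an assumed format.
    """
    if np.any(np.asarray(bbox) < 0):
        return None  # filter out boxes with negative values
    left, top, w, h = bbox
    cx, cy = left + w / 2.0, top + h / 2.0
    w, h = w * scale, h * scale  # enlarge around the box center
    H, W = image.shape
    # Clip the enlarged box to the image borders
    x0 = int(max(0, round(cx - w / 2)))
    y0 = int(max(0, round(cy - h / 2)))
    x1 = int(min(W, round(cx + w / 2)))
    y1 = int(min(H, round(cy + h / 2)))
    crop = image[y0:y1, x0:x1]
    ch, cw = crop.shape
    # Nearest-neighbour resize; x and y are scaled separately, so a
    # non-square crop is stretched to out_size x out_size
    rows = (np.arange(out_size) * ch / out_size).astype(int)
    cols = (np.arange(out_size) * cw / out_size).astype(int)
    resized = crop[rows[:, None], cols[None, :]]
    # Same transform for keypoints: move to the crop origin, then scale
    new_kps = (keypoints - np.array([x0, y0])) * np.array([out_size / cw, out_size / ch])
    return resized, new_kps
```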

For this part, I used ResNet18. I changed the final layer's out_features to 68*2 = 136 so the output matches our data (an x and a y coordinate for each of the 68 keypoints), and I stacked each grayscale image three times to produce a three-channel input. Here is the detailed architecture:

At this point, I was able to train the model, but I ran into many issues: running out of RAM, Google Colab crashing, and losing my data.

My Kaggle submission is under the username angiezx, but it performed very poorly, so I am not sure whether the results are formatted correctly or contain all of the data.