This project focuses on using convolutional neural networks to detect facial keypoints in images of faces.

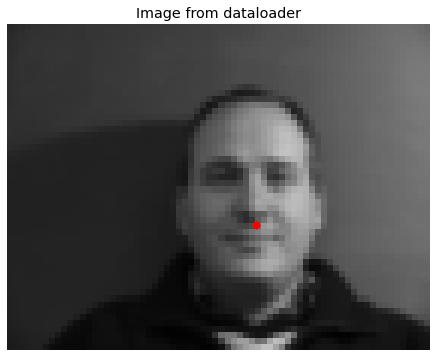

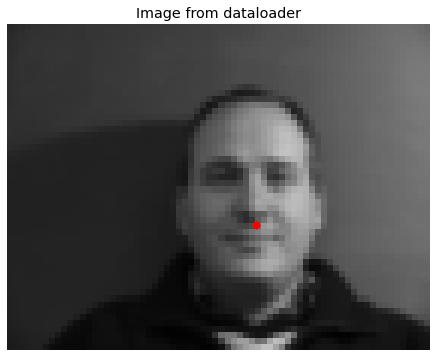

This section splits the Danes dataset, along with its nose keypoint annotations, into training and test sets and trains a model to predict the nose keypoint on the test images. I implemented a Dataloader class that loads each image together with its nose keypoint. Here are some images plotted with their ground-truth nose keypoints.
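A minimal sketch of such a dataloader, assuming the images are already loaded as grayscale NumPy arrays and each face's keypoints as a (58, 2) array of pixel (x, y) coordinates. The class name `NoseKeypointDataset`, the `nose_index` parameter, and the normalization choices (pixels shifted to [-0.5, 0.5], keypoints divided by image width and height) are illustrative assumptions, not necessarily the code used in this project.

```python
import numpy as np


class NoseKeypointDataset:
    """Hypothetical map-style dataset returning (image, nose keypoint) pairs."""

    def __init__(self, images, keypoints, nose_index):
        self.images = images          # list of HxW uint8 grayscale arrays
        self.keypoints = keypoints    # list of (58, 2) arrays, pixel (x, y)
        self.nose_index = nose_index  # assumed index of the nose tip point

    def __len__(self):
        return len(self.images)

    def __getitem__(self, idx):
        # Scale pixels to [0, 1], then shift to [-0.5, 0.5].
        img = self.images[idx].astype(np.float32) / 255.0 - 0.5
        h, w = img.shape[:2]
        x, y = self.keypoints[idx][self.nose_index]
        # Express the keypoint relative to image size so it lies in [0, 1].
        nose = np.array([x / w, y / h], dtype=np.float32)
        return img, nose
```

Because it implements `__len__` and `__getitem__`, an object like this could be passed to PyTorch's `DataLoader`, whose default collate function turns the NumPy arrays into batched tensors.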

Then, I created a convolutional neural network with 3 convolutional layers with 12, 24, and 32 output channels and kernel sizes of 7x7, 5x5, and 3x3, respectively. I used mean squared error (MSE) as the prediction loss and trained the network on the training set (the first 32 people) for 25 epochs.
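The person-based split and the loss can be sketched as follows. This assumes each image carries a 1-indexed person ID (the `person_ids` argument and the `split_by_person` helper are hypothetical), so that all images of a given person land on the same side of the split.

```python
import numpy as np


def split_by_person(person_ids, n_train_people=32):
    """Return (train_idx, test_idx); people 1..n_train_people go to training."""
    person_ids = np.asarray(person_ids)
    train_mask = person_ids <= n_train_people
    return np.flatnonzero(train_mask), np.flatnonzero(~train_mask)


def mse_loss(pred, target):
    """Mean squared error over every predicted coordinate."""
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)
    return float(np.mean((pred - target) ** 2))
```

In PyTorch, `torch.nn.MSELoss` with its default `reduction='mean'` computes the same quantity on tensors.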

For the images where the nose is detected incorrectly, I believe the errors occur because the training set contains relatively few faces that are turned at an angle or have unusual expressions, so these images are effectively outliers. Comparing the two men whose heads are turned at a three-quarter angle, I think the error occurs because the second man has a more prominent nasolabial fold, which may influence the prediction and cause the network to mistake it for the tip of the nose.
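One way to find the failure cases systematically, rather than by eye, is to rank test images by the distance between predicted and ground-truth nose positions. A minimal sketch, assuming predictions and labels are stacked as (N, 2) arrays in the same coordinate units; `rank_by_error` is a hypothetical helper.

```python
import numpy as np


def rank_by_error(pred, gt):
    """Return image indices sorted worst-first, plus per-image Euclidean errors."""
    err = np.linalg.norm(np.asarray(pred, dtype=float) - np.asarray(gt, dtype=float), axis=1)
    order = np.argsort(err)[::-1]  # largest error first
    return order, err
```

The first few indices in `order` are the images worth plotting when looking for patterns such as head pose or facial folds.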

I also tested changing my hyperparameters (learning rate and filter size), and the results were actually better...
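A small grid search is one way to organize this kind of experiment. In the sketch below, `train_and_validate` is a hypothetical callback that trains a model with the given learning rate and kernel size and returns its validation loss; the toy objective in the usage line only stands in for a real training run.

```python
import itertools


def grid_search(train_and_validate, learning_rates, kernel_sizes):
    """Evaluate every (learning rate, kernel size) pair; return the best and all results."""
    results = {}
    for lr, k in itertools.product(learning_rates, kernel_sizes):
        results[(lr, k)] = train_and_validate(lr, k)
    best = min(results, key=results.get)  # lowest validation loss wins
    return best, results


# Usage with a toy objective in place of real training:
best, results = grid_search(lambda lr, k: abs(lr - 1e-3) * 1000 + abs(k - 5),
                            [1e-2, 1e-3, 1e-4], [3, 5, 7])
```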

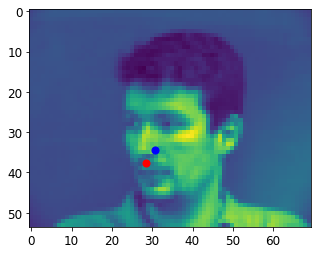

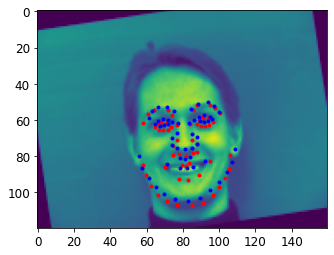

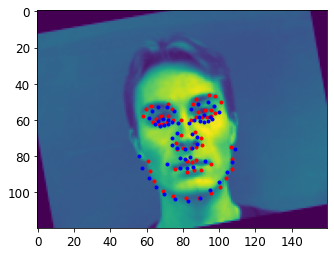

In this section, I expanded upon part 1 to train a model that predicts all 58 facial keypoints on a face. I rewrote my Dataloader to load all of the keypoints for each face and plot them. To augment the data, I randomly rotated each face together with its keypoints and added the rotated copies to the training set.
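The key requirement of rotation augmentation is that the image and its keypoints are transformed by the same rotation. A minimal NumPy-only sketch, assuming keypoints in pixel (x, y) coordinates and rotation about the image center; nearest-neighbor sampling is used for simplicity, and the function names and the ±15° range are assumptions rather than this project's settings.

```python
import numpy as np


def rotate_face(image, keypoints, angle_deg):
    """Rotate an image and its (K, 2) pixel keypoints by the same angle about the center."""
    h, w = image.shape[:2]
    center = np.array([(w - 1) / 2.0, (h - 1) / 2.0])
    t = np.deg2rad(angle_deg)
    rot = np.array([[np.cos(t), -np.sin(t)], [np.sin(t), np.cos(t)]])

    # Forward map for keypoints: p' = R (p - c) + c (row vectors, so multiply by R^T).
    new_kps = (np.asarray(keypoints, dtype=float) - center) @ rot.T + center

    # Inverse map for pixels: each output pixel samples the input at R^T (p' - c) + c.
    ys, xs = np.mgrid[0:h, 0:w]
    dst = np.stack([xs.ravel(), ys.ravel()], axis=1) - center
    src = dst @ rot + center
    src_x = np.rint(src[:, 0]).astype(int)
    src_y = np.rint(src[:, 1]).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)

    out = np.zeros_like(image)  # pixels rotated in from outside stay black
    out[ys.ravel()[valid], xs.ravel()[valid]] = image[src_y[valid], src_x[valid]]
    return out, new_kps


def random_rotation(image, keypoints, rng, max_deg=15.0):
    """Apply a random rotation in [-max_deg, max_deg] degrees (assumed range)."""
    return rotate_face(image, keypoints, rng.uniform(-max_deg, max_deg))
```

Rotated keypoints can fall outside the frame for large angles, which is one reason to keep the angle range small.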

For the images where the full set of keypoints is predicted incorrectly, the cause may be lighting: these faces are darker and less evenly lit than the successful matches. It might also be an error on my part, since the predicted points all appear to be the same, which might mean that I did not plot the output points correctly.
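Two quick checks can help separate a plotting bug from a model that predicts nearly the same shape for every face. The sketch below assumes predictions and labels are stacked as (N, K, 2) arrays and that the network outputs coordinates relative to image size; both helper names are hypothetical. A spread ratio near 0 means the predictions barely vary across images (for example, collapsing toward the mean face), while a ratio near 1 suggests the model does vary and the problem is more likely in how the points are scaled before plotting.

```python
import numpy as np


def spread_ratio(pred, gt):
    """Mean per-keypoint std across images, predictions divided by ground truth."""
    pred_std = np.asarray(pred, dtype=float).std(axis=0).mean()
    gt_std = np.asarray(gt, dtype=float).std(axis=0).mean()
    return float(pred_std / gt_std)


def to_pixels(kps_rel, width, height):
    """Convert relative (x, y) keypoints in [0, 1] back to pixel coordinates."""
    return np.asarray(kps_rel, dtype=float) * np.array([width, height])
```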

For the network architecture, I used 5 convolutional layers with 12, 16, 20, 24, and 28 output channels and kernel sizes decreasing from 7x7 to 3x3 (7x7, 6x6, 5x5, 4x4, and 3x3). I used ReLU for the nonlinearity, along with max-pooling layers.
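With five convolutions and max pooling, it is easy for the feature maps to shrink too far, so it helps to walk through the shapes layer by layer. The sketch below assumes a 120x160 grayscale input, stride-1 convolutions without padding, and a 2x2 max pool after every convolution; none of these settings are stated above, and `conv_stack_summary` is a hypothetical helper. It also counts each convolution's weights and biases (k·k·C_in·C_out + C_out).

```python
def conv_stack_summary(in_hw, in_channels, channels, kernels, pool=2):
    """Return (C_out, k, H, W, params) after each conv + max-pool stage."""
    h, w = in_hw
    c_in = in_channels
    rows = []
    for c_out, k in zip(channels, kernels):
        h, w = h - k + 1, w - k + 1        # 'valid' convolution, stride 1
        h, w = h // pool, w // pool        # max pool, rounding down
        if h <= 0 or w <= 0:
            raise ValueError(f"feature map vanished at kernel {k}x{k}")
        params = k * k * c_in * c_out + c_out  # weights + biases
        rows.append((c_out, k, h, w, params))
        c_in = c_out
    return rows


rows = conv_stack_summary((120, 160), 1, [12, 16, 20, 24, 28], [7, 6, 5, 4, 3])
for c_out, k, h, w, params in rows:
    print(f"{k}x{k} conv -> {c_out} x {h} x {w}, {params} params")
```

Under these assumptions the last stage is only 1x2 spatially, so an input this small would leave little room for a sixth pooling layer. In PyTorch, `nn.Conv2d` defaults to stride 1 and no padding and `nn.MaxPool2d` rounds down by default, which matches the arithmetic here.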