Part 1. Nose Tip Detection

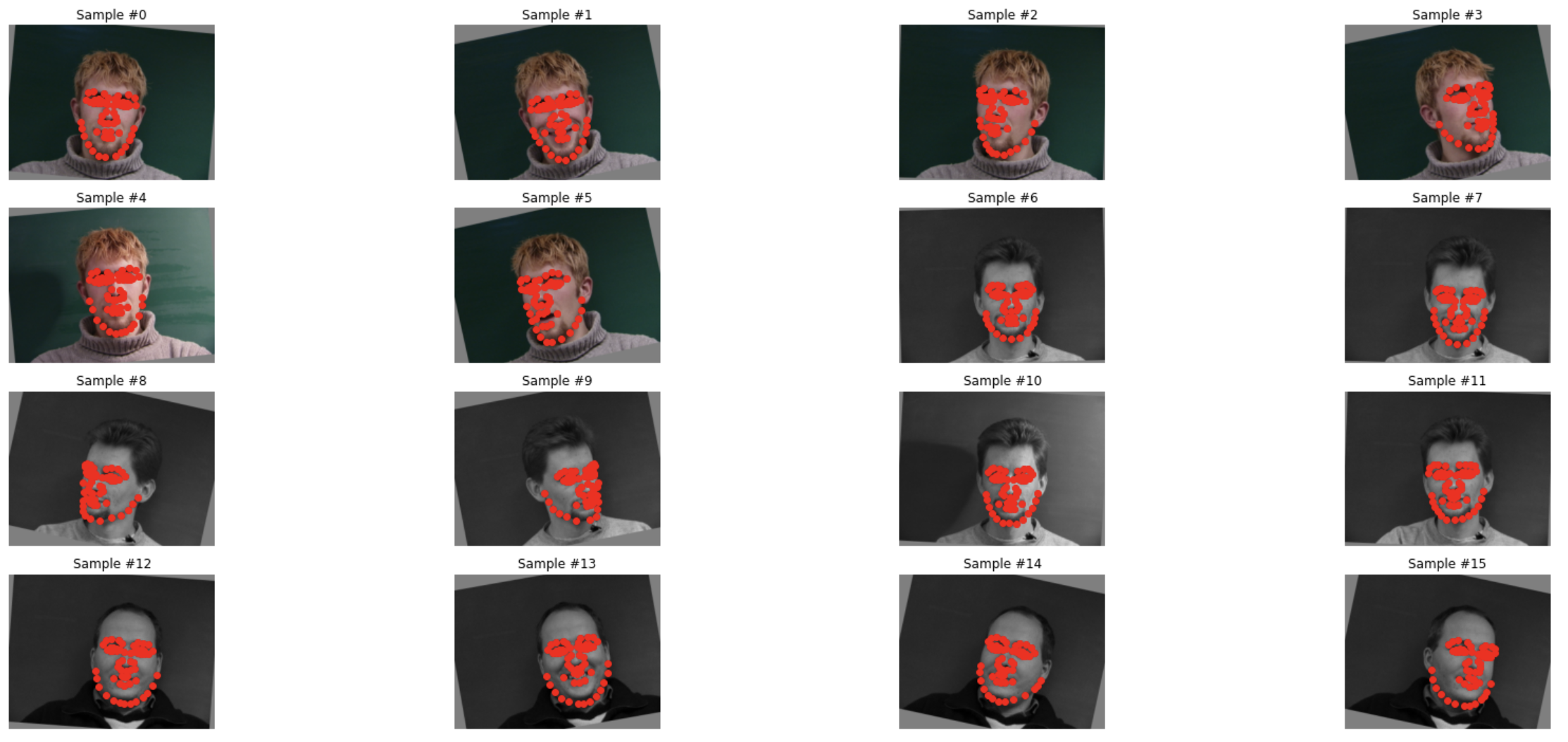

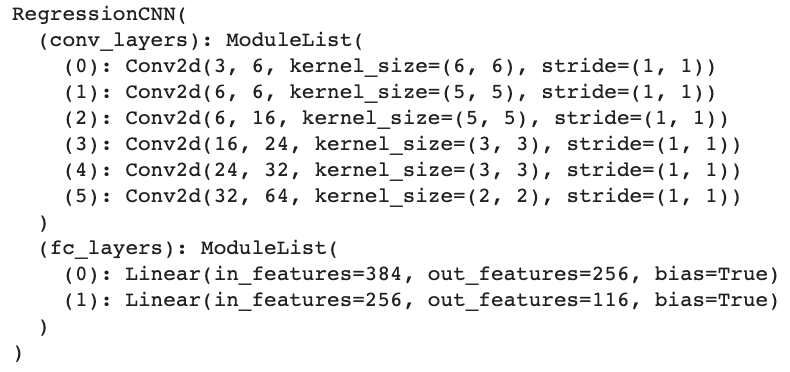

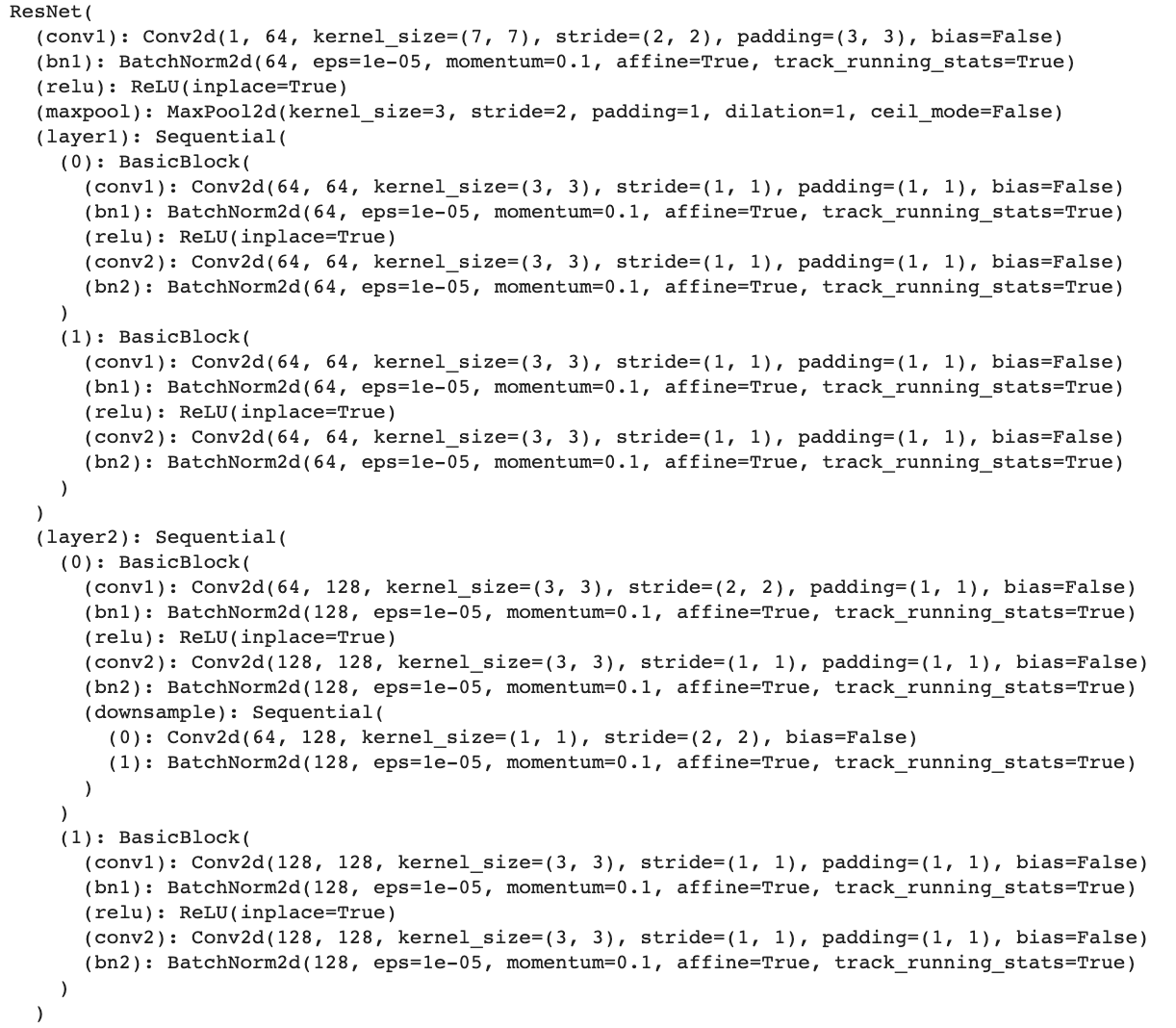

In this part, I wrote much of the code that I reused for the rest of the project: a dataset class that parses the ASF files to collect the keypoints (with an option to keep either only the nose tip or all of the facial keypoints as the target), a RegressionCNN module, and a general training loop that works with different models and dataloaders.
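As a rough illustration of the ASF parsing step, here is a minimal stdlib sketch, not the code used in this project. It assumes the IMM-style ASF layout: `#` comment lines, then the number of points, then one row per point (`path type x_rel y_rel point# from to ...`, with coordinates relative to image size), then the host image filename. `parse_asf`, `KeypointDataset`, and `NOSE_INDEX = 52` are hypothetical names and an assumed nose-tip index. The dataset returns the image filename instead of loading pixels, to keep the sketch self-contained.

```python
from typing import List, Tuple

# Assumption: 0-indexed position of the nose tip in the 58-point annotation.
NOSE_INDEX = 52

Point = Tuple[float, float]


def parse_asf(text: str) -> Tuple[str, List[Point]]:
    """Parse an IMM-style .asf annotation into (image_name, [(x_rel, y_rel), ...])."""
    lines = [ln.strip() for ln in text.splitlines()]
    # Drop blank and '#' comment lines; what remains is: count, point rows, image name.
    content = [ln for ln in lines if ln and not ln.startswith("#")]
    n_points = int(content[0])
    points = []
    for row in content[1:1 + n_points]:
        fields = row.split()
        # fields[2] and fields[3] hold x and y as fractions of image width/height.
        points.append((float(fields[2]), float(fields[3])))
    image_name = content[1 + n_points]
    return image_name, points


class KeypointDataset:
    """Map-style dataset (same __len__/__getitem__ protocol a PyTorch Dataset uses)."""

    def __init__(self, asf_texts: List[str], nose_only: bool = False):
        self.samples = [parse_asf(t) for t in asf_texts]
        self.nose_only = nose_only

    def __len__(self) -> int:
        return len(self.samples)

    def __getitem__(self, idx: int) -> Tuple[str, List[Point]]:
        image_name, points = self.samples[idx]
        # Keep only the nose tip, or every facial keypoint, as the regression target.
        target = [points[NOSE_INDEX]] if self.nose_only else points
        return image_name, target
```

A real version would also load and preprocess the image (for example, grayscale conversion and resizing) and return tensors; the `nose_only` flag is what lets one class serve both the nose-tip task and the full-face task.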

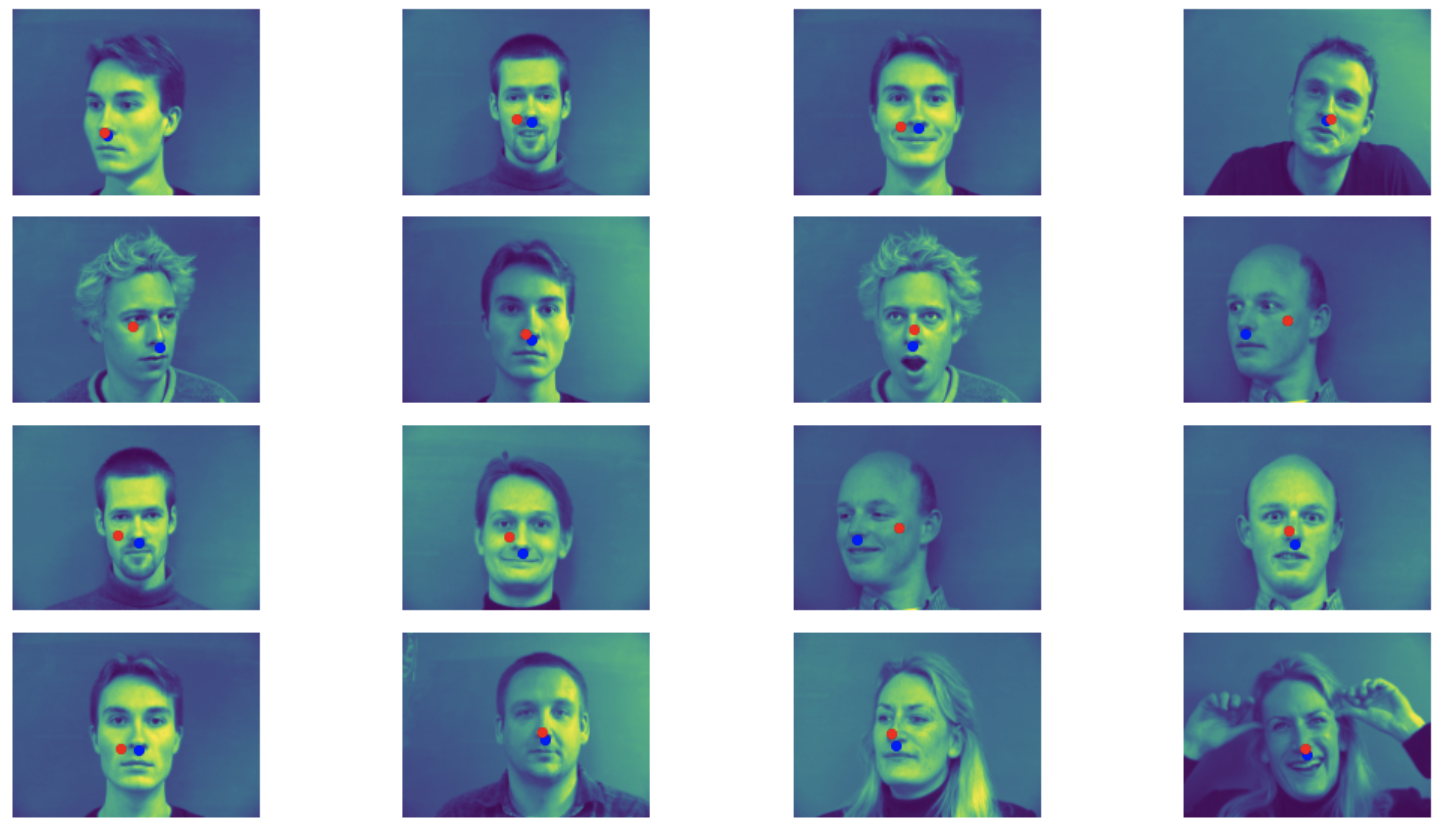

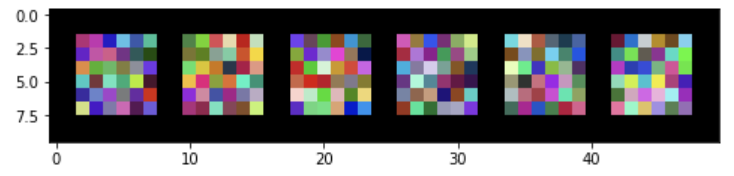

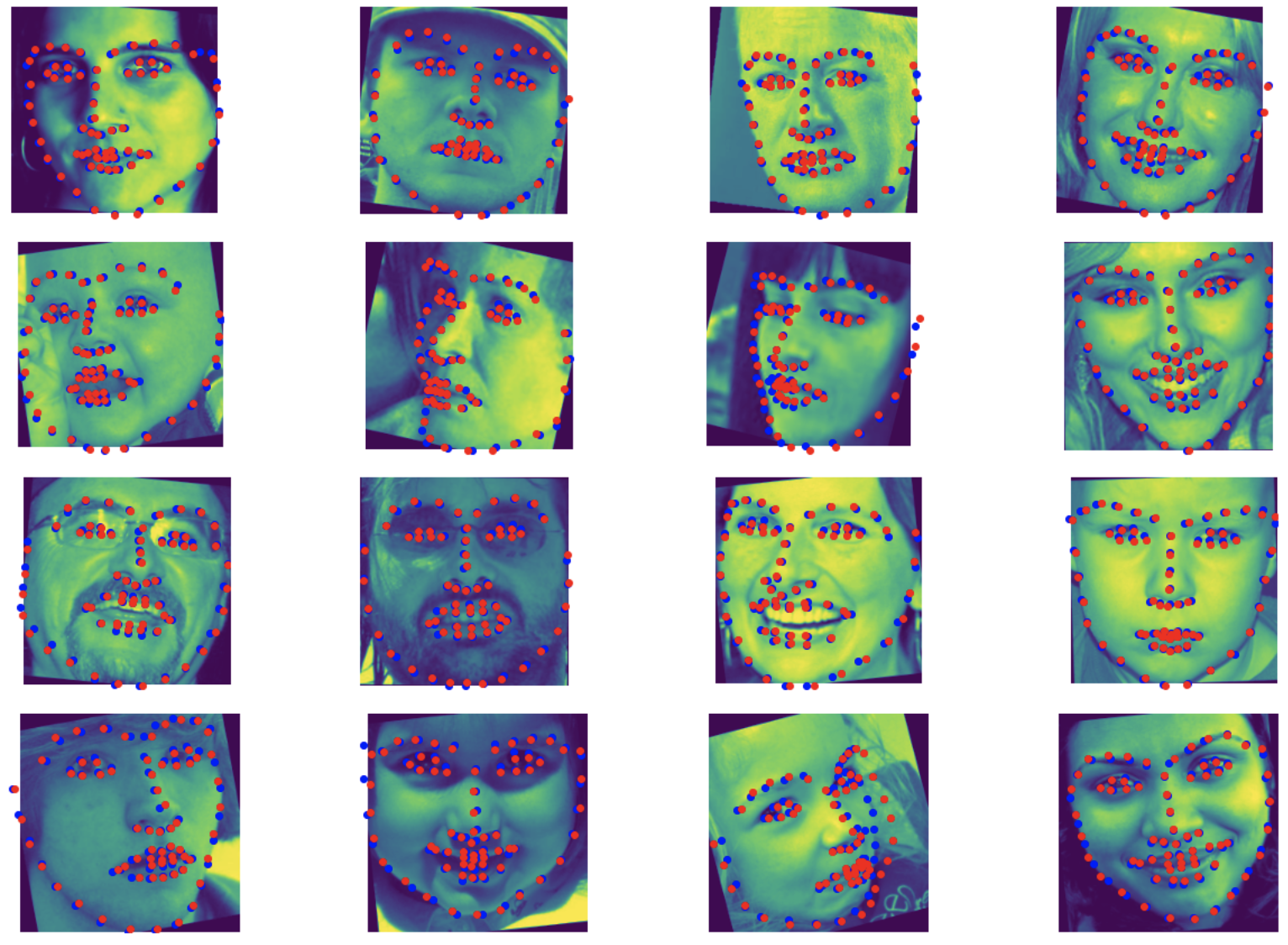

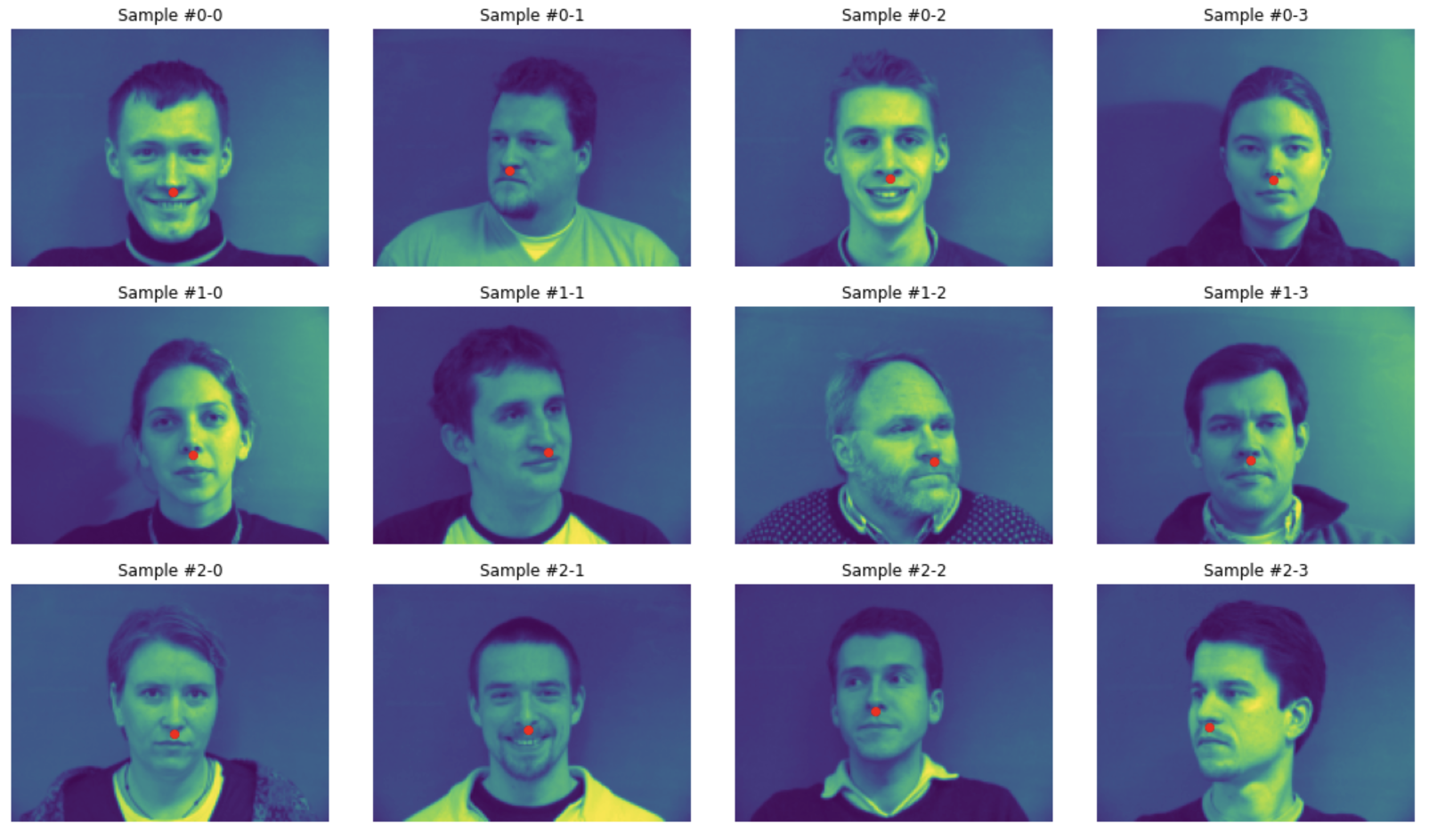

As a sanity check, I verified that the dataloader stored the keypoints correctly.
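Plotting samples is the main check, but a programmatic check can complement it. The sketch below is hypothetical (`to_pixels` and `check_keypoints` are illustrative names) and assumes keypoints are stored as fractions of image width and height, as in the ASF files; it converts them to pixel coordinates and flags a wrong point count or any point that falls outside the image.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def to_pixels(points: List[Point], width: int, height: int) -> List[Point]:
    """Convert relative [0, 1] keypoints to pixel coordinates."""
    return [(x * width, y * height) for x, y in points]


def check_keypoints(points: List[Point], width: int, height: int, expected_count: int) -> List[str]:
    """Return a list of problems found; an empty list means the sample looks sane."""
    problems = []
    if len(points) != expected_count:
        problems.append(f"expected {expected_count} points, got {len(points)}")
    for i, (x, y) in enumerate(to_pixels(points, width, height)):
        # Every keypoint should land inside the image bounds.
        if not (0 <= x < width and 0 <= y < height):
            problems.append(f"point {i} out of bounds: ({x:.1f}, {y:.1f})")
    return problems


# Example: a single nose-tip target at the image center passes the check.
print(check_keypoints([(0.5, 0.5)], 640, 480, expected_count=1))
```

Running this over every batch catches coordinate mix-ups (such as swapped x/y or forgetting to rescale after resizing) that are easy to miss by eye on a few plotted samples.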

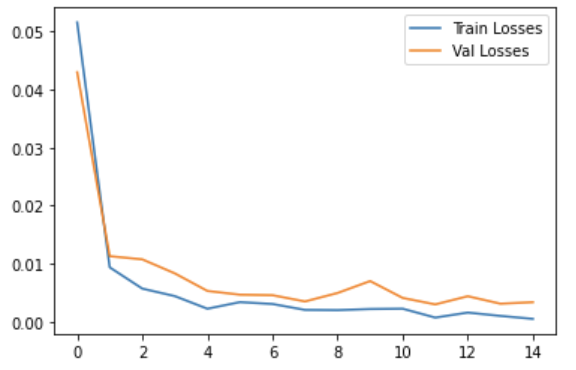

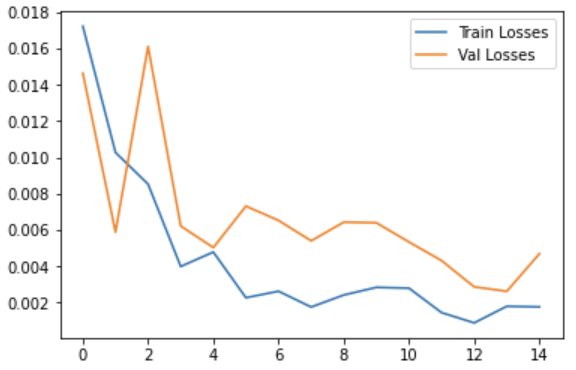

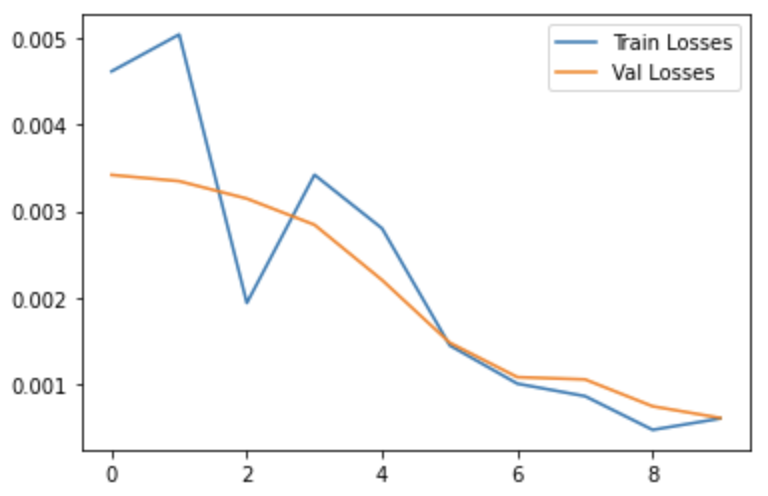

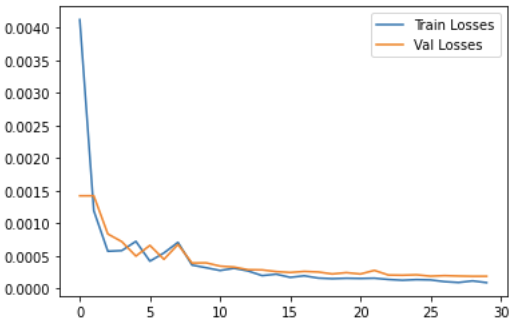

I then trained two models with learning rates of $10^{-3}$ and $5 \times 10^{-4}$, respectively. I also varied the size of the hidden fully connected layer: 256 for the first model and 64 for the second. The training graphs for each model are shown below.
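Comparing configurations like this is easiest when one model-agnostic training loop is reused for every run. The framework-free sketch below shows that pattern under stated assumptions: `train`, `Config`, and the toy linear model are hypothetical stand-ins for the project's PyTorch model, optimizer, and dataloaders. The hidden layer size would live in the model constructor, so it is not modeled here; only the learning rate is swept.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Batch = Tuple[List[float], List[float]]


@dataclass
class Config:
    name: str
    lr: float
    epochs: int = 50


def train(params: List[float],
          loss_fn: Callable[[List[float], List[float], List[float]], float],
          grad_fn: Callable[[List[float], List[float], List[float]], List[float]],
          batches: Sequence[Batch],
          lr: float,
          epochs: int) -> Tuple[List[float], List[float]]:
    """Plain SGD loop that works for any (params, loss_fn, grad_fn) triple."""
    history = []
    for _ in range(epochs):
        for xs, ys in batches:
            grads = grad_fn(params, xs, ys)
            params = [p - lr * g for p, g in zip(params, grads)]
        # Record the mean batch loss at the end of each epoch (for the training curve).
        history.append(sum(loss_fn(params, xs, ys) for xs, ys in batches) / len(batches))
    return params, history


# Toy model y = w * x with MSE loss, standing in for the CNN.
def mse_loss(params, xs, ys):
    (w,) = params
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def mse_grad(params, xs, ys):
    (w,) = params
    return [sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)]


xs = [i / 10 for i in range(1, 11)]
ys = [2.0 * x for x in xs]
batches = [(xs[i:i + 5], ys[i:i + 5]) for i in range(0, len(xs), 5)]

results = {}
for cfg in [Config("lr=1e-3", 1e-3), Config("lr=5e-4", 5e-4)]:
    _, results[cfg.name] = train([0.0], mse_loss, mse_grad, batches, cfg.lr, cfg.epochs)
```

Because the loop only depends on the loss and gradient functions, swapping in a different model or dataloader does not require touching the loop itself; each configuration just produces its own loss history to plot.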