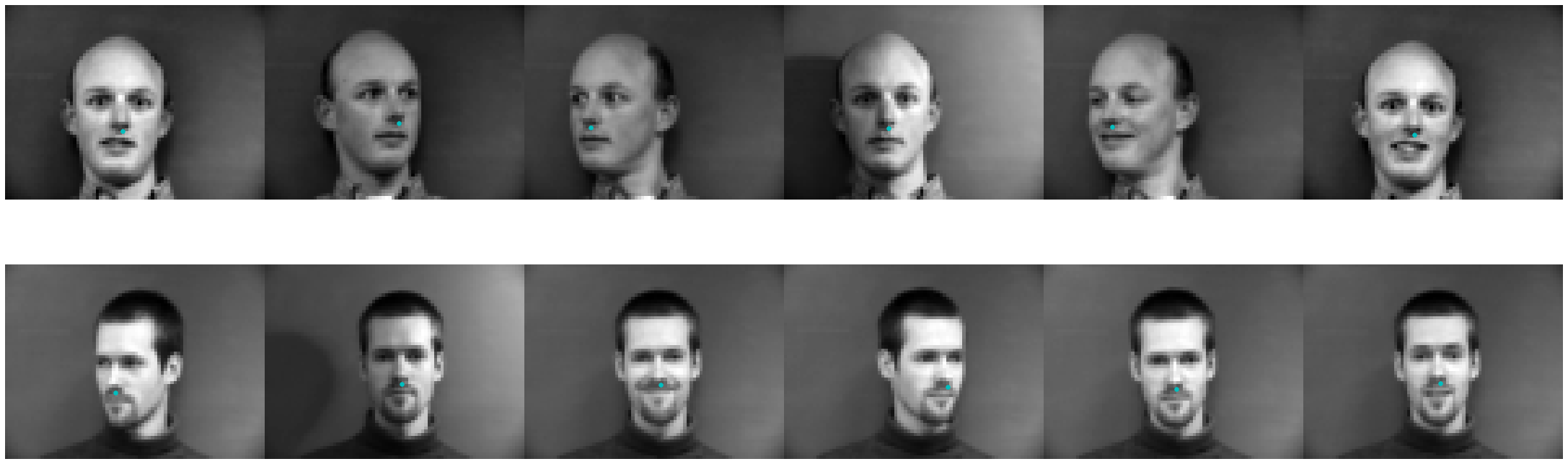

Here are two people with all six orientations as samples from the DataLoader, alongside Ground Truth Nose Keypoint:

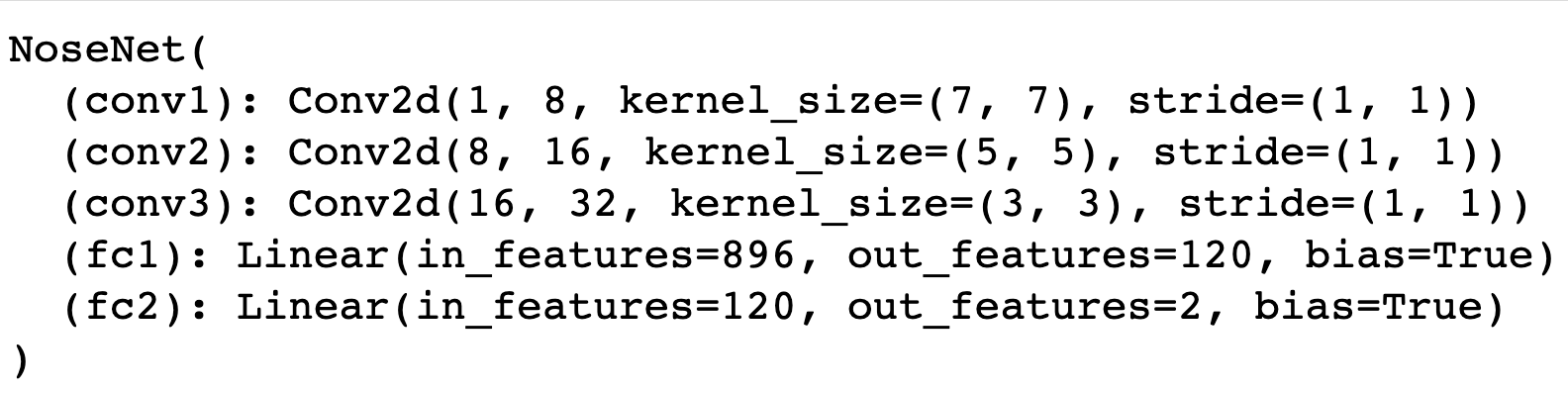

I chose the following architecture for my Nose Net: 3 Convolutional layers, each accompanied by MaxPool2D and ReLU, followed by 2 FC Layers:
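As a rough illustration of this kind of network, here is a minimal sketch assuming PyTorch. The grayscale 60x80 input, the channel counts, the kernel sizes, and the hidden FC width are all placeholder assumptions; the actual values are the ones in the architecture figures, not these.

```python
import torch
import torch.nn as nn


class NoseNet(nn.Module):
    """Three conv blocks (Conv2d -> ReLU -> MaxPool2d) followed by two FC layers."""

    def __init__(self, in_size=(60, 80)):  # (height, width): assumed, not from the write-up
        super().__init__()
        # Channel counts and kernel sizes are illustrative placeholders.
        self.features = nn.Sequential(
            nn.Conv2d(1, 12, kernel_size=5), nn.ReLU(), nn.MaxPool2d(2),
            nn.Conv2d(12, 24, kernel_size=3), nn.ReLU(), nn.MaxPool2d(2),
            nn.Conv2d(24, 32, kernel_size=3), nn.ReLU(), nn.MaxPool2d(2),
        )
        # Push a dummy image through the conv stack to size the first FC layer.
        with torch.no_grad():
            n_flat = self.features(torch.zeros(1, 1, *in_size)).numel()
        self.regressor = nn.Sequential(
            nn.Linear(n_flat, 128), nn.ReLU(),
            nn.Linear(128, 2),  # one (x, y) pair for the nose keypoint
        )

    def forward(self, x):
        return self.regressor(torch.flatten(self.features(x), 1))


model = NoseNet()
out = model(torch.zeros(8, 1, 60, 80))  # a batch of 8 grayscale images
print(out.shape)  # torch.Size([8, 2])
```

Sizing the first FC layer from a dummy forward pass avoids hard-coding the flattened dimension, so the sketch keeps working if the input resolution changes.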

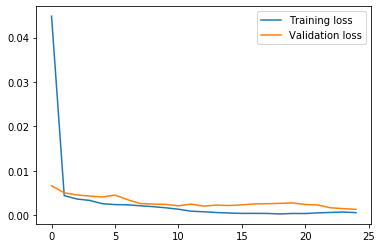

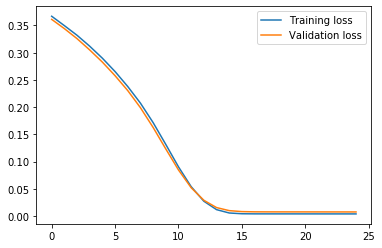

I trained the model for 25 epochs with a batch size of 8, using the Adam optimizer with a learning rate of 0.001. The final epoch's training loss was 0.00021, and its validation loss was 0.0016. Below is my loss graph over the 25 epochs:
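A training setup with these hyperparameters could look roughly like the sketch below, assuming PyTorch. The MSE loss, the random stand-in data, and the tiny placeholder model are assumptions made only so the snippet runs on its own; just the epoch count, batch size, optimizer, and learning rate come from the text.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Stand-in data: 32 random 60x80 grayscale images with (x, y) targets in [0, 1].
images = torch.rand(32, 1, 60, 80)
targets = torch.rand(32, 2)
train_loader = DataLoader(TensorDataset(images[:24], targets[:24]), batch_size=8, shuffle=True)
val_loader = DataLoader(TensorDataset(images[24:], targets[24:]), batch_size=8)

# Placeholder model; the real network would be substituted here.
model = nn.Sequential(nn.Flatten(), nn.Linear(60 * 80, 2))
criterion = nn.MSELoss()  # assumed loss; the write-up does not name one
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)


def run_epoch(loader, train):
    """Return the mean per-sample loss over one pass; update weights only if train is True."""
    model.train(train)
    total, count = 0.0, 0
    with torch.set_grad_enabled(train):
        for x, y in loader:
            loss = criterion(model(x), y)
            if train:
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()
            total += loss.item() * x.size(0)
            count += x.size(0)
    return total / count


history = []  # one (train_loss, val_loss) pair per epoch, ready for plotting
for epoch in range(25):
    train_loss = run_epoch(train_loader, train=True)
    val_loss = run_epoch(val_loader, train=False)
    history.append((train_loss, val_loss))
```

Recording both losses once per epoch yields the two curves that a loss graph of this kind plots.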

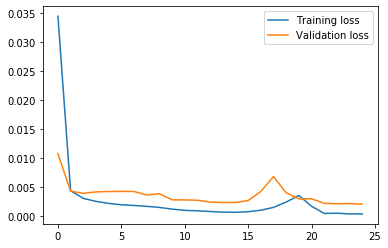

I also tried two changes to the setup. First, I lowered the learning rate to 1e-5, which resulted in the training/validation loss converging around 0.004. Second, I removed the 3rd Conv Layer and got similar results, with the loss again converging around 0.004. The two scenarios are shown below, in that order:

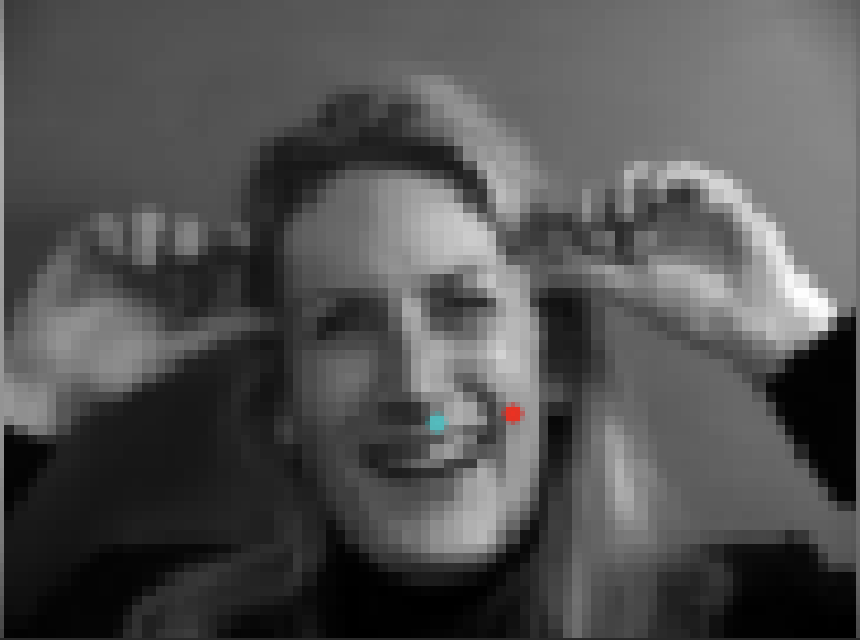

For the failure/success cases, red signifies the predicted nose keypoint, and cyan signifies the ground truth.

Here are two success cases for the Nose Net:

Here are two failure cases for the Nose Net:

These cases fail because of the irregular contour, the nose shape, and the direction in which the person is facing.

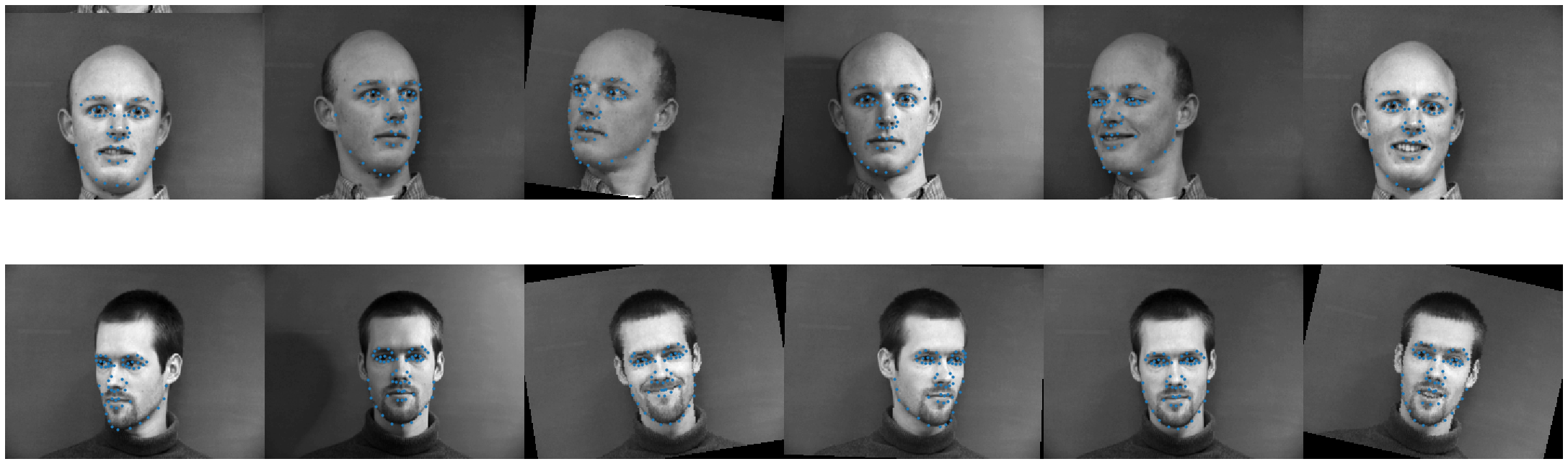

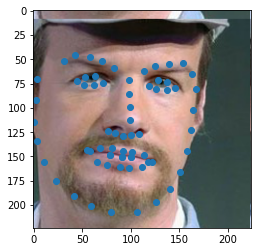

Here are two people with all six orientations as samples from the DataLoader, alongside Ground Truth Face Keypoints:

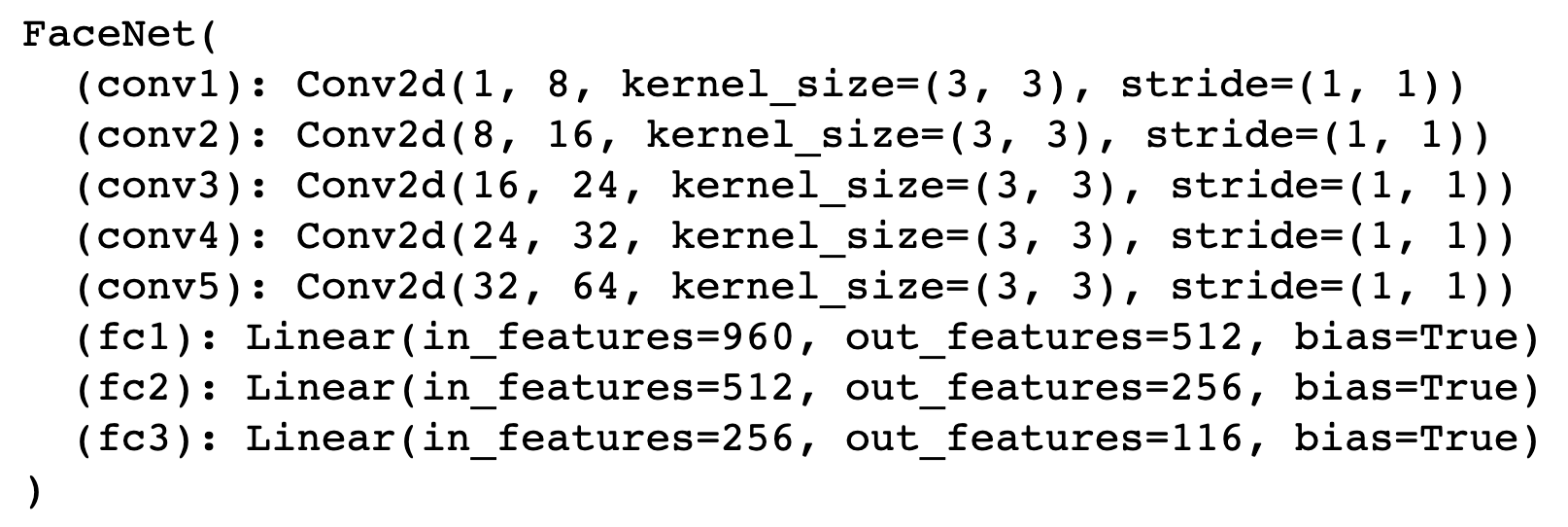

I chose the following architecture for my Face Net: 5 Convolutional layers, each accompanied by MaxPool2D and ReLU, followed by 2 FC Layers:
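With five pooling stages the feature map shrinks quickly, so it helps to check the spatial size that reaches the first FC layer. The helper below is a small sketch of that arithmetic; the 180x240 input, the 3x3 convolutions with padding 1, and the 2x2 pooling are assumptions for illustration, not values taken from the architecture figure.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length of a convolution or pooling window along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def feature_map_size(height, width, n_blocks, conv_kernel=3, conv_padding=1, pool=2):
    """Spatial size after n_blocks of Conv2d -> ReLU -> MaxPool2d."""
    for _ in range(n_blocks):
        height = conv_out(conv_out(height, conv_kernel, padding=conv_padding), pool, stride=pool)
        width = conv_out(conv_out(width, conv_kernel, padding=conv_padding), pool, stride=pool)
    return height, width


h, w = feature_map_size(180, 240, n_blocks=5)
print(h, w)  # 5 7
```

Under these assumptions, a final conv layer with C output channels would feed C * 5 * 7 values into the first FC layer.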

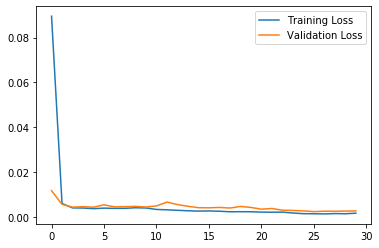

I trained the model for 30 epochs with a batch size of 8, using the Adam optimizer with a learning rate of 0.001. The final epoch's training loss was 0.0015, and its validation loss was 0.0025. Below is my loss graph over the 30 epochs:

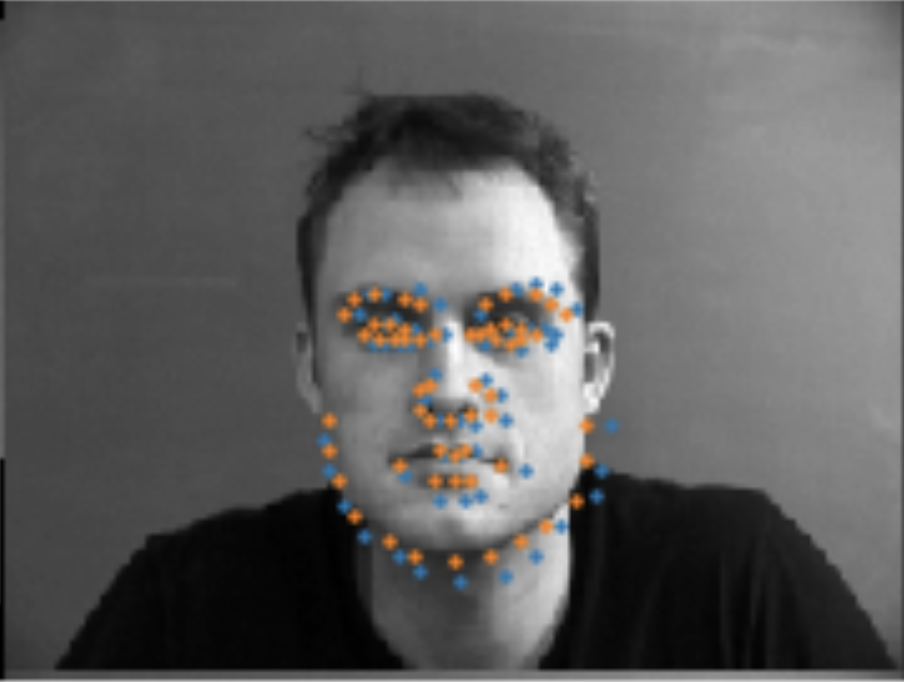

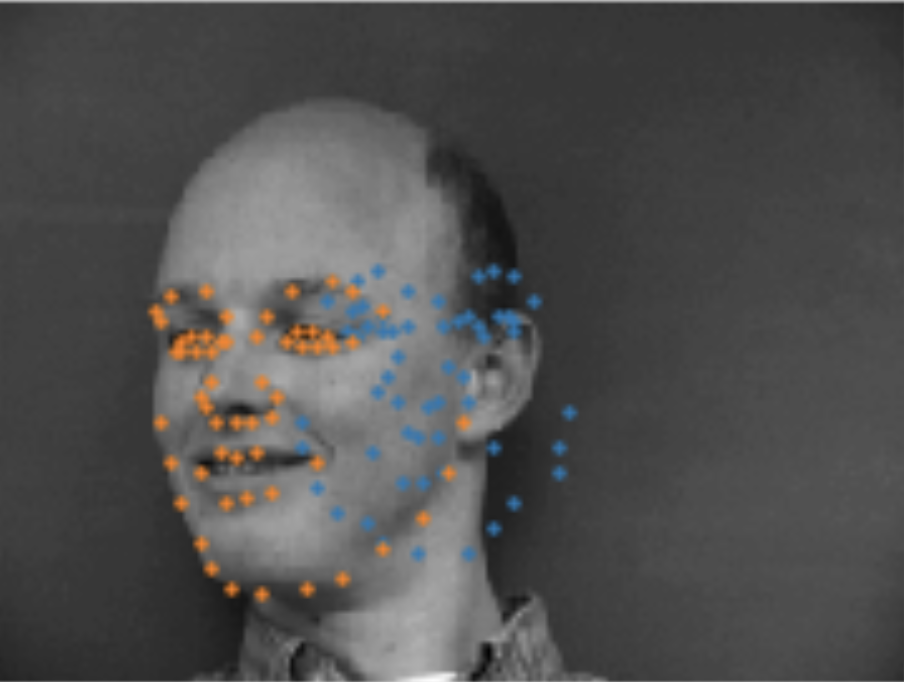

For the failure/success cases, blue signifies the predicted face keypoints, and orange signifies the ground truth.

Here are two success cases for the Face Net:

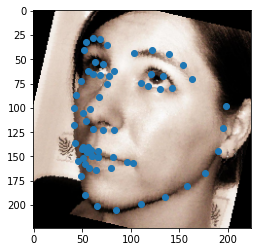

Here are two failure cases for the Face Net:

These cases fail because of the irregular contour, the nose shape, and the direction in which the person is facing.

Here are the learned features visualized from the first Conv. Layer (size=8):
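One way to produce a visualization like this is to read the weights of the first convolutional layer and rescale each filter for display. The sketch below assumes PyTorch, reads "size=8" as 8 output filters, and uses a freshly initialized 5x5 layer as a stand-in for the trained one; all three are assumptions.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
conv1 = nn.Conv2d(1, 8, kernel_size=5)  # stand-in for the trained first layer

# Conv2d weights have shape (out_channels, in_channels, kernel_h, kernel_w).
filters = conv1.weight.detach().clone()

# Min-max scale each filter independently to [0, 1] so it can be shown as an image.
flat = filters.view(filters.size(0), -1)
lo = flat.min(dim=1).values.view(-1, 1, 1, 1)
hi = flat.max(dim=1).values.view(-1, 1, 1, 1)
scaled = (filters - lo) / (hi - lo + 1e-8)

print(scaled.shape)  # torch.Size([8, 1, 5, 5])
```

Each `scaled[i, 0]` is then a small 2D array that can be passed to an image plotting call such as matplotlib's `imshow` with a grayscale colormap.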

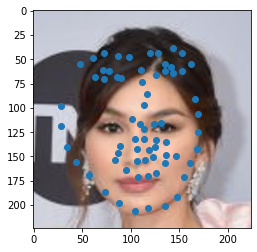

Here are a few people as samples from the DataLoader, alongside Ground Truth Face Keypoints:

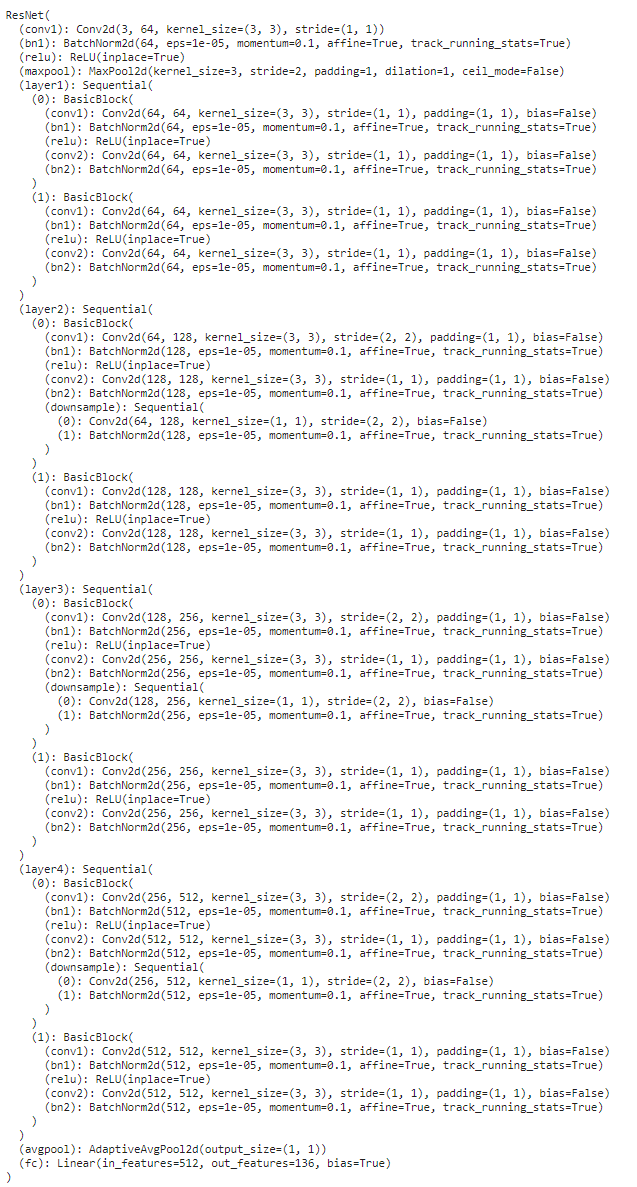

I chose the following architecture for my NN: ResNet18 with an input Convolutional Layer (in=3, out=64) and an output FC Layer (in=512, out=136):

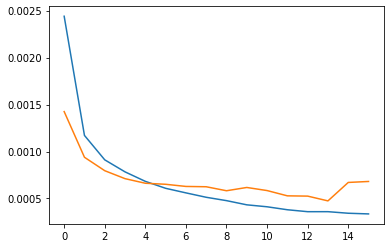

I trained the model for 16 epochs with a batch size of 16, using the Adam optimizer with a learning rate of 0.0001. The final epoch's training loss was 0.00033, and its validation loss was 0.00068. Below is my loss graph over the 16 epochs:

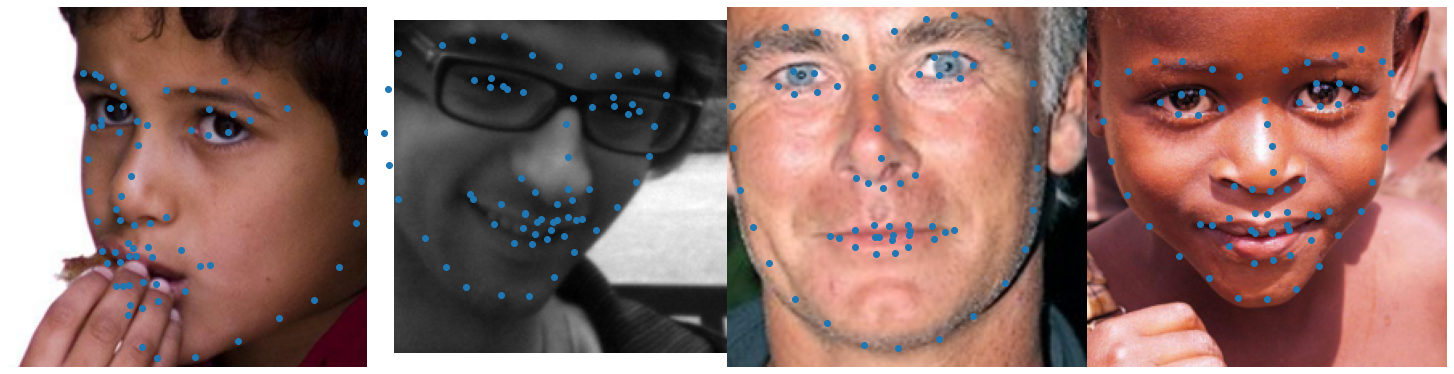

Here are a few predictions on the Kaggle Test Dataset for the ResNet:

Here are some predictions on my own images!