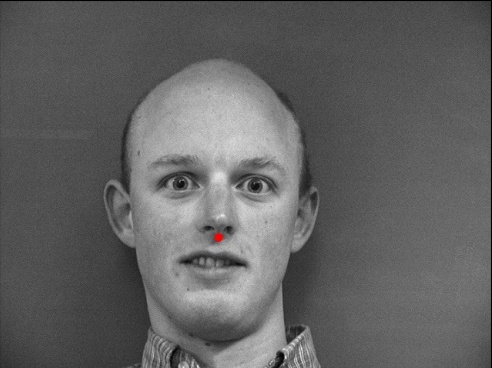

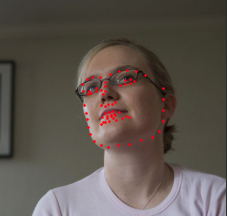

Dataloader Example 1

Before image processing

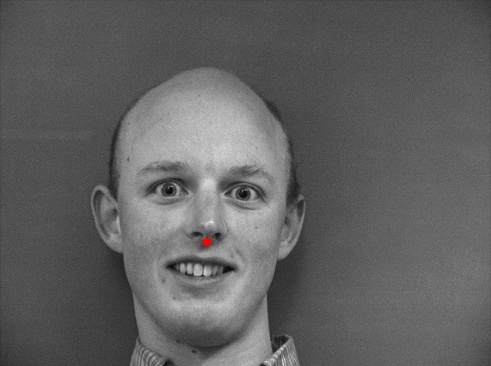

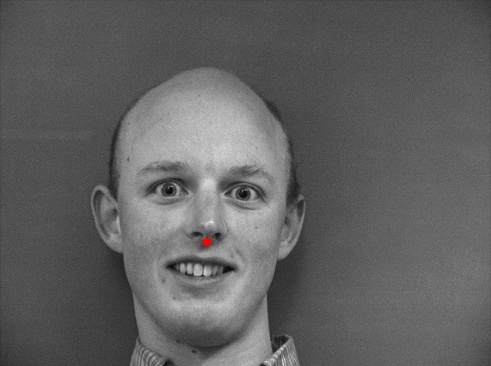

Dataloader Example 2

Before image processing

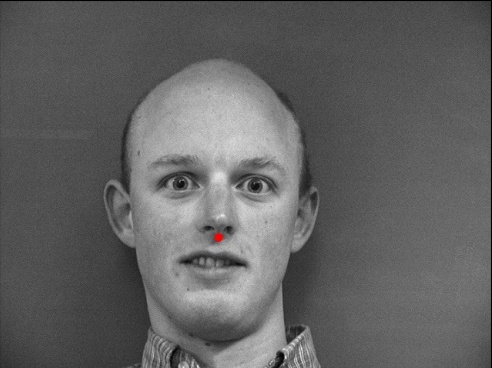

Dataloader Example 3

Before image processing

In this project, we attempt to use neural networks to detect facial keypoints automatically.

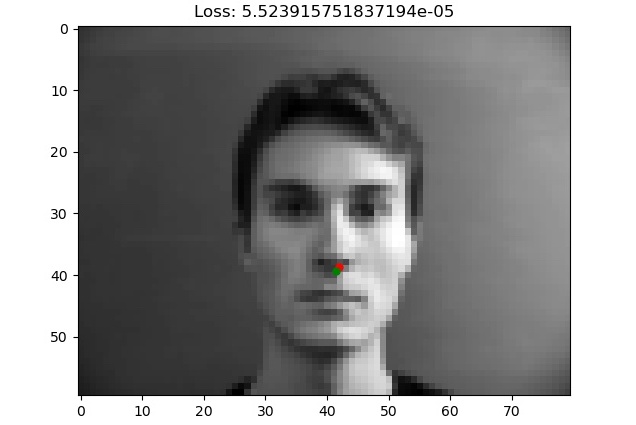

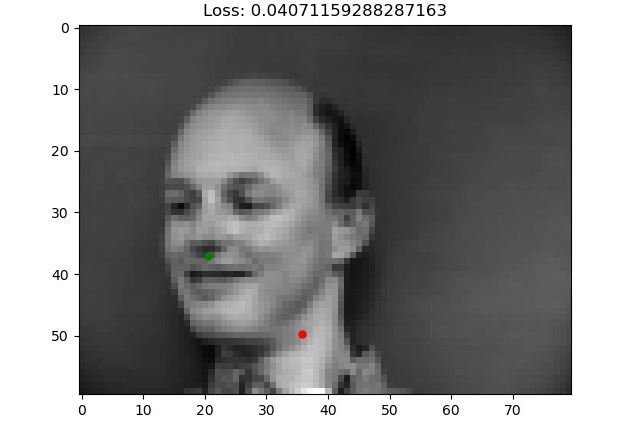

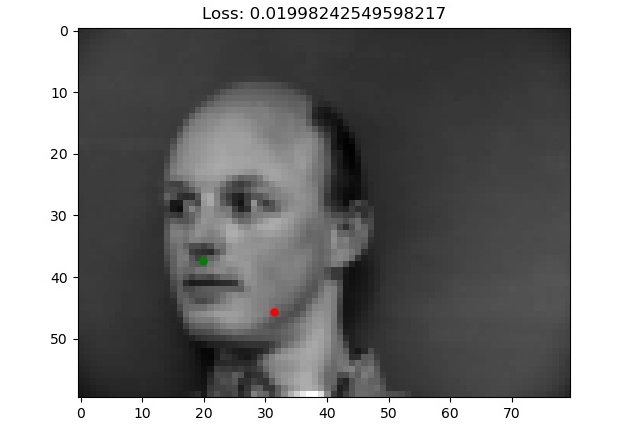

This part limits full facial keypoint detection to just the nose tip. I trained a convolutional neural network on images and labels from the IMM Face Database, using PyTorch both to build the model and to set up the data for the network. Here are some examples from the Dataloader with the nose tip annotated on each image.

Dataloader Example 1

Before image processing

Dataloader Example 2

Before image processing

Dataloader Example 3

Before image processing

Dataloader Example 1

After image processing

Dataloader Example 2

After image processing

Dataloader Example 3

After image processing
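The before/after pairs above come from a preprocessing step in the Dataloader. Here is a minimal sketch of what that step might look like. It assumes the images arrive as uint8 grayscale arrays and the nose tip is stored as a fraction of the image width and height. The 80x60 output size, the nearest-neighbour resize, and the [-0.5, 0.5] normalization are illustrative assumptions and are not stated in this writeup. The function name `preprocess` is hypothetical.

```python
import numpy as np

def preprocess(image: np.ndarray, nose_rel: np.ndarray, out_hw=(60, 80)):
    """Downsample a grayscale uint8 image and normalize it to [-0.5, 0.5].

    nose_rel is an (x, y) pair in relative coordinates (0..1), so it is
    unaffected by resizing. out_hw = (height, width) is an assumed size.
    """
    h, w = image.shape
    out_h, out_w = out_hw
    # Nearest-neighbour resize: pick evenly spaced source rows and columns.
    rows = (np.arange(out_h) * h / out_h).astype(int)
    cols = (np.arange(out_w) * w / out_w).astype(int)
    small = image[np.ix_(rows, cols)]
    # Map pixel values from [0, 255] to [-0.5, 0.5].
    small = small.astype(np.float32) / 255.0 - 0.5
    # Nose tip in pixel coordinates of the resized image (useful for plotting).
    scale = np.array([out_w, out_h], dtype=np.float32)
    nose_px = np.asarray(nose_rel, dtype=np.float32) * scale
    return small, nose_px
```

Storing labels in relative coordinates means resizing only changes the image. The label needs rescaling only when it is drawn.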

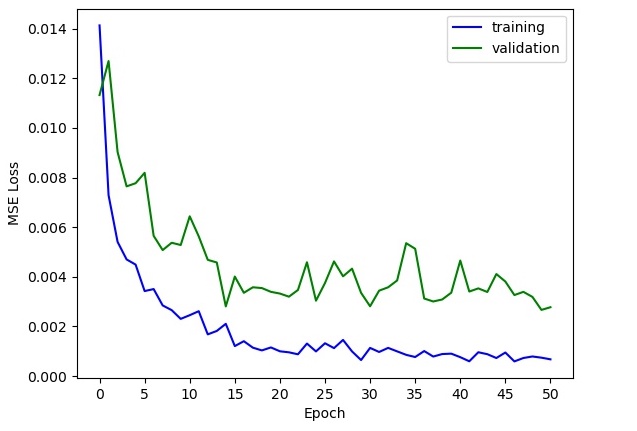

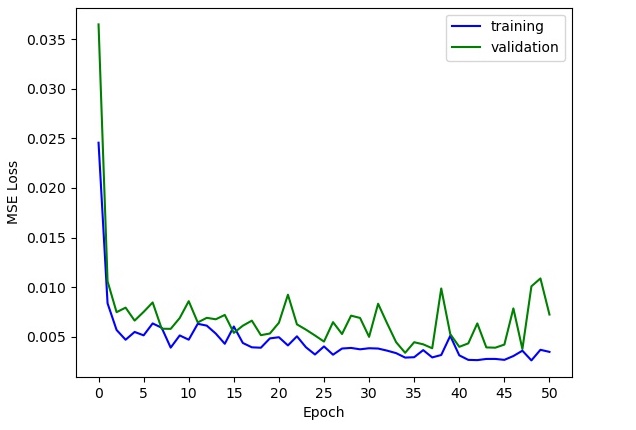

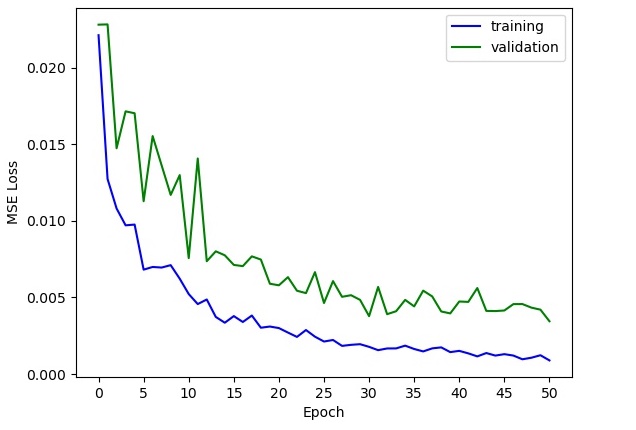

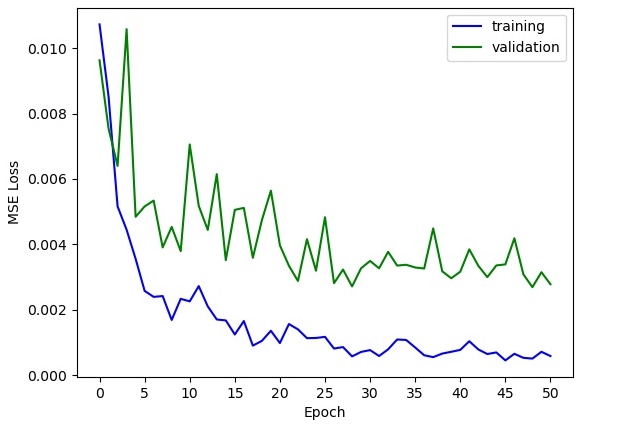

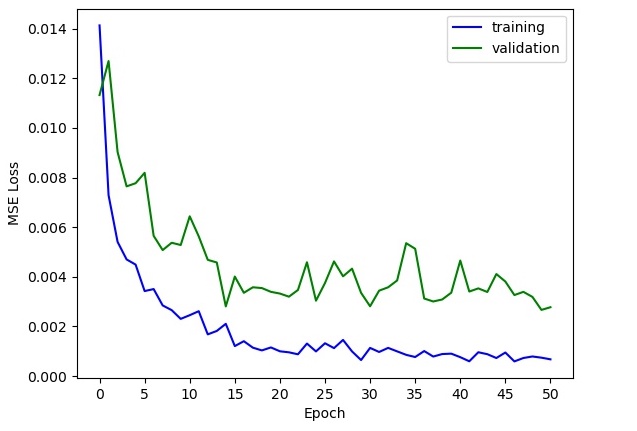

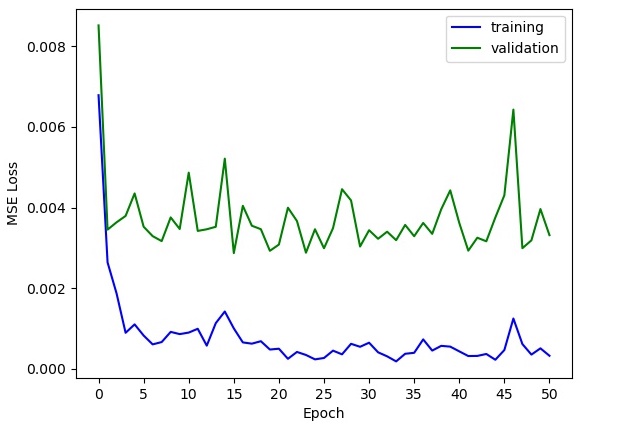

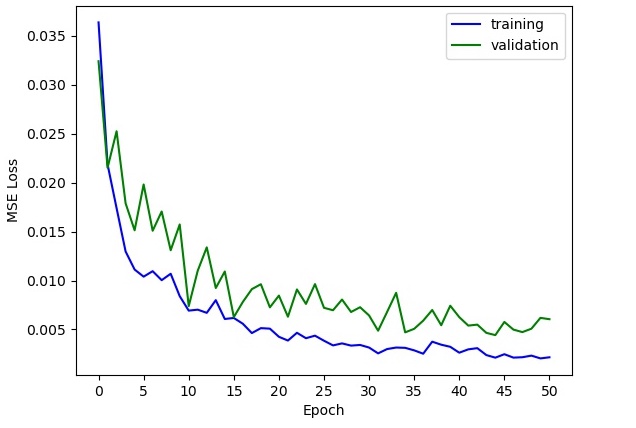

Here is a visualization of the training vs validation losses as the neural network trains with different learning rates.

My Model

lr = 8e-4

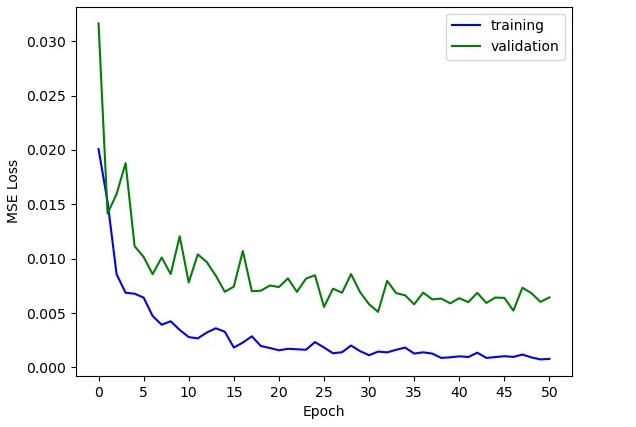

Different Model

lr = 1e-2

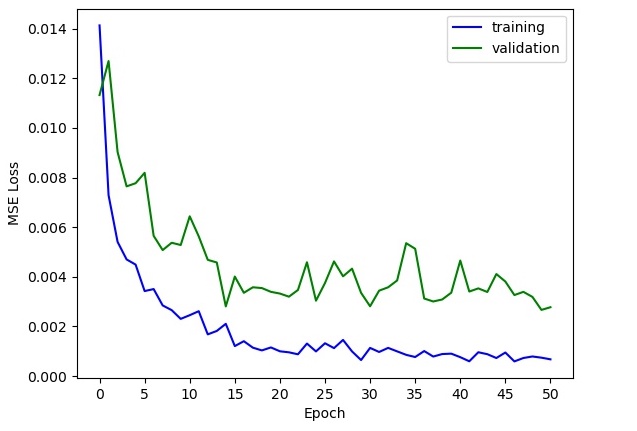

Different Model

lr = 1e-4

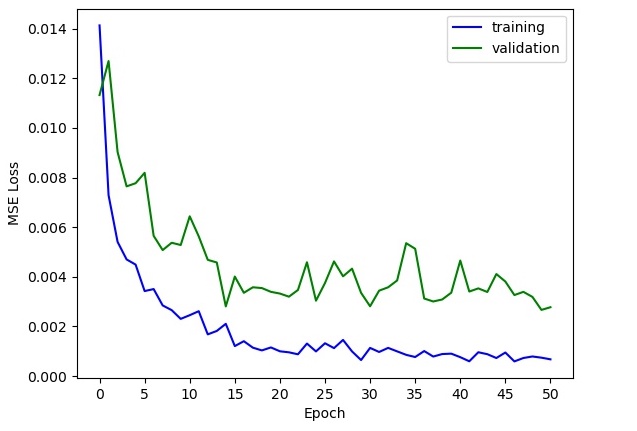
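Comparisons like the one above come from retraining the same network with one setting changed and logging the average training and validation loss every epoch. Here is a minimal sketch of such a loop. It assumes Adam and MSE loss, which are the optimizer and loss this writeup lists for the full-keypoint model. The defaults mirror "My Model" (lr = 8e-4, batch size = 12). The function name `train` is hypothetical.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

def train(model, train_set, val_set, lr=8e-4, batch_size=12, epochs=10):
    """Train with Adam + MSE; return per-epoch (train_losses, val_losses)."""
    train_loader = DataLoader(train_set, batch_size=batch_size, shuffle=True)
    val_loader = DataLoader(val_set, batch_size=batch_size)
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    loss_fn = nn.MSELoss()
    train_losses, val_losses = [], []
    for _ in range(epochs):
        model.train()
        total, count = 0.0, 0
        for images, targets in train_loader:
            optimizer.zero_grad()
            loss = loss_fn(model(images), targets)
            loss.backward()
            optimizer.step()
            # Weight by batch size so a short final batch is averaged correctly.
            total += loss.item() * len(images)
            count += len(images)
        train_losses.append(total / count)

        model.eval()
        total, count = 0.0, 0
        with torch.no_grad():
            for images, targets in val_loader:
                total += loss_fn(model(images), targets).item() * len(images)
                count += len(images)
        val_losses.append(total / count)
    return train_losses, val_losses
```

A sweep is then just repeated calls with a fresh model each time, e.g. `train(new_model(), train_set, val_set, lr=1e-2)`, and the two returned lists are what gets plotted.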

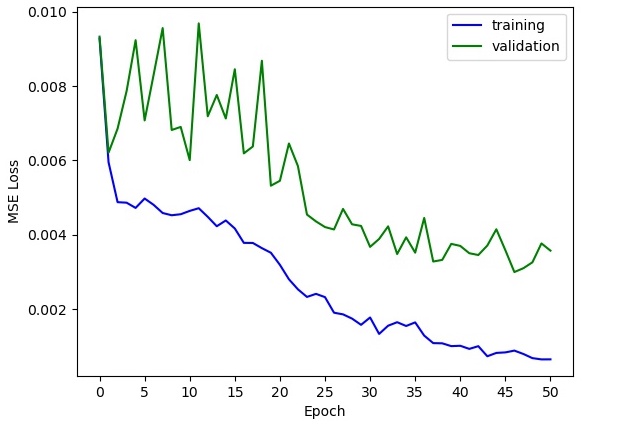

Here is a visualization of the training vs validation losses as the neural network trains with different batch sizes.

My Model

batch size = 12

Different Model

batch size = 6

Different Model

batch size = 24

Here is a visualization of the training vs validation losses as the neural network trains with/without batch normalization.

My Model

with batch normalization

Different Model

without batch normalization

Here is a visualization of the training vs validation losses as the neural network trains with and without dropout.

My Model

with dropout = 0.1

Different Model

without dropout

Different Model

with dropout = 0.5
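The batch normalization and dropout comparisons only need a model where both are switchable. Here is a minimal sketch of such a nose-tip regressor. The three conv blocks, their channel counts (12, 24, 32), the 128-unit hidden layer, and the 60x80 input are assumptions for illustration and are not the exact architecture used here. The class name `NoseNet` is hypothetical.

```python
import torch
from torch import nn

class NoseNet(nn.Module):
    """Small CNN regressing one (x, y) point; batch norm and dropout are toggles."""

    def __init__(self, use_batchnorm=True, dropout=0.1, in_hw=(60, 80)):
        super().__init__()
        layers, in_ch = [], 1
        for out_ch in (12, 24, 32):  # assumed channel counts
            layers.append(nn.Conv2d(in_ch, out_ch, kernel_size=3, padding=1))
            if use_batchnorm:
                layers.append(nn.BatchNorm2d(out_ch))
            layers += [nn.ReLU(), nn.MaxPool2d(2)]
            in_ch = out_ch
        self.features = nn.Sequential(*layers)
        h, w = in_hw
        # Padded 3x3 convs keep the size; three 2x poolings floor-divide by 8.
        flat = in_ch * (h // 8) * (w // 8)
        self.head = nn.Sequential(
            nn.Flatten(),
            nn.Linear(flat, 128),
            nn.ReLU(),
            nn.Dropout(p=dropout),  # p=0.0 is equivalent to no dropout
            nn.Linear(128, 2),      # (x, y) of the nose tip
        )

    def forward(self, x):
        return self.head(self.features(x))
```

`NoseNet()`, `NoseNet(use_batchnorm=False)`, and `NoseNet(dropout=0.5)` then give the variants being compared, with everything else held fixed.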

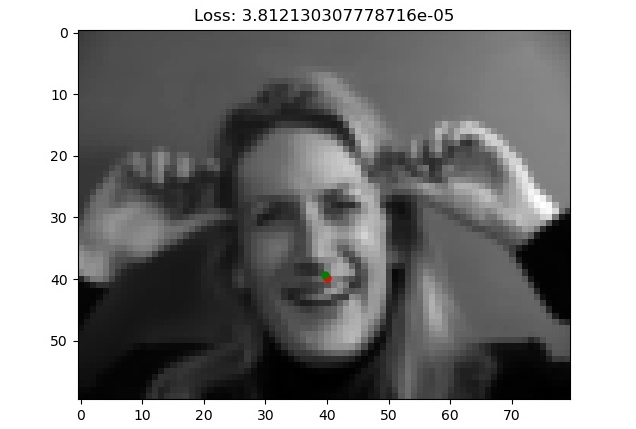

Here is a visualization of the neural network's 2 best and 2 worst predictions on the validation set (green is ground truth and red is prediction).

Success #1

Success #2

Failure #1

Failure #2
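One way to pick successes and failures like these is to rank validation images by their error. Here is a minimal sketch. It assumes predictions and ground truth have already been collected into NumPy arrays of shape (N, K, 2), with K = 1 for the nose tip. The helper name `best_and_worst` is hypothetical.

```python
import numpy as np

def best_and_worst(preds, truths, k=2):
    """Return indices of the k lowest-error and k highest-error images.

    preds, truths: arrays of shape (N, K, 2) with K (x, y) keypoints per image.
    Error is the per-image mean squared error, matching an MSE training loss.
    """
    errors = ((preds - truths) ** 2).mean(axis=(1, 2))
    order = np.argsort(errors)          # ascending error
    return order[:k], order[::-1][:k]   # best first, worst first
```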

I believe the success cases perform well because the headshots are taken straight on. Most faces in the dataset look directly at the camera, so it makes sense that frontal faces are predicted best. The 2 failure cases, however, feature faces turned sharply to the side. I believe the model does not see enough turned faces during training, especially at this angle. The network may also be biased toward predicting points near the center of the image, since that is where most of the nose keypoints in the training data lie.
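A quick way to test the center-bias hypothesis is to compare the network against a trivial baseline that always predicts the training-set mean keypoint location. If the network's validation error is not much lower than this baseline, it has learned little beyond the average position. Here is a minimal sketch, assuming keypoints are stored in arrays of shape (N, K, 2). The function name `mean_baseline_mse` is hypothetical.

```python
import numpy as np

def mean_baseline_mse(train_pts, val_pts):
    """MSE on val_pts when every image is predicted as the mean training keypoint."""
    mean_pt = train_pts.mean(axis=0)  # shape (K, 2), broadcast over images
    return float(((val_pts - mean_pt) ** 2).mean())
```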

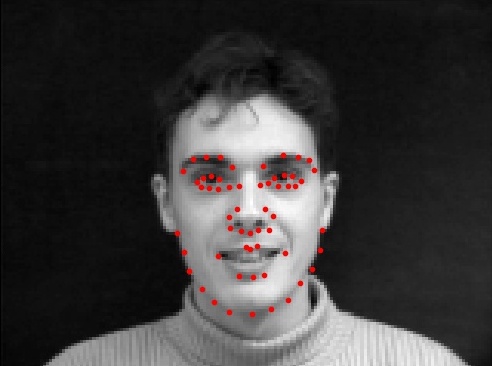

This part of the project extends the previous part by predicting all 58 facial keypoints instead of just the nose tip. I train the network on the original data plus additional samples generated with data augmentation: color jitter, random rotations, and random translations. The color jitter is hard to see in grayscale, but the other 2 are easier to understand visually. Here are some examples of the data augmentation techniques used to train the network.

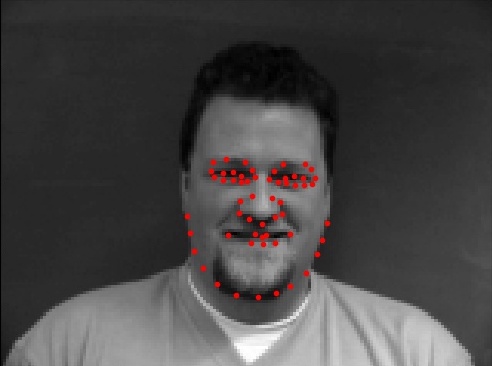

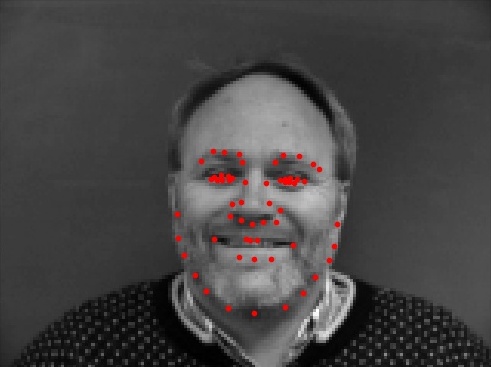

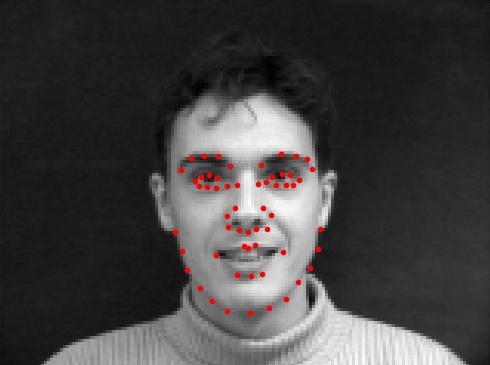

Dataloader Example 1

Before data augmentation

Dataloader Example 2

Before data augmentation

Dataloader Example 3

Before data augmentation

Color Jitter

After data augmentation

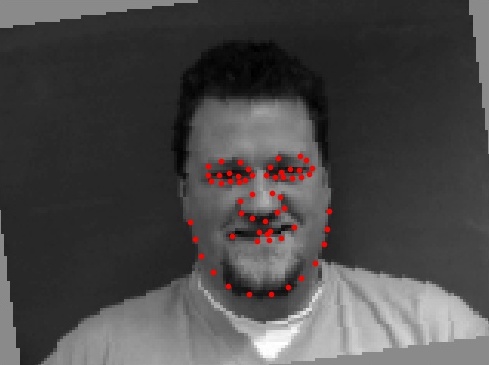

Rotation

After data augmentation

Translation

After data augmentation
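The main subtlety with geometric augmentation is that the keypoints must go through the same transform as the image. Color jitter, by contrast, leaves them unchanged. Here is a minimal sketch of the keypoint side plus a brightness-only jitter for grayscale images. The helper names and the [0, 1] pixel range are hypothetical, and the image warp itself is left to whatever library performs it.

```python
import numpy as np

def rotate_keypoints(points, angle_deg, center):
    """Rotate (x, y) keypoints of shape (K, 2) by angle_deg about center.

    Positive angles rotate counter-clockwise in a y-up frame, which appears
    clockwise in image coordinates (y points down). The routine that warps
    the image must use the same angle convention.
    """
    theta = np.deg2rad(angle_deg)
    rot = np.array([[np.cos(theta), -np.sin(theta)],
                    [np.sin(theta),  np.cos(theta)]])
    return (points - center) @ rot.T + center

def translate_keypoints(points, dx, dy):
    """Shift keypoints by the same (dx, dy) pixel offset applied to the image."""
    return points + np.array([dx, dy])

def jitter_brightness(image, max_delta, rng):
    """Grayscale color jitter: add one random brightness offset, clipped to [0, 1]."""
    delta = rng.uniform(-max_delta, max_delta)
    return np.clip(image + delta, 0.0, 1.0)
```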

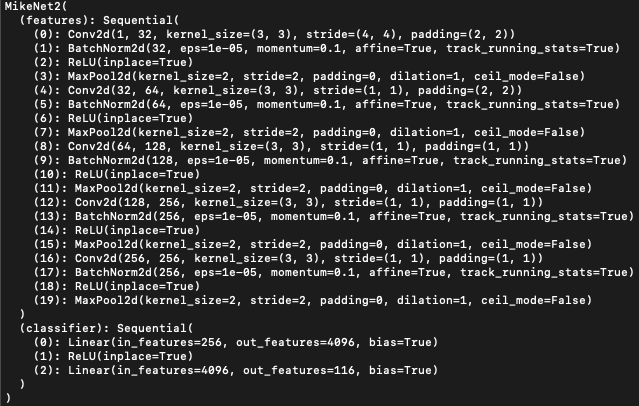

The image below details the architecture of my neural net layer by layer. In short, I use 5 blocks of (Conv2d, BatchNorm2d, ReLU, MaxPool2d), and I tried to follow the VGG best practices: doubling the number of channels after every pool, using a 3x3 kernel for every convolution, and always using ReLU (as opposed to softmax, etc.). Other parameters not shown in the diagram: input size 120x160x1, optimizer Adam, loss function MSE, batch size 16, learning rate 1e-3, and 77 training epochs.

Neural Net Architecture

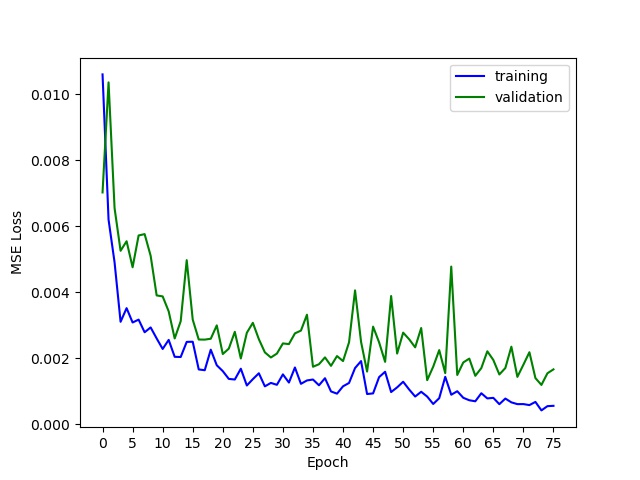
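The block structure described above can be sketched in PyTorch as follows. The 5 conv blocks, 3x3 kernels, 32 first-layer filters doubling after each pool, 120x160 grayscale input, and 58 x 2 = 116 outputs come from this writeup. The fully connected head (one 512-unit hidden layer) is an assumption, since the diagram is not reproduced in text. The class name `KeypointNet` is hypothetical.

```python
import torch
from torch import nn

class KeypointNet(nn.Module):
    """5 x (Conv2d 3x3 -> BatchNorm2d -> ReLU -> MaxPool2d), then a linear head."""

    def __init__(self, num_keypoints=58, in_hw=(120, 160)):
        super().__init__()
        blocks, in_ch = [], 1
        for out_ch in (32, 64, 128, 256, 512):  # double channels after every pool
            blocks += [
                nn.Conv2d(in_ch, out_ch, kernel_size=3, padding=1),
                nn.BatchNorm2d(out_ch),
                nn.ReLU(),
                nn.MaxPool2d(2),
            ]
            in_ch = out_ch
        self.features = nn.Sequential(*blocks)
        h, w = in_hw
        # Five 2x poolings floor-divide each side by 32: 120x160 -> 3x5.
        flat = in_ch * (h // 32) * (w // 32)
        self.head = nn.Sequential(
            nn.Flatten(),
            nn.Linear(flat, 512),               # assumed hidden size
            nn.ReLU(),
            nn.Linear(512, num_keypoints * 2),  # (x, y) per keypoint
        )

    def forward(self, x):
        return self.head(self.features(x))
```

Training would pair it with the stated settings, e.g. `torch.optim.Adam(model.parameters(), lr=1e-3)` and `nn.MSELoss()` with batches of 16.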

Here is a visualization of the training vs validation losses of the neural network over 77 epochs.

Loss Graph

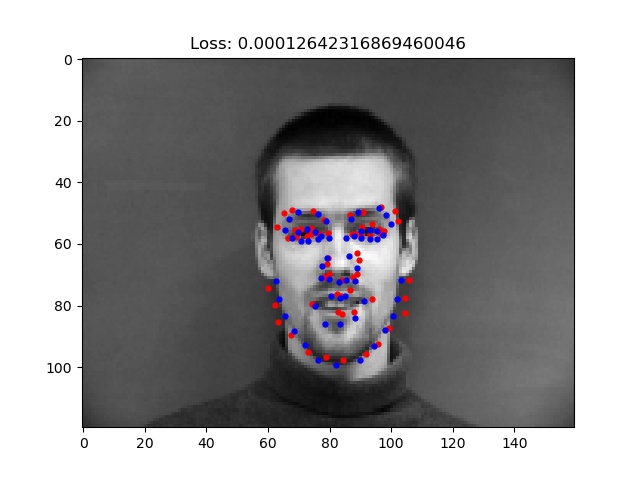

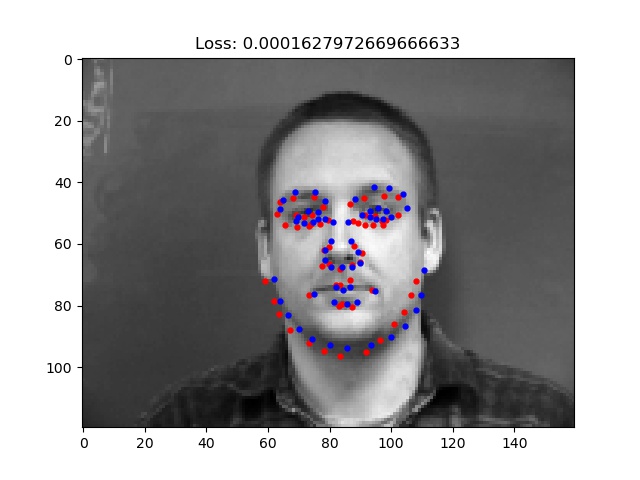

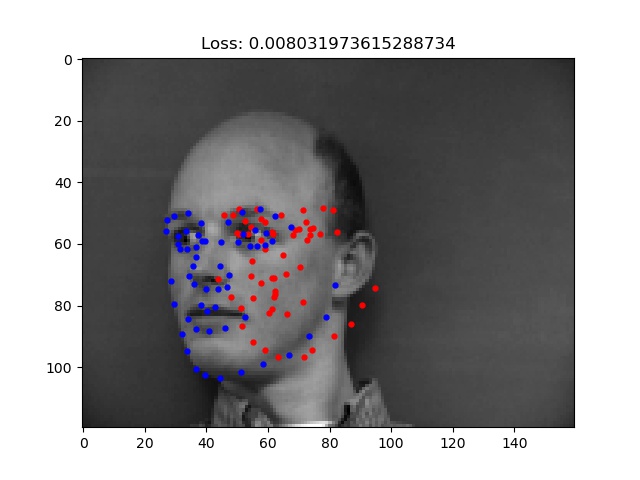

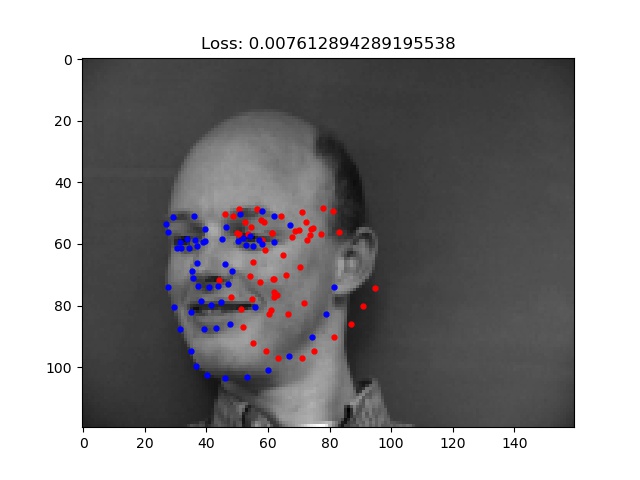

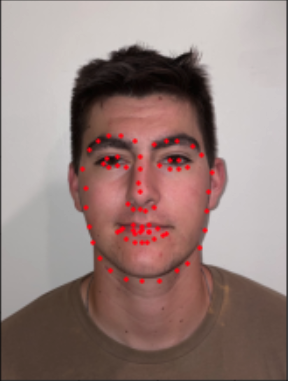

Here is a visualization of the neural network's 2 best and 2 worst predictions on the validation set, which has no data augmentation applied (blue is ground truth and red is prediction).

Success #1

Success #2

Failure #1

Failure #2

I believe these success cases once again perform well because the headshots are taken straight on and the faces are somewhat "average" in shape. Even with the data augmentation techniques, most faces in our dataset are still frontal. The 2 failure cases, on the other hand, feature faces turned sharply to the side (the same faces that were the failure cases in the previous part). I believe the model does not see enough turned faces, especially at this angle. The network may also be biased toward predicting points near the center of the image, since that is where most of the facial keypoints in the training data lie.

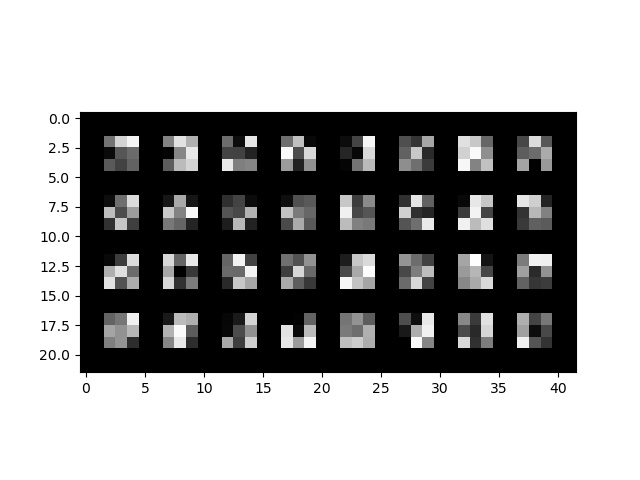

The grid below shows a visualization of the 32 filters in the first conv layer of my neural net. Since the kernel size is 3x3, it's hard to make out any significant characteristics of the filters.

Filters
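A filter grid like this can be built by min-max scaling each kernel independently and tiling the kernels into one image. Here is a minimal sketch. It assumes the first conv layer's weights have been pulled out as a NumPy array of shape (32, 1, 3, 3), e.g. via `layer.weight.detach().cpu().numpy()`, where `layer` is whatever the model's first `nn.Conv2d` is. The 4 x 8 layout and the helper name `filter_grid` are hypothetical.

```python
import numpy as np

def filter_grid(weights, rows=4, cols=8, pad=1):
    """Tile conv filters of shape (N, 1, k, k) into one 2-D image in [0, 1]."""
    n, _, k, _ = weights.shape
    # White (1.0) background so the padding separates neighbouring filters.
    grid = np.ones((rows * (k + pad) - pad, cols * (k + pad) - pad))
    for i in range(min(n, rows * cols)):
        f = weights[i, 0]
        f = (f - f.min()) / (f.max() - f.min() + 1e-8)  # per-filter min-max scaling
        r, c = divmod(i, cols)
        top, left = r * (k + pad), c * (k + pad)
        grid[top:top + k, left:left + k] = f
    return grid
```

The result can be shown with `matplotlib.pyplot.imshow(grid, cmap="gray")`. Scaling each filter separately makes weak filters visible, but it hides their relative magnitudes.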

This part of the project extends the previous part by training and testing on a much larger dataset. I used Google Colab to train the neural network since the workload would be too much for my Mac Mini. I had to make 7 new Google accounts because Colab's free tier only gives you a couple hours of runtime, which is not even close to enough for how long this network has to train. Fun fact: 7 accounts is the max number of Gmail accounts for one phone number :,)

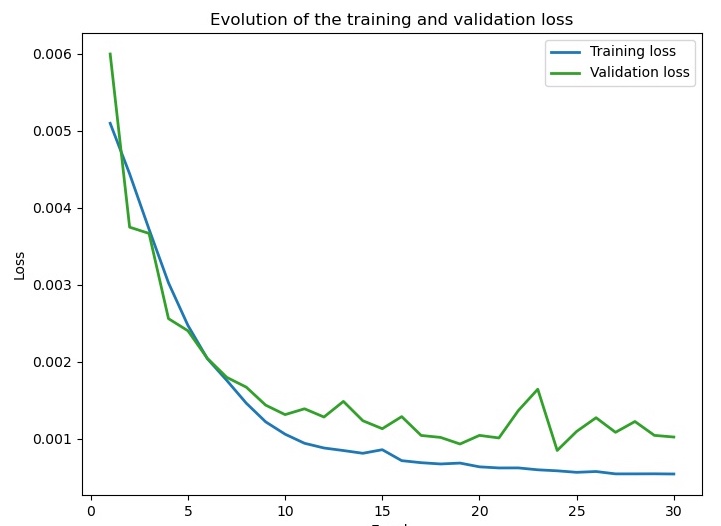

I used PyTorch's ResNet18 model and applied transfer learning by setting the pretrained flag to True. I changed the first layer of the model to take in 1 channel instead of 3 so that it accepts grayscale rather than RGB images, and set the last fc layer to output 136 values (68 keypoints with an (x, y) pair each). Training parameters: input size 224x224x1, optimizer Adam, loss function MSE, batch size 128, learning rate 1e-3, learning rate scheduler with gamma = 0.9, and 30 training epochs.

Here is a visualization of the training vs validation losses of the neural network over 30 epochs.

Training/Validation Loss Graph

Here are some examples of the neural net predicting facial keypoints on some random images from the test set.

Test Image 1

Test Image 2

Test Image 3

Test Image 4

Test Image 5

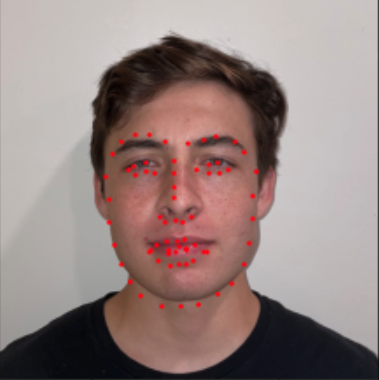

Here are some examples of the neural net predicting facial keypoints on images that I provided. The net does a good job on all of them because they are straight-on headshots, which are "easy" for the network since it was trained on many similar images.

Steve Keypoints

Derek Keypoints

Jack Keypoints
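Running the net on your own photos means cropping the face, resizing the crop to the 224x224 model input, predicting, and mapping the predictions back onto the photo. This writeup does not say how the faces were cropped. The sketch below assumes a face box given as (left, top, width, height) in original-photo pixels, and the helper name `to_original_coords` is hypothetical.

```python
import numpy as np

def to_original_coords(pred, crop_box, model_size=224):
    """Map (K, 2) keypoints predicted on a model_size x model_size crop back to the photo.

    crop_box: (left, top, width, height) of the face crop in the original photo.
    """
    left, top, width, height = crop_box
    # Undo the resize (per axis), then undo the crop offset.
    scale = np.array([width / model_size, height / model_size])
    return pred * scale + np.array([left, top])
```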

My mean absolute error on Kaggle is 17.10657.
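For reference, mean absolute error averages the absolute pixel difference over every predicted coordinate. Here is a minimal sketch, assuming the score is taken over all x and y values of all test images. The exact Kaggle scoring setup is not described here, and the function name is hypothetical.

```python
import numpy as np

def mean_absolute_error(pred, truth):
    """Average |pred - truth| over every keypoint coordinate of every image."""
    return float(np.abs(np.asarray(pred) - np.asarray(truth)).mean())
```

Under that assumption, a score of 17.10657 means a predicted coordinate is off by about 17 pixels on average.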