In this project, we train neural networks to predict facial keypoints from images. First, we predict only the nose-tip keypoint on the Danes dataset. Then, we predict all of the facial keypoints on the same dataset. Finally, we train a larger network on a larger dataset and enter a Kaggle competition to see how well it performs.

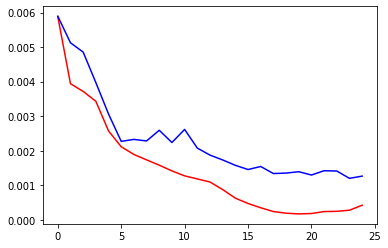

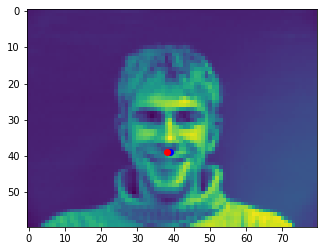

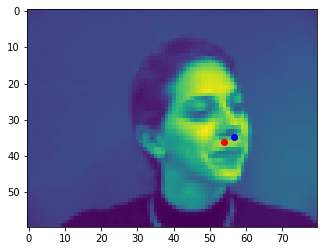

To predict just the nose tip, we extract only that keypoint from the annotations and train a 3-layer neural net with max pooling after each layer and a couple of fully connected layers at the end. The output of this net is the keypoint. I've attached results below: first the train and validation loss, then some predictions. The noses were predicted pretty well for the most part; the failure cases were mainly images where the keypoint was tagged a bit off. I used a learning rate of 0.001 for my network. Increasing the learning rate resulted in a very noisy training loss. I also tried increasing the number of layers, but then the network just kept outputting the same value.
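As a rough illustration of the architecture described above, here is a minimal PyTorch sketch. Only the overall shape (3 layers, max pooling after each, a couple of fully connected layers, a learning rate of 0.001) comes from the write-up. The class name `NoseNet`, the use of convolutional layers with ReLU, the channel widths, the 60x80 grayscale input, the Adam optimizer, and the MSE loss are all assumptions made for the example, not the original code.

```python
import torch
import torch.nn as nn


class NoseNet(nn.Module):
    """Hypothetical 3-layer conv net that regresses one (x, y) keypoint."""

    def __init__(self, in_h=60, in_w=80):  # input size is an assumption
        super().__init__()
        # Three conv layers, each followed by ReLU and 2x2 max pooling.
        self.features = nn.Sequential(
            nn.Conv2d(1, 12, kernel_size=5, padding=2), nn.ReLU(), nn.MaxPool2d(2),
            nn.Conv2d(12, 24, kernel_size=3, padding=1), nn.ReLU(), nn.MaxPool2d(2),
            nn.Conv2d(24, 32, kernel_size=3, padding=1), nn.ReLU(), nn.MaxPool2d(2),
        )
        # Run a dummy image through the conv stack to get the flattened size.
        with torch.no_grad():
            n_flat = self.features(torch.zeros(1, 1, in_h, in_w)).numel()
        # "A couple" of fully connected layers; the last one outputs (x, y).
        self.head = nn.Sequential(
            nn.Flatten(), nn.Linear(n_flat, 128), nn.ReLU(), nn.Linear(128, 2)
        )

    def forward(self, x):
        return self.head(self.features(x))


model = NoseNet()
optimizer = torch.optim.Adam(model.parameters(), lr=0.001)  # lr from the write-up
criterion = nn.MSELoss()  # assumed loss for coordinate regression

# One training step on random stand-in data (not the real dataset).
images = torch.rand(4, 1, 60, 80)
targets = torch.rand(4, 2)
pred = model(images)
loss = criterion(pred, targets)
optimizer.zero_grad()
loss.backward()
optimizer.step()
```

Inferring the flattened size from a dummy forward pass avoids hard-coding it, which makes it easier to experiment with different input resolutions or layer counts.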

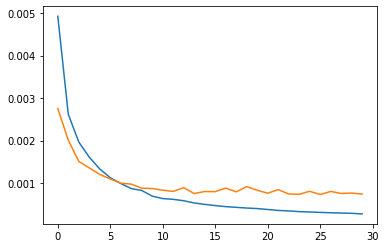

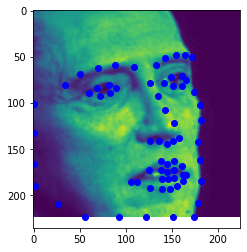

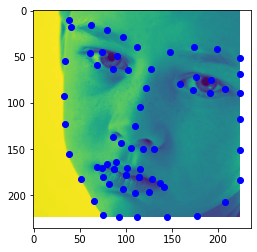

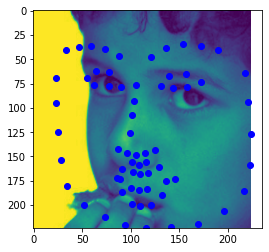

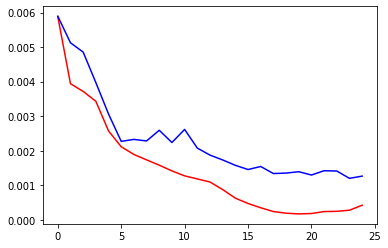

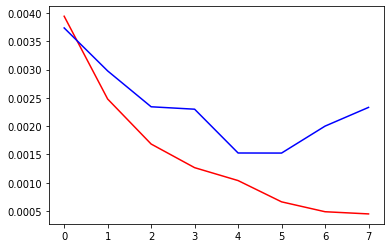

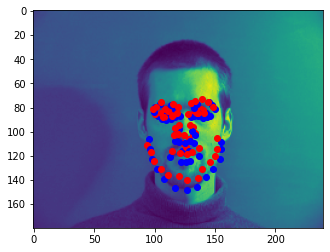

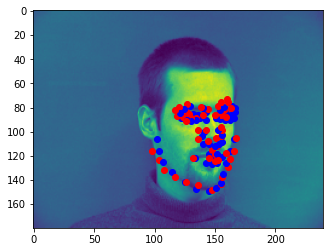

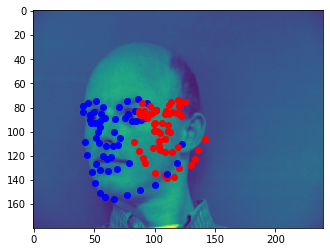

To predict all of the face keypoints, we load the keypoints with the dataloader and flatten them into a single vector, then train a 3-layer neural net with max pooling after each layer and a couple of fully connected layers at the end. I tried expanding to 5 layers, but 3 ended up working much better. The output of this net is the flattened keypoint vector, which I iterate through to recover the individual points for my results. I've attached results below: first the train and validation loss, then some predictions. The keypoints were predicted pretty well for the most part; the failure cases were mainly images where the keypoints were tagged a bit off, or where the face was rotated a bit (not facing the camera). I used a learning rate of 0.001 for my network, with 3 layers each followed by a ReLU.
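The flatten-then-iterate step can be sketched with NumPy as follows. The helper names `flatten_keypoints` and `unflatten_keypoints`, the `(x0, y0, x1, y1, ...)` ordering, and the three example points are assumptions for illustration; the real dataset has more keypoints per face.

```python
import numpy as np


def flatten_keypoints(keypoints):
    """Turn an (N, 2) array of (x, y) points into a length-2N target vector."""
    return np.asarray(keypoints, dtype=np.float32).reshape(-1)


def unflatten_keypoints(flat):
    """Turn a length-2N network output back into (N, 2) points."""
    return np.asarray(flat).reshape(-1, 2)


# Three made-up keypoints in normalized image coordinates.
keypoints = np.array([[0.25, 0.5], [0.5, 0.75], [0.75, 0.5]])
flat = flatten_keypoints(keypoints)  # what the network is trained to output

# Iterate through the flattened output one (x, y) pair at a time,
# e.g. to draw each predicted point on the image.
points = [(float(x), float(y)) for x, y in unflatten_keypoints(flat)]
```

Keeping the same ordering in both helpers matters: if the target vector were laid out as all x values followed by all y values, the reshape on the way back would have to change to match.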

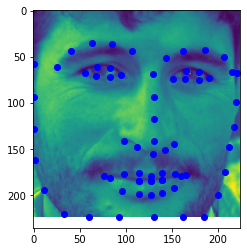

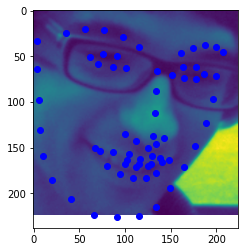

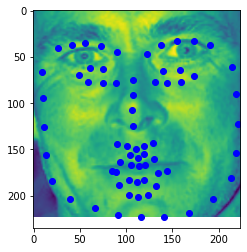

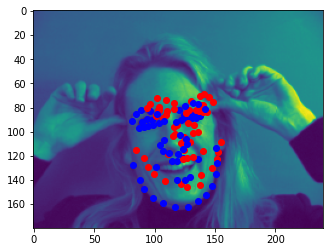

Here, I adapted the dataloader I had before to work with the larger dataset, and ran training in Google Colab. I used ResNet18 as the base, with an output layer added on top that maps ResNet18's output neurons to 136 neurons, which is the number of keypoint coordinate values I had to predict (an x and a y for each keypoint). I used a learning rate of 0.001. I've attached my results below, including train and validation loss. I've also attached some keypoint predictions, some of which were good and some of which were bad. My Kaggle score was 25.05066.