In this part of the project, we used PyTorch to build a neural network that detects the location of the nose tip on a face. We trained on the IMM Face Dataset, which contains images of faces and their corresponding facial keypoint locations. We had to write a custom dataloader, a convolutional neural network, and the network training/validation code.

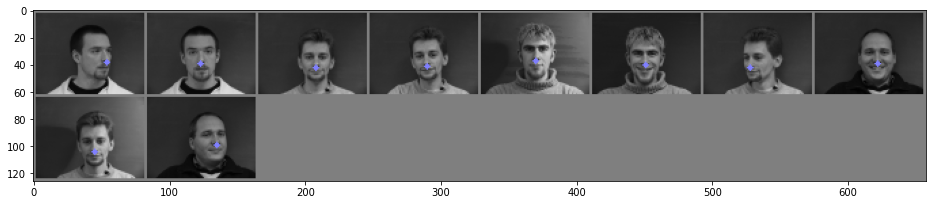

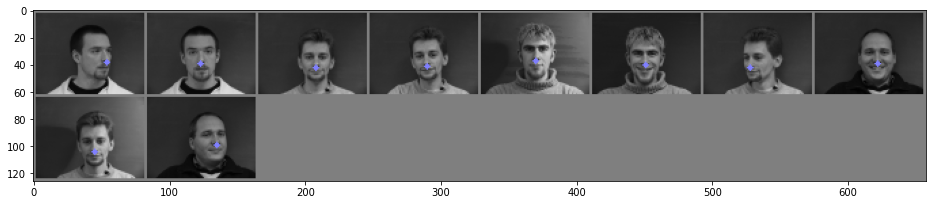

Samples from dataloader, with nose tip annotated (images were converted to grayscale, normalized, and resized to (80, 60)):
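The custom dataloader described above can be sketched as a PyTorch `Dataset` that normalizes, resizes, and returns the nose-tip coordinate. This is a minimal sketch with several assumptions. Grayscale conversion is assumed to happen upstream. Keypoints are assumed to be stored as fractions of image width/height. The scaling to [-0.5, 0.5] is an assumed normalization. `(80, 60)` is read as width × height. `NoseTipDataset` and `nose_index` are hypothetical names, not the author's code.

```python
import numpy as np
import torch
import torch.nn.functional as F
from torch.utils.data import Dataset


class NoseTipDataset(Dataset):
    """Hypothetical sketch of a nose-tip dataset.

    images:     list of HxW uint8 grayscale arrays (grayscale conversion done upstream)
    keypoints:  (num_images, num_points, 2) array of (x, y) as fractions of width/height
    nose_index: index of the nose-tip point (a parameter here, not a known constant)
    out_hw:     output (height, width); (60, 80) is an assumed reading of "(80, 60)"
    """

    def __init__(self, images, keypoints, nose_index, out_hw=(60, 80)):
        self.images = images
        self.keypoints = np.asarray(keypoints, dtype=np.float32)
        self.nose_index = nose_index
        self.out_hw = out_hw

    def __len__(self):
        return len(self.images)

    def __getitem__(self, i):
        # Scale pixels to [-0.5, 0.5] (an assumed normalization scheme).
        img = torch.as_tensor(self.images[i], dtype=torch.float32) / 255.0 - 0.5
        # F.interpolate expects (N, C, H, W): add batch and channel dims, then drop the batch dim.
        img = F.interpolate(img[None, None], size=self.out_hw,
                            mode="bilinear", align_corners=False)[0]
        # Fractional coordinates are unchanged by resizing.
        nose = torch.as_tensor(self.keypoints[i, self.nose_index])
        return img, nose


rng = np.random.default_rng(0)
images = [rng.integers(0, 256, size=(480, 640), dtype=np.uint8) for _ in range(3)]
keypoints = rng.uniform(0, 1, size=(3, 58, 2))
ds = NoseTipDataset(images, keypoints, nose_index=0)
img, nose = ds[0]
print(img.shape, nose.shape)  # torch.Size([1, 60, 80]) torch.Size([2])
```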

I divided my data into a training and validation set, using the images of the first 32 people in the dataset as training and the images of the remaining 8 people as validation.
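Splitting by person rather than by image keeps the same face out of both sets. Below is a minimal sketch of that split. It assumes IMM filenames of the form `01-1m.jpg`, where the number before the dash is the person ID (1 to 40). `split_by_person` is a hypothetical helper.

```python
from pathlib import Path


def split_by_person(filenames, num_train_people=32):
    """Return (train, val); people 1..num_train_people go to training.

    Assumes the person ID is the integer before the first '-' in the filename.
    """
    train, val = [], []
    for name in filenames:
        person_id = int(Path(name).name.split("-")[0])
        (train if person_id <= num_train_people else val).append(name)
    return train, val


# Synthetic filenames: 40 people x 6 images each (naming pattern assumed).
files = [f"{p:02d}-{i}m.jpg" for p in range(1, 41) for i in range(1, 7)]
train, val = split_by_person(files)
print(len(train), len(val))  # 192 48
```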

For my final network, I used three convolutional layers (each outputting 16 channels), each followed by a ReLU and a max pooling layer. These were followed by two linear layers of sizes (1120, 256) and (256, 2). I also added a dropout layer with p = 0.3 after the second convolutional layer to reduce overfitting.
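The network above can be sketched in PyTorch as follows. The first linear layer's input size of 1120 = 16 × 7 × 10 works out only if each 3×3 convolution preserves spatial size. So this sketch assumes `padding=1`: 60×80 halves to 30×40, then 15×20, then 7×10. Three further details are assumptions: the ReLU between the two linear layers, placing dropout after the second block's pooling, and the class name `NoseNet`.

```python
import torch
import torch.nn as nn


def conv_block(c_in, c_out):
    # 3x3 conv with padding=1 keeps H and W; the 2x2 max pool then halves them (floor).
    return [nn.Conv2d(c_in, c_out, kernel_size=3, padding=1), nn.ReLU(), nn.MaxPool2d(2)]


class NoseNet(nn.Module):  # hypothetical name
    def __init__(self, dropout=0.3):
        super().__init__()
        self.features = nn.Sequential(
            *conv_block(1, 16),
            *conv_block(16, 16),
            nn.Dropout(dropout),  # assumed placement: after the second conv block
            *conv_block(16, 16),
        )
        self.head = nn.Sequential(
            nn.Flatten(),           # 16 * 7 * 10 = 1120 for a 60x80 input
            nn.Linear(1120, 256),
            nn.ReLU(),              # assumed activation between the linear layers
            nn.Linear(256, 2),      # (x, y) of the nose tip
        )

    def forward(self, x):
        return self.head(self.features(x))


model = NoseNet()
print(model(torch.zeros(4, 1, 60, 80)).shape)  # torch.Size([4, 2])
```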

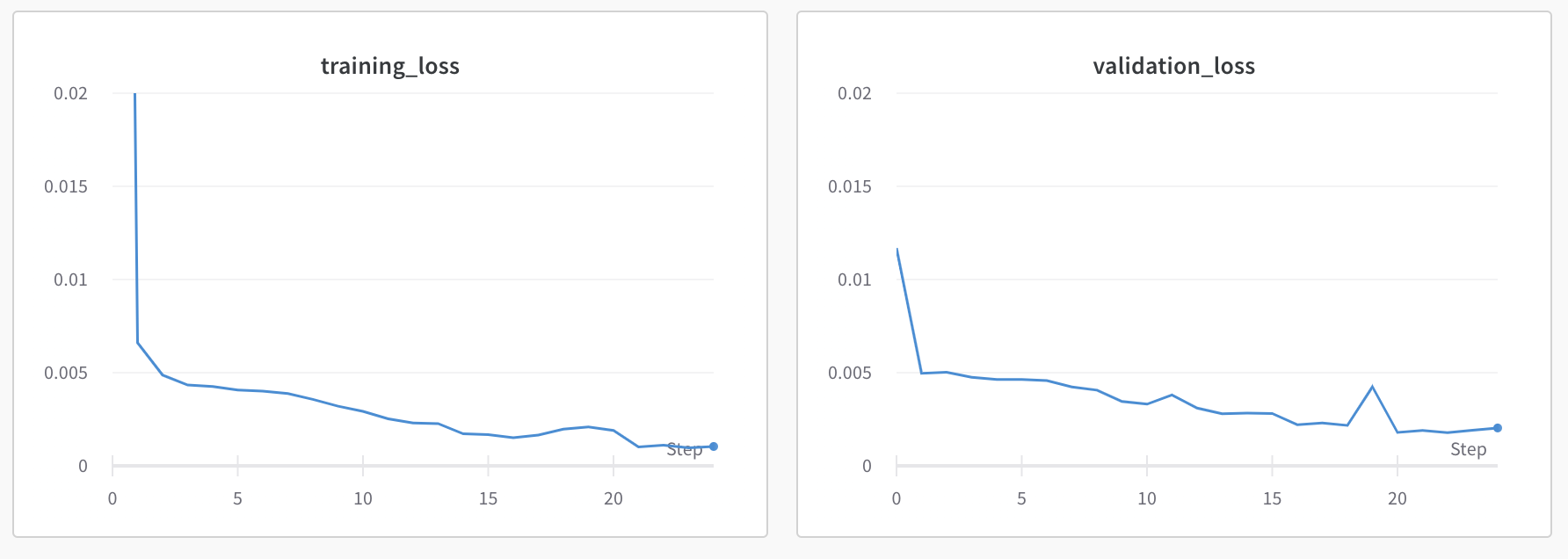

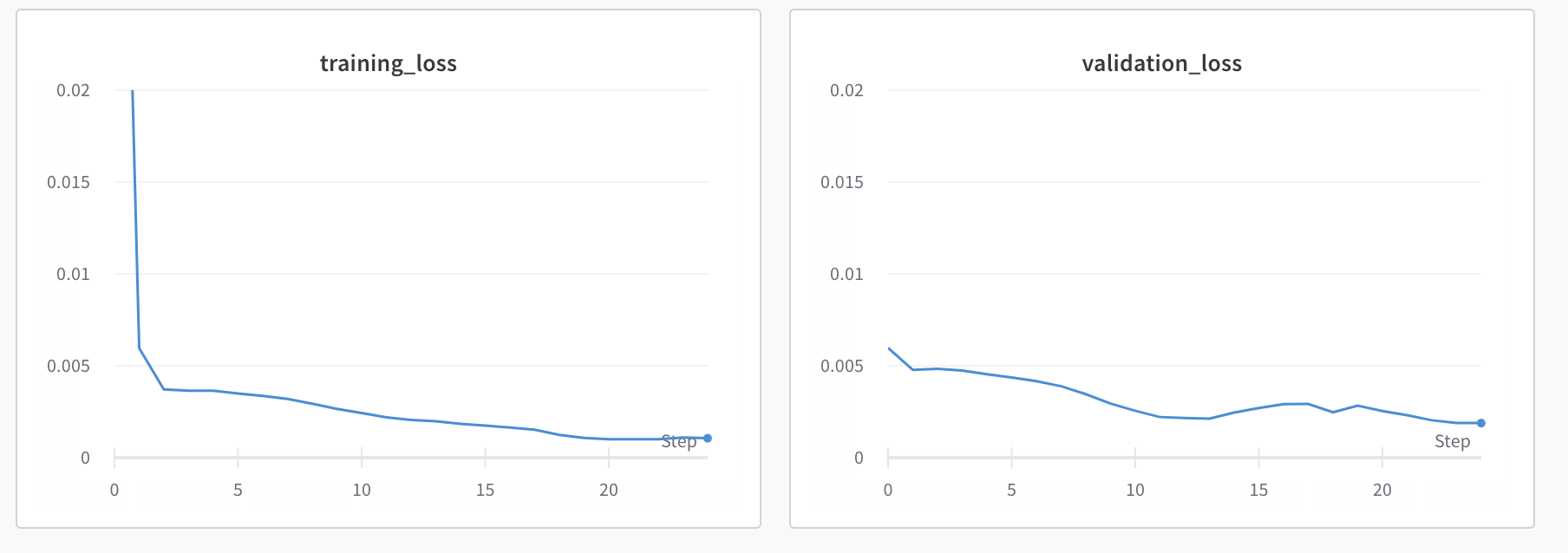

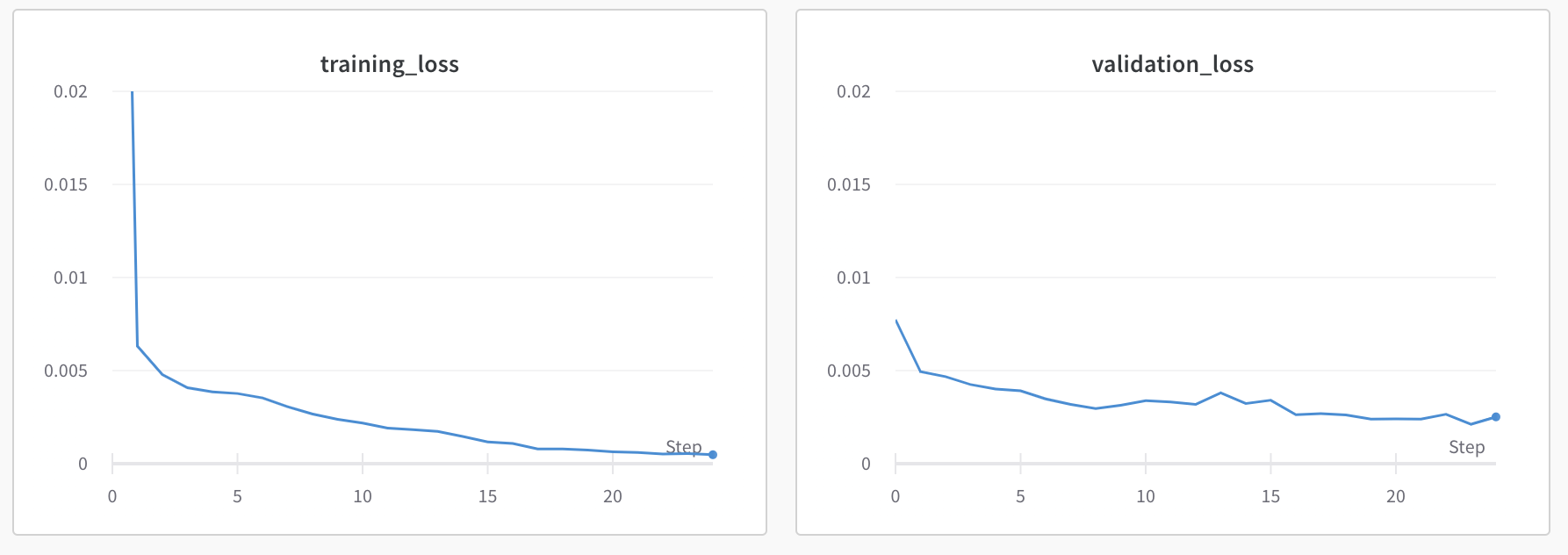

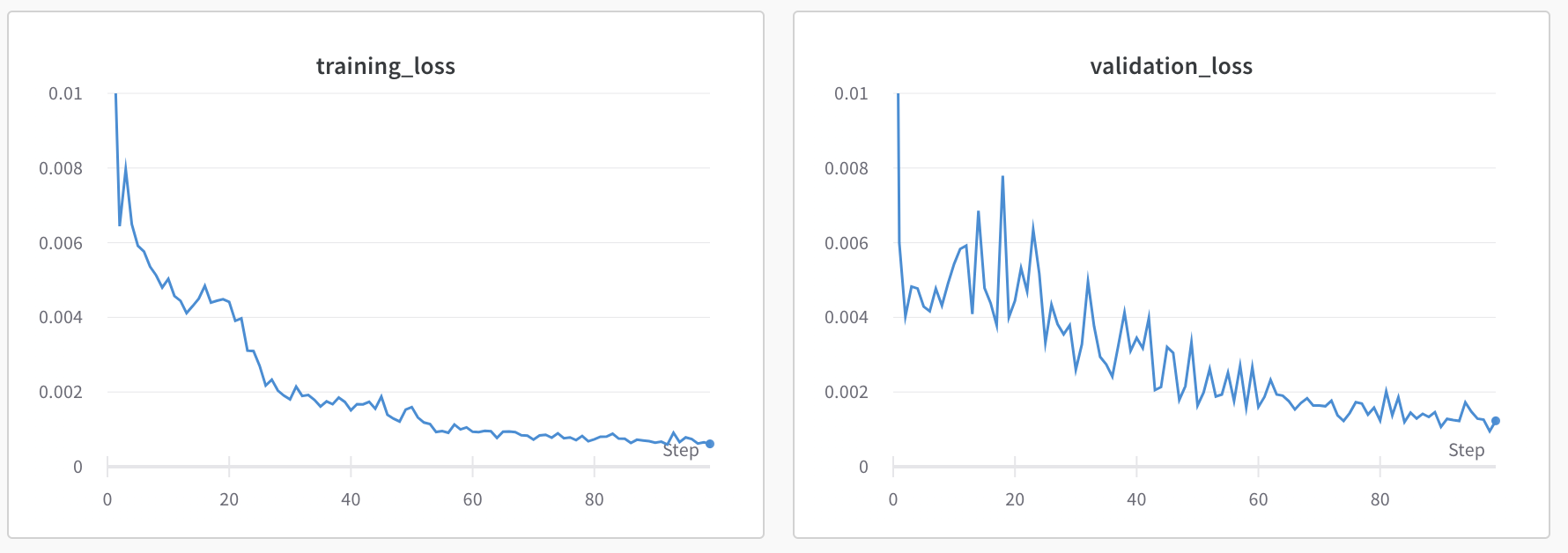

Below, I show the training/validation loss graphs for a few different sets of hyperparameters I tried while choosing my final model:

Test 1: Learning rate = 1e-2, num_epochs = 25, batch_size = 10, filter_size = 3, dropout = 0.3:

This looks reasonable, so next I tried a different learning rate to see whether the model could do even better.

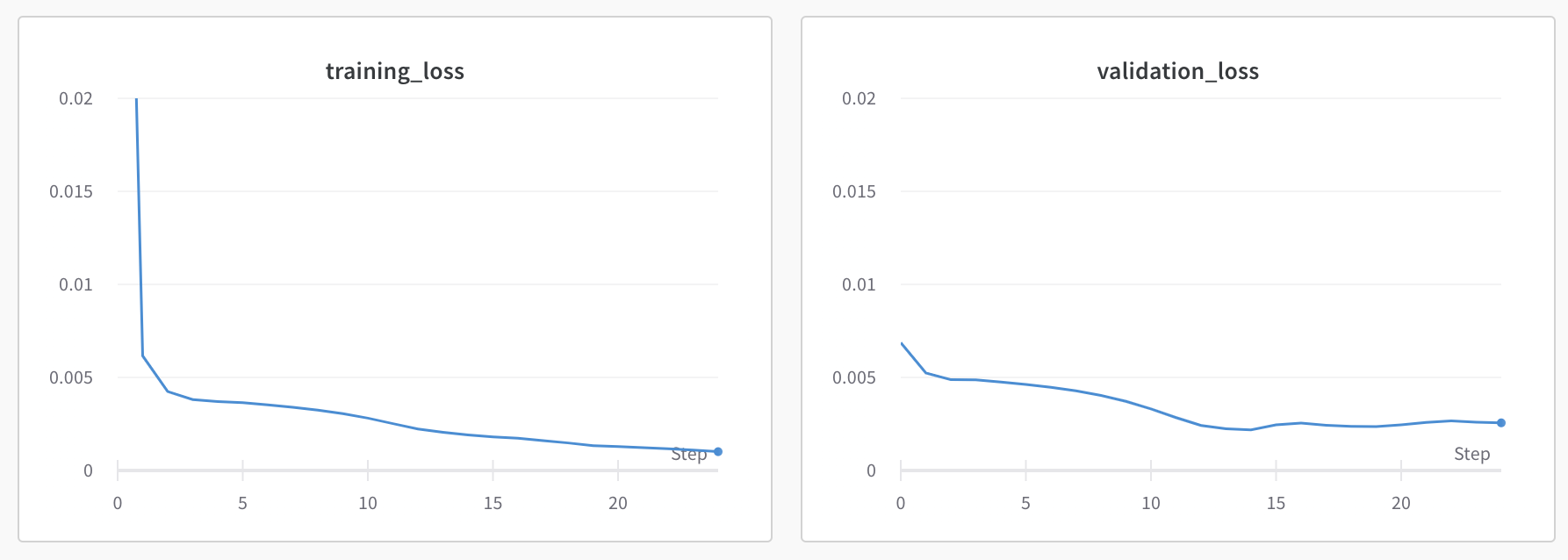

Test 2: Learning rate = 1e-3, num_epochs = 25, batch_size = 10, filter_size = 3, dropout = 0.3:

The final validation loss improved slightly. Next, I tried increasing the filter size of my convolutions to see if I could bring down the overall training/validation loss (i.e., reduce underfitting).

Test 3: Learning rate = 1e-3, num_epochs = 25, batch_size = 10, filter_size = 5, dropout = 0.3:

This resulted in some overfitting, and a higher validation loss. So, I went back to Test 2 but decreased the dropout to see if I could fix underfitting.

Test 4: Learning rate = 1e-3, num_epochs = 25, batch_size = 10, filter_size = 5, dropout = 0.1:

This did not decrease the training loss, and resulted in a higher validation loss.

I ended up choosing the parameters from Test 2 because they resulted in the lowest final validation loss.
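Comparing configurations this way needs a loop that records the mean training and validation loss after every epoch. Here is a minimal sketch. The text does not name the optimizer or loss, so Adam and mean squared error are assumptions. `run_epoch` and `fit` are hypothetical helpers. A toy model and random data stand in for the real network and dataloaders.

```python
import math

import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def run_epoch(model, loader, loss_fn, optimizer=None):
    """One pass over loader. Trains if an optimizer is given, else only evaluates.

    Returns the mean loss per sample.
    """
    training = optimizer is not None
    model.train(training)  # enables dropout only while training
    total, count = 0.0, 0
    with torch.set_grad_enabled(training):
        for images, targets in loader:
            loss = loss_fn(model(images), targets)
            if training:
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()
            total += loss.item() * images.shape[0]
            count += images.shape[0]
    return total / count


def fit(model, train_loader, val_loader, lr=1e-3, num_epochs=25):
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)  # assumed optimizer
    loss_fn = nn.MSELoss()                                   # assumed loss
    history = {"train": [], "val": []}
    for _ in range(num_epochs):
        history["train"].append(run_epoch(model, train_loader, loss_fn, optimizer))
        history["val"].append(run_epoch(model, val_loader, loss_fn))
    return history


# Toy usage with random data in place of the real dataloaders.
torch.manual_seed(0)
x, y = torch.randn(40, 1, 4, 4), torch.randn(40, 2)
train_loader = DataLoader(TensorDataset(x[:30], y[:30]), batch_size=10, shuffle=True)
val_loader = DataLoader(TensorDataset(x[30:], y[30:]), batch_size=10)
toy = nn.Sequential(nn.Flatten(), nn.Linear(16, 2))
history = fit(toy, train_loader, val_loader, lr=1e-3, num_epochs=3)
```

To pick a configuration, compare `history["val"][-1]` across runs and keep the run with the lowest value.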

Results on all validation images, with final model:

Some success cases:

Some failure cases:

The model failed on most images of this person. This may be because his hair color and hairstyle differ from those of the other men in the dataset, or because his eyebrows are closer together than those of the other people in the dataset. Surprisingly, I don't think the face angle is the cause, because the model still failed on an image of him looking directly at the camera. It seems that the model simply couldn't generalize to this person's face shape.
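One way to pick success and failure cases like these is to rank validation images by their mean keypoint error. This is a sketch of that idea. The text does not say how the examples were chosen, and `per_image_error` and `best_and_worst` are hypothetical helpers.

```python
import numpy as np


def per_image_error(pred, true):
    """Mean Euclidean distance between predicted and true keypoints, per image.

    pred, true: arrays of shape (num_images, num_keypoints, 2).
    """
    return np.linalg.norm(pred - true, axis=-1).mean(axis=-1)


def best_and_worst(pred, true, k=2):
    """Indices of the k lowest-error and k highest-error images."""
    order = np.argsort(per_image_error(pred, true))
    return order[:k], order[::-1][:k]


true = np.zeros((4, 1, 2))
pred = np.zeros((4, 1, 2))
pred[:, 0, 0] = [0.0, 3.0, 1.0, 5.0]  # x-offsets give errors 0, 3, 1, 5
best, worst = best_and_worst(pred, true, k=2)
print(best, worst)  # [0 2] [3 1]
```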

In this part, we expanded the previous part to be able to detect 58 facial keypoints instead of just the nose tip.

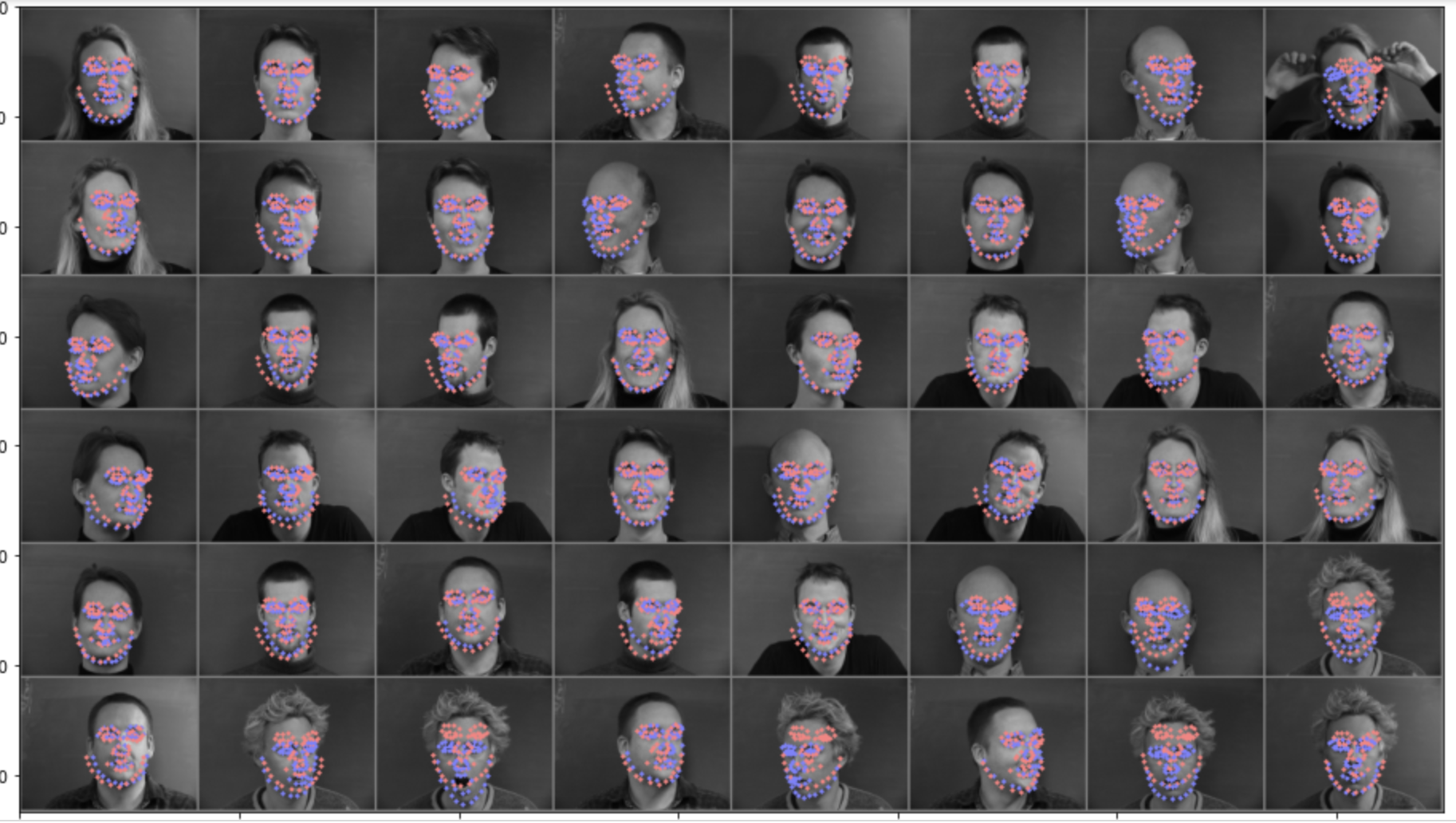

For the dataloader, I added data augmentation (for the training data only), since the dataset is small and we don't want to overfit. For each image, I applied a random rotation between -15 and 15 degrees and a random horizontal and vertical shift between -10 and 10 pixels. Below, I show some sample training data from the dataloader, with the facial keypoints annotated (images were converted to grayscale, normalized, and resized to (160, 120)).
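When an image is rotated or shifted, its keypoints must move with it. Below is a minimal numpy sketch of the keypoint side of that augmentation, with random parameters drawn from the ranges above. The helper names are hypothetical. Coordinates are (x, y) pixels with y pointing down, so a positive angle here is a clockwise turn on screen. The image warp must use the same center and sign convention, so check the convention of whichever image-rotation function you use.

```python
import numpy as np


def transform_keypoints(kps, angle_deg, shift, center):
    """Rotate (N, 2) keypoints by angle_deg about center, then translate by shift."""
    theta = np.deg2rad(angle_deg)
    c, s = np.cos(theta), np.sin(theta)
    rot = np.array([[c, -s], [s, c]])
    center = np.asarray(center, dtype=float)
    # Row-vector form of rot @ (p - center) + center, applied to every point at once.
    rotated = (np.asarray(kps, dtype=float) - center) @ rot.T + center
    return rotated + np.asarray(shift, dtype=float)


def sample_augmentation(rng, max_angle=15.0, max_shift=10):
    """Random angle in [-max_angle, max_angle]; integer dx, dy in [-max_shift, max_shift]."""
    angle = rng.uniform(-max_angle, max_angle)
    shift = rng.integers(-max_shift, max_shift + 1, size=2)
    return angle, shift


rng = np.random.default_rng(0)
angle, shift = sample_augmentation(rng)
kps = np.array([[40.0, 30.0], [80.0, 60.0]])
# Center of a 160 (H) x 120 (W) image, as (x, y).
new_kps = transform_keypoints(kps, angle, shift, center=(60.0, 80.0))
```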

For my model architecture, I used five convolutional layers followed by three linear layers. The first two convolutional layers had a filter size of 5, and the remaining three had a filter size of 3; all used a padding of 1. The convolutional layers had 12, 24, 32, 64, and 128 output channels, respectively. Each convolutional layer was followed by a ReLU and a max pooling layer (2×2 filter, stride 2). I also added two dropout layers with p = 0.3 to reduce overfitting. The final output of the network has size (batch_size, 116), because we want the (x, y) locations of all 58 keypoints for each image. The detailed model architecture is shown below (the output shape column assumes an input of size (10, 1, 160, 120), i.e., batch_size = 10):

| Layer (type:depth-idx) | Output Shape | Param # |
| --- | --- | --- |
| Part2Net | -- | -- |
| ├─Conv2d: 1-1 | [10, 12, 158, 118] | 312 |
| ├─ReLU: 1-2 | [10, 12, 158, 118] | -- |
| ├─MaxPool2d: 1-3 | [10, 12, 79, 59] | -- |
| ├─Conv2d: 1-4 | [10, 24, 77, 57] | 7,224 |
| ├─ReLU: 1-5 | [10, 24, 77, 57] | -- |
| ├─MaxPool2d: 1-6 | [10, 24, 38, 28] | -- |
| ├─Conv2d: 1-7 | [10, 32, 38, 28] | 6,944 |
| ├─ReLU: 1-8 | [10, 32, 38, 28] | -- |
| ├─MaxPool2d: 1-9 | [10, 32, 19, 14] | -- |
| ├─Dropout: 1-10 | [10, 32, 19, 14] | -- |
| ├─Conv2d: 1-11 | [10, 64, 19, 14] | 18,496 |
| ├─ReLU: 1-12 | [10, 64, 19, 14] | -- |
| ├─MaxPool2d: 1-13 | [10, 64, 9, 7] | -- |
| ├─Conv2d: 1-14 | [10, 128, 9, 7] | 73,856 |
| ├─ReLU: 1-15 | [10, 128, 9, 7] | -- |
| ├─MaxPool2d: 1-16 | [10, 128, 4, 3] | -- |
| ├─Dropout: 1-17 | [10, 128, 4, 3] | -- |
| ├─Linear: 1-18 | [10, 1024] | 1,573,888 |
| ├─ReLU: 1-19 | [10, 1024] | -- |
| ├─Linear: 1-20 | [10, 1024] | 1,049,600 |
| ├─ReLU: 1-21 | [10, 1024] | -- |
| ├─Linear: 1-22 | [10, 116] | 118,900 |

Total params: 2,849,220; trainable params: 2,849,220; non-trainable params: 0; total mult-adds (M): 572.27.

Input size (MB): 0.77; forward/backward pass size (MB): 31.23; params size (MB): 11.40; estimated total size (MB): 43.39.
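The summary above pins down enough to rebuild the network. The output shapes imply `padding=1` on every convolution. The first linear layer's 1,573,888 parameters imply a flattened size of 128 × 4 × 3 = 1536. The sketch below reproduces the listed shapes and the 2,849,220 parameter total. The layer grouping into `features`/`head` is an assumption, and this is a reconstruction rather than the author's actual code.

```python
import torch
import torch.nn as nn


def conv_block(c_in, c_out, k):
    # padding=1 reproduces the output shapes listed in the summary table.
    return [nn.Conv2d(c_in, c_out, kernel_size=k, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2)]


class Part2Net(nn.Module):
    def __init__(self, num_keypoints=58, dropout=0.3):
        super().__init__()
        self.features = nn.Sequential(
            *conv_block(1, 12, 5),    # 160x120 -> 158x118 -> 79x59
            *conv_block(12, 24, 5),   # -> 77x57 -> 38x28
            *conv_block(24, 32, 3),   # -> 38x28 -> 19x14
            nn.Dropout(dropout),
            *conv_block(32, 64, 3),   # -> 19x14 -> 9x7
            *conv_block(64, 128, 3),  # -> 9x7 -> 4x3
            nn.Dropout(dropout),
        )
        self.head = nn.Sequential(
            nn.Flatten(),             # 128 * 4 * 3 = 1536
            nn.Linear(1536, 1024), nn.ReLU(),
            nn.Linear(1024, 1024), nn.ReLU(),
            nn.Linear(1024, 2 * num_keypoints),  # 116 outputs: (x, y) for 58 points
        )

    def forward(self, x):
        return self.head(self.features(x))


model = Part2Net()
print(model(torch.zeros(10, 1, 160, 120)).shape)   # torch.Size([10, 116])
print(sum(p.numel() for p in model.parameters()))  # 2849220
```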

Overall, my hyperparameters for the final model were:

First two convolutions filter size: 5

Next three convolutions filter size: 3

Batch Size: 10

Learning rate: 1e-3

Num Epochs: 100

Dropout: 0.3

Below, I show training/validation loss for this model:

Results on all validation images, with the final model (predicted keypoints are drawn on the grayscale, normalized, and resized images):

Some success cases:

Some failure cases:

I think the model failed on these two images because their facial expressions differ from those in the other images. The first has a wide-open mouth, and the second has a slight head tilt, a large smile, and hands near the head. All of these factors make it hard for the model to generalize to these images, because it hasn't seen many images like them during training.

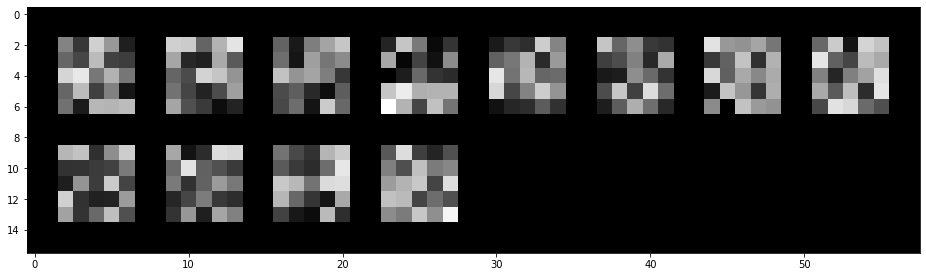

Visualizing the learned filters:

These are the learned weights of the 12 filters in the first convolutional layer (the filter images are normalized to have values between 0 and 1).

It is hard to interpret what these filters are doing just by looking at them, but I can see that they all differ from one another.
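To display raw convolution weights as images, they can be min-max scaled into [0, 1]. The text does not say whether the scaling was per filter or over the whole layer. This sketch assumes per-filter scaling, and `normalize_filters` is a hypothetical helper. For a PyTorch layer, the input would be something like `conv.weight.detach().cpu().numpy()`.

```python
import numpy as np


def normalize_filters(weights):
    """Min-max scale each filter independently to [0, 1] for display.

    weights: array of shape (out_channels, in_channels, kH, kW).
    """
    w = np.asarray(weights, dtype=float)
    flat = w.reshape(w.shape[0], -1)
    lo = flat.min(axis=1, keepdims=True)
    hi = flat.max(axis=1, keepdims=True)
    # Guard against division by zero for a constant filter.
    scaled = (flat - lo) / np.maximum(hi - lo, 1e-12)
    return scaled.reshape(w.shape)


rng = np.random.default_rng(0)
first_layer = rng.normal(size=(12, 1, 5, 5))  # same shape as the first conv layer's weights
images = normalize_filters(first_layer)
```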

For this part, we again did full facial keypoint detection, but trained on a larger dataset (the ibug_300W_large_face_landmark_dataset). We were also provided with some test images from the same dataset.

I divided the training data into training and validation sets, using 5,500 randomly selected images for training and another 1,166 for validation. For the training data, I used the same data augmentation as in Part 2 (random rotations and shifts). I also converted all images to grayscale, normalized them, cropped them to their bounding boxes, and resized them to (224, 224). For the training and validation data, I enlarged each bounding box to 1.2 times its original height and width, because I found that this reduced underfitting.
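Enlarging the box and then cropping and resizing means the keypoints must be re-expressed in the coordinates of the 224×224 crop. The sketch below shows both steps, assuming boxes are given as (left, top, width, height) and the box is enlarged about its center. The helper names are hypothetical.

```python
import numpy as np


def expand_box(left, top, width, height, scale=1.2):
    """Grow a box by `scale` in both dimensions, keeping its center fixed."""
    cx, cy = left + width / 2, top + height / 2
    new_w, new_h = width * scale, height * scale
    return cx - new_w / 2, cy - new_h / 2, new_w, new_h


def keypoints_to_crop(kps, box, out_size=224):
    """Map (N, 2) image-space (x, y) keypoints into an out_size x out_size resized crop of box."""
    left, top, w, h = box
    kps = np.asarray(kps, dtype=float)
    return np.column_stack([(kps[:, 0] - left) * out_size / w,
                            (kps[:, 1] - top) * out_size / h])


box = expand_box(100, 100, 100, 100)               # approx (90, 90, 120, 120)
crop_kps = keypoints_to_crop([[150, 150]], box)    # box center maps to approx (112, 112)
```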

I used the ResNet-18 model with two changes. The input layer accepts a 1-channel image instead of a 3-channel one, and the final linear layer outputs 136 values (the (x, y) coordinates of 68 keypoints) instead of 1000. Otherwise, I kept the architecture the same. The detailed architecture of my model is shown below (the output shape column assumes an input of size (64, 1, 224, 224), i.e., batch_size = 64):