For this task, I implemented a simple CNN with two convolutional layers with 6 and 16 filters, respectively, both using a 3x3 kernel. Each convolutional layer is followed by a max-pooling layer with a factor of 2. The features are then passed through two fully connected layers with a hidden dimension of 120. The nonlinear activation is ReLU.
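To see how many features reach the first fully connected layer, here is a minimal sketch of the shape arithmetic. It assumes a 60x80 (height x width) input, stride-1 convolutions without padding, and 2x2 max-pooling that rounds down. The padding choice and the helper names (`conv_out`, `pool_out`, `flattened_size`) are assumptions for illustration, not taken from the original code.

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial size after a convolution (standard floor formula)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size: int, factor: int = 2) -> int:
    """Spatial size after non-overlapping max-pooling (rounds down)."""
    return size // factor


def flattened_size(height: int, width: int, kernel: int = 3,
                   channels: tuple = (6, 16)) -> int:
    """Number of features fed to the first fully connected layer.

    Assumes each conv layer uses stride 1 and no padding, followed by 2x2 pooling.
    """
    h, w = height, width
    for _ in channels:
        h = pool_out(conv_out(h, kernel))
        w = pool_out(conv_out(w, kernel))
    return channels[-1] * h * w


print(flattened_size(60, 80))            # 3744 with 3x3 kernels
print(flattened_size(60, 80, kernel=5))  # 3264 with the 5x5 variant
```

Under these assumptions the first linear layer maps 3744 features to 120. Using `padding=1` with the 3x3 kernels would keep each convolution's output the same size and change this number.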

Data loading follows the suggested approach: each image is resized to 60x80 and its pixel values are normalized.
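The exact normalization is not spelled out here. The following minimal sketch assumes that 8-bit grayscale pixels are mapped to [-0.5, 0.5] and that keypoints are stored as pixel coordinates, which must be rescaled along with the image. Both the target range and the 640x480 original size in the example are assumptions. If the keypoints are stored as fractions of the image size instead, resizing leaves them unchanged.

```python
def normalize_pixels(image):
    """Map 8-bit grayscale values in [0, 255] to floats in [-0.5, 0.5].

    `image` is a list of rows of ints; the [-0.5, 0.5] target range is an assumption.
    """
    return [[value / 255.0 - 0.5 for value in row] for row in image]


def rescale_keypoints(points, old_size, new_size):
    """Scale (x, y) pixel coordinates when an image is resized.

    Sizes are (width, height) tuples; x scales with the width, y with the height.
    """
    sx = new_size[0] / old_size[0]
    sy = new_size[1] / old_size[1]
    return [(x * sx, y * sy) for x, y in points]


# A keypoint at the center of a 640x480 image stays at the center of an 80x60 image.
print(rescale_keypoints([(320, 240)], (640, 480), (80, 60)))  # [(40.0, 30.0)]
print(normalize_pixels([[0, 255]]))                           # [[-0.5, 0.5]]
```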

I tried changing the kernel size to 5x5, varying the training batch size, and using different learning rates. The results were roughly the same, within the expected run-to-run variance.

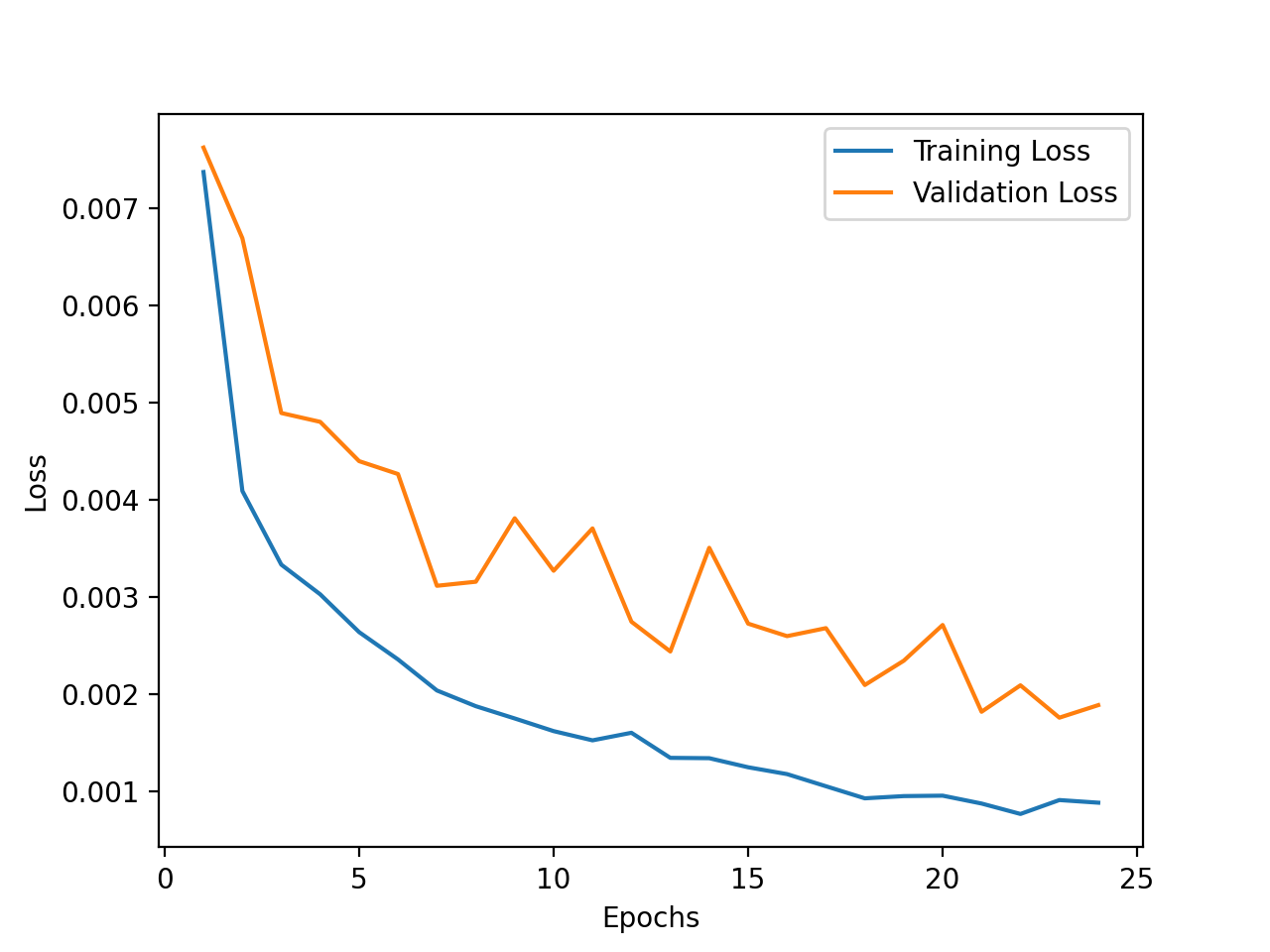

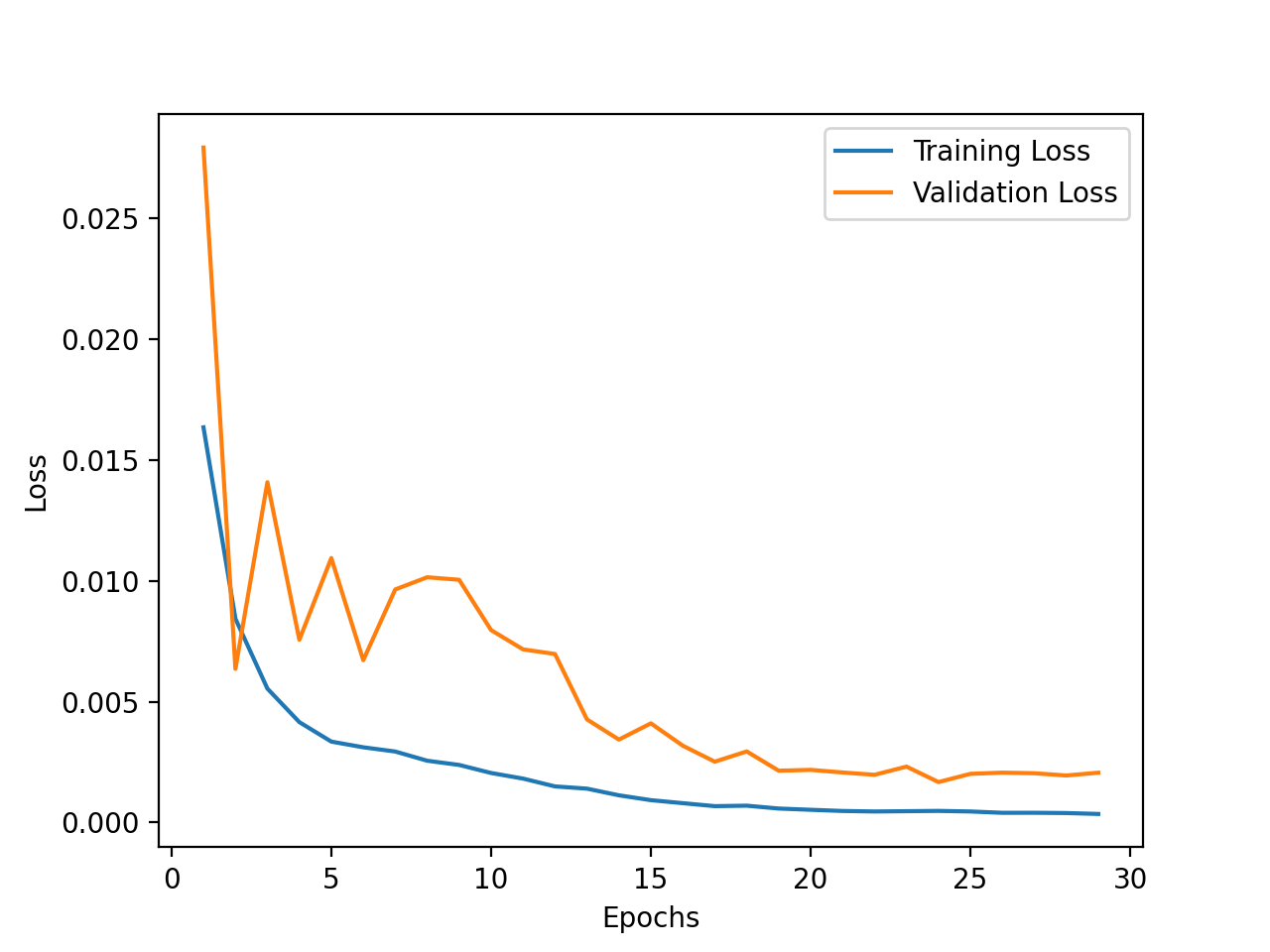

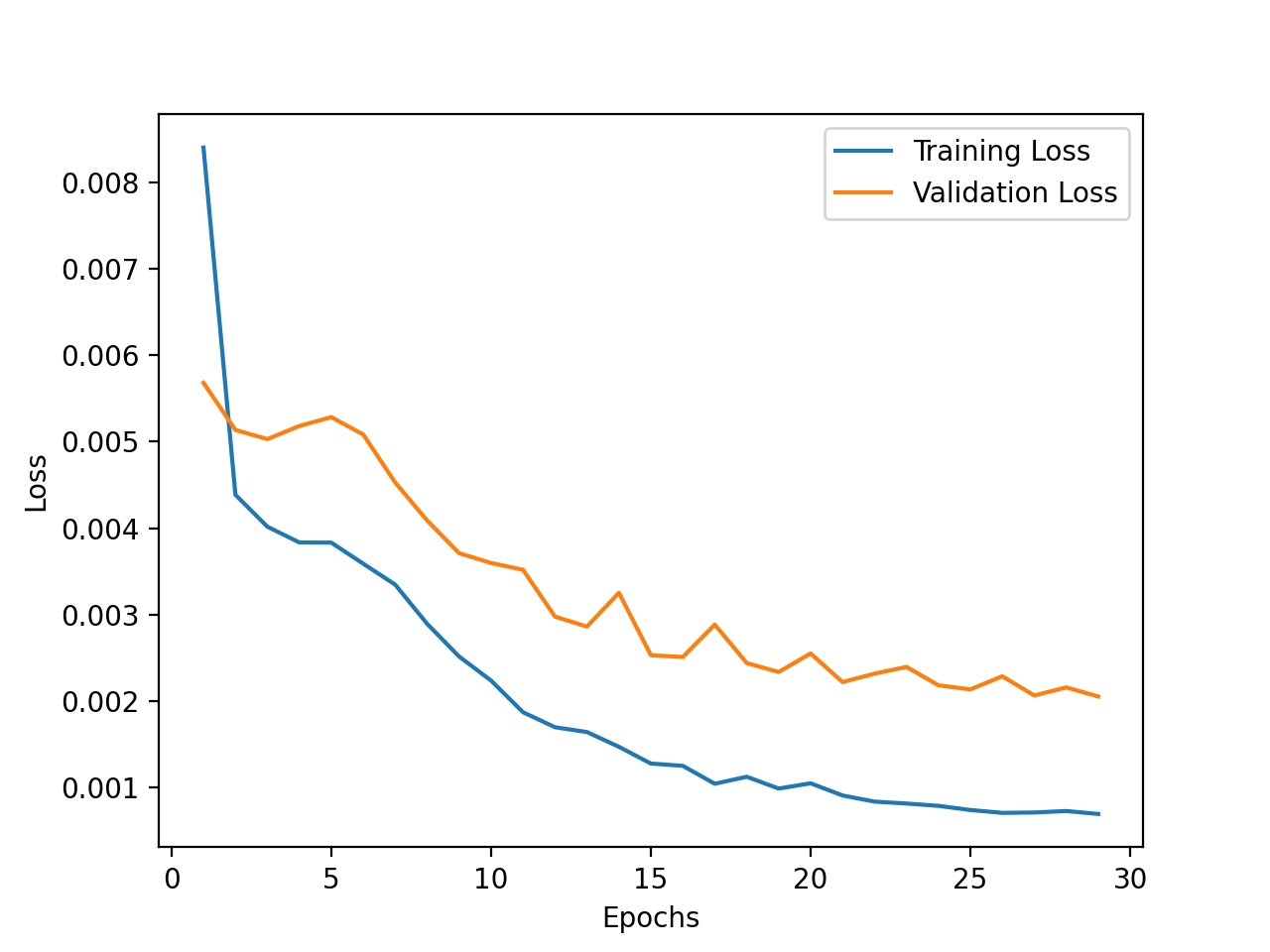

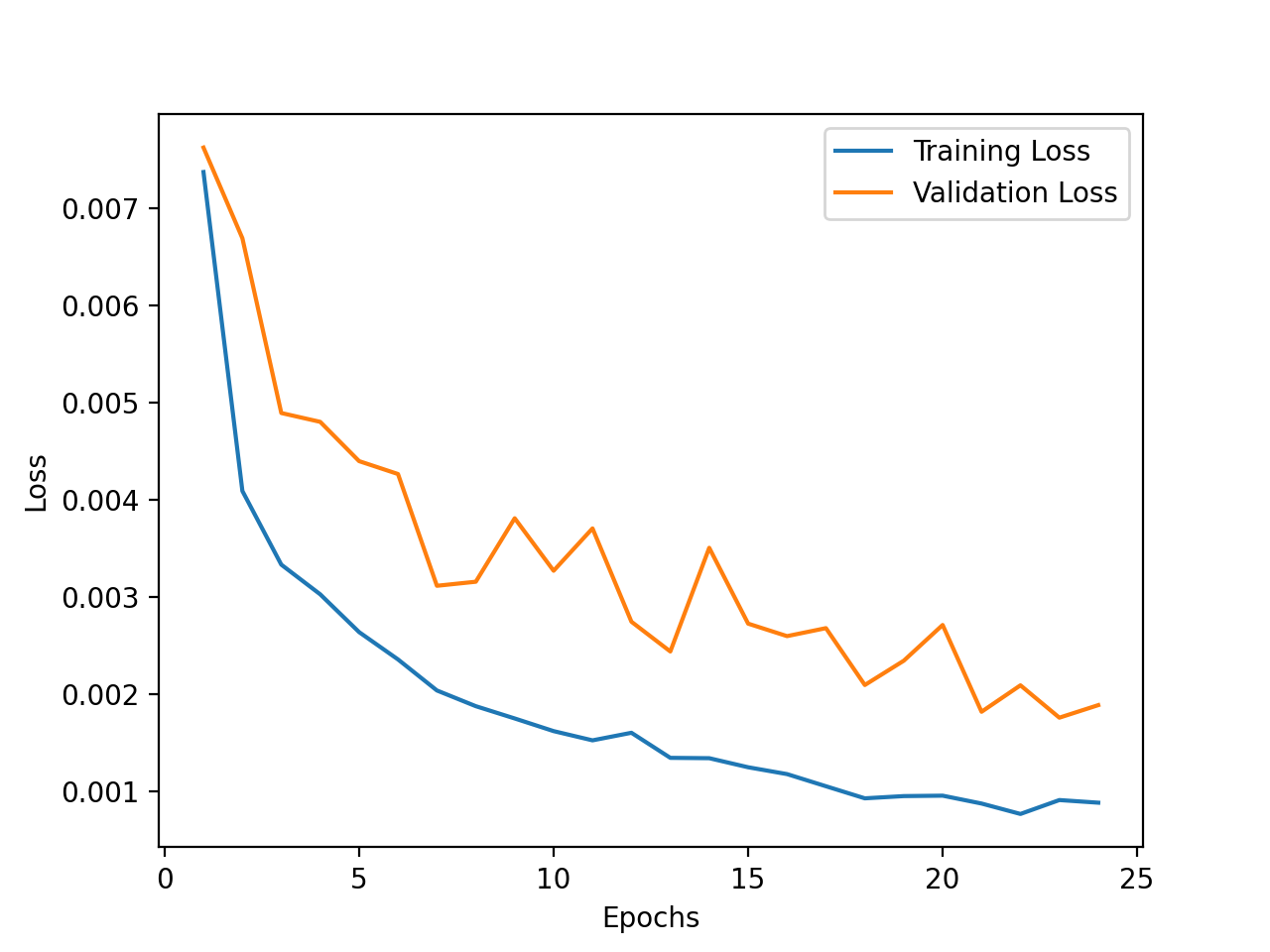

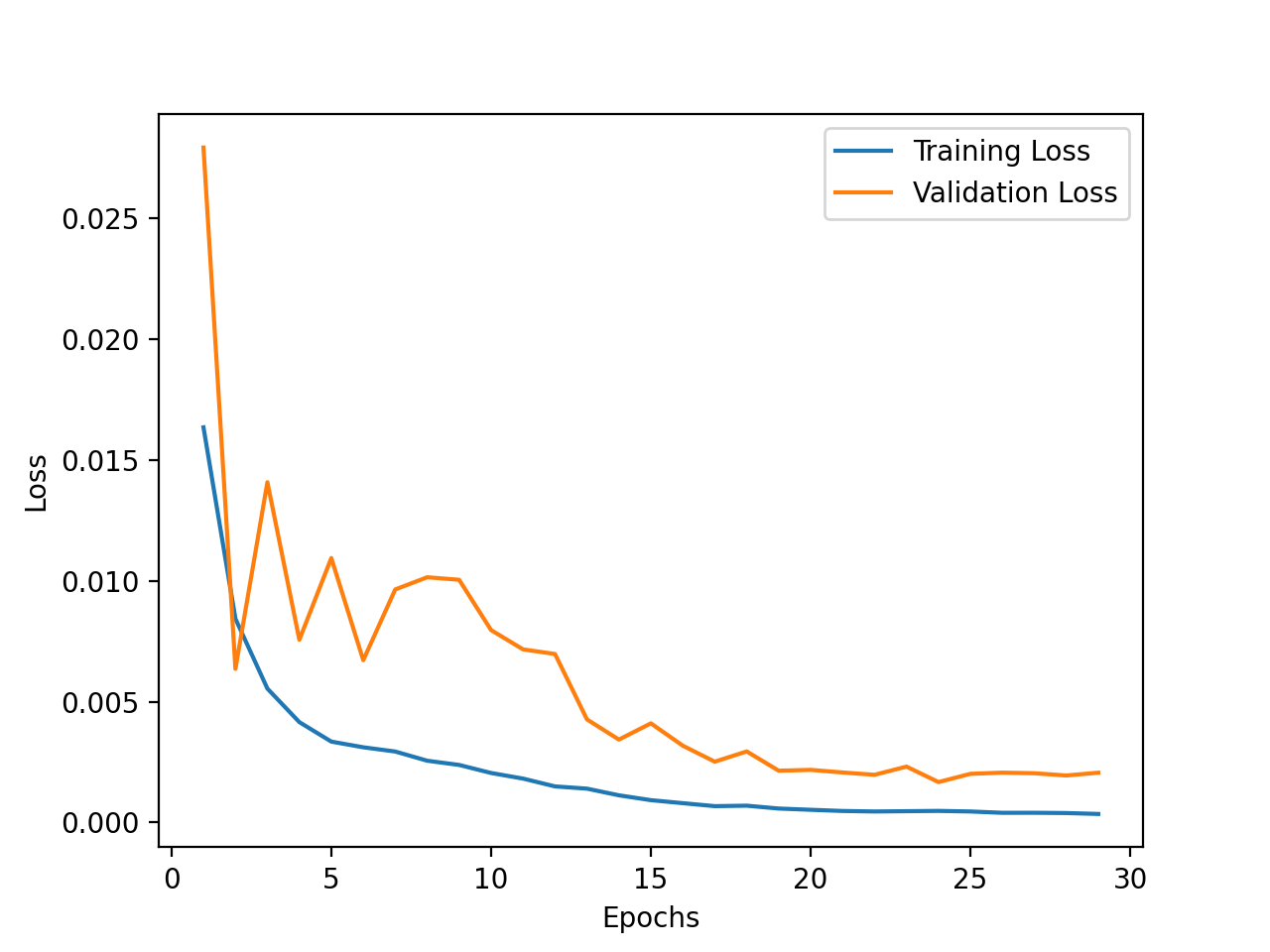

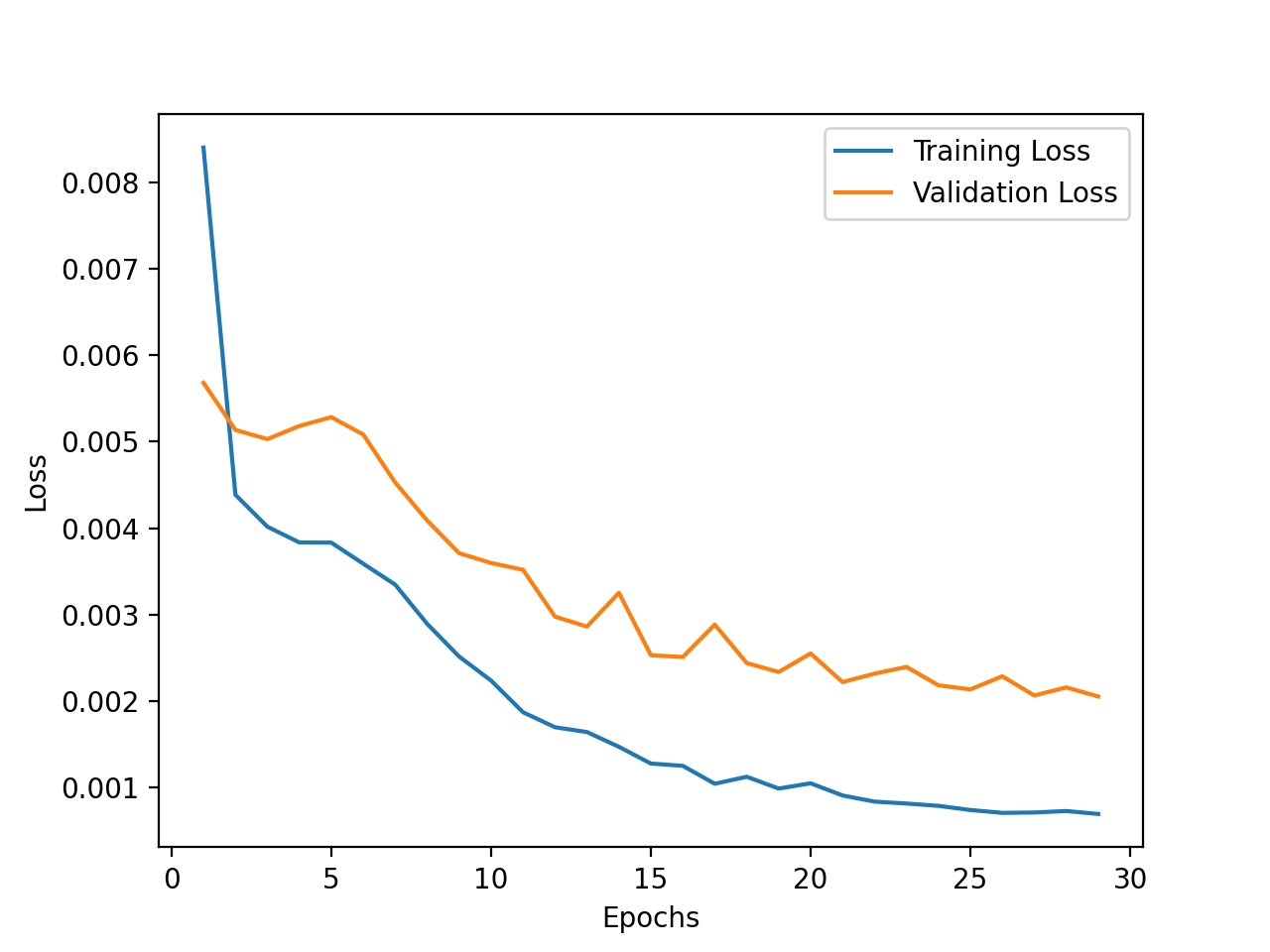

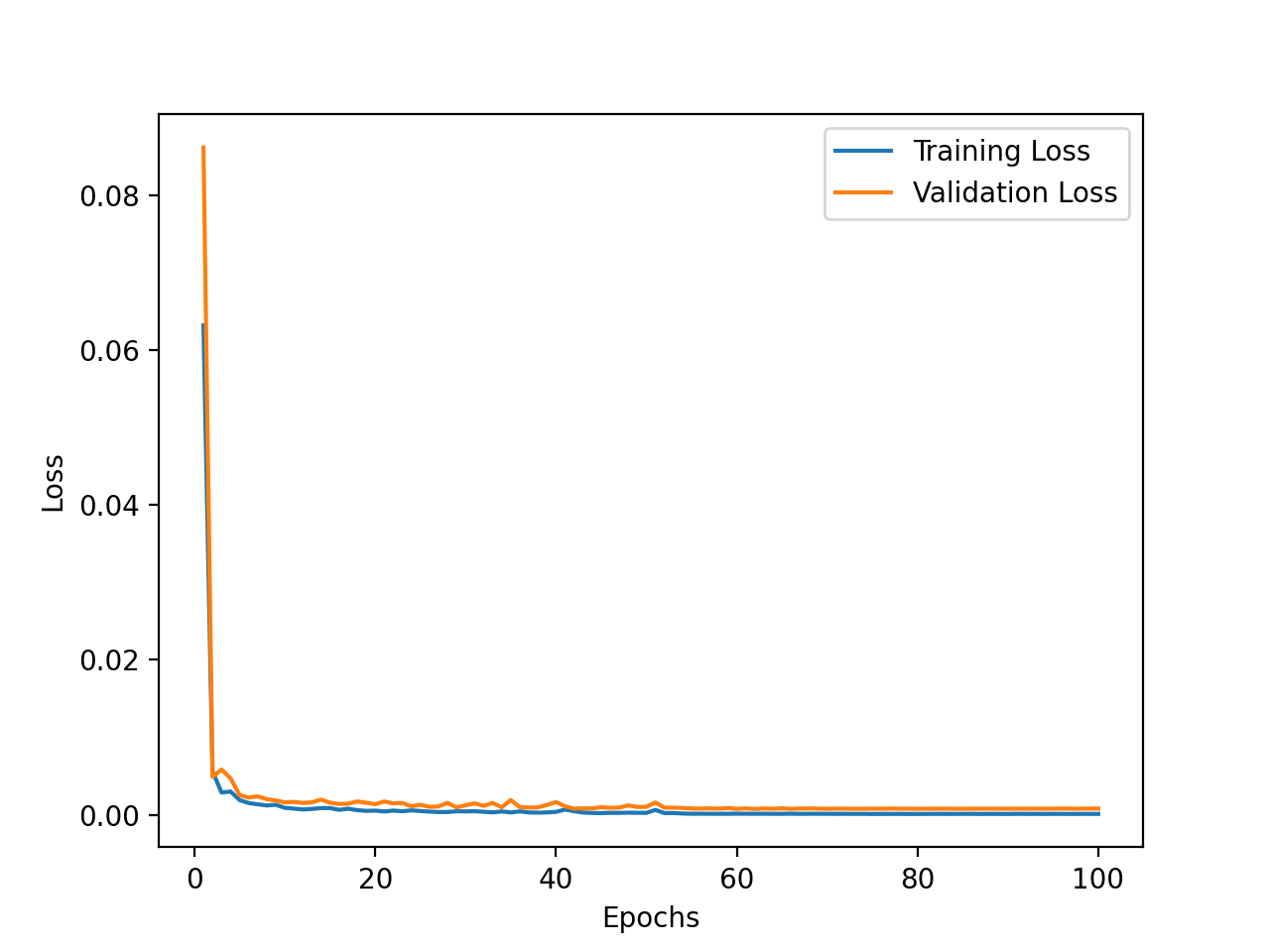

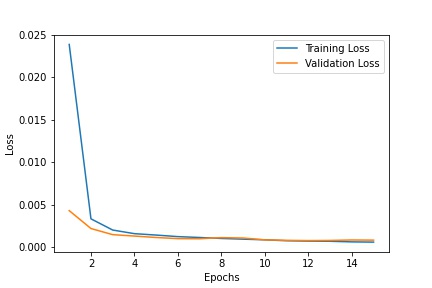

I plotted some training curves for comparison:

The first plot is for the architecture I ended up with, the second uses double the batch size and learning rate, and the third uses a 5x5 kernel.

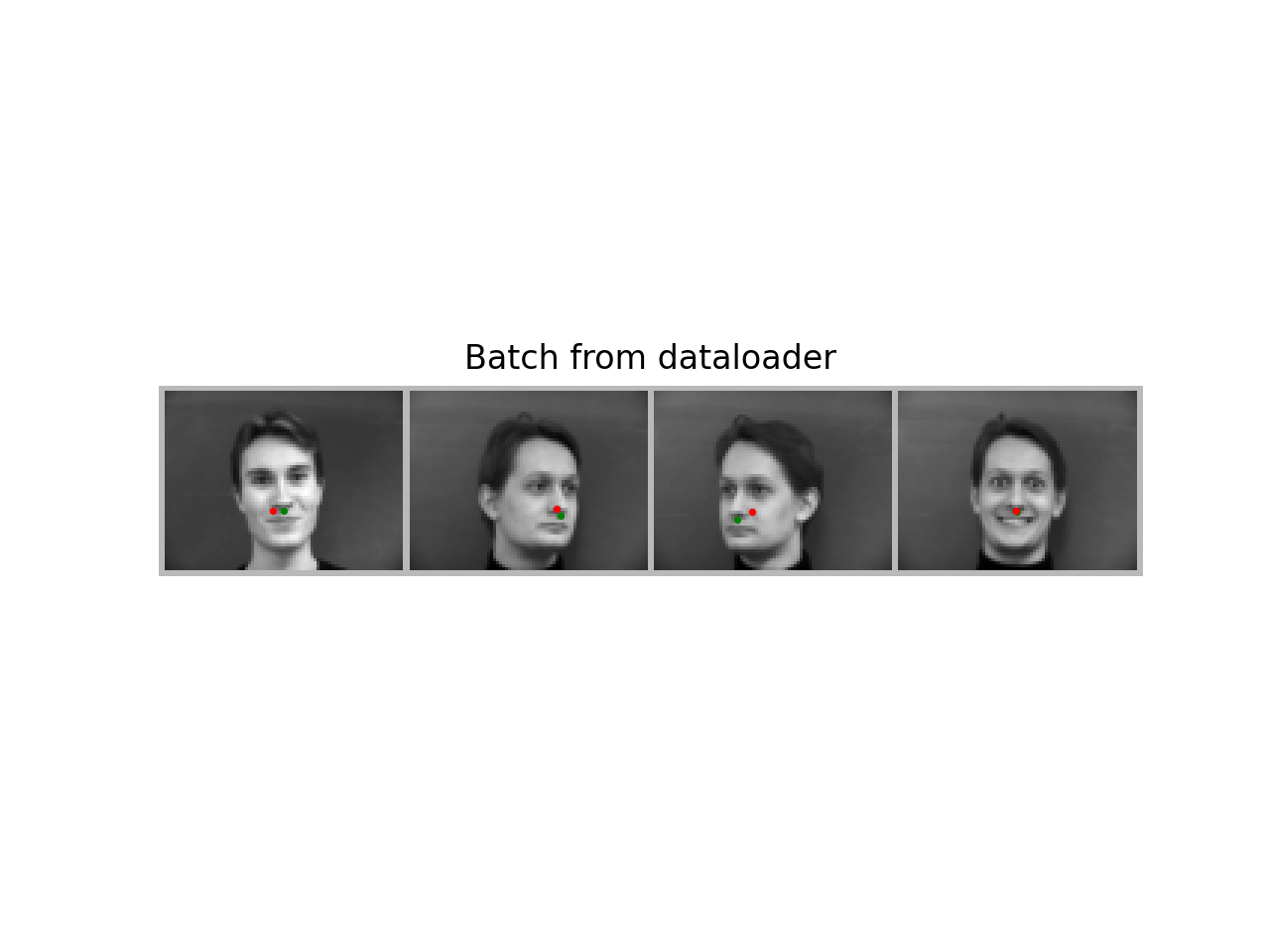

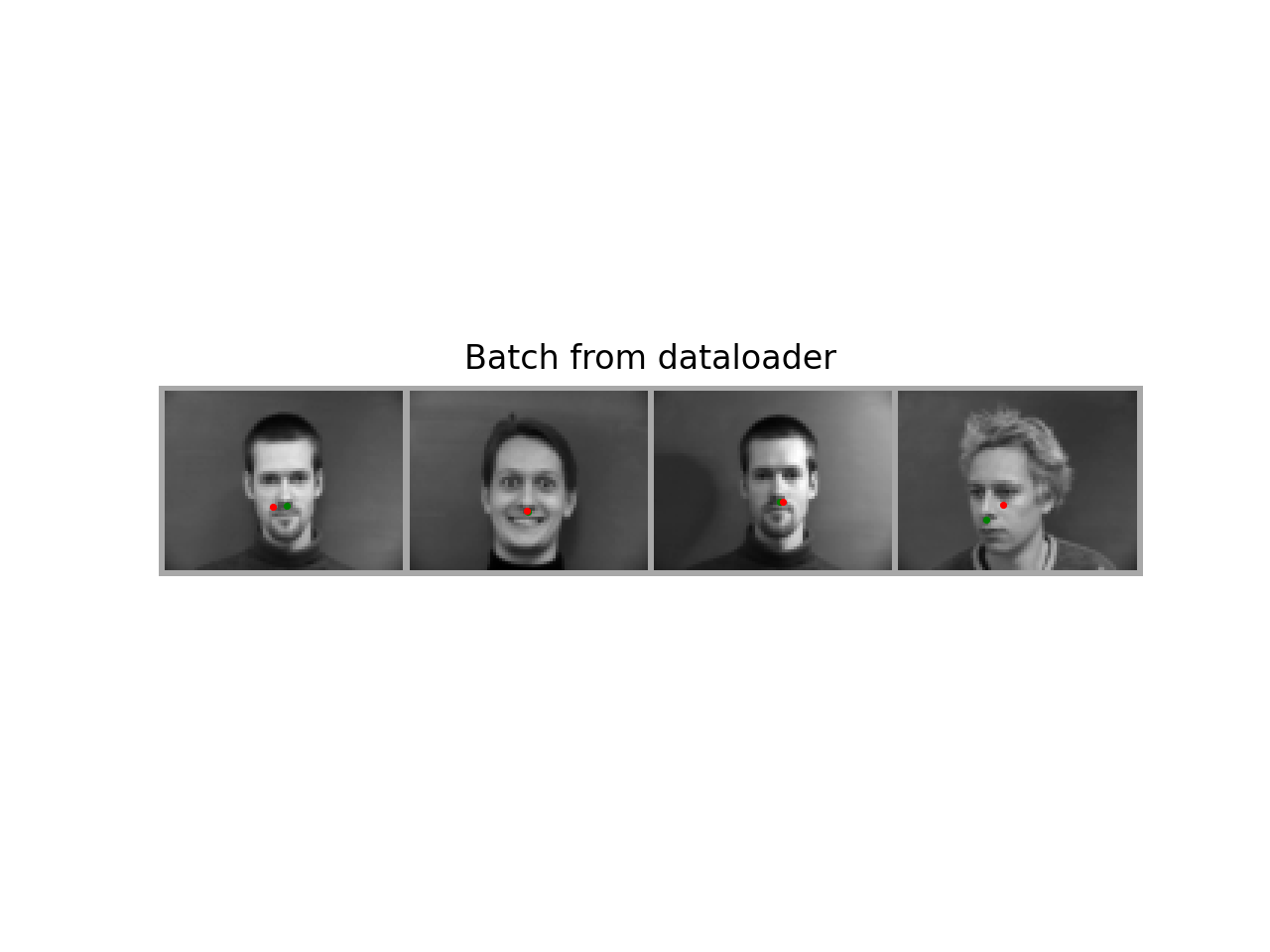

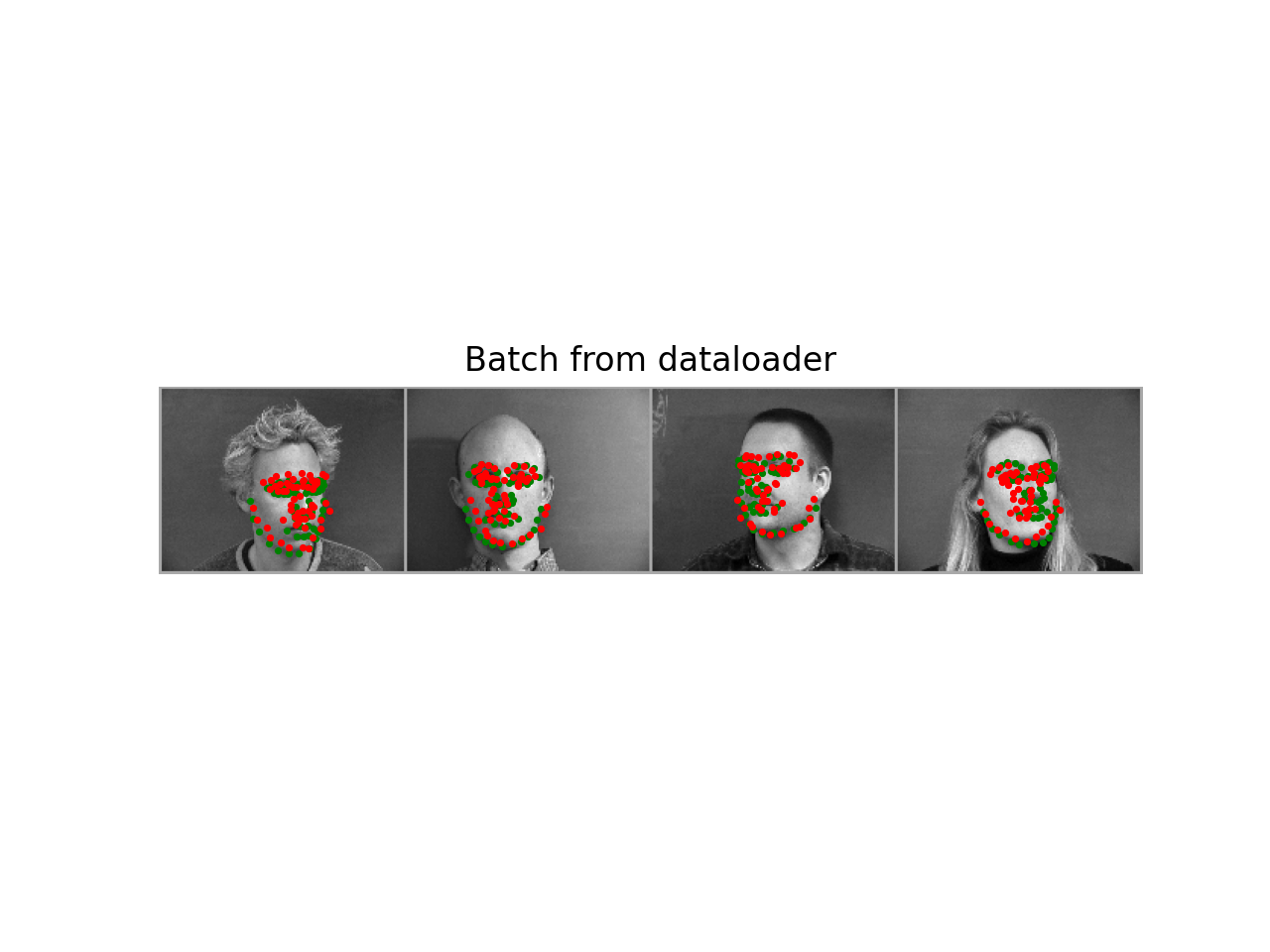

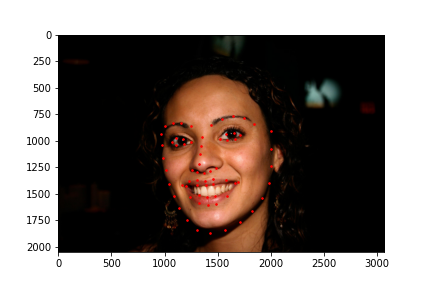

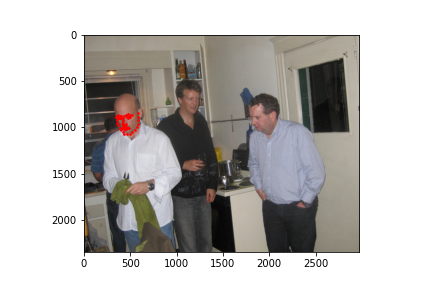

To visualize the results, I plotted the ground-truth keypoints and my predictions together. Red marks my prediction and green marks the ground truth:

Overall the predictions are pretty good, especially for front-facing faces. However, the model sometimes fails on faces turned to the side, for example the third image in the first batch and the last image in the second batch. I think this is due to the lack of training data for side-facing faces, so the model has learned that the nose tip is usually near the center of the image.

The architecture I ended up with uses residual connections between convolutional layers. I implemented a custom ResBlock module that contains two convolutional layers with 5x5 kernels, a BatchNorm layer after each convolution, and a residual connection at the end. I used three such ResBlocks with 32, 64, and 96 filters, respectively, with max-pooling in between. These are followed by a single convolutional layer with 128 filters and a 3x3 kernel, and then by two fully connected layers with a hidden dimension of 320. ReLU is used as the nonlinearity.

For training, I used Adam as the optimizer with a learning rate of 1e-4 and a batch size of 8. I also used a reduce-on-plateau scheduler, which lowers the learning rate when the validation loss has not improved for ten epochs. Finally, I applied the suggested data augmentations: rotation, translation, and color jitter.
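Rotation and translation move the face inside the image, so the keypoint labels must be moved by the same transform. Color jitter only changes pixel intensities and leaves the labels unchanged. Below is a minimal pure-Python sketch of the keypoint side of this. It assumes keypoints are (x, y) pixel coordinates and that rotation is about the image center. The functions `transform_keypoints` and `sample_augmentation` and their ranges are hypothetical. The sign convention for the angle is also an assumption and must match whatever routine rotates the image.

```python
import math
import random


def transform_keypoints(points, angle_deg, shift, center):
    """Apply the same rotation + translation to keypoints that was applied to the image.

    Rotation is about `center`. A positive angle is counter-clockwise on screen,
    in pixel coordinates where y points down (an assumed convention that must
    match the image-rotation routine actually used).
    """
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    cx, cy = center
    tx, ty = shift
    out = []
    for x, y in points:
        dx, dy = x - cx, y - cy
        # Counter-clockwise on screen with y down:
        # (dx, dy) -> (dx*cos + dy*sin, -dx*sin + dy*cos)
        rx = dx * cos_t + dy * sin_t
        ry = -dx * sin_t + dy * cos_t
        out.append((cx + rx + tx, cy + ry + ty))
    return out


def sample_augmentation(max_angle=15.0, max_shift=10):
    """Draw one random rotation angle (degrees) and integer pixel shift.

    The ranges are hypothetical defaults, not the values used in this project.
    """
    angle = random.uniform(-max_angle, max_angle)
    shift = (random.randint(-max_shift, max_shift),
             random.randint(-max_shift, max_shift))
    return angle, shift


# A point to the right of the center ends up directly above it after a 90-degree turn.
print(transform_keypoints([(30.0, 20.0)], 90, (0, 0), center=(20.0, 20.0)))
```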

Below is the training curve for 100 epochs:

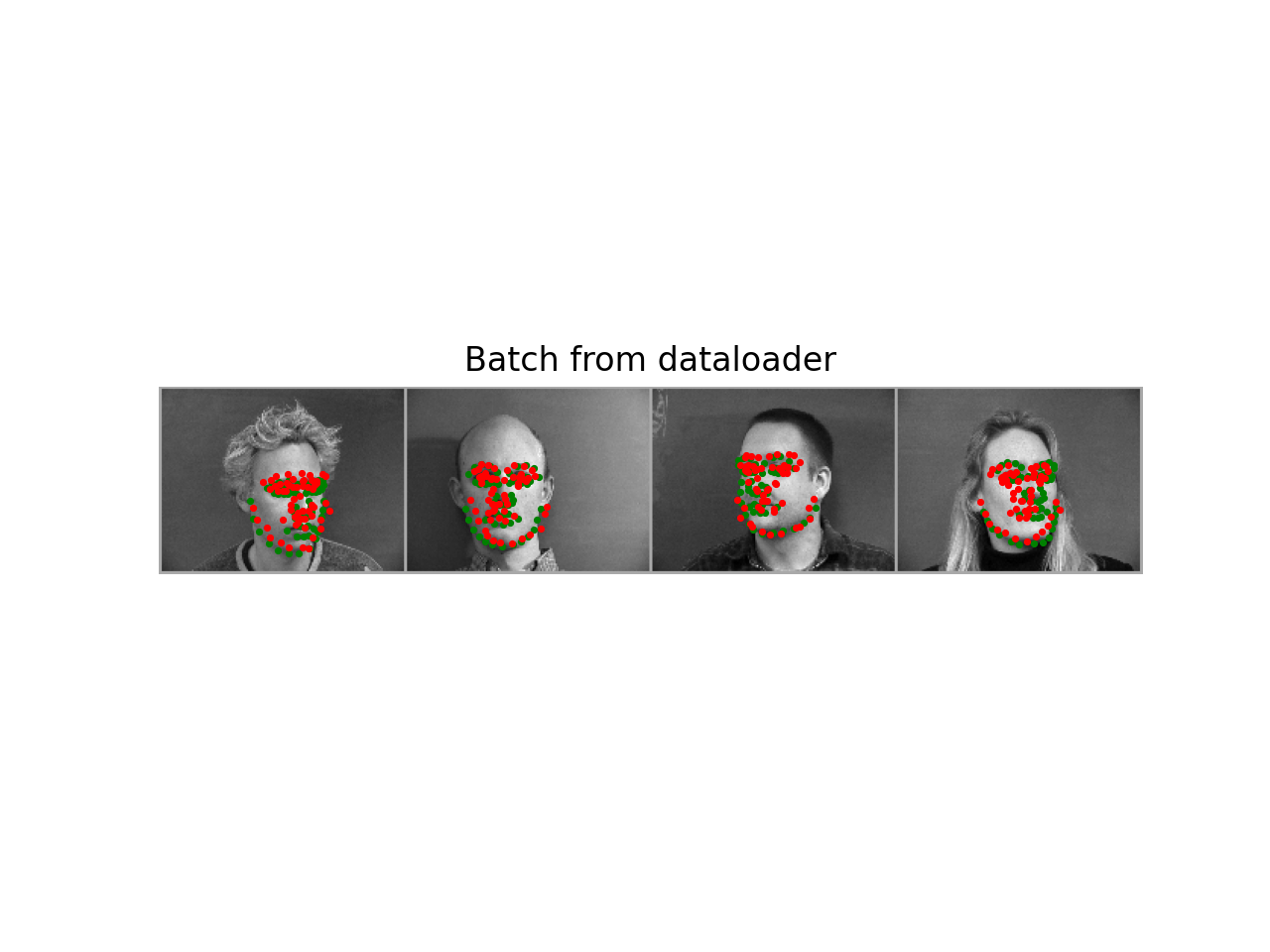

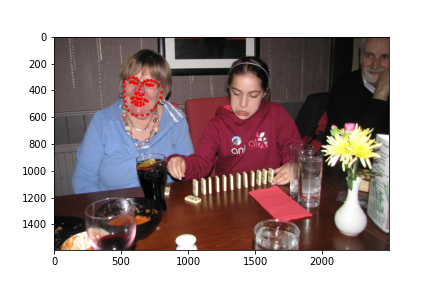

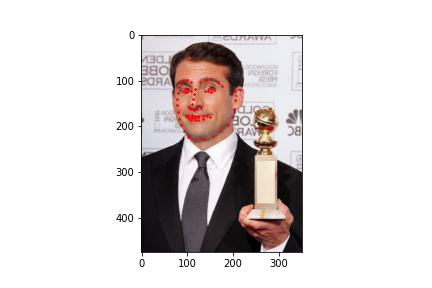

Here are some results on the validation set, with red as the prediction and green as the ground truth:

The first batch has some really good results. In the second batch, however, the model fails to predict the correct keypoints, especially in the first two images. I believe the lighting on the cheeks is a major factor that confuses the model.

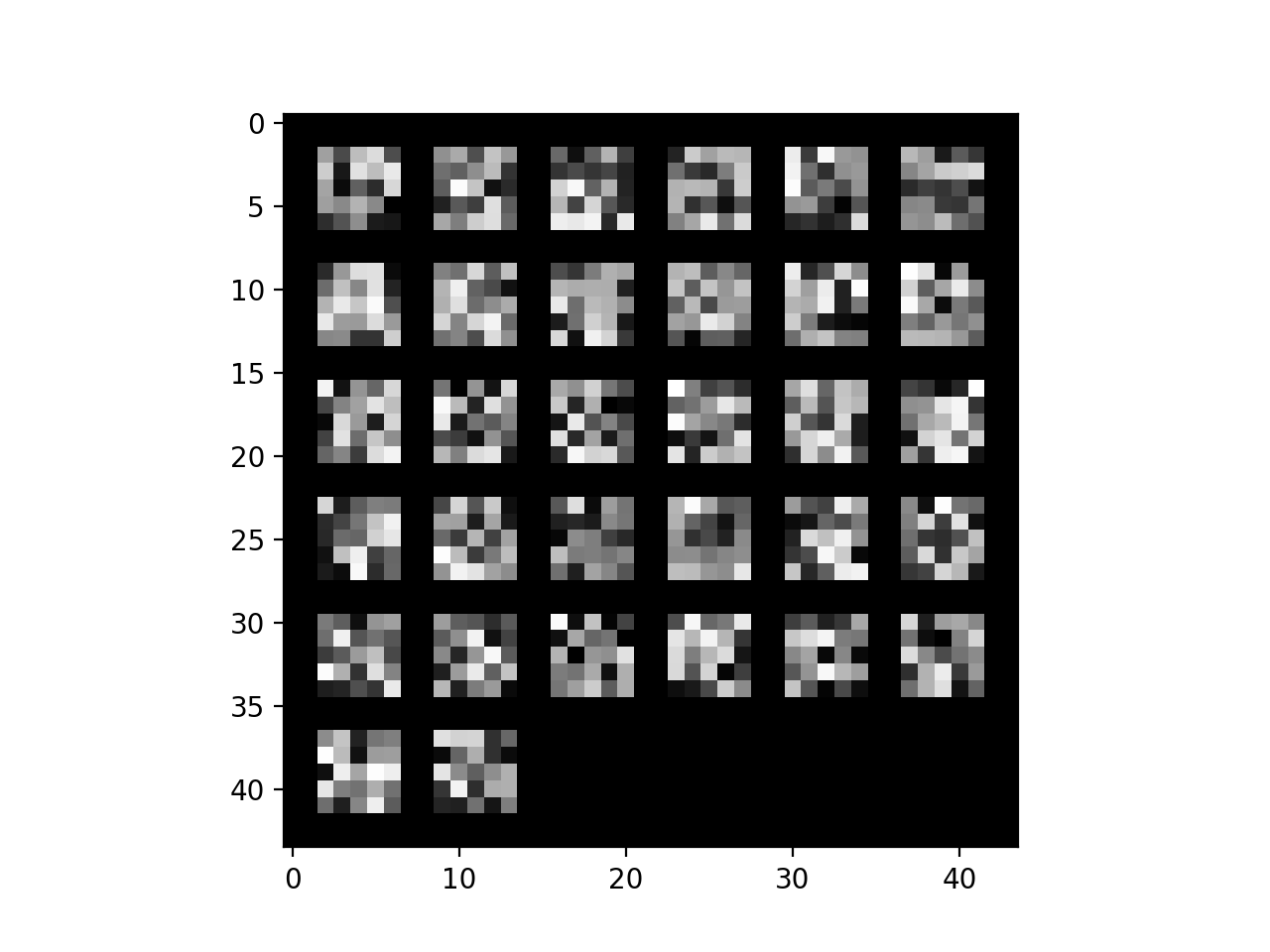

Visualized filters of the first layer (5x5 kernels):

Nothing too interesting going on.

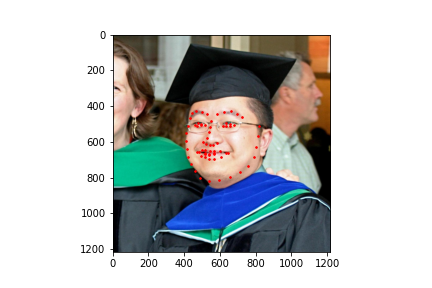
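Learned kernel weights are not in a displayable range, so filter plots like the one above usually rescale each kernel before drawing it. The sketch below assumes per-filter min-max rescaling to [0, 1]. The original plotting code may have normalized differently, and `minmax_normalize` is a hypothetical helper.

```python
def minmax_normalize(kernel):
    """Rescale a 2-D kernel (list of rows) to [0, 1] for display as a grayscale image.

    A constant kernel is mapped to all zeros to avoid dividing by zero.
    """
    flat = [w for row in kernel for w in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0:
        return [[0.0 for _ in row] for row in kernel]
    return [[(w - lo) / span for w in row] for row in kernel]


# The most negative weight becomes black (0.0) and the most positive becomes white (1.0).
print(minmax_normalize([[-0.2, 0.0], [0.2, 0.6]]))
```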

I used the pretrained ResNet-18 model as a starting point and fine-tuned it on our dataset. I changed the first convolutional layer to take grayscale images and changed the output dimension of the last fully connected layer. I used Adam with a learning rate of 1e-4 and a batch size of 8.
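The write-up does not say how the new grayscale first layer was initialized. One common option, assumed here and not necessarily what was done, is to sum the pretrained RGB kernels over the input-channel axis. Feeding a grayscale image as three identical channels gives exactly the same response as a single-channel kernel equal to that sum, so the pretrained features carry over. The sketch below works on nested lists shaped `[out_channels][in_channels][k][k]`. `collapse_rgb_kernels` and `apply_at_pixel` are hypothetical helpers.

```python
def collapse_rgb_kernels(weights):
    """Sum conv weights over the input-channel axis.

    `weights` is nested lists shaped [out][3][k][k]; the result is [out][1][k][k].
    """
    collapsed = []
    for filt in weights:  # one output filter: [in_channels][k][k]
        k_rows, k_cols = len(filt[0]), len(filt[0][0])
        summed = [[sum(channel[r][c] for channel in filt) for c in range(k_cols)]
                  for r in range(k_rows)]
        collapsed.append([summed])
    return collapsed


def apply_at_pixel(filt, patches):
    """Response of one filter ([in][k][k]) to per-channel patches ([in][k][k])."""
    return sum(w * p
               for w_ch, p_ch in zip(filt, patches)
               for w_row, p_row in zip(w_ch, p_ch)
               for w, p in zip(w_row, p_row))


# Check the equivalence on a tiny 2x2 example: gray input replicated to 3 channels.
rgb_filter = [[[1, 2], [3, 4]], [[0, 1], [1, 0]], [[-1, 0], [2, 2]]]
gray_patch = [[5, 6], [7, 8]]
rgb_response = apply_at_pixel(rgb_filter, [gray_patch] * 3)
gray_response = apply_at_pixel(collapse_rgb_kernels([rgb_filter])[0], [gray_patch])
print(rgb_response, gray_response)  # 108 108
```

In PyTorch, the same reduction would be `weight.sum(dim=1, keepdim=True)` on the `(out, in, kH, kW)` weight tensor of the first convolution. The replacement final layer maps ResNet-18's 512 pooled features to two outputs (x and y) per keypoint.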

One thing to note is that the dataset's bounding boxes don't enclose all the facial keypoints, so some keypoints lie outside the cropped image. I initially thought this would put the model at a disadvantage, since it doesn't get to see the full face. So, for training, I discarded the bounding boxes and cropped using the minimum and maximum keypoint coordinates instead. However, the test set doesn't include keypoints, so I had to use the bounding boxes there. This created a mismatch between the training and test data distributions. I tried expanding the test-set bounding boxes, but that improved the result only slightly. I ended up using the original bounding boxes for training as well, and to my surprise the model was able to locate the keypoints outside the image. It learned to infer the face's shape from the visible points, which is amazing. With no distribution shift between training and test data, this gave my best result on Kaggle. My Kaggle username is Boyuan Ma and my best MAE score is 7.59.
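The cropping strategies discussed above (tight keypoint box, dataset bounding box, expanded bounding box) can be sketched as follows. The sketch assumes boxes are `(left, top, width, height)` in pixels and keypoints are `(x, y)` pixel coordinates. The box format and the helper names are assumptions. Keypoints outside the crop simply get normalized coordinates below 0 or above 1, which is why a regression model can still be trained to predict them.

```python
def keypoint_box(points):
    """Tight box (left, top, width, height) from the min/max keypoint coordinates."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys)


def expand_box(box, scale):
    """Grow a (left, top, width, height) box by `scale` about its center."""
    left, top, w, h = box
    cx, cy = left + w / 2, top + h / 2
    new_w, new_h = w * scale, h * scale
    return cx - new_w / 2, cy - new_h / 2, new_w, new_h


def to_crop_coords(points, box):
    """Express keypoints relative to a crop, as fractions of its width and height.

    Values outside [0, 1] mean the keypoint lies outside the cropped image.
    """
    left, top, w, h = box
    return [((x - left) / w, (y - top) / h) for x, y in points]


dataset_box = (10, 10, 20, 20)  # hypothetical box that misses a keypoint
print(to_crop_coords([(35, 20)], dataset_box))                   # [(1.25, 0.5)]
print(to_crop_coords([(35, 20)], expand_box(dataset_box, 1.5)))  # [(1.0, 0.5)]
```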

Below is the training curve:

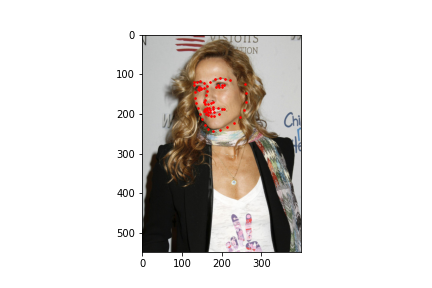

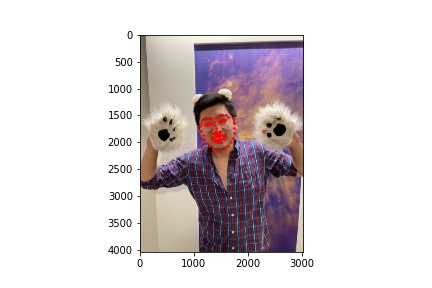

Keypoint predictions on test-set images:

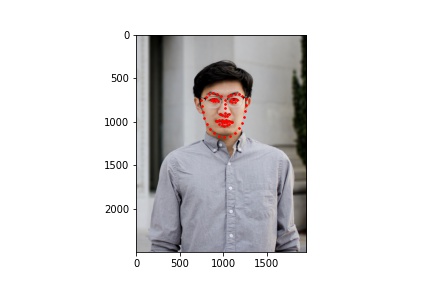

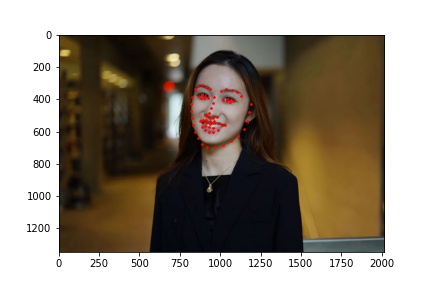

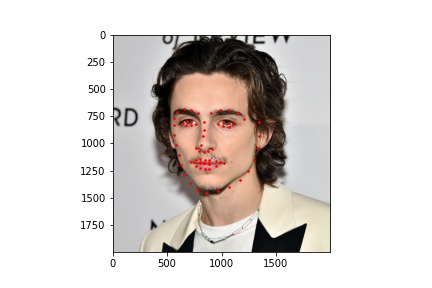

Keypoint predictions on my own images:

The results are pretty good! However, the prediction for Timothee Chalamet's cheek is a little off: the model tends to smooth out his sharp cheekbone. I suspect the model relies on the overall face shape more than on the local features of his cheek.