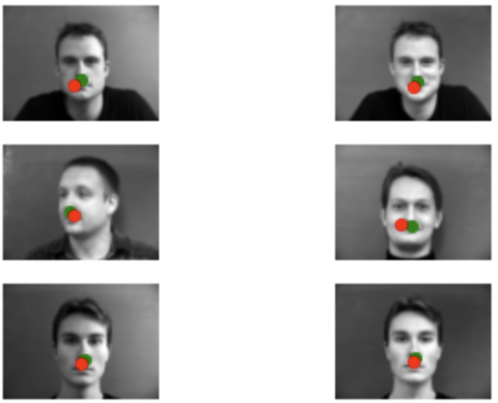

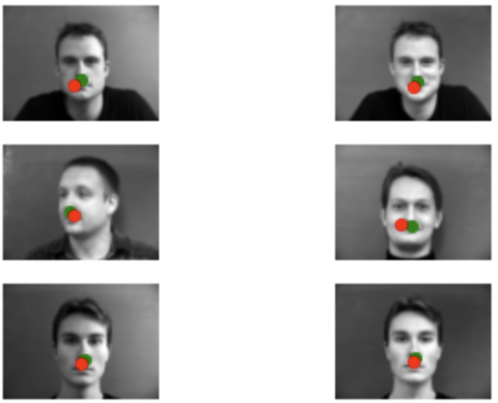

Example Predictions

The goal of this project is to use neural networks to automatically detect facial keypoints.

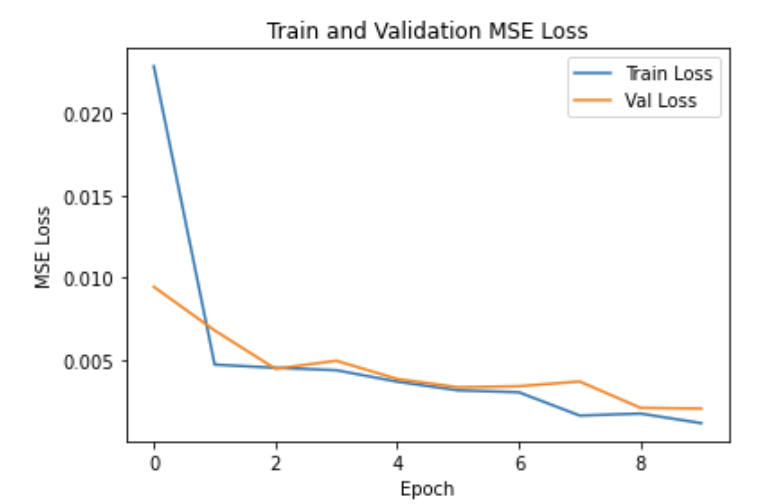

The first thing I did was create a custom PyTorch Dataset class to load my images and labels. I then created a basic convolutional neural network with 3 convolutional layers followed by 2 linear layers, with ReLU and max pooling between the layers. For my loss function I chose MSE, and for my optimizer I used Adam. I trained the network for 10 epochs with a batch size of 4 and a learning rate of 1e-3.
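A minimal sketch of a setup like the one described above, not the exact code used for this project. The class names `NoseDataset` and `NoseNet`, the channel widths (12, 24, 32), the hidden size of 128, and the 60x80 grayscale input are all assumptions made for illustration; only the layer counts, ReLU, max pooling, MSE loss, Adam, batch size, learning rate, and epoch count come from the description.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, Dataset


class NoseDataset(Dataset):
    """Hypothetical dataset: grayscale images plus one (x, y) nose point each."""

    def __init__(self, images, keypoints):
        self.images = images        # float tensor, shape (N, 1, H, W)
        self.keypoints = keypoints  # float tensor, shape (N, 2)

    def __len__(self):
        return len(self.images)

    def __getitem__(self, idx):
        return self.images[idx], self.keypoints[idx]


class NoseNet(nn.Module):
    """Three conv layers with ReLU and max pooling, then two linear layers."""

    def __init__(self, kernel_size=3, input_size=(60, 80)):
        super().__init__()
        pad = kernel_size // 2  # odd kernels keep the spatial size before pooling
        self.features = nn.Sequential(
            nn.Conv2d(1, 12, kernel_size, padding=pad), nn.ReLU(), nn.MaxPool2d(2),
            nn.Conv2d(12, 24, kernel_size, padding=pad), nn.ReLU(), nn.MaxPool2d(2),
            nn.Conv2d(24, 32, kernel_size, padding=pad), nn.ReLU(), nn.MaxPool2d(2),
        )
        # Run a dummy image through the conv stack to size the first linear layer.
        with torch.no_grad():
            n_flat = self.features(torch.zeros(1, 1, *input_size)).numel()
        self.head = nn.Sequential(
            nn.Flatten(), nn.Linear(n_flat, 128), nn.ReLU(), nn.Linear(128, 2),
        )

    def forward(self, x):
        return self.head(self.features(x))


def train(model, dataset, epochs=10, lr=1e-3, batch_size=4):
    """Train with MSE loss and Adam; return the mean training loss per epoch."""
    loader = DataLoader(dataset, batch_size=batch_size, shuffle=True)
    criterion = nn.MSELoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    history = []
    for _ in range(epochs):
        model.train()
        total = 0.0
        for images, targets in loader:
            optimizer.zero_grad()
            loss = criterion(model(images), targets)
            loss.backward()
            optimizer.step()
            total += loss.item() * len(images)
        history.append(total / len(dataset))
    return history
```

Calling `train(NoseNet(), dataset)` with the defaults matches the hyperparameters listed above. A validation loss curve would need a second, held-out dataset evaluated under `torch.no_grad()` after each epoch.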

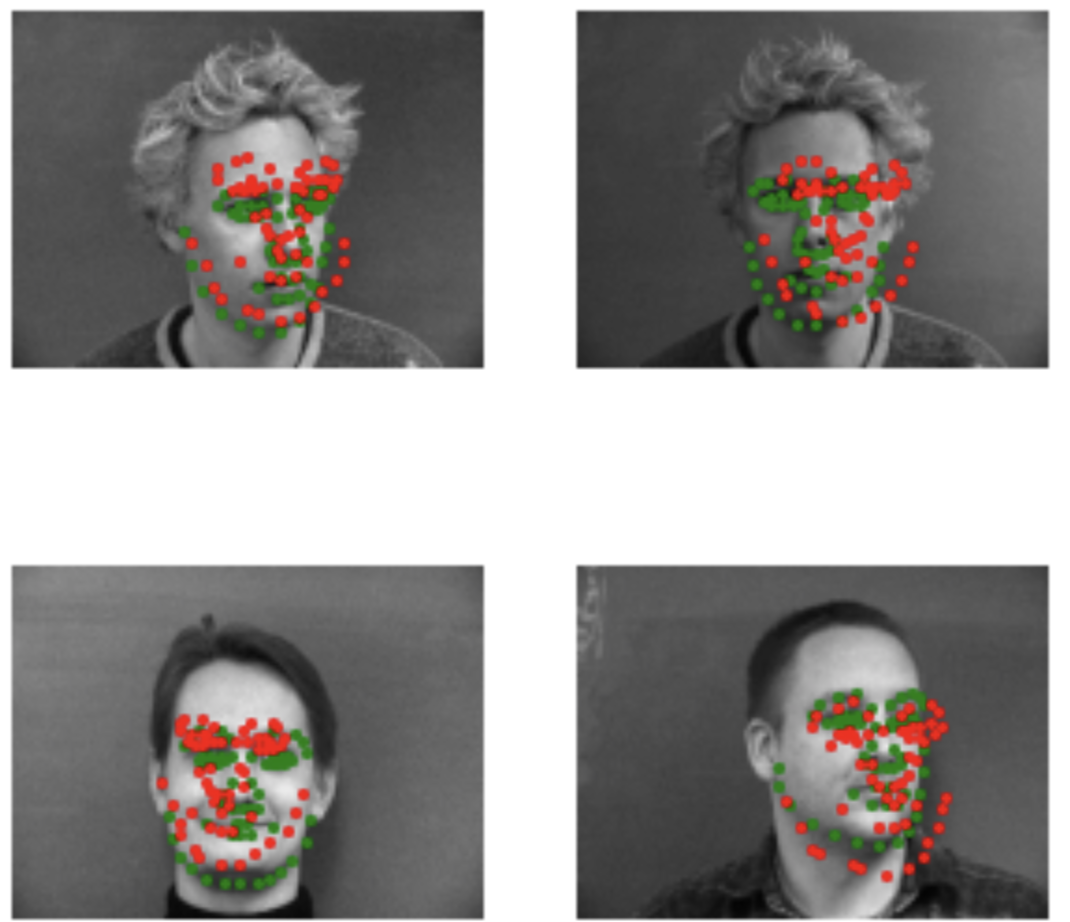

Example Predictions

We see that for some of the side profile images, the network doesn't give an accurate prediction.

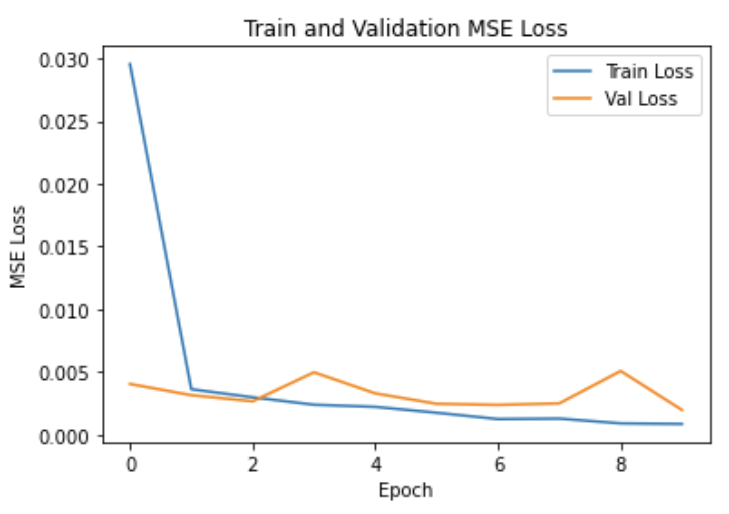

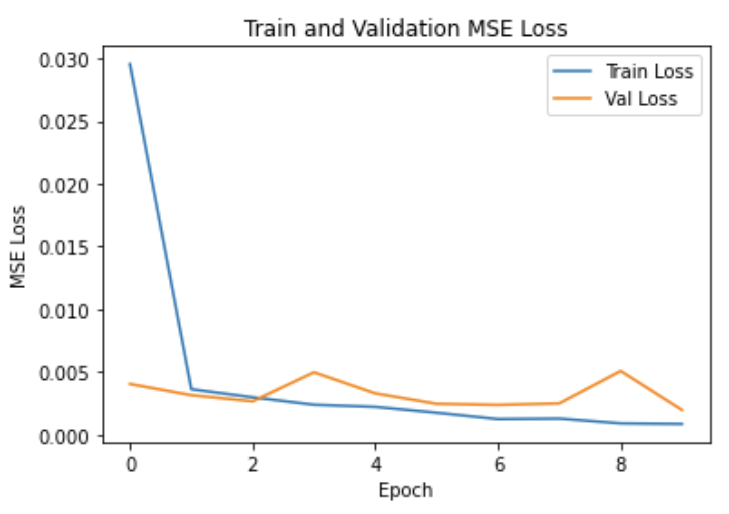

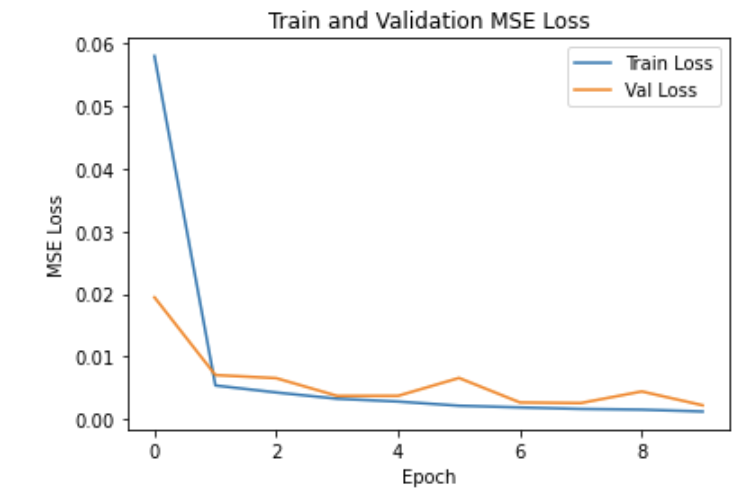

Train/Validation Loss

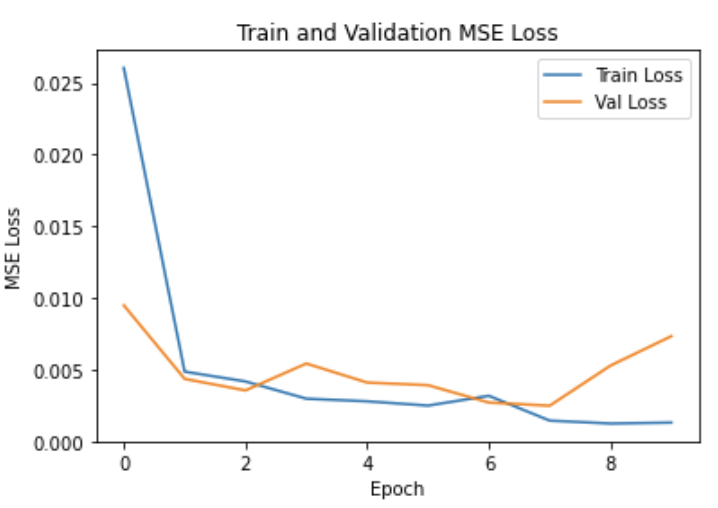

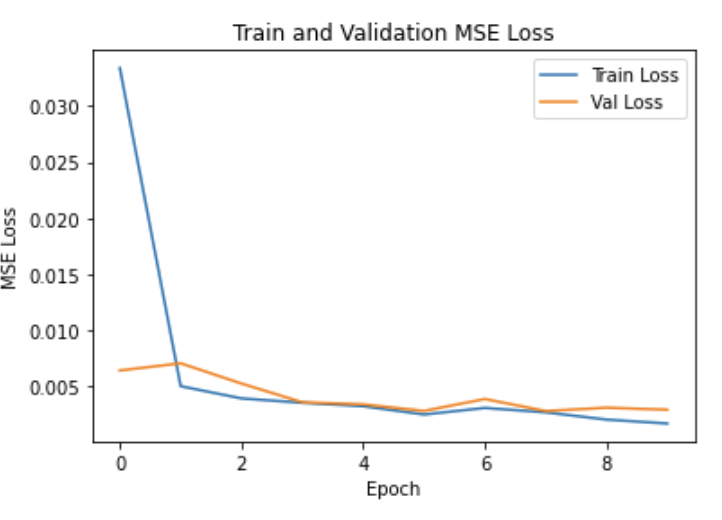

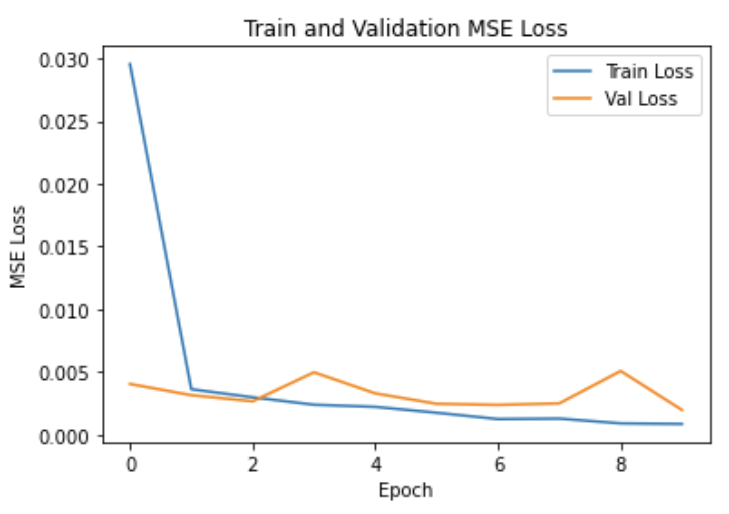

For this subpart, I looked at how changing the convolutional kernel size (3x3, 5x5, and 7x7) affects model performance, and then at how changing the learning rate (5e-4, 1e-3, and 5e-3) affects it. Overall, performance barely changed with kernel size, while the learning rate made a slight difference.
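One way to organize such a comparison is a one-factor-at-a-time sweep, sketched below. The callback `train_and_validate` is hypothetical and stands in for whatever function trains a model with the given settings and returns its loss curves. The baseline kernel size of 3 is an assumption; the baseline learning rate of 1e-3 is the one used earlier.

```python
# Baseline configuration; kernel_size=3 is an assumed default, not from the writeup.
BASELINE = {"kernel_size": 3, "lr": 1e-3}

# Values tried for each hyperparameter, as listed in the text.
OPTIONS = {
    "kernel_size": [3, 5, 7],
    "lr": [5e-4, 1e-3, 5e-3],
}


def one_at_a_time_sweep(train_and_validate, baseline, options):
    """Vary one hyperparameter at a time while holding the others at baseline.

    `train_and_validate` is a hypothetical callable that accepts the
    configuration as keyword arguments and returns whatever should be
    compared, for example (train_losses, val_losses).
    """
    results = {}
    for name, values in options.items():
        for value in values:
            config = {**baseline, name: value}
            results[(name, value)] = train_and_validate(**config)
    return results
```

The returned dictionary is keyed by `(name, value)`, so the curves for `("kernel_size", 5)` or `("lr", 5e-3)` can be plotted side by side.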

Train/Validation Loss for Different Kernel Sizes

Train/Validation Loss for Different Learning Rates

This part was very similar to the previous part, except that I now predict all the keypoints instead of just the nose. For this part I resized my images to 240x180.

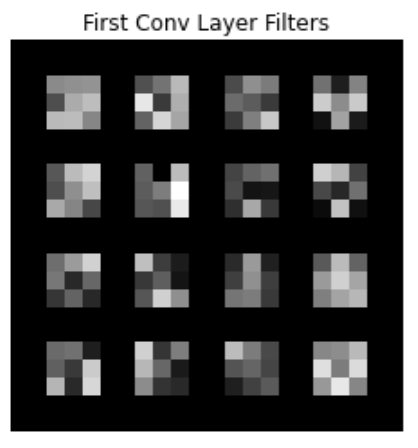

For my CNN, I used 6 convolutional layers, more than in the nose-detection network, and kept my two fully connected layers, still with max pooling and ReLU between the layers. To prevent overfitting, I used random rotations between -15 and 15 degrees for data augmentation. For training, I used a batch size of 4, a learning rate of 1e-3, MSE loss, and Adam as my optimizer.
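Rotation augmentation only helps if the keypoint labels are rotated together with the image. The sketch below shows the coordinate transform under a few assumptions: keypoints are stored as pixel coordinates, the rotation is about the point (width / 2, height / 2), and positive angles turn the image counter-clockwise as displayed. The function names are hypothetical, and the image itself would have to be rotated by the same angle about the same center.

```python
import math
import random


def rotate_keypoints(points, angle_deg, width, height):
    """Rotate (x, y) pixel coordinates about the image center.

    Positive angles are counter-clockwise as the image is displayed. Because
    the y axis of an image points down, the signs on the sine terms are
    flipped relative to the usual math convention.
    """
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    cx, cy = width / 2.0, height / 2.0
    rotated = []
    for x, y in points:
        dx, dy = x - cx, y - cy
        rotated.append((cx + cos_t * dx + sin_t * dy,
                        cy - sin_t * dx + cos_t * dy))
    return rotated


def sample_angle(max_deg=15.0, rng=random):
    """Draw a rotation angle uniformly from [-max_deg, max_deg] degrees."""
    return rng.uniform(-max_deg, max_deg)
```

For example, on a 240-wide, 180-tall image, a point 10 pixels to the right of the center moves to 10 pixels above the center after a 90 degree rotation. Points near the border can leave the frame after rotation, which is one reason to keep the angle range small.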

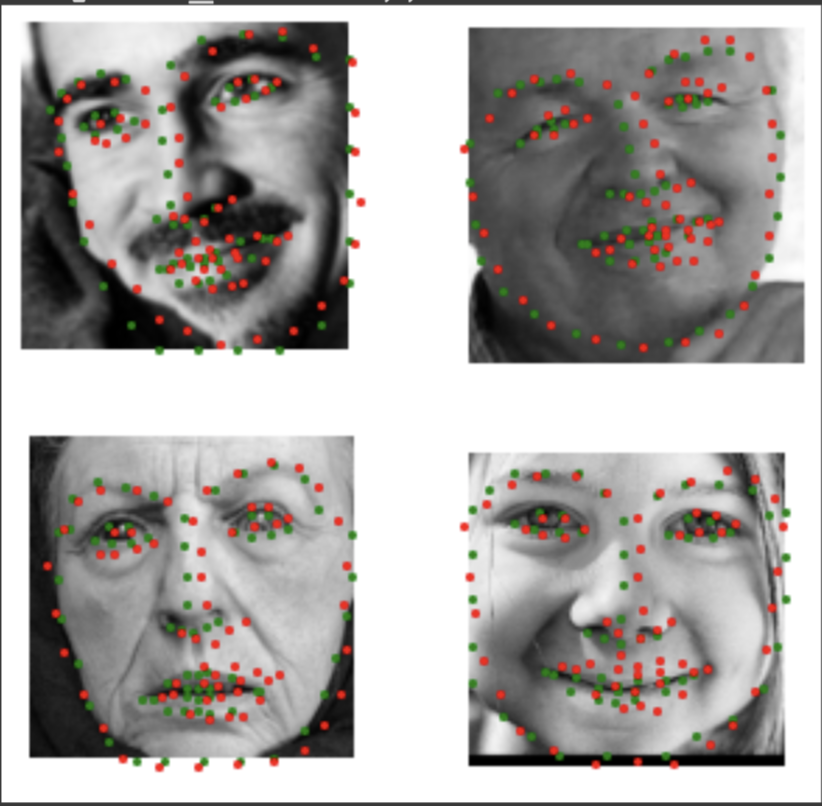

Sample Predictions

Again, we can see that for some of the side profile images, the predicted points aren't as accurate.

Here are visualizations of the learned filters in my network:

Learned Filters

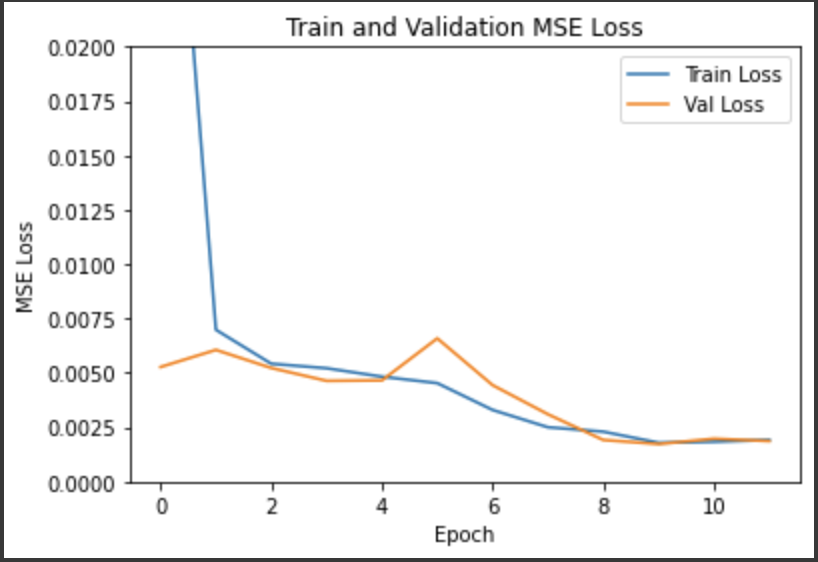

For this part, I had to create a new Dataset class to load the new dataset. I then chose ResNet-18 for my model. I used random rotations between -20 and +20 degrees for data augmentation, a batch size of 64, a learning rate of 3e-3, and Adam as my optimizer. I trained for 12 epochs.

Train/Validation Loss

Sample Predictions

After uploading to Kaggle, I achieved a score of 14.65348.

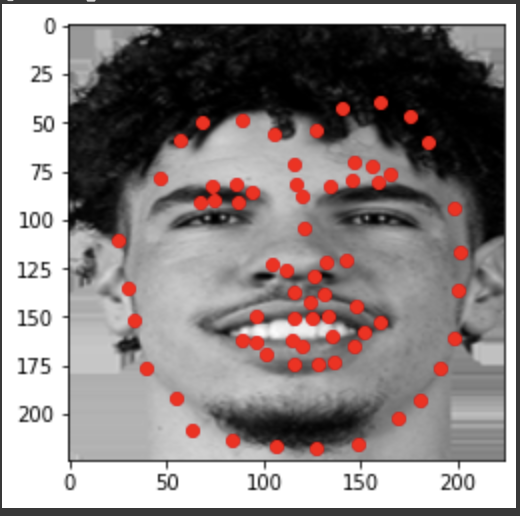

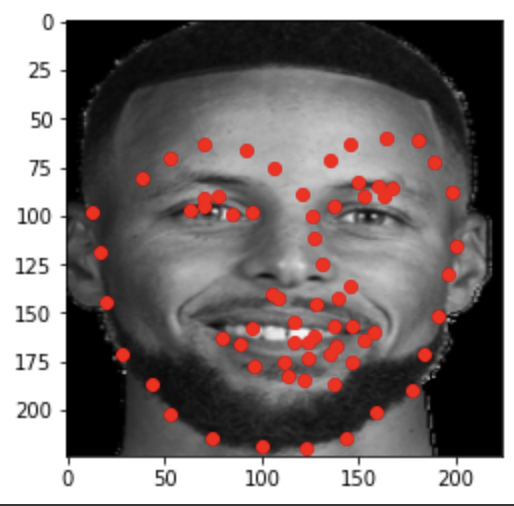

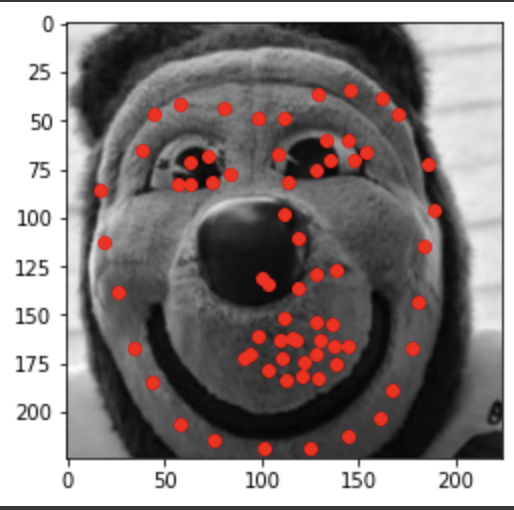

Predicted Keypoints For Other Faces

The model did pretty well with the two humans, but struggled a bit with Oski.

It was really cool to use neural networks to automatically detect keypoints. I learned a lot about training neural networks and about data augmentation techniques.